Physics-Based TOF Imaging Simulation for Space Targets Based on Improved Path Tracing

Abstract

1. Introduction

- (1) An improved path tracing algorithm is developed for the TOF camera: a cosine component is introduced to characterize the camera's modulated light.

- (2) A background light suppression model is introduced, and physics-based simulation is realized by using a BRDF model fitted to measured near-infrared data for space materials.

- (3) A ground test scene is built, and the correctness of the proposed TOF camera imaging simulation method is verified by quantitative comparison of the simulated and measured images.
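
To make contributions (1) and (2) concrete, the following minimal sketch shows the textbook four-phase demodulation used by amplitude-modulated continuous-wave TOF cameras. It is not the authors' implementation: the 20 MHz modulation frequency, the 1.5 m distance, the signal levels, and all function names are illustrative assumptions. The sketch models the returned light as a constant ambient term plus a cosine term, and shows that the constant term cancels in the sample differences, which is the basic idea behind background light suppression.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def correlation_samples(distance_m, amplitude, ambient, f_mod_hz):
    """Ideal correlation samples at phase offsets 0, 90, 180 and 270 degrees.

    Each sample is a constant ambient (background) term plus a cosine term
    whose phase encodes the round-trip delay of the modulated light.
    """
    phase = 4.0 * math.pi * f_mod_hz * distance_m / C  # round-trip phase delay
    offsets = (0.0, 0.5 * math.pi, math.pi, 1.5 * math.pi)
    return [ambient + amplitude * math.cos(phase + tau) for tau in offsets]


def demodulate(samples, f_mod_hz):
    """Recover distance and modulation amplitude from the four samples."""
    a0, a1, a2, a3 = samples
    # The ambient term is identical in every sample, so it cancels here.
    sin_part = a3 - a1  # equals 2 * amplitude * sin(phase)
    cos_part = a0 - a2  # equals 2 * amplitude * cos(phase)
    phase = math.atan2(sin_part, cos_part) % (2.0 * math.pi)
    amplitude = 0.5 * math.hypot(sin_part, cos_part)
    distance = C * phase / (4.0 * math.pi * f_mod_hz)
    return distance, amplitude


if __name__ == "__main__":
    f_mod = 20e6  # assumed modulation frequency in Hz
    for ambient in (0.0, 50.0):  # without and with background light
        samples = correlation_samples(1.5, 1.0, ambient, f_mod)
        print(ambient, demodulate(samples, f_mod))
```

In this idealized model the recovered distance is the same for both ambient levels. Real sensors add shot noise and saturation, which this sketch ignores.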

2. Materials and Methods

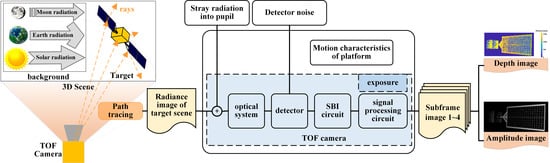

2.1. Imaging Principle of TOF Camera

2.2. Imaging Characteristic Modeling

2.2.1. Target Material Characteristics Modeling

2.2.2. Background Characteristics Modeling

- (1) The irradiance generated at the target by direct solar radiation depends on the following quantities: the wavelength, in µm; the first and second blackbody radiation constants; the solar radiation temperature, in K; the solar radius, in km; the distance between the Sun and the Earth; and the observation band of the TOF camera.

- (2) Assuming that the space target is in a high Earth orbit and that the Earth is a diffuse sphere, the irradiance generated at the target by solar radiation reflected from the Earth is approximated from the following quantities: the average albedo of the Earth; the radius of the Earth, in km; the height of the target above the ground; and the angle between two vectors, whose value range is bounded.

- (3) Similarly, assuming that the Moon is a diffuse sphere, the irradiance generated at the target by solar radiation reflected from the Moon depends on the following quantities: the average albedo of the Moon; the radius of the Moon, in km; the distance between the target and the center of mass of the Moon; and the angle between two vectors, whose value range is bounded.
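
As a hedged illustration of item (1), the sketch below integrates Planck's blackbody law over a wavelength band and scales the result by the squared ratio of the solar radius to the Sun-target distance. This is the standard blackbody form suggested by the quantities listed in item (1), not necessarily the authors' exact expression. The numerical values (5772 K, 6.957 × 10^5 km, one astronomical unit) are commonly used nominal constants and are assumptions here, as are the function names and the 0.8–0.9 µm example band.

```python
import math

C1 = 3.741771852e8  # first radiation constant, W·µm^4/m^2
C2 = 1.438776877e4  # second radiation constant, µm·K


def planck_exitance(wavelength_um, temperature_k):
    """Blackbody spectral exitance in W/(m^2·µm)."""
    return C1 / (wavelength_um ** 5 * math.expm1(C2 / (wavelength_um * temperature_k)))


def solar_band_irradiance(lam_min_um, lam_max_um,
                          temperature_k=5772.0,           # assumed solar temperature
                          sun_radius_km=6.957e5,          # assumed solar radius
                          sun_distance_km=1.495978707e8,  # assumed Sun-target distance
                          steps=2000):
    """Direct solar irradiance in W/m^2 inside [lam_min_um, lam_max_um]."""
    h = (lam_max_um - lam_min_um) / steps
    # Composite trapezoidal rule over the band.
    total = 0.5 * (planck_exitance(lam_min_um, temperature_k)
                   + planck_exitance(lam_max_um, temperature_k))
    for i in range(1, steps):
        total += planck_exitance(lam_min_um + i * h, temperature_k)
    # Scale the exitance at the solar surface to the target distance.
    return (sun_radius_km / sun_distance_km) ** 2 * total * h


if __name__ == "__main__":
    # Example band around an 850 nm light source (band limits are an assumption).
    print(solar_band_irradiance(0.8, 0.9))
```

Integrating over a very wide band (for example 0.1 µm to 100 µm with a finer step) should approach the solar constant of roughly 1361 W/m², which is a convenient sanity check on the constants.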

2.2.3. SBI Characteristics Modeling

2.3. Imaging Simulation Modeling

2.3.1. Improved Path Tracing Algorithm of the TOF Camera

2.3.2. Imaging Link Impact Modeling

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Opromolla, R.; Fasano, G.; Rufino, G.; Grassi, M. A review of cooperative and uncooperative spacecraft pose determination techniques for close-proximity operations. Prog. Aerosp. Sci. 2017, 93, 53–72.

- [2] Klionovska, K.; Burri, M. Hardware-in-the-Loop Simulations with Umbra Conditions for Spacecraft Rendezvous with PMD Visual Sensors. Sensors 2021, 21, 1455.

- [3] Ravandoor, K.; Busch, S.; Regoli, L.; Schilling, K. Evaluation and Performance Optimization of PMD Camera for RvD Application. IFAC Proc. Vol. 2013, 46, 149–154.

- [4] Potier, A.; Kuwahara, T.; Pala, A.; Fujita, S.; Sato, Y.; Shibuya, Y.; Tomio, H.; Tanghanakanond, P.; Honda, T.; Shibuya, T.; et al. Time-of-Flight Monitoring Camera System of the De-orbiting Drag Sail for Microsatellite ALE-1. Trans. Jpn. Soc. Aeronaut. Space Sci. Aerosp. Technol. Jpn. 2021, 19, 774–783.

- [5] Martínez, H.G.; Giorgi, G.; Eissfeller, B. Pose estimation and tracking of non-cooperative rocket bodies using Time-of-Flight cameras. Acta Astronaut. 2017, 139, 165–175.

- [6] Ruel, S.; English, C.; Anctil, M.; Daly, J.; Smith, C.; Zhu, S. Real-time 3D vision solution for on-orbit autonomous rendezvous and docking. In Spaceborne Sensors III; SPIE: Bellingham, WA, USA, 2006; p. 622009.

- [7] Lebreton, J.; Brochard, R.; Baudry, M.; Jonniaux, G.; Salah, A.H.; Kanani, K.; Goff, M.L.; Masson, A.; Ollagnier, N.; Panicucci, P. Image simulation for space applications with the SurRender software. arXiv 2021, arXiv:2106.11322.

- [8] Han, Y.; Lin, L.; Sun, H.; Jiang, J.; He, X. Modeling the space-based optical imaging of complex space target based on the pixel method. Optik 2015, 126, 1474–1478.

- [9] Zhang, Y.; Lv, L.; Yang, C.; Gu, Y. Research on Digital Imaging Simulation Method of Space Target Navigation Camera. In Proceedings of the 2021 IEEE 16th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 August 2021; pp. 1643–1648.

- [10] Li, W.; Cao, Y.; Meng, D.; Wu, Z. Space target scattering characteristic imaging in the visible range based on ray tracing algorithm. In Proceedings of the 12th International Symposium on Antennas, Propagation and EM Theory (ISAPE), Hangzhou, China, 3–6 December 2018; pp. 1–3.

- [11] Xu, C.; Shi, H.; Gao, Y.; Zhou, L.; Shi, Q.; Li, J. Space-Based optical imaging dynamic simulation for spatial target. In Proceedings of the AOPC 2019: Optical Sensing and Imaging Technology, Beijing, China, 7–9 July 2019; p. 1133815.

- [12] Wang, H.; Zhang, W. Visible imaging characteristics of the space target based on bidirectional reflection distribution function. J. Mod. Opt. 2012, 59, 547–554.

- [13] Wang, H.; Zhang, W.; Wang, F. Infrared imaging characteristics of space-based targets based on bidirectional reflection distribution function. Infrared Phys. Technol. 2012, 55, 368–375.

- [14] Ding, Z.; Han, Y. Infrared characteristics of satellite based on bidirectional reflection distribution function. Infrared Phys. Technol. 2019, 97, 93–100.

- [15] Wang, H.; Chen, Y. Modeling and simulation of infrared dynamic characteristics of space-based space targets. Infrared Laser Eng. 2016, 45, 0504002.

- [16] Wang, F.; Eibert, T.F.; Jin, Y.-Q. Simulation of ISAR Imaging for a Space Target and Reconstruction under Sparse Sampling via Compressed Sensing. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3432–3441.

- [17] Schlutz, M. Synthetic Aperture Radar Imaging Simulated in MATLAB. Master’s Thesis, California Polytechnic State University, San Luis Obispo, CA, USA, 2009.

- [18] Keller, M.; Kolb, A. Real-time simulation of time-of-flight sensors. Simul. Model. Pract. Theory 2009, 17, 967–978.

- [19] Keller, M.; Orthmann, J.; Kolb, A.; Peters, V. A simulation framework for time-of-flight sensors. In Proceedings of the 2007 International Symposium on Signals, Circuits and Systems, Iasi, Romania, 13–14 July 2007; pp. 1–4.

- [20] Meister, S.; Nair, R.; Kondermann, D. Simulation of Time-of-Flight Sensors using Global Illumination. In Proceedings of the VMV, Lugano, Switzerland, 11–13 September 2013; pp. 33–40.

- [21] Lambers, M.; Hoberg, S.; Kolb, A. Simulation of Time-of-Flight Sensors for Evaluation of Chip Layout Variants. IEEE Sens. J. 2015, 15, 4019–4026.

- [22] Bulczak, D.; Lambers, M.; Kolb, A. Quantified, Interactive Simulation of AMCW ToF Camera Including Multipath Effects. Sensors 2018, 18, 13.

- [23] Thoman, P.; Wippler, M.; Hranitzky, R.; Fahringer, T. RTX-RSim: Accelerated Vulkan room response simulation for time-of-flight imaging. In Proceedings of the International Workshop on OpenCL, Munich, Germany, 27–29 April 2020; pp. 1–11.

- [24] Cho, J.; Choi, J.; Kim, S.-J.; Park, S.; Shin, J.; Kim, J.D.K.; Yoon, E. A 3-D Camera With Adaptable Background Light Suppression Using Pixel-Binning and Super-Resolution. IEEE J. Solid-State Circuits 2014, 49, 2319–2332.

- [25] Shin, J.; Kang, B.; Lee, K.; Kim, J.D.K. A 3D image sensor with adaptable charge subtraction scheme for background light suppression. In Proceedings of the Sensors, Cameras, and Systems for Industrial and Scientific Applications XIV, Burlingame, CA, USA, 3–7 February 2013; p. 865907.

- [26] Davidovic, M.; Seiter, J.; Hofbauer, M.; Gaberl, W.; Zimmermann, H. A background light resistant TOF range finder with integrated PIN photodiode in 0.35 μm CMOS. In Proceedings of the Videometrics, Range Imaging, and Applications XII; and Automated Visual Inspection, Munich, Germany, 13–16 May 2013; p. 87910R.

- [27] Davidovic, M.; Hofbauer, M.; Schneider-Hornstein, K.; Zimmermann, H. High dynamic range background light suppression for a TOF distance measurement sensor in 180nm CMOS. In Proceedings of the SENSORS, Limerick, Ireland, 28–31 October 2011; pp. 359–362.

- [28] Davidovic, M.; Zach, G.; Schneider-Hornstein, K.; Zimmermann, H. TOF range finding sensor in 90nm CMOS capable of suppressing 180 klx ambient light. In Proceedings of the SENSORS, Waikoloa, HI, USA, 1–4 November 2010; pp. 2413–2416.

- [29] Schmidt, M.; Jähne, B. A Physical Model of Time-of-Flight 3D Imaging Systems, Including Suppression of Ambient Light. In Workshop on Dynamic 3D Imaging; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–15.

- [30] Torrance, K.E.; Sparrow, E.M. Theory for Off-Specular Reflection from Roughened Surfaces. J. Opt. Soc. Am. 1967, 57, 1105–1114.

- [31] Hou, Q.; Zhi, X.; Zhang, H.; Zhang, W. Modeling and validation of spectral BRDF on material surface of space target. In International Symposium on Optoelectronic Technology and Application 2014: Optical Remote Sensing Technology and Applications; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; p. 929914.

- [32] Sun, C.; Yuan, Y.; Zhang, X.; Wang, Q.; Zhou, Z. Research on the model of spectral BRDF for space target surface material. In Proceedings of the 2010 International Symposium on Optomechatronic Technologies, Toronto, ON, Canada, 25–27 October 2010; pp. 1–6.

- [33] Peng, L.I.; Zhi, L.I.; Can, X.U. Measuring and Modeling the Bidirectional Reflection Distribution Function of Space Object’s Surface Material. In Proceedings of the 3rd International Conference on Materials Engineering, Manufacturing Technology and Control (ICMEMTC 2016), Taiyuan, China, 27–28 February 2016.

- [34] Schwarte, R.; Xu, Z.; Heinol, H.-G.; Olk, J.; Klein, R.; Buxbaum, B.; Fischer, H.; Schulte, J. New electro-optical mixing and correlating sensor: Facilities and applications of the photonic mixer device (PMD). In Proceedings of the Sensors, Sensor Systems, and Sensor Data Processing, Munich, Germany, 16–17 June 1997; pp. 245–253.

- [35] Ringbeck, T.; Möller, T.; Hagebeuker, B. Multidimensional measurement by using 3-D PMD sensors. Adv. Radio Sci. 2007, 5, 135–146.

- [36] Conde, M.H. Compressive Sensing for the Photonic Mixer Device; Springer: Berlin/Heidelberg, Germany, 2017.

- [37] Kajiya, J.T. The rendering equation. In Proceedings of the 13th Annual Conference on Computer Graphics and Interactive Techniques, Dallas, TX, USA, 18–22 August 1986; pp. 143–150.

- [38] Pharr, M.; Jakob, W.; Humphreys, G. Physically Based Rendering: From Theory to Implementation; Morgan Kaufmann: Burlington, MA, USA, 2016.

- [39] Li, J.; Liu, Z. Image quality enhancement method for on-orbit remote sensing cameras using invariable modulation transfer function. Opt. Express 2017, 25, 17134–17149.

- [40] Sukumar, V.; Hess, H.L.; Noren, K.V.; Donohoe, G.; Ay, S. Imaging system MTF-modeling with modulation functions. In Proceedings of the 2008 34th Annual Conference of IEEE Industrial Electronics, Orlando, FL, USA, 10–13 November 2008; pp. 1748–1753.

- [41] Langmann, B.; Hartmann, K.; Loffeld, O. Increasing the accuracy of Time-of-Flight cameras for machine vision applications. Comput. Ind. 2013, 64, 1090–1098.

- [42] Langmann, B. Wide Area 2D/3D Imaging: Development, Analysis and Applications; Springer: Berlin/Heidelberg, Germany, 2014.

| Material | | | | | | |
|---|---|---|---|---|---|---|
| Thermal control material | 0.649 | 0.081 | 8.51 | 0.014 | 0.775 | 2.6% |
| Silicon solar cells | 0.350 | 0.053 | 2.22 | 0.010 | 0.021 | 5.2% |

| Parameter | Value |
|---|---|
| Resolution | 224 × 171 pixels |
| Wavelength of light source | 850 nm |
| Field angle | 62° × 45° |
| Focal length | (208.33, 208.33) |
| Aperture | 2 mm |
| Acquisition time per frame | 4.8 ms typical at 45 fps |
| Average power consumption | 300 mW |

| Parameter | Value |
|---|---|
| Illumination area | 305 mm × 305 mm |
| Maximum angle of incidence | (half angle) < ±0.5° |
| Typical power output | 100 mW/cm² (1 SUN), ±20% adjustable |
| Uniformity | <±2% |
| Spectral match | 9.7–16.1% (800–900 nm) |

| Parameter | Value |
|---|---|
| Size of satellite body | 20 × 20 × 20 cm |
| Size of solar panel | 63 × 35 cm |
| 1.5 m | |
| 60° | |
| 10° | |
| 36,500 | |

| Image | Index | Measured Image | Ref. [22] Results | Our Results | Ref. [22] Error | Our Error |
|---|---|---|---|---|---|---|
| Grey | Mean | 17.79 | 16.73 | 18.25 | 5.96% | 2.59% |
| | Var | 1411.67 | 1347.53 | 1358.06 | 4.54% | 3.80% |
| | MSE | - | 1788.35 | 1782.60 | - | - |
| | SSIM | - | 0.70 | 0.72 | - | - |
| | PSNR | - | 15.60 | 15.62 | - | - |
| Depth | Mean | 403.04 | 750.28 | 476.75 | 86.16% | 18.29% |
| | Var | 2.95 × 10⁵ | 4.15 × 10⁵ | 3.38 × 10⁵ | 40.68% | 14.58% |
| | MSE | - | 5.46 × 10⁵ | 4.45 × 10⁵ | - | - |
| | SSIM | - | 0.80 | 0.85 | - | - |
| | PSNR | - | 38.95 | 39.85 | - | - |
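
The error and PSNR columns of the table above can be checked with a few lines of code. The sketch below is an illustration rather than the authors' evaluation script. It assumes the error is the relative difference between the simulated and measured statistic, and that PSNR follows the usual definition 10·log10(peak²/MSE). The peak values 255 (grey image) and 65535 (depth image) are assumptions: the source does not state them, but they reproduce the tabulated PSNR values to within rounding.

```python
import math


def relative_error(simulated, measured):
    """Relative difference between a simulated and a measured statistic."""
    return abs(simulated - measured) / abs(measured)


def psnr_from_mse(mse, peak):
    """Peak signal-to-noise ratio in dB for a given MSE and peak pixel value."""
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    # Grey-image mean: measured 17.79, Ref. [22] 16.73, proposed method 18.25.
    print(f"{relative_error(16.73, 17.79):.2%}")
    print(f"{relative_error(18.25, 17.79):.2%}")
    # Depth-image mean: measured 403.04, Ref. [22] 750.28, proposed method 476.75.
    print(f"{relative_error(750.28, 403.04):.2%}")
    print(f"{relative_error(476.75, 403.04):.2%}")
    # PSNR from the tabulated MSE values (peak values are assumptions).
    print(round(psnr_from_mse(1782.60, 255), 2))
    print(round(psnr_from_mse(4.45e5, 65535), 2))
```

Because the tabulated MSE values are themselves rounded, the recomputed PSNR can differ from the table in the last digit.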

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yan, Z.; Wang, H.; Liu, X.; Ning, Q.; Lu, Y. Physics-Based TOF Imaging Simulation for Space Targets Based on Improved Path Tracing. Remote Sens. 2022, 14, 2868. https://doi.org/10.3390/rs14122868
