NDFTC: A New Detection Framework of Tropical Cyclones from Meteorological Satellite Images with Deep Transfer Learning

Abstract

1. Introduction

- (1)

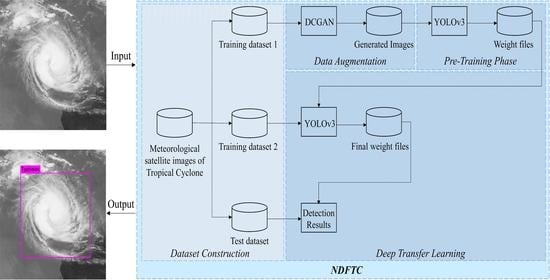

- In view of the finite data volume and complex backgrounds encountered in meteorological satellite images, a new detection framework of tropical cyclones (NDFTC) is proposed for accurate TC detection. The algorithm process of NDFTC consists of three major steps: data augmentation, a pre-training phase, and transfer learning, which ensures the effectiveness of detecting different kinds of TCs in complex backgrounds with finite data volume.

- (2)

- We used DCGAN as the data augmentation method instead of traditional data augmentation methods such as flip and crop. DCGAN can generate images simulated to TCs by learning the salient characteristics of TCs, which improves the utilization of finite data.

- (3)

- We used the YOLOv3 model as the detection model in the pre-training phase. The detection model is trained with the generated images obtained from DCGAN, which can help the model to learn the salient characteristics of TCs.

- (4)

- In the transfer learning phase, YOLOv3 is still the detection model, and it is trained with real TC images. Most importantly, the initial weights of the model are weights transferred from the model trained with generated images, which is a typically network-based deep transfer learning method. After that, the detection model can extract universal characteristics from real images of TCs and obtain a high accuracy.
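Items (3) and (4) hinge on one operation. The YOLOv3 weights learned from DCGAN-generated images become the starting point for training on real TC images. The following sketch illustrates that initialization step under a deliberately simplified, hypothetical representation: a dictionary that maps layer names to flat parameter lists. `random_init`, `transfer_weights`, and the layer names are illustrative only. They are not the paper's code or any framework's API.

```python
import random
from typing import Dict, List

# Hypothetical, simplified representation: each layer's parameters are a flat
# list of floats keyed by layer name. Real YOLOv3 code stores tensors instead.
Weights = Dict[str, List[float]]


def random_init(layer_sizes: Dict[str, int], seed: int = 0) -> Weights:
    """Small random initialization, standing in for training from scratch."""
    rng = random.Random(seed)
    return {name: [rng.gauss(0.0, 0.01) for _ in range(size)]
            for name, size in layer_sizes.items()}


def transfer_weights(pretrained: Weights, target: Weights) -> Weights:
    """Initialize `target` from `pretrained` (network-based transfer).

    Layers whose name and size match are copied from the pretrained model;
    any other layer keeps the target's own initialization.
    """
    initialized: Weights = {}
    for name, params in target.items():
        source = pretrained.get(name)
        if source is not None and len(source) == len(params):
            initialized[name] = list(source)   # reuse weights learned on generated images
        else:
            initialized[name] = list(params)   # no compatible pretrained layer
    return initialized


if __name__ == "__main__":
    # Hypothetical layer names and sizes, for illustration only.
    layers = {"backbone.conv1": 864, "backbone.conv2": 18432, "head.conv_out": 18}
    pretrained = random_init(layers, seed=1)   # stand-in for the weights kept after pre-training
    fresh = random_init(layers, seed=2)        # the model before training on real images
    start = transfer_weights(pretrained, fresh)
    print(all(start[name] == pretrained[name] for name in layers))  # True
```

The text describes YOLOv3 as the detection model in both phases, so every layer would be copied in that setting. The size check only matters if a layer, such as the detection head, changes between phases.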

2. Materials and Methods

2.1. Deep Convolutional Generative Adversarial Networks

2.2. You Only Look Once (YOLO) v3 Model

2.3. Loss Function

2.3.1. Loss Function of DCGAN

2.3.2. Loss Function of YOLOv3

2.4. Algorithm Process

| Algorithm 1. The algorithm process of NDFTC. |
|---|
| Start |
| Input: 2400 meteorological satellite images of TCs, collected from 1979 to 2019 in the South West Pacific Area. |
| A. Data Augmentation |
| (1) A total of 600 meteorological satellite images are input into the DCGAN model. These images are selected by randomly choosing 18 images from the TCs of each year (1979–2010), so that they contain the common characteristics of TCs over these years. |
| (2) The DCGAN model produces 1440 generated images with TC characteristics. These generated images are used only as training samples in the pre-training phase. |
| B. Pre-Training Phase |
| (3) The generated images obtained in step (2) are input into the YOLOv3 model. |
| (4) Feature extraction and preliminary detection of the generated images are completed. |
| (5) The weights obtained after 10,000 training iterations in step (4) are retained. |
| C. Transfer Learning |
| (6) After step (1), 1800 meteorological satellite images remain. Of these, 80%, that is, 1440 images from 1979 to 2011, are used as training samples in this phase. |
| (7) The model is trained on the training samples from step (6), with the weights from step (5) as its initial weights. This is a typical network-based deep transfer learning method. |
| (8) The remaining 360 meteorological satellite images, from 2011 to 2019, are used as testing samples, and the test is completed. |
| Output: detection results, accuracy, and average precision. |
| End |
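The sample counts in steps (1), (6), and (8) follow from simple arithmetic. After DCGAN training, 2400 − 600 = 1800 images remain. Of these, 80% (1440) are used for training and the remaining 360 for testing. The training years (1979 to 2011) precede the testing years (2011 to 2019), so the split appears to be chronological, and the sketch below assumes exactly that. The `(year, image_id)` record format and the function names are hypothetical, and the synthetic data only reproduces the counts.

```python
import random
from typing import List, Sequence, Set, Tuple

# Hypothetical record format: (year, image_id).
Record = Tuple[int, str]


def remove_used(records: Sequence[Record], used_ids: Set[str]) -> List[Record]:
    """Drop the images that were already fed to DCGAN."""
    return [r for r in records if r[1] not in used_ids]


def chronological_split(records: Sequence[Record], train_fraction: float = 0.8
                        ) -> Tuple[List[Record], List[Record]]:
    """Use the earliest `train_fraction` of the images for training, the rest for testing."""
    ordered = sorted(records)                      # by year, then by image id
    n_train = round(len(ordered) * train_fraction)
    return ordered[:n_train], ordered[n_train:]


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic stand-in for the 2400 images from 1979-2019.
    images = [(1979 + i % 41, f"img{i:04d}") for i in range(2400)]
    # Stand-in for step (1): a plain random draw of 600 images from 1979-2010,
    # not the paper's per-year selection rule.
    eligible = [image_id for year, image_id in images if year <= 2010]
    dcgan_ids = set(rng.sample(eligible, 600))
    remaining = remove_used(images, dcgan_ids)
    train, test = chronological_split(remaining)
    print(len(remaining), len(train), len(test))   # 1800 1440 360
```

Both year ranges include 2011. A count-based cut like this one can place the boundary partway through a year, which matches those ranges.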

3. Experimental Results

3.1. Data Set

3.2. Experiment Setup

3.3. Results and Discussion

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| TC | Tropical cyclone |
| TCs | Tropical cyclones |
| NDFTC | New detection framework of tropical cyclones |
| GAN | Generative adversarial nets |
| DCGAN | Deep convolutional generative adversarial networks |
| YOLO | You Only Look Once |
| NWP | Numerical weather prediction |
| ML | Machine learning |
| DT | Decision trees |
| RF | Random forest |
| SVM | Support vector machines |
| DNN | Deep neural networks |
| ReLU | Rectified linear unit |
| TP | True positive |
| TN | True negative |
| FP | False positive |
| FN | False negative |
| ACC | Accuracy |
| AP | Average precision |
| IOU | Intersection over union |
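The abbreviations TP, FP, IOU, and AP refer to standard object-detection metrics, and the algorithm's output includes average precision. This section does not give the paper's exact evaluation settings, so the sketch below assumes the common Pascal VOC-style convention. Under that convention, a detection counts as a TP if its highest-IOU ground-truth box in the same image has an IOU of at least 0.5 and has not already been matched. Otherwise it counts as an FP. AP is then the area under the all-point interpolated precision-recall curve. The box format and the function names are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def average_precision(detections: Sequence[Tuple[float, Box, int]],
                      ground_truth: Sequence[Tuple[Box, int]],
                      iou_threshold: float = 0.5) -> float:
    """All-point interpolated AP for a single class.

    detections:   (confidence, box, image_index) per predicted box
    ground_truth: (box, image_index) per labelled box
    """
    if not ground_truth:
        return 0.0
    matched = [False] * len(ground_truth)
    is_tp: List[bool] = []
    # Process detections from most to least confident.
    for _, box, img in sorted(detections, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, (gt_box, gt_img) in enumerate(ground_truth):
            if gt_img == img:
                overlap = iou(box, gt_box)
                if overlap > best_iou:
                    best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_threshold and not matched[best_j]:
            matched[best_j] = True
            is_tp.append(True)    # TP
        else:
            is_tp.append(False)   # FP: low overlap or duplicate detection

    # Cumulative precision and recall down the ranked list.
    precisions, recalls = [], []
    tp = fp = 0
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(ground_truth))

    # Make precision non-increasing in recall, then integrate over recall steps.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


if __name__ == "__main__":
    gt = [((0, 0, 10, 10), 0)]
    dets = [(0.95, (50, 50, 60, 60), 0), (0.90, (0, 0, 10, 10), 0)]
    print(round(iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429
    print(average_precision(dets, gt))                 # 0.5
```

In the example, the most confident detection misses the only TC and the second one hits it exactly. Precision is 0.5 when recall reaches 1, so AP is 0.5.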


| Model | Typhoon Type | 10,000 Iterations | 20,000 Iterations | 30,000 Iterations | 40,000 Iterations | 50,000 Iterations |
|---|---|---|---|---|---|---|
| YOLOv3 | TS | 71.21 | 80.30 | 87.88 | 90.91 | 92.42 |
| | STS | 83.46 | 86.47 | 89.47 | 90.98 | 94.74 |
| | TY | 85.59 | 88.29 | 90.09 | 91.89 | 92.79 |
| | STY | 88.75 | 90.00 | 91.25 | 92.50 | 95.00 |
| | SuperTY | 88.89 | 91.11 | 93.33 | 93.33 | 94.44 |
| NDFTC | TS | 87.50 | 92.50 | 92.50 | 95.00 | 97.50 |
| | STS | 88.46 | 91.35 | 92.31 | 93.27 | 98.07 |
| | TY | 89.41 | 92.94 | 94.12 | 95.29 | 96.47 |
| | STY | 91.67 | 93.33 | 95.00 | 96.67 | 98.33 |
| | SuperTY | 91.55 | 94.37 | 95.77 | 97.18 | 98.59 |

| Model | Typhoon Type | 10,000 Iterations | 20,000 Iterations | 30,000 Iterations | 40,000 Iterations | 50,000 Iterations |
|---|---|---|---|---|---|---|
| YOLOv3 | TS | 60.91 | 61.24 | 63.96 | 68.26 | 66.85 |
| | STS | 80.77 | 83.46 | 83.59 | 82.42 | 86.84 |
| | TY | 79.16 | 76.93 | 79.91 | 80.90 | 78.11 |
| | STY | 88.66 | 89.12 | 87.12 | 87.60 | 88.63 |
| | SuperTY | 82.82 | 81.14 | 83.23 | 81.43 | 79.81 |
| NDFTC | TS | 67.16 | 69.12 | 63.55 | 67.96 | 63.89 |
| | STS | 78.13 | 74.64 | 84.15 | 81.40 | 82.22 |
| | TY | 79.76 | 83.60 | 81.57 | 86.70 | 83.04 |
| | STY | 89.23 | 86.97 | 89.79 | 84.89 | 91.34 |
| | SuperTY | 84.03 | 85.20 | 79.89 | 80.50 | 82.52 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pang, S.; Xie, P.; Xu, D.; Meng, F.; Tao, X.; Li, B.; Li, Y.; Song, T. NDFTC: A New Detection Framework of Tropical Cyclones from Meteorological Satellite Images with Deep Transfer Learning. Remote Sens. 2021, 13, 1860. https://doi.org/10.3390/rs13091860
