UAV Remote Sensing Image Automatic Registration Based on Deep Residual Features

Abstract

1. Introduction

2. Materials and Methods

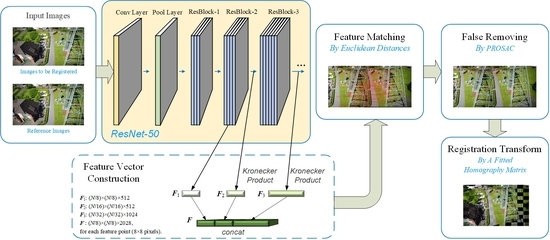

2.1. Deep Residual Feature Extraction Network

2.2. Feature Description Vector Construction and Matching

2.2.1. Feature Description Vector Construction

2.2.2. Feature Matching

2.3. False Match Elimination and Transform Model Fitting

3. Results

3.1. Experimental UAV Images

3.2. Visual Evaluation of Registration Results

3.3. Quantitative Comparison of Registration Results

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Li, W.; Li, C.; Wang, F. Research on UAV image registration based on SIFT algorithm acceleration. In Proceedings of the 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019.

2. Yang, C. A compilation of UAV applications for precision agriculture. Smart Agric. 2020, 2, 1–22.

3. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

4. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision (ICCV), Kerkyra, Greece, 20–27 September 1999.

5. Zhang, M.L.; Li, S.; Yu, F.; Tian, X. Image fusion employing adaptive spectral-spatial gradient sparse regularization in UAV remote sensing. Signal Process. 2020, 170, 107434.

6. Jeong, D.M.; Kim, J.H.; Lee, Y.W.; Kim, B.G. Robust weighted keypoint matching algorithm for image retrieval. In Proceedings of the 2nd International Conference on Video and Image Processing (ICVIP 2018), Hong Kong, China, 29–31 December 2018.

7. Wang, C.Y.; Chen, J.B.; Chen, J.S.; Yue, A.Z.; He, D.X.; Huang, Q.Q.; Zhang, Y. Unmanned aerial vehicle oblique image registration using an ASIFT-based matching method. J. Appl. Remote Sens. 2018, 12, 025002.

8. Bay, H.; Tuytelaars, T.; Gool, L.V. SURF: Speeded up robust features. Comput. Vis. Image Underst. 2008, 110, 346–359.

9. Hossein-Nejad, Z.; Nasri, M. Image registration based on SIFT features and adaptive RANSAC transform. In Proceedings of the 2016 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, India, 6–8 April 2016.

10. Nex, F.; Gerke, M.; Remondino, F.; Przybilla, H.J.; Bäumker, M.; Zurhorst, A. ISPRS benchmark for MultiPlatform photogrammetry. ISPRS Ann. 2015, II-3/W4, 135–142.

11. Yu, R.; Yang, Y.; Yang, K. Small UAV based multi-viewpoint image registration for extracting the information of cultivated land in the hills and mountains. In Proceedings of the 26th International Conference on Geoinformatics, Kunming, China, 28–30 June 2018.

12. Fernandez, P.; Bartoli, A.; Davison, A. KAZE features. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

13. Gu, J.; Wang, Z.; Kuen, J. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

14. Meng, L.; Zhou, J.; Liu, S.; Ding, L. Investigation and evaluation of algorithms for unmanned aerial vehicle multispectral image registration. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 102403.

15. Fischer, P.; Dosovitskiy, A.; Brox, T. Descriptor matching with convolutional neural networks: A comparison to SIFT. Comput. Sci. 2014, 4, 678–694.

16. Nassar, A.; Amer, K.; ElHakim, R.; ElHelw, M. A deep CNN-based framework for enhanced aerial imagery registration with applications to UAV geolocalization. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018.

17. Nguyen, T.; Chen, S.W.; Shivakumar, S.S.; Taylor, C.J.; Kumar, V. Unsupervised deep homography: A fast and robust homography estimation model. IEEE Robot. Autom. Lett. 2018, 3, 2346–2353.

18. Ye, F.; Su, Y.; Xiao, H. Remote sensing image registration using convolutional neural network features. IEEE Geosci. Remote Sens. Lett. 2018, 15, 232–236.

19. Wang, X.; Zeng, W.; Yang, X. Bi-channel image registration and deep-learning segmentation (BIRDS) for efficient, versatile 3D mapping of mouse brain. Nat. Libra Medic. 2021, 10, e63455.

20. Zhang, R.; Xu, F.; Yu, H.; Yang, W.; Li, H.C. Edge-driven object matching for UAV images and satellite SAR images. In Proceedings of the 2020 IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020.

21. Nazib, R.A.; Moh, S. Energy-efficient and fast data collection in UAV-aided wireless sensor networks for hilly terrains. IEEE Access 2021, 9, 23168–23190.

22. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

23. Bae, W.; Yoo, J.; Ye, J.C. Beyond deep residual learning for image restoration: Persistent homology-guided manifold simplification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017.

24. Yang, Z.; Dan, T.; Yang, Y. Multi-temporal remote sensing image registration using deep convolutional features. IEEE Access 2018, 6, 38544–38555.

25. Ye, H.; Su, K.; Huang, S. Image enhancement method based on bilinear interpolating and wavelet transform. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021.

26. Chum, O.; Matas, J. Matching with PROSAC – Progressive sample consensus. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 21–23 September 2005.

27. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

28. López, A.; Jurado, J.M.; Ogayar, C.J.; Feito, F.R. A framework for registering UAV-based imagery for crop-tracking in precision agriculture. Int. J. Appl. Earth Obs. Geoinf. 2021, 97, 102274.

29. Jhan, J.; Rau, J. A generalized tool for accurate and efficient image registration of UAV multi-lens multispectral cameras by N-SURF matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6353–6362.

30. Mohamed, K.; Adel, H.; Raphael, C. Vine disease detection in UAV multispectral images using optimized image registration and deep learning segmentation approach. Comput. Electron. Agric. 2020, 174, 105446.

31. Yan, J.; Wang, Z.; Wang, S. Real-time tracking of deformable objects based on combined matching-and-tracking. J. Electron. Imaging 2016, 25, 023019.

32. Ye, Y.; Bruzzone, L.; Shan, J.; Bovolo, F.; Zhu, Q. Fast and robust matching for multimodal remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9059–9070.

33. Hou, X.; Gao, Q.; Wang, R.; Luo, X. Satellite-borne optical remote sensing image registration based on point features. Sensors 2021, 21, 2695.

| Methods | Urban | Roads | Buildings | Farmlands | Forests |
|---|---|---|---|---|---|
| ORB [27] | 1.32818 | 1.35295 | 1.32732 | 1.20049 | 1.28705 |
| SIFT [28] | 1.23216 | 1.18053 | 1.17576 | 1.37352 | 1.26922 |
| SURF [29] | 1.12424 | 1.26695 | 1.29178 | 1.39047 | 1.33442 |
| KAZE [30] | 1.18462 | 1.29448 | 1.21727 | 1.26681 | 1.22871 |
| AKAZE [31] | 1.02061 | 1.15461 | 1.11633 | 1.16056 | 1.23265 |
| CFOG [32] | 33.9525 | 37.9518 | 39.1872 | 33.9503 | 35.7468 |
| KNN + TAR [33] | 1.40850 | 2.50624 | 5.96340 | 1.88389 | 6.99030 |
| VGG-16 [23] | 1.07819 | 1.01689 | 1.02182 | 1.06978 | 1.02238 |
| DResNet-50 | 0.98294 | 0.96423 | 1.01685 | 0.95157 | 0.93103 |
| BResNet-50 | 0.99255 | 1.02085 | 1.06765 | 1.02273 | 0.91334 |
| KResNet-50 | 0.94289 | 0.97997 | 0.99376 | 0.92051 | 0.90167 |

| Methods | Urban | Roads | Buildings | Farmlands | Forests |
|---|---|---|---|---|---|
| ORB [27] | 2.25200 | 2.32400 | 3.45700 | 1.41400 | 1.72700 |
| SIFT [28] | 125.25400 | 137.02500 | 163.34800 | 50.89900 | 93.88900 |
| SURF [29] | 64.02300 | 86.79900 | 139.72500 | 46.65200 | 42.79400 |
| KAZE [30] | 128.40800 | 180.03100 | 194.02800 | 72.71200 | 84.77400 |
| AKAZE [31] | 101.05300 | 144.14600 | 117.05400 | 35.76800 | 103.44700 |
| CFOG [32] | 4.38921 | 4.56470 | 4.41006 | 4.36076 | 4.36785 |
| KNN + TAR [33] | 24.98238 | 7.96253 | 12.62796 | 2.87249 | 28.61729 |
| VGG-16 [23] | 179.06431 | 94.41617 | 187.56367 | 116.60120 | 193.42121 |
| DResNet-50 | 205.24510 | 102.79676 | 209.37979 | 130.89990 | 200.82188 |
| BResNet-50 | 223.31729 | 118.71738 | 217.50212 | 143.52165 | 222.49254 |
| KResNet-50 | 219.79922 | 114.83660 | 225.45332 | 142.06583 | 222.08461 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luo, X.; Lai, G.; Wang, X.; Jin, Y.; He, X.; Xu, W.; Hou, W. UAV Remote Sensing Image Automatic Registration Based on Deep Residual Features. Remote Sens. 2021, 13, 3605. https://doi.org/10.3390/rs13183605
