Figure 1.

Growth in total photovoltaic (PV) power transactions and total PV energy consumption for 17 major cities and provinces. (a) Steady increase in average yearly PV power transactions over a decade using data retrieved from the Electric Power Statistics Information System, (b) rising trend in average yearly PV power consumption over a decade using data retrieved from the Korean Statistical Information Service.

Figure 2.

Basemap of the Korean peninsula showing the geographic location of the two test sites.

Figure 3.

Comparison of spectral response functions (SRF) for Communications, Oceans, and Meteorological Satellite Meteorological Imager (COMS MI) and Himawari-8 Advanced Himawari Imager (H8 AHI) using spectral responsivity data supplied by the National Meteorological Satellite Center (NMSC) and Japan Aerospace Exploration Agency (JAXA). The black-colored curves represent H8 SRF and the colored curves represent COMS SRF.

Figure 4.

Geographic coverage of COMS and GK2A local area observations centered on Korea and H8 target area observations focused on Japan.

Figure 5.

The increased temporal resolution of solar elevation (SE) and solar azimuth (SA) data within an hourly timestamp according to an arbitrary sun trajectory curve. Given a hypothetical forecast time horizon of n, the resulting solar geometry variables are (i) 4 SA variables each at h intervals and (ii) 4 SE variables each at h intervals.

Figure 6.

Detailed flowchart of the preprocessing steps applied to each input variable to produce an input dataset structured for use in the PV forecast models. For Step 1, individual satellite image bands are abbreviated as B1, B2, B4, B5 for COMS and B3, B7, B14, B15 for H8. The flowchart shows the workflow for a single hourly timestamp (t); the same steps should be applied to all timestamps in the one-year period to prepare the final preprocessed input dataset.

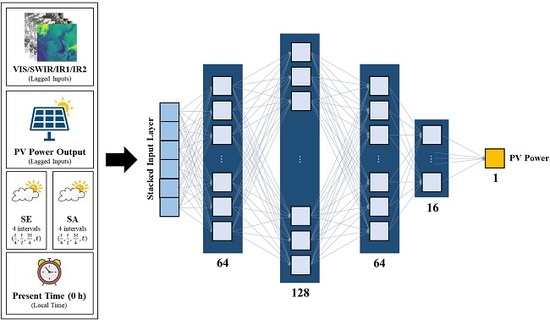

Figure 7.

Structure of the deep neural network (DNN) model used in this study, with four fully connected hidden layers of 64, 128, 64, and 16 nodes, respectively, producing a single PV power output prediction for each hourly timestamp. The input variables are included in the diagram to show how the input dataset feeds the input layer of the DNN.
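
The layer layout described in this caption can be sketched as a plain NumPy forward pass. This is only an illustration of a 64-128-64-16 fully connected structure with one output. The input width (`n_inputs = 12`), the ReLU activations, the linear output, and the random, untrained weights are assumptions made here and are not details taken from the study.

```python
import numpy as np

HIDDEN_SIZES = [64, 128, 64, 16]  # hidden-layer widths from the caption


def init_params(n_inputs, rng):
    """Create random weights and zero biases for every layer (untrained)."""
    sizes = [n_inputs] + HIDDEN_SIZES + [1]  # one PV power output node
    return [
        (rng.normal(0.0, 0.1, size=(fan_in, fan_out)), np.zeros(fan_out))
        for fan_in, fan_out in zip(sizes[:-1], sizes[1:])
    ]


def forward(params, x):
    """Run a batch of input rows through the fully connected network."""
    a = x
    for w, b in params[:-1]:
        a = np.maximum(a @ w + b, 0.0)  # ReLU activation (assumed)
    w_out, b_out = params[-1]
    return a @ w_out + b_out  # linear output (assumed), one value per row


rng = np.random.default_rng(0)
n_inputs = 12  # hypothetical number of input variables
params = init_params(n_inputs, rng)
batch = rng.random((5, n_inputs))  # five hourly timestamps of dummy inputs
prediction = forward(params, batch)
print(prediction.shape)  # (5, 1)
```

Each row of `batch` stands for the input variables of one hourly timestamp, and each row of `prediction` is the corresponding single output value.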

Figure 9.

2 hour (h)- and 1 h-ahead PV forecast results in normalized mean absolute error (NMAE) (%) for each spectral band from COMS and H8 datasets using support vector machine (SVM), artificial neural network (ANN), and DNN models. Solid color bars represent the “Sunlight H” time frame, whereas the overlapping stripe color bars represent the “All H” time frame using identical NMAE scales.

Figure 10.

2 h- and 1 h-ahead PV forecast results in NMAE (%) for each spectral band from COMS and H8 datasets using SVM, ANN, and DNN models based on setup 2.

Figure 11.

Monthly accumulated precipitation at each study site where the background area indicates the average monthly precipitation values of the two test sites. Precipitation data are an accumulated sum of the measurements.

Figure 12.

Seasonal variation for the 2 h-ahead PV forecast presented by monthly averaged error metrics.

Table 1.

Specifications of each test site used in this study.

| Test Site | Site Location | Data Acquisition Period | Time Span |
|---|---|---|---|
| 1 | 35°19′00″ N, 128°20′59″ E | April 2018‒March 2019 | 1 year |
| 2 | 36°47′47″ N, 127°35′40″ E | April 2018‒March 2019 | 1 year |

Table 2.

Detailed comparison of the spectral bands for the meteorological satellite images mentioned in this study. Spectral characteristics of GEO-KOMPSAT-2A (GK2A) are also included to show the similarity of the H8 AHI and GK2A Advanced Meteorological Imager (AMI) sensors. The spectral bands used in this study are emphasized in bold text with an asterisk placed next to the band number.

| Band | Name | COMS MI Wavelength (µm) | Band | Name | H8 AHI Wavelength (µm) | GK2A AMI Wavelength (µm) |
|---|---|---|---|---|---|---|
| | | | 1 | Blue | 0.460 | 0.470 |
| | | | 2 | Green | 0.510 | 0.510 |
| 1 * | VIS | 0.675 | 3 * | Red | 0.640 | 0.640 |
| | | | 4 | VIS | 0.860 | 0.860 |
| | | | 5 | NIR | 1.60 | 1.38 |
| | | | 6 | NIR | 2.30 | 1.61 |
| 2 * | NIR | 3.75 | 7 * | SWIR | 3.90 | 3.83 |
| | | | 8 | WV | 6.20 | 6.24 |
| 3 | WV | 6.75 | 9 | WV | 6.90 | 6.95 |
| | | | 10 | WV | 7.30 | 7.34 |
| | | | 11 | IR | 8.60 | 8.59 |
| | | | 12 | IR | 9.60 | 9.63 |
| 4 * | IR1 | 10.8 | 13 | IR | 10.4 | 10.4 |
| | | | 14 * | IR | 11.2 | 11.2 |
| 5 * | IR2 | 12.0 | 15 * | IR | 12.4 | 12.4 |
| | | | 16 | IR | 13.3 | 13.3 |

Table 3.

Evaluation metrics selected to assess the accuracy of PV power forecast results. Data ranges and ideal values are given for each metric.

| Metric | Range | Ideal Value | Formula |
|---|---|---|---|
| NMAE | 0 to 1 | 0 | |
| NRMSE | 0 to 1 | 0 | |
| CC | −1 to 1 | 1 | |
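
The formula column did not survive in this copy, so the following is a minimal sketch of how these three metrics are commonly computed, not a reproduction of the study's own definitions. It assumes that NMAE and NRMSE are normalized by a fixed capacity value and that CC is the Pearson correlation coefficient. The function names, the sample values, and the `capacity` parameter are all hypothetical.

```python
import math


def nmae(actual, predicted, capacity):
    """Mean absolute error divided by the normalizing capacity (assumed)."""
    total = sum(abs(p - a) for a, p in zip(actual, predicted))
    return total / (len(actual) * capacity)


def nrmse(actual, predicted, capacity):
    """Root mean square error divided by the normalizing capacity (assumed)."""
    mse = sum((p - a) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    return math.sqrt(mse) / capacity


def cc(actual, predicted):
    """Pearson correlation coefficient between measurements and forecasts."""
    n = len(actual)
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_a = sum((a - mean_a) ** 2 for a in actual)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_a * var_p)


actual = [0.0, 20.0, 55.0, 80.0, 40.0]     # made-up PV output (kW)
predicted = [2.0, 18.0, 60.0, 75.0, 42.0]  # made-up forecasts (kW)
capacity = 100.0                            # hypothetical capacity (kW)

print(f"NMAE  = {100 * nmae(actual, predicted, capacity):.2f} %")
print(f"NRMSE = {100 * nrmse(actual, predicted, capacity):.2f} %")
print(f"CC    = {cc(actual, predicted):.3f}")
```

Multiplying NMAE and NRMSE by 100 gives the percentage form used in the result tables, while the 0 to 1 range in this table refers to the unscaled ratio.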

Table 4.

NMAE results from 2 h-ahead PV power forecast for COMS and H8 datasets using three forecast models in “Sunlight H” and “All H” time frames (bold text indicates the lowest NMAE for each forecast model).

| Data | Model | Sunlight H VIS (%) | Sunlight H SWIR (%) | Sunlight H IR1 (%) | Sunlight H IR2 (%) | All H VIS (%) | All H SWIR (%) | All H IR1 (%) | All H IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Site 1 COMS | SVM | 6.13 | 6.07 | 5.91 | 5.88 | 4.63 | 4.47 | 4.43 | 4.44 |
| Site 1 COMS | ANN | 5.59 | 5.93 | 5.76 | 5.89 | 4.15 | 4.26 | 4.14 | 4.34 |
| Site 1 COMS | DNN | 5.34 | 5.45 | 5.34 | 5.30 | 3.53 | 3.81 | 3.60 | 3.62 |
| Site 1 H8 | SVM | 5.92 | 6.28 | 6.20 | 6.18 | 4.42 | 4.67 | 4.58 | 4.57 |
| Site 1 H8 | ANN | 5.57 | 5.83 | 5.79 | 5.84 | 4.16 | 4.26 | 4.34 | 4.32 |
| Site 1 H8 | DNN | 5.12 | 5.52 | 5.34 | 5.39 | 3.58 | 3.76 | 3.89 | 3.70 |
| Site 2 COMS | SVM | 6.20 | 6.39 | 6.39 | 6.38 | 4.83 | 5.08 | 4.65 | 4.66 |
| Site 2 COMS | ANN | 5.75 | 6.17 | 6.00 | 6.06 | 4.20 | 4.65 | 4.55 | 4.82 |
| Site 2 COMS | DNN | 5.55 | 5.93 | 6.09 | 6.03 | 3.94 | 4.04 | 4.29 | 4.13 |
| Site 2 H8 | SVM | 5.96 | 6.15 | 6.27 | 6.33 | 4.52 | 4.78 | 4.73 | 4.75 |
| Site 2 H8 | ANN | 6.33 | 6.56 | 6.55 | 6.57 | 4.33 | 4.47 | 4.75 | 4.64 |
| Site 2 H8 | DNN | 5.73 | 6.26 | 6.27 | 6.28 | 3.91 | 4.20 | 4.54 | 4.49 |

Table 5.

NMAE results from 1 h-ahead PV power forecast for COMS and H8 datasets using three forecast models in “Sunlight H” and “All H” time frames (bold text indicates the lowest NMAE values for each forecast model).

| Data | Model | Sunlight H VIS (%) | Sunlight H SWIR (%) | Sunlight H IR1 (%) | Sunlight H IR2 (%) | All H VIS (%) | All H SWIR (%) | All H IR1 (%) | All H IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Site 1 COMS | SVM | 4.05 | 4.07 | 4.05 | 4.05 | 3.32 | 3.14 | 3.03 | 3.04 |
| Site 1 COMS | ANN | 3.70 | 3.68 | 3.64 | 3.68 | 2.65 | 2.50 | 2.62 | 2.58 |
| Site 1 COMS | DNN | 3.59 | 3.56 | 3.70 | 3.51 | 2.25 | 2.35 | 2.36 | 2.32 |
| Site 1 H8 | SVM | 4.49 | 4.51 | 4.47 | 4.47 | 3.27 | 3.41 | 3.32 | 3.33 |
| Site 1 H8 | ANN | 3.82 | 3.90 | 3.98 | 3.97 | 2.80 | 2.89 | 2.89 | 2.90 |
| Site 1 H8 | DNN | 3.58 | 3.65 | 3.83 | 3.78 | 2.43 | 2.53 | 2.59 | 2.53 |
| Site 2 COMS | SVM | 4.23 | 4.53 | 4.50 | 4.46 | 3.45 | 3.46 | 3.32 | 3.31 |
| Site 2 COMS | ANN | 3.81 | 3.76 | 3.84 | 3.89 | 2.71 | 2.58 | 2.68 | 2.61 |
| Site 2 COMS | DNN | 3.57 | 3.69 | 3.68 | 3.73 | 2.28 | 2.22 | 2.27 | 2.31 |
| Site 2 H8 | SVM | 4.62 | 4.60 | 4.63 | 4.66 | 3.15 | 3.42 | 3.40 | 3.39 |
| Site 2 H8 | ANN | 3.95 | 4.09 | 4.19 | 4.21 | 2.72 | 2.91 | 3.03 | 3.06 |
| Site 2 H8 | DNN | 3.66 | 3.65 | 3.84 | 3.92 | 2.35 | 2.46 | 2.56 | 2.52 |

Table 6.

NRMSE results from the PV forecast for COMS and H8 datasets using the DNN model in “Sunlight H” and “All H” time frames.

| Forecast Horizon | Test Site | Data | Sunlight H VIS (%) | Sunlight H SWIR (%) | Sunlight H IR1 (%) | Sunlight H IR2 (%) | All H VIS (%) | All H SWIR (%) | All H IR1 (%) | All H IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| 2 h-Ahead | Site 1 | COMS | 8.65 * | 9.16 | 8.99 | 8.89 | 7.07 * | 7.66 | 7.31 | 7.36 |
| 2 h-Ahead | Site 1 | H8 | 8.42 * | 9.23 | 8.99 | 8.96 | 7.17 * | 7.71 | 7.78 | 7.36 |
| 2 h-Ahead | Site 2 | COMS | 9.56 * | 10.12 | 10.13 | 9.96 | 8.00 * | 8.28 | 8.47 | 8.36 |
| 2 h-Ahead | Site 2 | H8 | 9.81 * | 10.60 | 10.41 | 10.42 | 7.92 * | 8.74 | 8.91 | 8.93 |
| 1 h-Ahead | Site 1 | COMS | 6.07 | 6.03 | 6.24 | 6.03 | 4.78 * | 4.90 | 5.08 | 4.92 |
| 1 h-Ahead | Site 1 | H8 | 5.96 * | 6.14 | 6.27 | 6.26 | 4.94 * | 5.07 | 5.19 | 5.12 |
| 1 h-Ahead | Site 2 | COMS | 6.40 * | 6.56 | 6.52 | 6.56 | 5.06 * | 5.13 | 5.19 | 5.16 |
| 1 h-Ahead | Site 2 | H8 | 6.40 * | 6.50 | 6.60 | 6.67 | 5.44 * | 5.65 | 5.68 | 5.68 |

Table 7.

Correlation coefficient (CC) results from PV forecast for COMS and H8 datasets using the DNN model in “Sunlight H” and “All H” time frames.

| Forecast Horizon | Test Site | Data | Sunlight H VIS | Sunlight H SWIR | Sunlight H IR1 | Sunlight H IR2 | All H VIS | All H SWIR | All H IR1 | All H IR2 |
|---|---|---|---|---|---|---|---|---|---|---|
| 2 h-Ahead | Site 1 | COMS | 0.953 * | 0.947 | 0.950 | 0.951 | 0.964 * | 0.958 | 0.962 | 0.962 |
| 2 h-Ahead | Site 1 | H8 | 0.953 * | 0.947 | 0.949 | 0.948 | 0.963 * | 0.958 | 0.959 | 0.961 |
| 2 h-Ahead | Site 2 | COMS | 0.931 * | 0.924 | 0.922 | 0.924 | 0.948 * | 0.941 | 0.939 | 0.940 |
| 2 h-Ahead | Site 2 | H8 | 0.928 * | 0.917 | 0.918 | 0.918 | 0.947 * | 0.936 | 0.934 | 0.932 |
| 1 h-Ahead | Site 1 | COMS | 0.977 | 0.977 | 0.976 | 0.977 | 0.984 * | 0.983 | 0.982 | 0.983 |
| 1 h-Ahead | Site 1 | H8 | 0.978 * | 0.976 | 0.976 | 0.976 | 0.983 * | 0.982 | 0.982 | 0.982 |
| 1 h-Ahead | Site 2 | COMS | 0.970 * | 0.969 | 0.970 | 0.969 | 0.978 * | 0.977 | 0.977 | 0.977 |
| 1 h-Ahead | Site 2 | H8 | 0.969 * | 0.969 | 0.968 | 0.967 | 0.977 * | 0.976 | 0.976 | 0.976 |

Table 8.

NMAE results from 2 h- and 1 h-ahead PV power forecasts for COMS and H8 datasets using three forecast models (bold text indicates the lowest NMAE values for each forecast model).

| Data | Model | 2 h-Ahead VIS (%) | 2 h-Ahead SWIR (%) | 2 h-Ahead IR1 (%) | 2 h-Ahead IR2 (%) | 1 h-Ahead VIS (%) | 1 h-Ahead SWIR (%) | 1 h-Ahead IR1 (%) | 1 h-Ahead IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Site 1 COMS | SVM | 6.87 | 6.88 | 6.76 | 6.74 | 5.26 | 5.24 | 5.21 | 5.22 |
| Site 1 COMS | ANN | 6.49 | 6.69 | 6.51 | 6.52 | 4.89 | 4.78 | 4.92 | 4.83 |
| Site 1 COMS | DNN | 6.27 | 6.34 | 6.33 | 6.24 | 4.44 | 4.41 | 4.35 | 4.49 |
| Site 1 H8 | SVM | 6.99 | 6.97 | 6.87 | 6.86 | 5.34 | 5.29 | 5.27 | 5.28 |
| Site 1 H8 | ANN | 6.69 | 6.73 | 6.63 | 6.64 | 4.73 | 4.70 | 4.68 | 4.68 |
| Site 1 H8 | DNN | 6.26 | 6.30 | 6.29 | 6.27 | 4.35 | 4.35 | 4.44 | 4.45 |
| Site 2 COMS | SVM | 6.97 | 6.91 | 6.93 | 6.89 | 5.29 | 5.24 | 5.31 | 5.28 |
| Site 2 COMS | ANN | 6.73 | 6.80 | 6.88 | 6.79 | 4.83 | 4.80 | 4.94 | 4.80 |
| Site 2 COMS | DNN | 6.41 | 6.59 | 6.53 | 6.51 | 4.57 | 4.63 | 4.70 | 4.60 |
| Site 2 H8 | SVM | 7.17 | 7.00 | 6.98 | 6.95 | 5.20 | 5.21 | 5.25 | 5.23 |
| Site 2 H8 | ANN | 6.79 | 6.97 | 6.99 | 7.06 | 4.74 | 4.73 | 4.82 | 4.87 |
| Site 2 H8 | DNN | 6.75 | 6.48 | 6.60 | 6.52 | 4.41 | 4.61 | 4.68 | 4.68 |

Table 9.

NRMSE results from PV forecast for COMS and H8 datasets using the DNN model (bold text indicates the lower NRMSE value between COMS and H8 while the asterisk emphasizes the lowest NRMSE values for each dataset per site).

| Site | Data | 2 h-Ahead VIS (%) | 2 h-Ahead SWIR (%) | 2 h-Ahead IR1 (%) | 2 h-Ahead IR2 (%) | 1 h-Ahead VIS (%) | 1 h-Ahead SWIR (%) | 1 h-Ahead IR1 (%) | 1 h-Ahead IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|
| Site 1 | COMS | 9.81 | 9.90 | 9.77 * | 9.78 | 7.17 | 7.07 | 6.98 * | 7.06 |
| Site 1 | H8 | 9.97 | 9.77 * | 9.85 | 9.91 | 7.02 | 6.94 * | 6.99 | 7.04 |
| Site 2 | COMS | 9.84 * | 9.99 | 10.03 | 9.96 | 7.20 | 7.17 | 7.24 | 7.14 * |
| Site 2 | H8 | 10.37 | 10.03 | 10.15 | 9.96 * | 6.99 * | 7.18 | 7.27 | 7.29 |

Table 10.

CC results from PV forecast using the DNN model (bold text indicates the higher CC value between COMS and H8 while the asterisk emphasizes the highest CC values for each dataset per site).

| Site | Data | 2 h-Ahead VIS | 2 h-Ahead SWIR | 2 h-Ahead IR1 | 2 h-Ahead IR2 | 1 h-Ahead VIS | 1 h-Ahead SWIR | 1 h-Ahead IR1 | 1 h-Ahead IR2 |
|---|---|---|---|---|---|---|---|---|---|
| Site 1 | COMS | 0.923 | 0.923 | 0.925 * | 0.924 | 0.959 | 0.960 | 0.961 | 0.962 * |
| Site 1 | H8 | 0.916 | 0.920 * | 0.918 | 0.918 | 0.961 | 0.962 * | 0.961 | 0.961 |
| Site 2 | COMS | 0.916 | 0.916 | 0.915 | 0.915 | 0.956 | 0.957 * | 0.956 | 0.957 * |
| Site 2 | H8 | 0.906 | 0.913 * | 0.911 | 0.912 | 0.958 * | 0.957 | 0.957 | 0.955 |

Table 11.

Average NMAE results for 2 h- and 1 h-ahead forecasts from both setups organized with respect to the three forecast models (bold text indicates the lowest NMAE values for each model).

| Forecast | Site | Data | Sunlight H SVM (%) | Sunlight H ANN (%) | Sunlight H DNN (%) | All H SVM (%) | All H ANN (%) | All H DNN (%) | Setup 2 SVM (%) | Setup 2 ANN (%) | Setup 2 DNN (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 h-Ahead | Site 1 | COMS | 5.99 | 5.79 | 5.36 | 4.49 | 4.22 | 3.64 | 6.81 | 6.55 | 6.29 |
| 2 h-Ahead | Site 1 | H8 | 6.14 | 5.76 | 5.34 | 4.56 | 4.27 | 3.73 | 6.92 | 6.67 | 6.28 |
| 2 h-Ahead | Site 2 | COMS | 6.34 | 6.00 | 5.90 | 4.80 | 4.55 | 4.10 | 6.92 | 6.80 | 6.51 |
| 2 h-Ahead | Site 2 | H8 | 6.18 | 6.50 | 6.13 | 4.69 | 4.55 | 4.29 | 7.03 | 6.95 | 6.59 |
| 1 h-Ahead | Site 1 | COMS | 4.49 | 4.22 | 3.64 | 3.13 | 2.59 | 2.32 | 5.23 | 4.85 | 4.42 |
| 1 h-Ahead | Site 1 | H8 | 4.56 | 4.27 | 3.73 | 3.33 | 2.87 | 2.52 | 5.29 | 4.70 | 4.40 |
| 1 h-Ahead | Site 2 | COMS | 4.80 | 4.55 | 4.10 | 3.39 | 2.64 | 2.27 | 5.28 | 4.84 | 4.63 |
| 1 h-Ahead | Site 2 | H8 | 4.69 | 4.55 | 4.29 | 3.34 | 2.93 | 2.47 | 5.22 | 4.79 | 4.60 |
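
As a worked check of what "average" appears to mean here, the sketch below takes the four per-band 2 h-ahead "Sunlight H" NMAE values for Site 1 COMS from Table 4 and averages them per model. Reading Table 11 as a mean over the four spectral bands is an inference from the numbers, not something the caption states, and the variable names are hypothetical.

```python
# Per-band 2 h-ahead "Sunlight H" NMAE (%) for Site 1 COMS, copied from
# Table 4 in the order VIS, SWIR, IR1, IR2.
band_nmae = {
    "SVM": [6.13, 6.07, 5.91, 5.88],
    "ANN": [5.59, 5.93, 5.76, 5.89],
    "DNN": [5.34, 5.45, 5.34, 5.30],
}

# Assumed definition: arithmetic mean over the four spectral bands.
averages = {model: sum(values) / len(values)
            for model, values in band_nmae.items()}

for model, avg in averages.items():
    print(f"{model}: {avg:.4f}")
```

The resulting means agree with the first row of Table 11 (5.99, 5.79, 5.36) to within the rounding of the published two-decimal values.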

Table 12.

Average NMAE results of individual spectral bands for 2 h- and 1 h-ahead forecasts via the DNN model in both setups (bold text indicates the lowest average NMAE values among the four spectral bands).

| Forecast | Site | Sunlight H VIS (%) | Sunlight H SWIR (%) | Sunlight H IR1 (%) | Sunlight H IR2 (%) | All H VIS (%) | All H SWIR (%) | All H IR1 (%) | All H IR2 (%) | Setup 2 VIS (%) | Setup 2 SWIR (%) | Setup 2 IR1 (%) | Setup 2 IR2 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 h-Ahead | Site 1 | 5.61 | 5.84 | 5.72 | 5.75 | 4.08 | 4.21 | 4.17 | 4.16 | 6.59 | 6.65 | 6.56 | 6.55 |
| 2 h-Ahead | Site 2 | 5.92 | 6.24 | 6.26 | 6.28 | 4.29 | 4.54 | 4.59 | 4.58 | 6.80 | 6.79 | 6.82 | 6.79 |
| 1 h-Ahead | Site 1 | 3.87 | 3.90 | 3.95 | 3.91 | 2.79 | 2.80 | 2.80 | 2.78 | 4.83 | 4.79 | 4.81 | 4.83 |
| 1 h-Ahead | Site 2 | 3.97 | 4.05 | 4.11 | 4.14 | 2.64 | 2.72 | 2.79 | 2.78 | 4.84 | 4.87 | 4.95 | 4.91 |

Table 13.

Statistical significance of the t-test evaluation in terms of p-value at a significance level of 1%. Two-tailed p-values are used to evaluate the statistical significance of the results (bold text indicates p-values that are below 1% significance level, while the asterisk highlights very low p-values that are near-zero).

| Forecast | Site | Time Frame (Setup) | Two-Tailed p-Value |
|---|---|---|---|
| 2 h-Ahead | Site 1 | Sunlight H (Setup 1) | 0.808 |
| 2 h-Ahead | Site 1 | All H (Setup 1) | 0.044 |
| 2 h-Ahead | Site 1 | Setup 2 | 0.751 |
| 2 h-Ahead | Site 2 | Sunlight H (Setup 1) | <0.001 * |
| 2 h-Ahead | Site 2 | All H (Setup 1) | <0.001 * |
| 2 h-Ahead | Site 2 | Setup 2 | 0.055 |
| 1 h-Ahead | Site 1 | Sunlight H (Setup 1) | 0.008 |
| 1 h-Ahead | Site 1 | All H (Setup 1) | <0.001 * |
| 1 h-Ahead | Site 1 | Setup 2 | 0.491 |
| 1 h-Ahead | Site 2 | Sunlight H (Setup 1) | 0.013 |
| 1 h-Ahead | Site 2 | All H (Setup 1) | <0.001 * |
| 1 h-Ahead | Site 2 | Setup 2 | 0.570 |

Table 14.

Locations of the automated surface observing system (ASOS) units and their distances to the test sites. Each ASOS unit is named after its geographic location. The distance is the straight-line distance between the ASOS unit and the given test site.

| Test Site | Name of ASOS Unit | Location of ASOS Unit | Distance |
|---|---|---|---|
| 1 | Uiryeong | 35°19′21″ N, 128°17′17″ E | 5.60 km |
| 2 | Cheongju | 36°38′21″ N, 127°16′26″ E | 22.0 km |
| 2 | Cheonan | 36°45′44″ N, 127°17′34″ E | 27.2 km |
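
A straight distance between two coordinate pairs can be approximated with the haversine formula on a spherical Earth. The sketch below applies it to the Site 1 and Site 2 coordinates from Table 1 and two of the ASOS locations above. The choice of the haversine formula, the 6371 km mean radius, and the helper names are assumptions; the method actually used to obtain the tabulated distances is not stated.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation (assumed)


def dms_to_deg(degrees, minutes, seconds):
    """Convert degrees, minutes, seconds to decimal degrees."""
    return degrees + minutes / 60.0 + seconds / 3600.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Coordinates as (latitude, longitude), all north and east.
site1 = (dms_to_deg(35, 19, 0), dms_to_deg(128, 20, 59))
uiryeong = (dms_to_deg(35, 19, 21), dms_to_deg(128, 17, 17))
site2 = (dms_to_deg(36, 47, 47), dms_to_deg(127, 35, 40))
cheonan = (dms_to_deg(36, 45, 44), dms_to_deg(127, 17, 34))

d_uiryeong = haversine_km(*site1, *uiryeong)
d_cheonan = haversine_km(*site2, *cheonan)
print(f"Site 1 to Uiryeong: {d_uiryeong:.1f} km")
print(f"Site 2 to Cheonan:  {d_cheonan:.1f} km")
```

Under these assumptions both results land close to the tabulated 5.60 km and 27.2 km.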