Individualization of Pinus radiata Canopy from 3D UAV Dense Point Clouds Using Color Vegetation Indices

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. UAV Flights

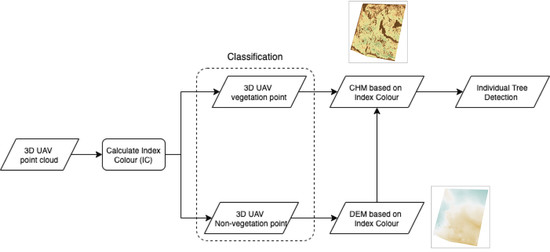

2.3. Ground Points Classification and Digital Elevation Model

2.4. Canopy Height Model and Individualization of Canopies

3. Results

3.1. Digital Elevation and Canopy Height Models

3.2. Individualization of Canopies

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Digital Model / Height Percentile [m] | 0 | 10 | 20 | 30 | 40 | 50 | 60 | 70 | 80 | 90 | 100 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| DEM-TIN | 53.30 | 59.17 | 60.55 | 62.05 | 63.80 | 64.93 | 66.20 | 67.50 | 68.59 | 70.98 | 83.40 |
| DEM-CVI | 53.15 | 58.36 | 59.47 | 60.46 | 62.11 | 65.66 | 64.65 | 65.83 | 67.10 | 68.16 | 74.75 |
| CHM-TIN | 0 | 0.59 | 4.31 | 8.59 | 11.52 | 13.62 | 15.27 | 16.70 | 18.16 | 19.91 | 28.54 |
| CHM-CVI | 0 | 0.75 | 4.95 | 11.58 | 14.34 | 16.09 | 17.48 | 18.72 | 19.99 | 21.55 | 29.01 |
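A height-percentile profile like the one tabulated above can be computed from any list of cell heights. The sketch below is only an illustration: the function name `height_percentiles`, the linear-interpolation rule, and the sample values are assumptions, not the procedure or data used in the study.

```python
def height_percentiles(heights, steps=range(0, 101, 10)):
    """Return {percentile: height} using linear interpolation between sorted values.

    Hypothetical helper: the interpolation rule is an assumption for illustration.
    """
    values = sorted(heights)
    if not values:
        raise ValueError("heights must not be empty")
    result = {}
    for q in steps:
        # Fractional position of the q-th percentile in the sorted list
        pos = (len(values) - 1) * q / 100
        lo = int(pos)
        hi = min(lo + 1, len(values) - 1)
        result[q] = values[lo] + (values[hi] - values[lo]) * (pos - lo)
    return result


# Hypothetical canopy heights in metres (not data from the study)
sample = [0.0, 0.4, 3.9, 8.1, 12.5, 14.0, 16.2, 18.3, 19.7, 21.4, 28.9]
profile = height_percentiles(sample)
for q, h in profile.items():
    print(f"P{q:>3}: {h:.2f} m")
```

Because the input is sorted before interpolation, a profile produced this way never decreases from one percentile to the next.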

CVI: Color Vegetation Index; TIN: Triangulated Irregular Network.

| Plot | Manual Inventory | CVI TP | CVI FP | CVI FN | CVI S | CVI P | CVI F | TIN TP | TIN FP | TIN FN | TIN S | TIN P | TIN F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 16 | 16 | 0 | 0 | 1.00 | 1.00 | 1.00 | 11 | 0 | 5 | 0.69 | 1.00 | 0.81 |
| 2 | 21 | 16 | 0 | 5 | 0.76 | 1.00 | 0.86 | 10 | 1 | 10 | 0.50 | 0.91 | 0.65 |
| 3 | 9 | 5 | 0 | 4 | 0.56 | 1.00 | 0.71 | 4 | 1 | 4 | 0.50 | 0.80 | 0.62 |
| 4 | 22 | 11 | 2 | 9 | 0.55 | 0.85 | 0.67 | 6 | 2 | 14 | 0.30 | 0.75 | 0.43 |
| 5 | 20 | 15 | 1 | 4 | 0.79 | 0.94 | 0.86 | 14 | 1 | 5 | 0.74 | 0.93 | 0.82 |
| 6 | 28 | 22 | 0 | 6 | 0.79 | 1.00 | 0.88 | 19 | 2 | 7 | 0.73 | 0.90 | 0.81 |
| 7 | 30 | 25 | 0 | 5 | 0.83 | 1.00 | 0.91 | 16 | 0 | 14 | 0.53 | 1.00 | 0.70 |
| 8 | 17 | 11 | 1 | 5 | 0.69 | 0.92 | 0.79 | 11 | 1 | 5 | 0.69 | 0.92 | 0.79 |
| 9 | 26 | 16 | 0 | 10 | 0.62 | 1.00 | 0.76 | 13 | 2 | 11 | 0.54 | 0.87 | 0.67 |
| 10 | 25 | 18 | 0 | 7 | 0.72 | 1.00 | 0.84 | 18 | 0 | 7 | 0.72 | 1.00 | 0.84 |
| 11 | 25 | 13 | 0 | 12 | 0.52 | 1.00 | 0.68 | 11 | 0 | 14 | 0.44 | 1.00 | 0.61 |
| 12 | 24 | 19 | 1 | 4 | 0.83 | 0.95 | 0.88 | 8 | 1 | 15 | 0.35 | 0.89 | 0.50 |
| 13 | 24 | 14 | 0 | 10 | 0.58 | 1.00 | 0.74 | 12 | 0 | 12 | 0.50 | 1.00 | 0.67 |
| 14 | 17 | 15 | 0 | 2 | 0.88 | 1.00 | 0.94 | 13 | 0 | 4 | 0.76 | 1.00 | 0.87 |
| 15 | 28 | 23 | 0 | 5 | 0.82 | 1.00 | 0.90 | 16 | 0 | 12 | 0.57 | 1.00 | 0.73 |
| 16 | 10 | 8 | 0 | 2 | 0.80 | 1.00 | 0.89 | 9 | 0 | 1 | 0.90 | 1.00 | 0.95 |
| 17 | 20 | 13 | 0 | 7 | 0.65 | 1.00 | 0.79 | 11 | 0 | 9 | 0.55 | 1.00 | 0.71 |
| 18 | 31 | 23 | 0 | 8 | 0.74 | 1.00 | 0.85 | 25 | 0 | 6 | 0.81 | 1.00 | 0.89 |
| 19 | 29 | 27 | 0 | 2 | 0.93 | 1.00 | 0.96 | 22 | 0 | 7 | 0.76 | 1.00 | 0.86 |
| 20 | 23 | 18 | 0 | 5 | 0.78 | 1.00 | 0.88 | 16 | 0 | 7 | 0.70 | 1.00 | 0.82 |
| 21 | 21 | 11 | 0 | 10 | 0.52 | 1.00 | 0.69 | 10 | 1 | 10 | 0.50 | 0.91 | 0.65 |
| 22 | 15 | 12 | 0 | 3 | 0.80 | 1.00 | 0.89 | 11 | 0 | 4 | 0.73 | 1.00 | 0.85 |
| 23 | 18 | 14 | 1 | 3 | 0.82 | 0.93 | 0.88 | 10 | 1 | 7 | 0.59 | 0.91 | 0.71 |
| 24 | 18 | 15 | 0 | 3 | 0.83 | 1.00 | 0.91 | 11 | 1 | 6 | 0.65 | 0.92 | 0.76 |
| 25 | 26 | 17 | 0 | 9 | 0.65 | 1.00 | 0.79 | 13 | 3 | 10 | 0.57 | 0.81 | 0.67 |
| 26 | 18 | 13 | 0 | 5 | 0.72 | 1.00 | 0.84 | 11 | 3 | 4 | 0.73 | 0.79 | 0.76 |
| 27 | 29 | 20 | 0 | 9 | 0.69 | 1.00 | 0.82 | 18 | 1 | 10 | 0.64 | 0.95 | 0.77 |
| 28 | 33 | 22 | 0 | 11 | 0.67 | 1.00 | 0.80 | 17 | 0 | 16 | 0.52 | 1.00 | 0.68 |
| 29 | 10 | 7 | 1 | 2 | 0.78 | 0.88 | 0.82 | 5 | 1 | 4 | 0.56 | 0.83 | 0.67 |
| 30 | 27 | 22 | 1 | 4 | 0.85 | 0.96 | 0.90 | 21 | 1 | 5 | 0.81 | 0.95 | 0.88 |
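The S, P and F columns above are consistent with the usual definitions of sensitivity (recall), precision and F-score computed from true positives (TP), false positives (FP) and false negatives (FN). That reading is an assumption inferred from the tabulated values, and the function name `detection_scores` is hypothetical. A minimal sketch that reproduces one row:

```python
def detection_scores(tp, fp, fn):
    """Return (S, P, F) from detection counts.

    Assumed definitions (inferred from the table, not stated in it):
      S = TP / (TP + FN)      sensitivity (recall)
      P = TP / (TP + FP)      precision
      F = 2 * S * P / (S + P) harmonic mean of S and P
    """
    s = tp / (tp + fn) if (tp + fn) else 0.0
    p = tp / (tp + fp) if (tp + fp) else 0.0
    f = 2 * s * p / (s + p) if (s + p) else 0.0
    return s, p, f


# Plot 2, Color Vegetation Index columns: TP = 16, FP = 0, FN = 5
s, p, f = detection_scores(16, 0, 5)
print(f"S={s:.2f} P={p:.2f} F={f:.2f}")  # prints "S=0.76 P=1.00 F=0.86"
```

The same call with the Triangulated Irregular Network counts for plot 4 (TP = 6, FP = 2, FN = 14) gives 0.30, 0.75 and 0.43, matching that row as well.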

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Cabrera-Ariza, A.M.; Lara-Gómez, M.A.; Santelices-Moya, R.E.; Meroño de Larriva, J.-E.; Mesas-Carrascosa, F.-J. Individualization of Pinus radiata Canopy from 3D UAV Dense Point Clouds Using Color Vegetation Indices. Sensors 2022, 22, 1331. https://doi.org/10.3390/s22041331
