Biomedical Imaging & Instrumentation

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Biomedical Sensors".

Viewed by 29145

Editor

Interests: advanced biomedical imaging (modalities, processing and exploitation) and all ancillaries and applications thereof; preclinical imaging; 3D imaging, processing and visualisation; multimodality imaging, processing and visualisation; image-guided biomedical applications (diagnosis, therapy planning, monitoring, follow-up); virtual and augmented reality; high-performance computing and visualisation; telemedicine; biomedical image archival


Topical Collection Information

Dear Colleagues,

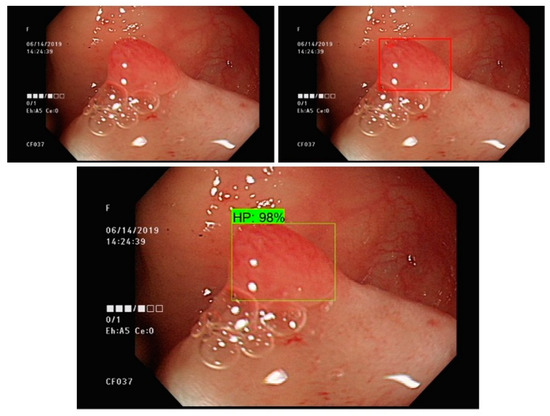

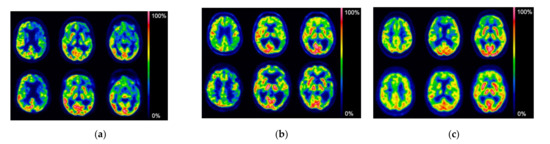

Modern healthcare relies ever more on technology, especially imaging in its many forms, to progress and bring tangible benefits to both patients and society. This Topical Collection of Sensors on “Biomedical Imaging and Instrumentation” will collect high-quality original contributions focusing on new or ongoing related technological developments at any stage of the translational pipeline, i.e., from the lab to the clinic. Of particular relevance are imaging modalities and sensors (e.g., all types of optical imaging and spectroscopy, ultrasound, photoacoustic, X-ray, CT, MR, SPECT, PET, charged particles) for preclinical and clinical applications (e.g., micro to animals to humans in oncology, cardiovascular, pulmonary, neuro, critical care, interventional, surgery), as well as related data and image processing, analysis and visualization (e.g., reconstruction, segmentation, radiomics, machine learning, 3D, AR/VR). Given their already demonstrated potential, advanced approaches and instrumentation related to multimodality, hybrid and parametric imaging, image-guided therapy, theranostics, computational planning, and hadron therapy are especially welcome. Although manuscripts describing actual (pre)clinical applications are of interest, all contributions must still exhibit significant technological content in order to comply with the overarching scope of this journal.

Prof. Dr. Luc Bidaut

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Sensors is an international, peer-reviewed, open access, semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- biomedical imaging modalities

- biomedical instrumentation

- new sensors for biomedical applications

- new imaging modalities or paradigms

- clinical translation

- disease characterization

- therapy planning, delivery or monitoring

- biomedical applications of ML/AI/DL (to diagnosis or therapy)