Advances in Sensors, Big Data and Machine Learning in Intelligent Animal Farming

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Sensing and Imaging".

Editors

Interests: agricultural robots; deep learning; multi-sensor fusion; pattern recognition

Interests: animal environment; poultry housing; precision animal farming; sustainable agriculture

Interests: image analysis and recognition; intelligent detection and control; agricultural information technology; digital plant and virtual technology; animal health and welfare

Interests: field robotics; SLAM; robot audition; computer vision; machine learning

Topical Collection Information

Dear Colleagues,

Animal production (milk, meat, and eggs) provides one of the most valuable sources of protein for humans and animals. However, animal production faces several challenges worldwide, such as its environmental impact and concerns over animal welfare and health. In animal farming, accurate and efficient monitoring of animal information and behavior can help farmers analyze the physiological, health, and welfare status of animals and identify sick or abnormal individuals, thereby reducing economic losses and protecting animal welfare.

In recent years, interest in animal welfare has grown. Sensors, big data, machine learning, and artificial intelligence are now increasingly used to improve management efficiency, reduce production costs, and enhance animal welfare. Although these technologies still face challenges and limitations, their application and further exploration on animal farms will greatly advance the intelligent management of farms.

This Topical Collection therefore invites original papers with novel contributions that use technologies such as sensors, big data, machine learning, and artificial intelligence to study animal behavior monitoring and recognition, environmental monitoring, health evaluation, and related topics, with the aim of promoting intelligent and accurate animal farm management.

This Topical Collection will focus on (but is not limited to) the following topics:

- Automatic animal detection and identification

- Animal welfare and health monitoring

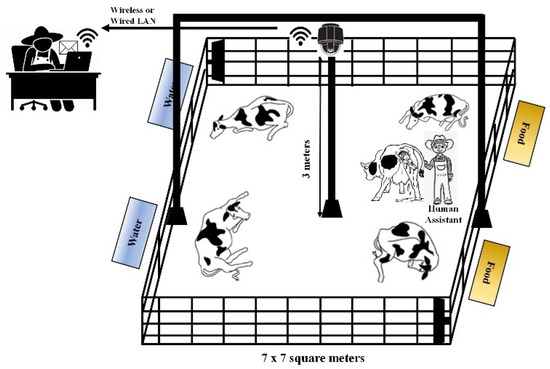

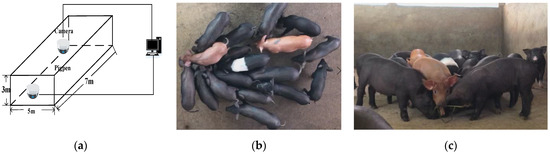

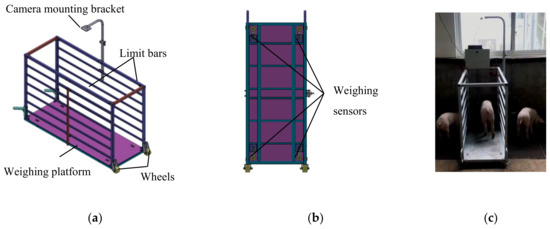

- Sensors and sensing techniques for animal behavior recognition and detection

- Sensors for automated animal and environmental management

- Quantification of individual animal behavioral and physiological variations

- Big data and machine learning for group behavior monitoring and evaluation

- Real-time modeling of animal responses and decision-making

- Sensor applications in precision livestock farming

- Application of robots in animal farming

Dr. Yongliang Qiao

Dr. Lilong Chai

Prof. Dr. Dongjian He

Dr. Daobilige Su

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as they are accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- animal farming

- animal welfare

- big data

- behavior monitoring

- animal identification

- internet of things

- deep learning

- precision livestock farming