Smart Robotics for Automation

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Physical Sensors".

Editor

Interests: robot control; mobile robotics; educational robotics; collaborative robotics

Topical Collection Information

Dear Colleagues,

In recent years, the demand for efficient automation in distribution centers has risen sharply, driven mainly by the growth of e-commerce. This trend continues and is spreading to other sectors. The world’s population is aging, so more efficient automation is needed to cope with the relative reduction in the available workforce in the near future. In addition, as more people move to cities and fewer are interested in working on farms, food production will also need to be automated more efficiently. Moreover, climate change is likely to add pressure through factors such as the shrinking area available for food production.

To address these important problems, this Topical Collection on “Smart Robotics for Automation” is dedicated to publishing research papers, communications, and review articles proposing solutions to increase the efficiency of automation systems with the application of smart robotics. By smart robotics we mean solutions that apply the latest developments in artificial intelligence, sensor systems (including computer vision), and control to manipulators and mobile robots. Solutions that increase the adaptability and flexibility of current robotic applications are also welcome.

Topics of interest relate to robotic automation. A non-exhaustive list follows:

- Process automation with intelligent robotics;

- Intelligent robotic applications for production systems;

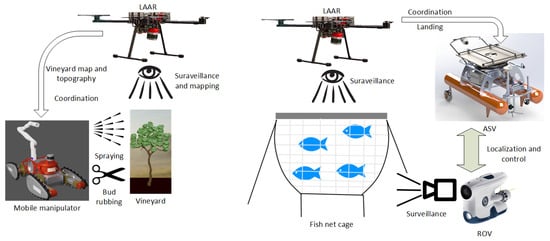

- Robotics applied to precision agriculture;

- AI and machine learning systems applied to robotics;

- Robot control;

- Robot manipulation and picking;

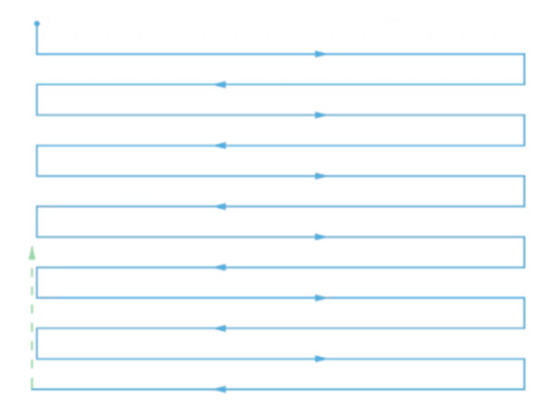

- Mobile robot navigation, localization, and mapping;

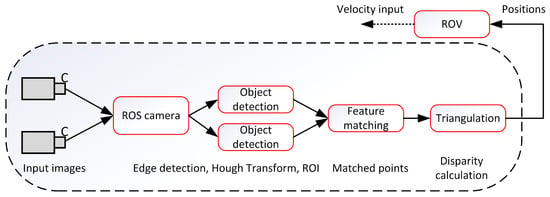

- Interpretation of sensor data (including vision systems);

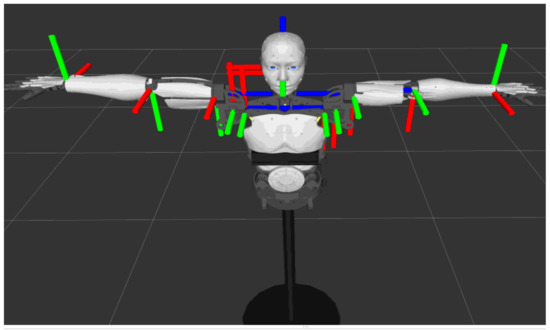

- Human–robot collaboration (including cobots);

- Multi-robot systems.

This Topical Collection focuses primarily on sensors. Papers that focus on automation may instead be submitted to our joint Topical Collection in Automation (ISSN 2673-4052).

Dr. Felipe N. Martins

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, click here to go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles as well as short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Control theory

- Manufacturing systems

- Learning systems

- Intelligent control systems

- Artificial neural networks

- Motion control and sensing

- Process automation and monitoring

- Sensors and signal processing

- Robotics and applications