Applications of Convolutional Neural Networks in Imaging and Sensing

A project collection of Sensors (ISSN 1424-8220). This project collection belongs to the section "Sensing and Imaging".

Papers displayed on this page all arise from the same project. Editorial decisions were made independently of project staff and handled by the Editor-in-Chief or qualified Editorial Board members.

Viewed by 32208

Editors

Interests: computer vision; machine learning; optimization

Special Issues, Collections and Topics in MDPI journals

Interests: signal/image/video processing and understanding; color imaging; machine learning

Interests: color imaging; spectral imaging; computational appearance

Project Overview

Dear Colleagues,

Convolutional Neural Networks (CNNs or ConvNets) are a class of deep neural networks that leverage spatial information and are therefore well suited to a wide range of tasks in image processing and computer vision.

By exploiting deep, end-to-end learnable architectures, CNNs can learn the features or abstract representations best suited to the particular problem at hand. This flexibility to adapt to different problems is among the reasons they now represent the state of the art in many challenging image processing and computer vision applications, where they mostly outperform traditional techniques based on handcrafted features.

Nonetheless, deep learning presents its own set of challenges: the need for large training sets can make data labeling cumbersome and expensive, and the high computational complexity of neural models can be an obstacle to deployment on mobile devices and to user personalization.

This Special Issue covers all the topics related to the application of CNNs to image processing and computer vision tasks, as well as topics related to the definition of new CNN architectures, highlighting their advantages in addressing the problems currently faced by the imaging community.

Possible contributions to the Special Issue include, but are not limited to, the following topics:

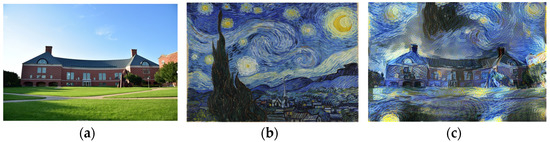

- Image synthesis and rendering;

- Image restoration and enhancement;

- Color, multi-spectral, and hyper-spectral imaging;

- Image and video quality assessment;

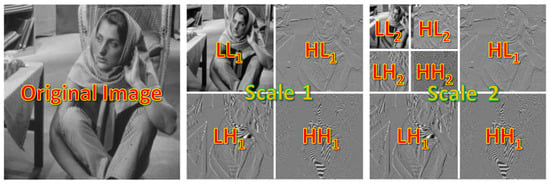

- Texture, image and video analysis;

- Image and video recognition, classification, and retrieval;

- Biomedical and biological image processing and analysis;

- Image and video quality control and anomaly detection;

- Image processing for cultural heritage;

- Image and video dehazing;

- Image processing for material and object appearance, soft metrology;

- Image processing applications;

- Image sensors.

Dr. Simone Bianco

Dr. Marco Buzzelli

Dr. Jean Baptiste Thomas

Guest Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.