Process Data Analytics

A topical collection in Processes (ISSN 2227-9717).

Editors

Interests: process data analytics; machine learning; big data; visualization; process monitoring; Industry 4.0

Topical Collection Information

Dear Colleagues,

Data analytics is a term used to describe a set of computational methods for analyzing data to extract knowledge and inform decision making. Typical information of interest includes (1) uncovering unknown patterns or correlations within the data; (2) constructing predictions of some variables as functions of other variables; (3) identifying data points that are atypical of the overall dataset; and (4) classifying different groups of outliers. Data analytics methods have developed rapidly over the last decade, driven primarily by the machine learning and related communities, which formulate answers to specific questions as optimization problems.

This Topical Collection concerns process data analytics, which refers to data analytics methods suited to the types of data and problems that arise in manufacturing processes. The quantity of process data collected and stored in historical databases for manufacturing processes has grown by orders of magnitude, but extracting the most value from these data has proved elusive. The tools commonly used in industrial practice have significant limitations in utility and performance, to such an extent that most data stored in historical databases are not analyzed at all, rather than merely being analyzed poorly. Tools from machine learning and related communities typically require significant modifications to be effective for process data. Moreover, the structure of the available prior mechanistic information and other domain knowledge on processes, as well as the types of questions that arise in manufacturing processes, have a specificity that must be taken into account to develop the most effective data analytics methods.

This Topical Collection, “Process Data Analytics”, aims to bring together recent advances and invites original contributions, both fundamental and applied, that add to our understanding of the field. Topics may include, but are not limited to:

- Process data analytics methods

- Machine learning methods adapted for application to manufacturing processes

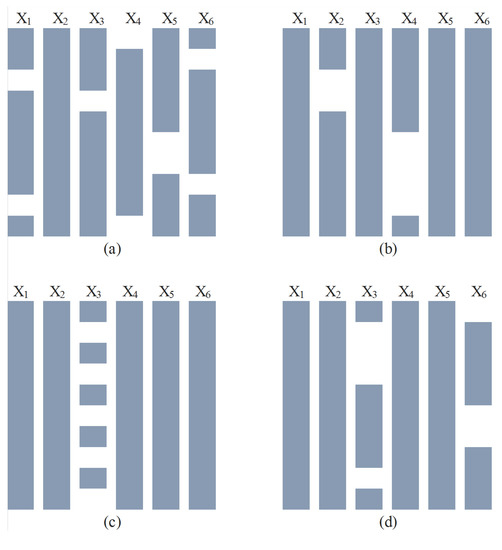

- Methods for better handling of missing data

- Fault detection and diagnosis

- Adaptive process monitoring

- Industrial case studies

- Applications to Big Data problems in manufacturing

- Hybrid data analytics methods

- Prognostic systems

Dr. Leo H. Chiang

Prof. Richard D. Braatz

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Processes is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2400 CHF (Swiss Francs). Submitted papers should be well formatted and written in good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Data analytics

- Process data analytics

- Big data

- Big data analytics

- Machine learning

- Diagnostic systems

- Prognostics

- Process monitoring

- Process health monitoring

- Fault detection and diagnosis