-

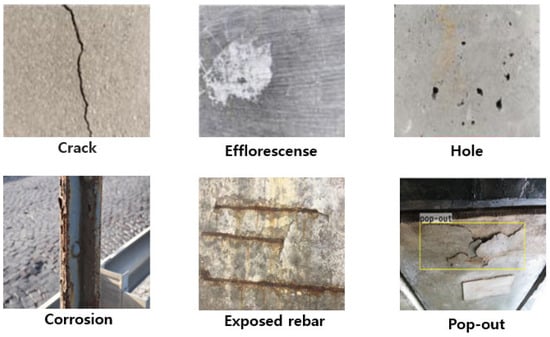

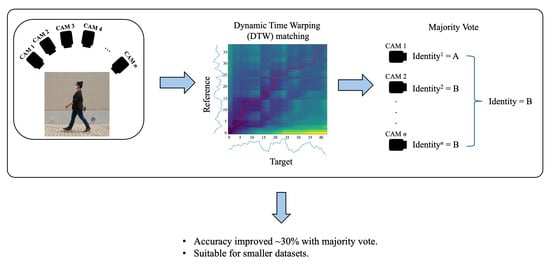

Source Camera Identification Techniques: A Survey

Source Camera Identification Techniques: A Survey -

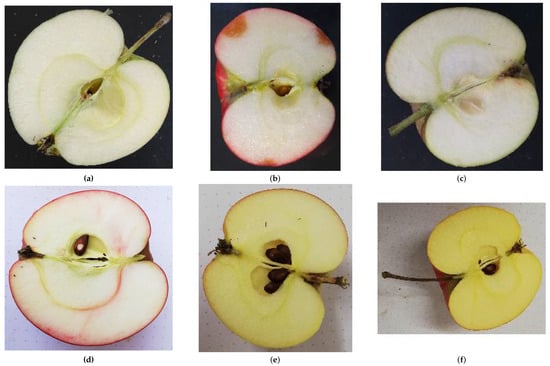

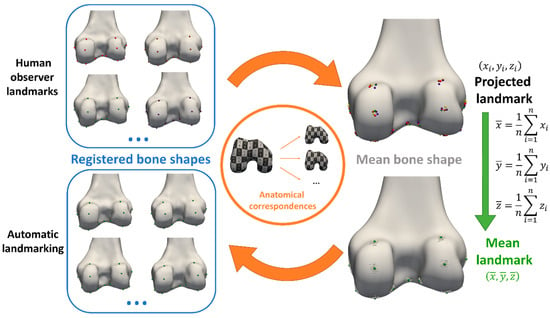

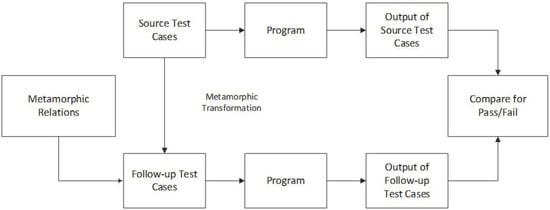

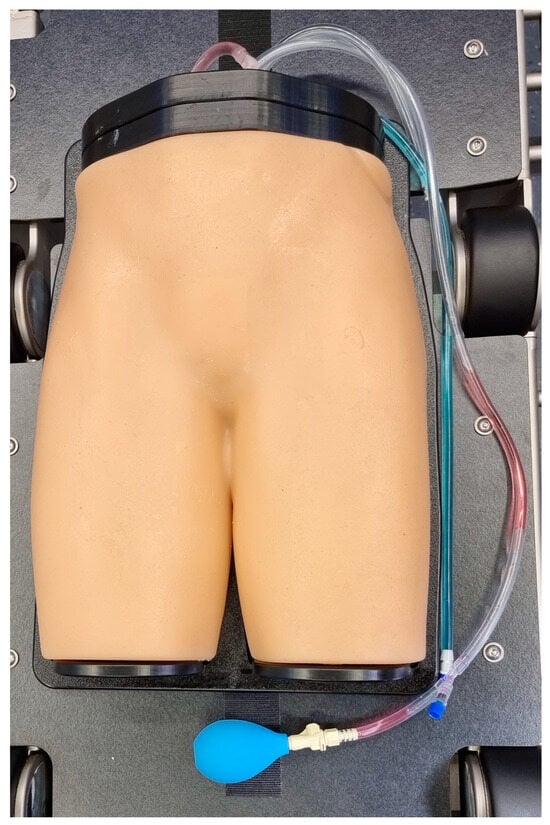

Point Projection Mapping System for Tracking, Registering, Labeling, and Validating Optical Tissue Measurements

Point Projection Mapping System for Tracking, Registering, Labeling, and Validating Optical Tissue Measurements -

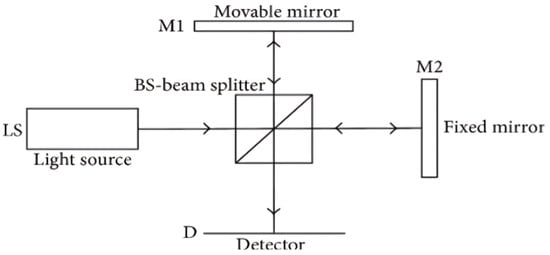

Identifying the Causes of Unexplained Dyspnea at High Altitude Using Normobaric Hypoxia with Echocardiography

Identifying the Causes of Unexplained Dyspnea at High Altitude Using Normobaric Hypoxia with Echocardiography -

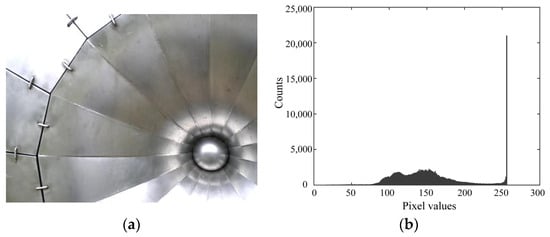

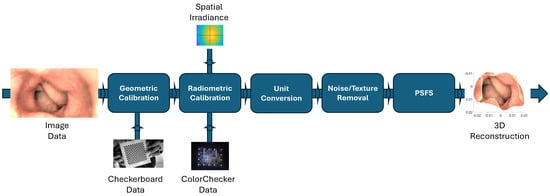

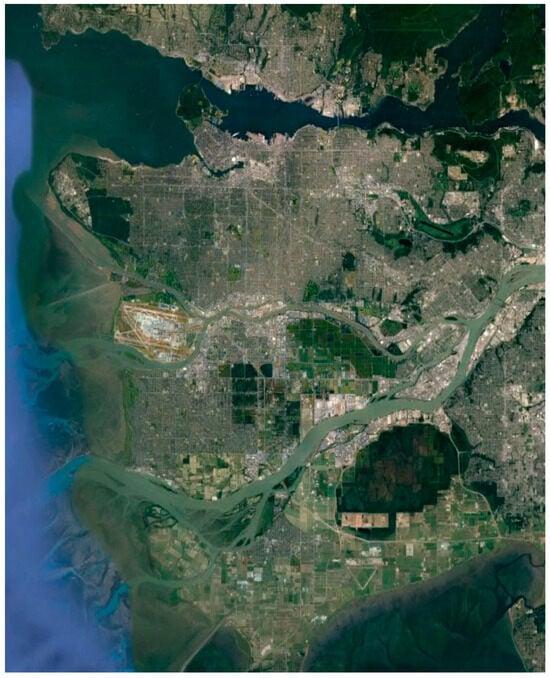

Fast Data Generation for Training Deep-Learning 3D Reconstruction Approaches for Camera Arrays

Journal Description

Journal of Imaging

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), PubMed, PMC, dblp, Inspec, Ei Compendex, and other databases.

- Journal Rank: CiteScore Q2 (Computer Graphics and Computer-Aided Design).

- Rapid Publication: manuscripts are peer-reviewed, and authors receive a first decision approximately 21.7 days after submission; the time from acceptance to publication is 3.8 days (median values for papers published in this journal in the second half of 2023).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.
