Journal Description

Econometrics is an international, peer-reviewed, open access journal on econometric modeling and forecasting, as well as new advances in econometrics theory, and is published quarterly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), EconLit, EconBiz, RePEc, and other databases.

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 53.8 days after submission; acceptance to publication is undertaken in 7.2 days (median values for papers published in this journal in the second half of 2023).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

Impact Factor: 1.5 (2022); 5-Year Impact Factor: 1.7 (2022)

Latest Articles

A Pretest Estimator for the Two-Way Error Component Model

Econometrics 2024, 12(2), 9; https://doi.org/10.3390/econometrics12020009 (registering DOI) - 16 Apr 2024

Abstract

►

Show Figures

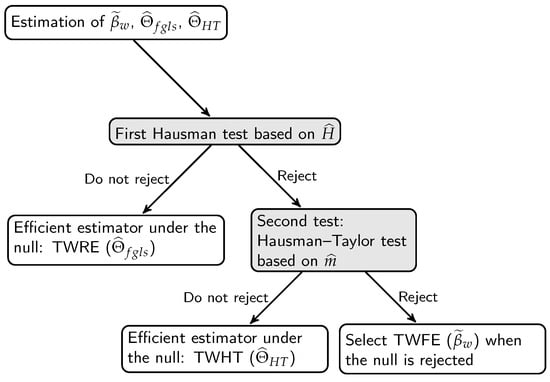

For a panel data linear regression model with both individual and time effects, empirical studies select the two-way random-effects (TWRE) estimator if the Hausman test based on the contrast between the two-way fixed-effects (TWFE) estimator and the TWRE estimator is not rejected. Alternatively,

[...] Read more.

For a panel data linear regression model with both individual and time effects, empirical studies select the two-way random-effects (TWRE) estimator if the Hausman test based on the contrast between the two-way fixed-effects (TWFE) estimator and the TWRE estimator does not reject the null hypothesis. Alternatively, they select the TWFE estimator in cases where this Hausman test rejects the null hypothesis. However, not all the regressors need be correlated with these individual and time effects. The one-way Hausman-Taylor model has been generalized to the two-way error component model to allow some but not all regressors to be correlated with these individual and time effects. This paper proposes a pretest estimator for this two-way error component panel data regression model based on two Hausman tests. The first Hausman test is based upon the contrast between the TWFE and the TWRE estimators. The second Hausman test is based on the contrast between the two-way Hausman and Taylor (TWHT) estimator and the TWFE estimator. The Monte Carlo results show that this pretest estimator is always second best in MSE performance compared to the efficient estimator, whether the model is random-effects, fixed-effects or Hausman and Taylor. This paper generalizes the one-way pretest estimator to the two-way error component model.
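The selection logic described in this abstract can be illustrated with a minimal sketch. It assumes a sequential rule carried over from the one-way pretest estimator: keep TWRE when the first Hausman test does not reject, otherwise keep TWHT when the second test does not reject, otherwise fall back to TWFE. The function name `pretest_choice`, the use of p-values as inputs, and the 5% level are illustrative assumptions, not details taken from the paper.

```python
def pretest_choice(p_fe_vs_re: float, p_ht_vs_fe: float, alpha: float = 0.05) -> str:
    """Pick an estimator label from two Hausman-test p-values.

    p_fe_vs_re: p-value of the Hausman test contrasting TWFE with TWRE.
    p_ht_vs_fe: p-value of the Hausman test contrasting TWHT with TWFE.
    alpha:      significance level (hypothetical default of 5%).
    """
    # Step 1: if the TWFE/TWRE contrast is not rejected, keep random effects.
    if p_fe_vs_re >= alpha:
        return "TWRE"
    # Step 2: if the TWHT/TWFE contrast is not rejected, keep Hausman-Taylor.
    if p_ht_vs_fe >= alpha:
        return "TWHT"
    # Otherwise fall back to fixed effects.
    return "TWFE"
```

For instance, under this assumed rule, `pretest_choice(0.01, 0.40)` selects the Hausman-Taylor estimator, because the first test rejects and the second does not.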

Open Access Article

Biases in the Maximum Simulated Likelihood Estimation of the Mixed Logit Model

by Maksat Jumamyradov, Murat Munkin, William H. Greene and Benjamin M. Craig

Econometrics 2024, 12(2), 8; https://doi.org/10.3390/econometrics12020008 - 27 Mar 2024

Abstract

In a recent study, it was demonstrated that the maximum simulated likelihood (MSL) estimator produces significant biases when applied to the bivariate normal and bivariate Poisson-lognormal models. The study’s conclusion suggests that similar biases could be present in other models generated by correlated bivariate normal structures, which include several commonly used specifications of the mixed logit (MIXL) models. This paper conducts a simulation study analyzing the MSL estimation of the error components (EC) MIXL. We find that the MSL estimator produces significant biases in the estimated parameters. The problem becomes worse when the true value of the variance parameter is small and the correlation parameter is large in magnitude. In some cases, the biases in the estimated marginal effects are as large as 12% of the true values. These biases are largely invariant to increases in the number of Halton draws.

Open Access Article

Public Debt and Economic Growth: A Panel Kink Regression Latent Group Structures Approach

by Chaoyi Chen, Thanasis Stengos and Jianhan Zhang

Econometrics 2024, 12(1), 7; https://doi.org/10.3390/econometrics12010007 - 05 Mar 2024

Abstract

►▼

Show Figures

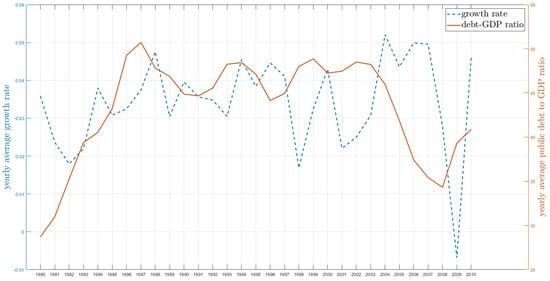

This paper investigates the relationship between public debt and economic growth in the context of a panel kink regression with latent group structures. The proposed model allows us to explore the heterogeneous threshold effects of public debt on economic growth based on unknown

[...] Read more.

This paper investigates the relationship between public debt and economic growth in the context of a panel kink regression with latent group structures. The proposed model allows us to explore the heterogeneous threshold effects of public debt on economic growth based on unknown group patterns. We propose a least squares estimator and demonstrate the consistency of estimating group structures. The finite sample performance of the proposed estimator is evaluated by simulations. Our findings reveal that the nonlinear relationship between public debt and economic growth is characterized by a heterogeneous threshold level, which varies among different groups, and highlight that the mixed results found in previous studies may stem from the assumption of a homogeneous threshold effect.

Open Access Editorial

Introduction to the Special Issue “High-Dimensional Time Series in Macroeconomics and Finance”

by Benedikt M. Pötscher, Leopold Sögner and Martin Wagner

Econometrics 2024, 12(1), 6; https://doi.org/10.3390/econometrics12010006 - 22 Feb 2024

Abstract

This Special Issue was organized in relation to the fifth Vienna Workshop on High-Dimensional Time Series in Macroeconomics and Finance, which took place at the Institute for Advanced Studies in Vienna on 9 June and 10 June 2022 [...]

Open Access Article

Multivariate Stochastic Volatility Modeling via Integrated Nested Laplace Approximations: A Multifactor Extension

by João Pedro Coli de Souza Monteneri Nacinben and Márcio Laurini

Econometrics 2024, 12(1), 5; https://doi.org/10.3390/econometrics12010005 - 19 Feb 2024

Abstract

►▼

Show Figures

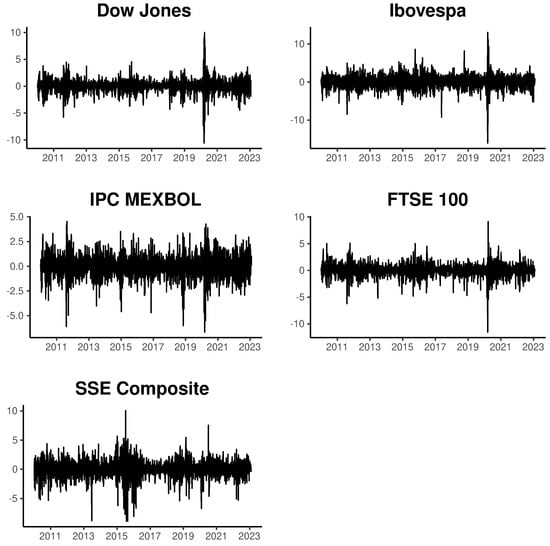

This study introduces a multivariate extension to the class of stochastic volatility models, employing integrated nested Laplace approximations (INLA) for estimation. Bayesian methods for estimating stochastic volatility models through Markov Chain Monte Carlo (MCMC) can become computationally burdensome or inefficient as the dataset

[...] Read more.

This study introduces a multivariate extension to the class of stochastic volatility models, employing integrated nested Laplace approximations (INLA) for estimation. Bayesian methods for estimating stochastic volatility models through Markov Chain Monte Carlo (MCMC) can become computationally burdensome or inefficient as the dataset size and problem complexity increase. Furthermore, issues related to chain convergence can also arise. In light of these challenges, this research aims to establish a computationally efficient approach for estimating multivariate stochastic volatility models. We propose a multifactor formulation estimated using the INLA methodology, enabling an approach that leverages sparse linear algebra and parallelization techniques. To evaluate the effectiveness of our proposed model, we conduct in-sample and out-of-sample empirical analyses of stock market index return series. Furthermore, we provide a comparative analysis with models estimated using MCMC, demonstrating the computational efficiency and goodness of fit improvements achieved with our approach.

Open Access Article

Influence of Digitalisation on Business Success in Austrian Traded Prime Market Companies—A Longitudinal Study

by Christa Hangl

Econometrics 2024, 12(1), 4; https://doi.org/10.3390/econometrics12010004 - 09 Feb 2024

Abstract

►▼

Show Figures

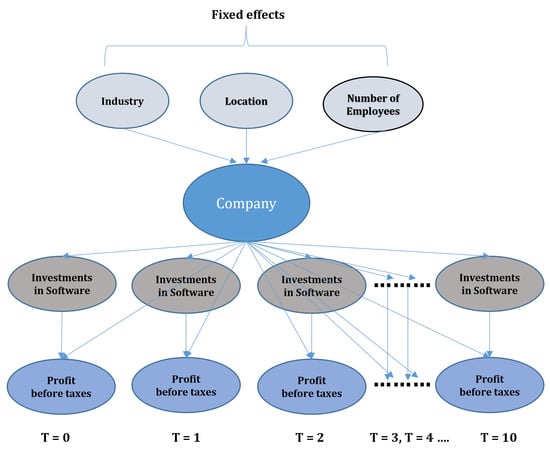

Software investments can significantly contribute to corporate success by optimising productivity, stimulating creativity, elevating customer satisfaction, and equipping organisations with the essential resources to adapt and thrive in a rapidly changing market. This paper examines whether software investments have an impact on the

[...] Read more.

Software investments can significantly contribute to corporate success by optimising productivity, stimulating creativity, elevating customer satisfaction, and equipping organisations with the essential resources to adapt and thrive in a rapidly changing market. This paper examines whether software investments have an impact on the economic success of the companies listed on the Austrian Traded Prime market (ATX companies). A literature review and qualitative content analysis are performed to answer the research questions. For testing hypotheses, a longitudinal study is conducted. Over a ten-year period, the consolidated financial statements of the businesses under review are evaluated. A panel will assist with the data analysis. This study offers notable distinctions from other research that has investigated the correlation between digitalisation and economic success. In contrast to prior studies that relied on surveys to assess the level of digitalisation, this study obtained the required data by conducting a comprehensive examination of the annual reports of all the organisations included in the analysis. The regression analysis of all businesses revealed no correlation between software expenditures and economic success. The regression models were subsequently calculated independently for financial and non-financial companies. The correlation between software investments and economic success in both industries is evident.

Open Access Article

Estimating Linear Dynamic Panels with Recentered Moments

by Yong Bao

Econometrics 2024, 12(1), 3; https://doi.org/10.3390/econometrics12010003 - 17 Jan 2024

Abstract

This paper proposes estimating linear dynamic panels by explicitly exploiting the endogeneity of lagged dependent variables and expressing the crossmoments between the endogenous lagged dependent variables and disturbances in terms of model parameters. These moments, when recentered, form the basis for model estimation. The resulting estimator’s asymptotic properties are derived under different asymptotic regimes (large number of cross-sectional units or long time spans), stable conditions (with or without a unit root), and error characteristics (homoskedasticity or heteroskedasticity of different forms). Monte Carlo experiments show that it has very good finite-sample performance.

Open AccessArticle

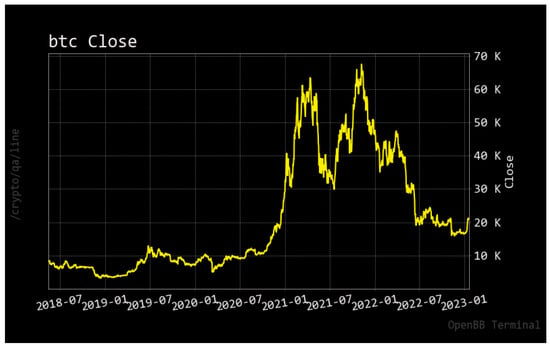

Is Monetary Policy a Driver of Cryptocurrencies? Evidence from a Structural Break GARCH-MIDAS Approach

by

Md Samsul Alam, Alessandra Amendola, Vincenzo Candila and Shahram Dehghan Jabarabadi

Econometrics 2024, 12(1), 2; https://doi.org/10.3390/econometrics12010002 - 05 Jan 2024

Abstract

►▼

Show Figures

The introduction of Bitcoin as a distributed peer-to-peer digital cash in 2008 and its first recorded real transaction in 2010 served the function of a medium of exchange, transforming the financial landscape by offering a decentralized, peer-to-peer alternative to conventional monetary systems. This

[...] Read more.

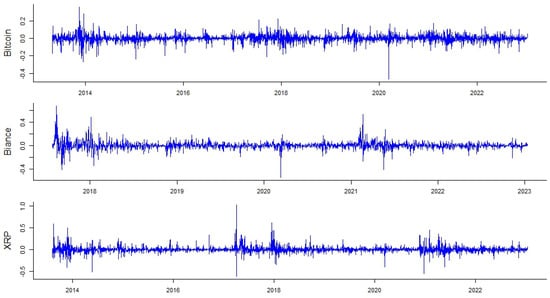

The introduction of Bitcoin as a distributed peer-to-peer digital cash in 2008 and its first recorded real transaction in 2010 served the function of a medium of exchange, transforming the financial landscape by offering a decentralized, peer-to-peer alternative to conventional monetary systems. This study investigates the intricate relationship between cryptocurrencies and monetary policy, with a particular focus on their long-term volatility dynamics. We enhance the GARCH-MIDAS (Mixed Data Sampling) through the adoption of the SB-GARCH-MIDAS (Structural Break Mixed Data Sampling) to analyze the daily returns of three prominent cryptocurrencies (Bitcoin, Binance Coin, and XRP) alongside monthly monetary policy data from the USA and South Africa with respect to the potential presence of a structural break in the monetary policy, which provided us with two GARCH-MIDAS models. The most recent observation in every sample is dated 30 June 2022, although the sample time range varies due to differences in cryptocurrency data accessibility. Our research incorporates model confidence set (MCS) procedures and assesses model performance using various metrics, including AIC, BIC, MSE, and QLIKE, supplemented by comprehensive residual diagnostics. Notably, our analysis reveals that the SB-GARCH-MIDAS model outperforms others in forecasting cryptocurrency volatility. Furthermore, we uncover that, in contrast to their younger counterparts, the long-term volatility of older cryptocurrencies is sensitive to structural breaks in exogenous variables. Our study sheds light on the diversification within the cryptocurrency space, shaped by technological characteristics and temporal considerations, and provides practical insights, emphasizing the importance of incorporating monetary policy in assessing cryptocurrency volatility. The implications of our study extend to portfolio management with dynamic consideration, offering valuable insights for investors and decision-makers, which underscores the significance of considering both cryptocurrency types and the economic context of host countries.

Open Access Editorial

Publisher’s Note: Econometrics—A New Era for a Well-Established Journal

by Peter Roth

Econometrics 2024, 12(1), 1; https://doi.org/10.3390/econometrics12010001 - 28 Dec 2023

Abstract

Throughout its lifespan, a journal goes through many phases—and Econometrics (Econometrics Homepage n [...]

Open Access Article

Multistep Forecast Averaging with Stochastic and Deterministic Trends

by Mohitosh Kejriwal, Linh Nguyen and Xuewen Yu

Econometrics 2023, 11(4), 28; https://doi.org/10.3390/econometrics11040028 - 15 Dec 2023

Abstract

►▼

Show Figures

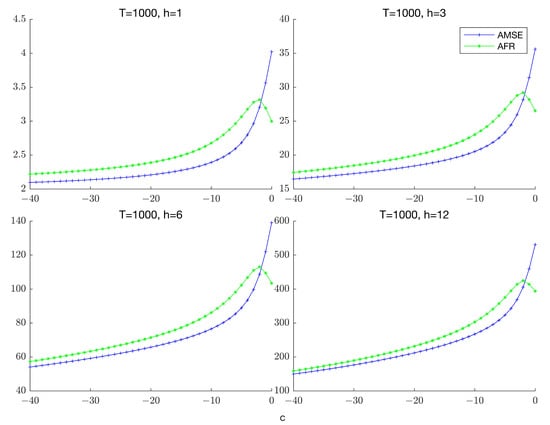

This paper presents a new approach to constructing multistep combination forecasts in a nonstationary framework with stochastic and deterministic trends. Existing forecast combination approaches in the stationary setup typically target the in-sample asymptotic mean squared error (AMSE), relying on its approximate equivalence with

[...] Read more.

This paper presents a new approach to constructing multistep combination forecasts in a nonstationary framework with stochastic and deterministic trends. Existing forecast combination approaches in the stationary setup typically target the in-sample asymptotic mean squared error (AMSE), relying on its approximate equivalence with the asymptotic forecast risk (AFR). Such equivalence, however, breaks down in a nonstationary setup. This paper develops combination forecasts based on minimizing an accumulated prediction errors (APE) criterion that directly targets the AFR and remains valid whether the time series is stationary or not. We show that the performance of APE-weighted forecasts is close to that of the optimal, infeasible combination forecasts. Simulation experiments are used to demonstrate the finite sample efficacy of the proposed procedure relative to Mallows/Cross-Validation weighting that targets the AMSE, as well as to underscore the importance of accounting for both persistence and lag order uncertainty. An application to forecasting US macroeconomic time series confirms the simulation findings and illustrates the benefits of employing the APE criterion for real as well as nominal variables at both short and long horizons. A practical implication of our analysis is that the degree of persistence can play an important role in the choice of combination weights.

Open Access Article

Liquidity and Business Cycles—With Occasional Disruptions

by Willi Semmler, Gabriel R. Padró Rosario and Levent Koçkesen

Econometrics 2023, 11(4), 27; https://doi.org/10.3390/econometrics11040027 - 12 Dec 2023

Abstract

►▼

Show Figures

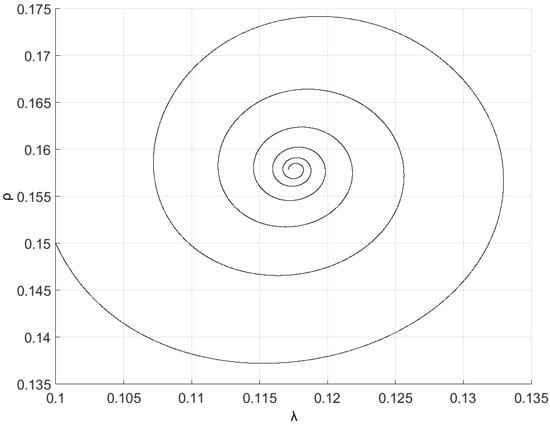

Some financial disruptions that started in California, U.S., in March 2023, resulting in the closure of several medium-size U.S. banks, shed new light on the role of liquidity in business cycle dynamics. In the normal path of the business cycle, liquidity and output

[...] Read more.

Some financial disruptions that started in California, U.S., in March 2023, resulting in the closure of several medium-size U.S. banks, shed new light on the role of liquidity in business cycle dynamics. In the normal path of the business cycle, liquidity and output mutually interact. Small shocks generally lead to mean reversion through market forces, as a low degree of liquidity dissipation does not significantly disrupt the economic dynamics. However, larger shocks and greater liquidity dissipation arising from runs on financial institutions and contagion effects can trigger tipping points, financial disruptions, and economic downturns. The latter poses severe challenges for Central Banks, which during normal times, usually maintain a hands-off approach with soft regulation and monitoring, allowing the market to operate. However, in severe times of liquidity dissipation, they must swiftly restore liquidity flows and rebuild trust in stability to avoid further disruptions and meltdowns. In this paper, we present a nonlinear model of the liquidity–macro interaction and econometrically explore those types of dynamic features with data from the U.S. economy. Guided by a theoretical model, we use nonlinear econometric methods of a Smooth Transition Regression type to study those features, which provide and suggest further regulation and monitoring guidelines and institutional enforcement of rules.

Open Access Article

When It Counts—Econometric Identification of the Basic Factor Model Based on GLT Structures

by Sylvia Frühwirth-Schnatter, Darjus Hosszejni and Hedibert Freitas Lopes

Econometrics 2023, 11(4), 26; https://doi.org/10.3390/econometrics11040026 - 20 Nov 2023

Cited by 3

Abstract

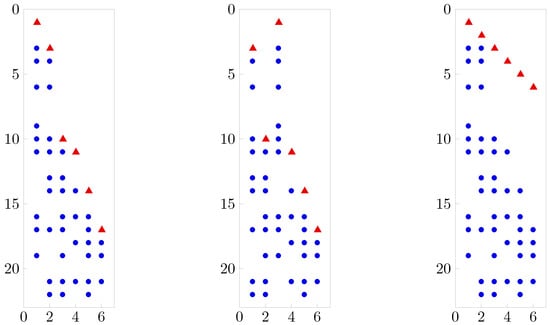

Despite the popularity of factor models with simple loading matrices, little attention has been given to formally address the identifiability of these models beyond standard rotation-based identification such as the positive lower triangular (PLT) constraint. To fill this gap, we review the advantages

[...] Read more.

Despite the popularity of factor models with simple loading matrices, little attention has been given to formally address the identifiability of these models beyond standard rotation-based identification such as the positive lower triangular (PLT) constraint. To fill this gap, we review the advantages of variance identification in simple factor analysis and introduce the generalized lower triangular (GLT) structures. We show that the GLT assumption is an improvement over PLT without compromise: GLT is also unique but, unlike PLT, a non-restrictive assumption. Furthermore, we provide a simple counting rule for variance identification under GLT structures, and we demonstrate that within this model class, the unknown number of common factors can be recovered in an exploratory factor analysis. Our methodology is illustrated for simulated data in the context of post-processing posterior draws in sparse Bayesian factor analysis.

(This article belongs to the Special Issue High-Dimensional Time Series in Macroeconomics and Finance)

Open Access Article

On the Proper Computation of the Hausman Test Statistic in Standard Linear Panel Data Models: Some Clarifications and New Results

by Julie Le Gallo and Marc-Alexandre Sénégas

Econometrics 2023, 11(4), 25; https://doi.org/10.3390/econometrics11040025 - 08 Nov 2023

Abstract

►▼

Show Figures

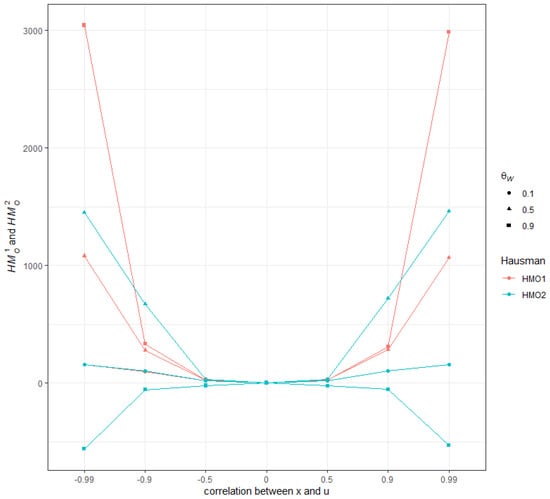

We provide new analytical results for the implementation of the Hausman specification test statistic in a standard panel data model, comparing the version based on the estimators computed from the untransformed random effects model specification under Feasible Generalized Least Squares and the one

[...] Read more.

We provide new analytical results for the implementation of the Hausman specification test statistic in a standard panel data model, comparing the version based on the estimators computed from the untransformed random effects model specification under Feasible Generalized Least Squares and the one computed from the quasi-demeaned model estimated by Ordinary Least Squares. We show that the quasi-demeaned model cannot provide a reliable magnitude when implementing the Hausman test in a finite sample setting, although it is the most common approach used to produce the test statistic in econometric software. The difference between the Hausman statistics computed under the two methods can be substantial and even lead to opposite conclusions for the test of orthogonality between the regressors and the individual-specific effects. Furthermore, this difference remains important even with large cross-sectional dimensions as it mainly depends on the within-between structure of the regressors and on the presence of a significant correlation between the individual effects and the covariates in the data. We propose to supplement the test outcomes that are provided in the main econometric software packages with some metrics to address the issue at hand.

Open Access Article

Dirichlet Process Log Skew-Normal Mixture with a Missing-at-Random-Covariate in Insurance Claim Analysis

by Minkun Kim, David Lindberg, Martin Crane and Marija Bezbradica

Econometrics 2023, 11(4), 24; https://doi.org/10.3390/econometrics11040024 - 12 Oct 2023

Abstract

►▼

Show Figures

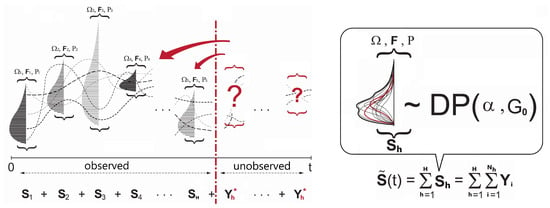

In actuarial practice, the modeling of total losses tied to a certain policy is a nontrivial task due to complex distributional features. In the recent literature, the application of the Dirichlet process mixture for insurance loss has been proposed to eliminate the risk

[...] Read more.

In actuarial practice, the modeling of total losses tied to a certain policy is a nontrivial task due to complex distributional features. In the recent literature, the application of the Dirichlet process mixture for insurance loss has been proposed to eliminate the risk of model misspecification biases. However, the effect of covariates as well as missing covariates in the modeling framework is rarely studied. In this article, we propose novel connections among a covariate-dependent Dirichlet process mixture, log-normal convolution, and missing covariate imputation. As a generative approach, our framework models the joint of outcome and covariates, which allows us to impute missing covariates under the assumption of missingness at random. The performance is assessed by applying our model to several insurance datasets of varying size and data missingness from the literature, and the empirical results demonstrate the benefit of our model compared with the existing actuarial models, such as the Tweedie-based generalized linear model, generalized additive model, or multivariate adaptive regression spline.

Open Access Article

A New Matrix Statistic for the Hausman Endogeneity Test under Heteroskedasticity

by Alecos Papadopoulos

Econometrics 2023, 11(4), 23; https://doi.org/10.3390/econometrics11040023 - 10 Oct 2023

Abstract

We derive a new matrix statistic for the Hausman test for endogeneity in cross-sectional Instrumental Variables estimation, that incorporates heteroskedasticity in a natural way and does not use a generalized inverse. A Monte Carlo study examines the performance of the statistic for different heteroskedasticity-robust variance estimators and different skedastic situations. We find that the test statistic performs well as regards empirical size in almost all cases; however, as regards empirical power, how one corrects for heteroskedasticity matters. We also compare its performance with that of the Wald statistic from the augmented regression setup that is often used for the endogeneity test, and we find that the choice between them may depend on the desired significance level of the test.

Open Access Article

Detecting Pump-and-Dumps with Crypto-Assets: Dealing with Imbalanced Datasets and Insiders’ Anticipated Purchases

by Dean Fantazzini and Yufeng Xiao

Econometrics 2023, 11(3), 22; https://doi.org/10.3390/econometrics11030022 - 30 Aug 2023

Abstract

►▼

Show Figures

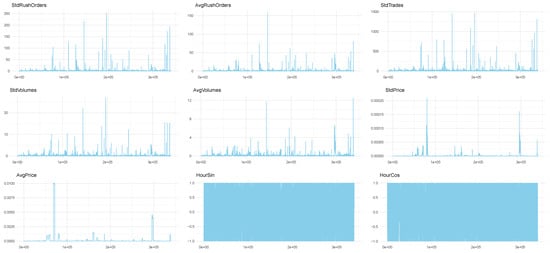

Detecting pump-and-dump schemes involving cryptoassets with high-frequency data is challenging due to imbalanced datasets and the early occurrence of unusual trading volumes. To address these issues, we propose constructing synthetic balanced datasets using resampling methods and flagging a pump-and-dump from the moment of

[...] Read more.

Detecting pump-and-dump schemes involving cryptoassets with high-frequency data is challenging due to imbalanced datasets and the early occurrence of unusual trading volumes. To address these issues, we propose constructing synthetic balanced datasets using resampling methods and flagging a pump-and-dump from the moment of public announcement up to 60 min beforehand. We validated our proposals using data from Pumpolymp and the CryptoCurrency eXchange Trading Library to identify 351 pump signals relative to the Binance crypto exchange in 2021 and 2022. We found that the most effective approach was using the original imbalanced dataset with pump-and-dumps flagged 60 min in advance, together with a random forest model with data segmented into 30-s chunks and regressors computed with a moving window of 1 h. Our analysis revealed that a better balance between sensitivity and specificity could be achieved by simply selecting an appropriate probability threshold, such as setting the threshold close to the observed prevalence in the original dataset. Resampling methods were useful in some cases, but threshold-independent measures were not affected. Moreover, detecting pump-and-dumps in real-time involves high-dimensional data, and the use of resampling methods to build synthetic datasets can be time-consuming, making them less practical.
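The threshold idea mentioned in this abstract, setting the classification cut-off near the observed prevalence of the rare class instead of resampling, can be sketched as follows. This is only an illustration under stated assumptions: the helper `sens_spec`, the toy probabilities, and the toy labels are hypothetical and are not taken from the paper or its data.

```python
def sens_spec(probs, labels, threshold):
    """Return (sensitivity, specificity) for predictions made at a given cut-off."""
    preds = [1 if p >= threshold else 0 for p in probs]
    true_pos = sum(1 for y, yhat in zip(labels, preds) if y == 1 and yhat == 1)
    true_neg = sum(1 for y, yhat in zip(labels, preds) if y == 0 and yhat == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    return true_pos / positives, true_neg / negatives


# Hypothetical imbalanced sample: one positive case out of ten observations.
labels = [0] * 9 + [1]
probs = [0.02, 0.05, 0.03, 0.08, 0.12, 0.04, 0.06, 0.01, 0.07, 0.30]

# Observed prevalence of the positive class, used as the alternative cut-off.
prevalence = sum(labels) / len(labels)

default_result = sens_spec(probs, labels, 0.5)            # conventional 0.5 cut-off
prevalence_result = sens_spec(probs, labels, prevalence)  # cut-off at the prevalence
```

In this toy sample the 0.5 cut-off misses the single positive case, while the prevalence-based cut-off detects it at the cost of one false alarm, which is the kind of sensitivity and specificity trade-off the abstract refers to.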

Open Access Article

Competition–Innovation Nexus: Product vs. Process, Does It Matter?

by Emil Palikot

Econometrics 2023, 11(3), 21; https://doi.org/10.3390/econometrics11030021 - 25 Aug 2023

Abstract

I study the relationship between competition and innovation, focusing on the distinction between product and process innovations. By considering product innovation, I expand upon earlier research on the topic of the relationship between competition and innovation, which focused on process innovations. New products allow firms to differentiate themselves from one another. I demonstrate that the competition level that creates the most innovation incentive is higher for process innovation than product innovation. I also provide empirical evidence that supports these results. Using the community innovation survey, I first show that an inverted U-shape characterizes the relationship between competition and both process and product innovations. The optimal competition level for promoting innovation is higher for process innovation.

Open Access Article

Locationally Varying Production Technology and Productivity: The Case of Norwegian Farming

by Subal C. Kumbhakar, Jingfang Zhang and Gudbrand Lien

Econometrics 2023, 11(3), 20; https://doi.org/10.3390/econometrics11030020 - 18 Aug 2023

Abstract

►▼

Show Figures

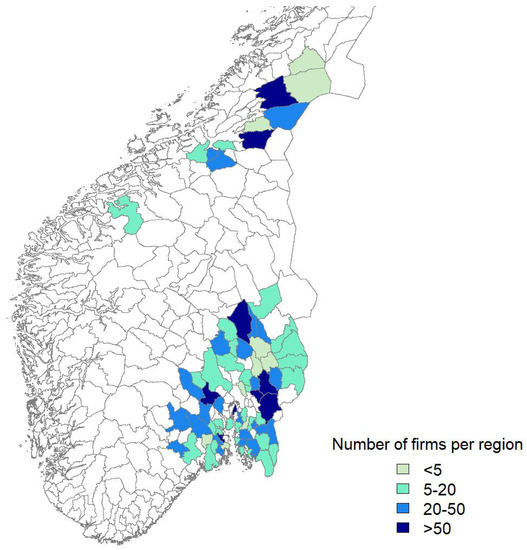

In this study, we leverage geographical coordinates and firm-level panel data to uncover variations in production across different locations. Our approach involves using a semiparametric proxy variable regression estimator, which allows us to define and estimate a customized production function for each firm

[...] Read more.

In this study, we leverage geographical coordinates and firm-level panel data to uncover variations in production across different locations. Our approach involves using a semiparametric proxy variable regression estimator, which allows us to define and estimate a customized production function for each firm and its corresponding location. By employing kernel methods, we estimate the nonparametric functions that determine the model’s parameters based on latitude and longitude. Furthermore, our model incorporates productivity components that consider various factors that influence production. Unlike spatially autoregressive-type production functions that assume a uniform technology across all locations, our approach estimates technology and productivity at both the firm and location levels, taking into account their specific characteristics. To handle endogenous regressors, we incorporate a proxy variable identification technique, distinguishing our method from geographically weighted semiparametric regressions. To investigate the heterogeneity in production technology and productivity among Norwegian grain farmers, we apply our model to a sample of farms using panel data spanning from 2001 to 2020. Through this analysis, we provide empirical evidence of regional variations in both technology and productivity among Norwegian grain farmers. Finally, we discuss the suitability of our approach for addressing the heterogeneity in this industry.

Open Access Article

Tracking ‘Pure’ Systematic Risk with Realized Betas for Bitcoin and Ethereum

by Bilel Sanhaji and Julien Chevallier

Econometrics 2023, 11(3), 19; https://doi.org/10.3390/econometrics11030019 - 10 Aug 2023

Cited by 1

Abstract

Using the capital asset pricing model, this article critically assesses the relative importance of computing ‘realized’ betas from high-frequency returns for Bitcoin and Ethereum—the two major cryptocurrencies—against their classic counterparts using the 1-day and 5-day return-based betas. The sample includes intraday data from 15 May 2018 until 17 January 2023. The microstructure noise is present until 4 min in the BTC and ETH high-frequency data. Therefore, we opt for a conservative choice with a 60 min sampling frequency. Considering 250 trading days as a rolling-window size, we obtain rolling betas < 1 for Bitcoin and Ethereum with respect to the CRIX market index, which could enhance portfolio diversification (at the expense of maximizing returns). We flag the minimal tracking errors at the hourly and daily frequencies. The dispersion of rolling betas is higher for the weekly frequency and is concentrated towards values of

Open Access Article

Estimation of Realized Asymmetric Stochastic Volatility Models Using Kalman Filter

by Manabu Asai

Econometrics 2023, 11(3), 18; https://doi.org/10.3390/econometrics11030018 - 31 Jul 2023

Abstract

Despite the growing interest in realized stochastic volatility models, their estimation techniques, such as simulated maximum likelihood (SML), are computationally intensive. Based on the realized volatility equation, this study demonstrates that, in a finite sample, the quasi-maximum likelihood estimator based on the Kalman filter is competitive with the two-step SML estimator, which is less efficient than the SML estimator. Regarding empirical results for the S&P 500 index, the quasi-likelihood ratio tests favored the two-factor realized asymmetric stochastic volatility model with the standardized t distribution among alternative specifications, and an analysis on out-of-sample forecasts prefers the realized stochastic volatility models, rejecting the model without the realized volatility measure. Furthermore, the forecasts of alternative RSV models are statistically equivalent for the data covering the global financial crisis.

Topical Collection in Econometrics

Econometric Analysis of Climate Change

Collection Editors: Claudio Morana, J. Isaac Miller