Journal Description

Computer Sciences & Mathematics Forum

Computer Sciences & Mathematics Forum is an open access journal dedicated to publishing findings resulting from academic conferences, workshops, and similar events in the area of computer science and mathematics. Each conference proceeding can be individually indexed, is citable via a digital object identifier (DOI), and is freely available under an open access license. The conference organizers and proceedings editors are responsible for managing the peer-review process and selecting papers for conference proceedings.

Latest Articles

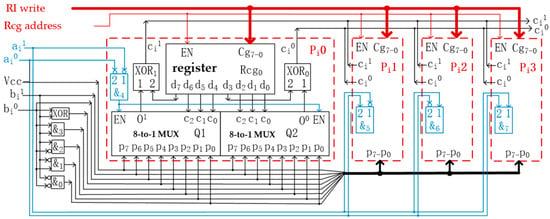

Using Reconfigurable Multi-Valued Logic Operators to Build a New Encryption Technology

Comput. Sci. Math. Forum 2023, 8(1), 99; https://doi.org/10.3390/cmsf2023008099 - 10 Apr 2024

Abstract


Current encryption technologies mostly rely on complex algorithms or difficult mathematical problems to improve security. As a result, it is difficult for them to achieve both high security and high efficiency, two properties that users want. To address this dilemma, we built a new encryption technology, called configurable encryption technology (CET), based on the typical structure of the reconfigurable quaternary logic operator (RQLO), which was invented in 2018. We designed the CET as a block cipher for symmetric encryption, using four 32-quit RQLO typical structures as the encryptor, the decryptor, and two key derivation operators. Taking advantage of the reconfigurability of the RQLO typical structure, the CET can automatically reconfigure the keys and symbol substitution rules of the encryptor and decryptor after each encryption operation. We found that a chip containing about 70,000 transistors and 500 MB of nonvolatile memory could provide all the CET devices and generalized keys a user would need over a lifetime, thereby implementing a practical one-time pad encryption technology. We also developed a strategy that addresses the current key distribution problem using prestored generalized key source data and on-site appointment codes. The CET is expected to provide a theoretical basis and core technology for using the RQLO to build a new cryptographic system with high security, fast encryption/decryption, and low manufacturing cost.
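The abstract describes the CET at the level of RQLO hardware, which is not modeled here. As a minimal sketch of the one-time pad property it aims for, assuming a pool of random quaternary digits ("quits") pre-shared by both parties, the Python below combines each 32-quit block with fresh key digits, so the per-digit substitution x -> (x + k) mod 4 changes with every block and no key material is ever reused. `KeyPool`, `encrypt_block`, `decrypt_block`, and the byte-to-quit encoding are hypothetical names and choices, not the paper's design.

```python
import secrets
from typing import List

BLOCK_QUITS = 32  # quaternary digits per block, echoing the 32-quit structures in the abstract


class KeyPool:
    """Hypothetical pre-shared store of key quits; every digit is handed out exactly once."""

    def __init__(self, digits: List[int]) -> None:
        if any(d not in (0, 1, 2, 3) for d in digits):
            raise ValueError("key material must consist of quaternary digits 0..3")
        self._digits = list(digits)
        self._pos = 0

    def take(self, n: int) -> List[int]:
        if self._pos + n > len(self._digits):
            raise RuntimeError("key material exhausted; refusing to reuse a pad")
        chunk = self._digits[self._pos:self._pos + n]
        self._pos += n
        return chunk


def random_key_material(n: int) -> List[int]:
    # Key digits must come from a cryptographically secure source, hence `secrets`.
    return [secrets.randbelow(4) for _ in range(n)]


def bytes_to_quits(data: bytes) -> List[int]:
    # Each byte becomes four 2-bit digits, most significant pair first.
    return [(b >> shift) & 0b11 for b in data for shift in (6, 4, 2, 0)]


def quits_to_bytes(quits: List[int]) -> bytes:
    out = bytearray()
    for i in range(0, len(quits), 4):
        a, b, c, d = quits[i:i + 4]
        out.append((a << 6) | (b << 4) | (c << 2) | d)
    return bytes(out)


def encrypt_block(block: List[int], pool: KeyPool) -> List[int]:
    # Fresh key digits for every block, so the per-digit substitution
    # x -> (x + k) mod 4 is reconfigured after each encryption.
    key = pool.take(len(block))
    return [(m + k) % 4 for m, k in zip(block, key)]


def decrypt_block(block: List[int], pool: KeyPool) -> List[int]:
    key = pool.take(len(block))
    return [(c - k) % 4 for c, k in zip(block, key)]
```

Sender and receiver each build a `KeyPool` from the same pre-shared digits and process blocks in the same order. Because `take` never rewinds, an attempt to reuse a pad fails loudly instead of silently weakening the scheme.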


Open Access Proceeding Paper

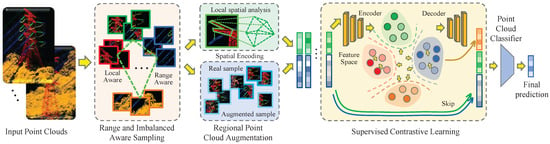

iBALR3D: imBalanced-Aware Long-Range 3D Semantic Segmentation

by

Keying Zhang, Ruirui Cai, Xinqiao Wu, Jiguang Zhao and Ping Qin

Comput. Sci. Math. Forum 2024, 9(1), 6; https://doi.org/10.3390/cmsf2024009006 - 14 Mar 2024

Abstract


Three-dimensional semantic segmentation is crucial for understanding the structure and environment of transmission lines. This understanding underpins a variety of applications, such as automatic assessment of the risk of line tripping caused by wildfires, wind, and thunder. However, the performance of current 3D point cloud segmentation methods tends to degrade on imbalanced data, which harms the overall segmentation results. In this paper, we propose an imBalanced-Aware Long-Range 3D Semantic Segmentation framework (iBALR3D) specifically designed for large-scale transmission line segmentation. To address the unsatisfactory performance on categories with few points, we first propose an Enhanced Imbalanced Contrastive Learning module that improves feature discrimination between points across sampling regions by contrasting their representations with the help of data augmentation. We also design a structural Adaptive Spatial Encoder to capture the distinguishing characteristics of different components. Additionally, we employ a sampling strategy that makes the model concentrate more on regions of categories with few points, which further improves its robustness to long-range scenes and severe data imbalance. Finally, we introduce a large-scale 3D point cloud dataset (500KV3D) captured from high-voltage long-range transmission lines and evaluate iBALR3D on it. Extensive experiments demonstrate the effectiveness and superiority of our approach.
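The abstract does not specify how the sampling strategy favors rare categories. One common way to do this, shown here as a hedged Python sketch rather than the iBALR3D method, is to draw training region centers with probability inversely proportional to the frequency of each point's class, so every class receives the same total sampling weight. The function names are hypothetical.

```python
import random
from collections import Counter
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def inverse_frequency_weights(labels: Sequence[int]) -> List[float]:
    """Per-point weights such that every class receives the same total weight (summing to 1)."""
    counts = Counter(labels)
    num_classes = len(counts)
    return [1.0 / (num_classes * counts[y]) for y in labels]


def sample_region_centers(points: Sequence[T], labels: Sequence[int], k: int,
                          rng: random.Random) -> List[T]:
    """Draw k region centers with replacement, favoring points of rare classes."""
    weights = inverse_frequency_weights(labels)
    return rng.choices(points, weights=weights, k=k)
```

With labels `[0, 0, 0, 1]`, the single class-1 point gets weight 0.5 and each class-0 point gets 1/6, so both classes are drawn about equally often even though class 1 has a third as many points.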


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))


Open Access Proceeding Paper

The Exploration of High Quality Education in Scientific and Technological Innovation Based on Artificial Intelligence

by

Xiaoli Yang and Songbai Wang

Comput. Sci. Math. Forum 2023, 8(1), 98; https://doi.org/10.3390/cmsf2023008098 - 26 Feb 2024

Abstract


This paper explains that offering artificial intelligence courses can clearly enhance students' interest in high technology, boost their confidence in learning, and promote their overall development. It addresses three aspects: the significance of artificial intelligence education for students, the current confusion surrounding artificial intelligence teaching at this stage, especially in rural middle schools, and related suggestions.


Open Access Proceeding Paper

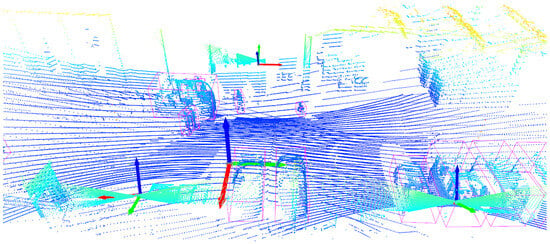

Exploring 3D Object Detection for Autonomous Factory Driving: Advanced Research on Handling Limited Annotations with Ground Truth Sampling Augmentation

by

Matthias Reuse, Karl Amende, Martin Simon and Bernhard Sick

Comput. Sci. Math. Forum 2024, 9(1), 5; https://doi.org/10.3390/cmsf2024009005 - 18 Feb 2024

Abstract


Autonomously driving vehicles in car factories and parking spaces can represent a competitive advantage in the logistics industry. However, real-world application is challenging in many ways. First, there are no publicly available datasets for this specific task. We therefore equipped two industrial production sites with up to 11 LiDAR sensors to collect and annotate our own data for infrastructural 3D object detection. These data form the basis for extensive experiments. Because the amount of labeled data is still limited, this work centers on the commonly used ground truth sampling augmentation. We explore several variations of this augmentation method and find that, in our case, the most commonly used variant is not necessarily the best. We show that an easy-to-create polygon can noticeably improve the detection results in this application scenario. With these augmentation methods, it is even possible to achieve moderate detection results using only empty frames without any objects and a database containing only a few labeled objects.
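Ground truth sampling, as commonly implemented in LiDAR detection pipelines, copies labeled objects (box plus points) from a database into a training frame and rejects placements that collide with boxes already present. The Python sketch below shows that basic loop under stated simplifications: boxes are axis-aligned in bird's-eye view (production code uses rotated 3D boxes), background points inside a pasted box are dropped, and none of the names come from the paper. Starting from an empty frame, every object in the result comes from the database, which mirrors the empty-frame setting in the abstract.

```python
import random
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class Box:
    """Axis-aligned bird's-eye-view box (a simplification of rotated 3D boxes)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str

    def overlaps(self, other: "Box") -> bool:
        return not (self.x_max <= other.x_min or other.x_max <= self.x_min
                    or self.y_max <= other.y_min or other.y_max <= self.y_min)

    def contains(self, p: Point) -> bool:
        return self.x_min <= p[0] <= self.x_max and self.y_min <= p[1] <= self.y_max


@dataclass
class DatabaseObject:
    box: Box
    points: List[Point] = field(default_factory=list)


def gt_sampling(scene_points: Sequence[Point], scene_boxes: Sequence[Box],
                database: Sequence[DatabaseObject], num_to_paste: int,
                rng: random.Random) -> Tuple[List[Point], List[Box]]:
    points = list(scene_points)
    boxes = list(scene_boxes)
    k = min(num_to_paste, len(database))
    for obj in rng.sample(list(database), k):
        if any(obj.box.overlaps(b) for b in boxes):
            continue  # collision test: skip objects that would intersect existing boxes
        # Drop background points inside the pasted box, then add the object's own points.
        points = [p for p in points if not obj.box.contains(p)]
        points.extend(obj.points)
        boxes.append(obj.box)
    return points, boxes
```

Variants of the method mostly differ in where candidates may be placed and how collisions are tested; a placement polygon like the one the abstract mentions would slot in as an extra acceptance check next to `overlaps`.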


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))


Open Access Proceeding Paper

Small Dataset, Big Gains: Enhancing Reinforcement Learning by Offline Pre-Training with Model-Based Augmentation

by

Girolamo Macaluso, Alessandro Sestini and Andrew D. Bagdanov

Comput. Sci. Math. Forum 2024, 9(1), 4; https://doi.org/10.3390/cmsf2024009004 - 18 Feb 2024

Abstract

Offline reinforcement learning leverages pre-collected datasets of transitions to train policies. It can serve as an effective initialization for online algorithms, enhancing sample efficiency and speeding up convergence. However, when such datasets are limited in size and quality, offline pre-training can produce sub-optimal

[...] Read more.

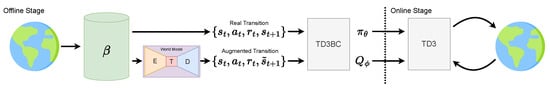

Offline reinforcement learning leverages pre-collected datasets of transitions to train policies. It can serve as an effective initialization for online algorithms, improving sample efficiency and speeding up convergence. However, when such datasets are limited in size and quality, offline pre-training can produce sub-optimal policies and degrade online reinforcement learning performance. In this paper, we propose a model-based data augmentation strategy to maximize the benefits of offline reinforcement learning pre-training and reduce the amount of data it needs to be effective. Our approach uses a world model of the environment, trained on the offline dataset, to augment states during offline pre-training. We evaluate our approach on a variety of MuJoCo robotic tasks, and our results show that it can jumpstart online fine-tuning and substantially reduce the required number of environment interactions, in some cases by an order of magnitude.
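The abstract does not specify the world model or how states are augmented. As a hedged illustration of the general idea only, the Python sketch below fits a deliberately tiny one-dimensional dynamics model, next_state - state = k * action, to an offline dataset by least squares, then uses it to label perturbed copies of dataset states with model-predicted next states. The linear model, the Gaussian state noise, the omission of rewards, and all names are assumptions; a world model for MuJoCo tasks would be far richer.

```python
import random
from typing import List, Sequence, Tuple

Transition = Tuple[float, float, float]  # (state, action, next_state), all scalar here


def fit_world_model(data: Sequence[Transition]) -> float:
    """Least-squares fit of next_state - state = k * action; returns k."""
    num = sum(a * (s_next - s) for s, a, s_next in data)
    den = sum(a * a for _, a, _ in data)
    if den == 0:
        raise ValueError("need at least one non-zero action to fit the model")
    return num / den


def augment(data: Sequence[Transition], k: float, copies_per_transition: int,
            noise_std: float, rng: random.Random) -> List[Transition]:
    """Create synthetic transitions from perturbed states, labeled by the learned model."""
    synthetic: List[Transition] = []
    for s, a, _ in data:
        for _ in range(copies_per_transition):
            s_aug = s + rng.gauss(0.0, noise_std)
            synthetic.append((s_aug, a, s_aug + k * a))
    return synthetic
```

In a full pipeline, the synthetic transitions (with rewards from a learned or known reward model) would be mixed with the real ones during offline pre-training; the sketch only shows the model-labeling step.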


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))


Open Access Proceeding Paper

Study on the Application of Virtual Reality Technology in Cross-Border Higher Education

by

Yanfang Hou

Comput. Sci. Math. Forum 2023, 8(1), 97; https://doi.org/10.3390/cmsf2023008097 - 08 Feb 2024

Abstract


Through an analysis of the development status and characteristics of virtual reality technology and of cross-border higher education, this paper summarizes the problems facing cross-border higher education and sets out the significance of, and lessons from, applying virtual reality technology to cross-border higher education in the new era to solve its practical problems. It also points out that the new model of joint online and offline development created by integrating virtual reality technology with cross-border higher education will have an important impact on accelerating the opening up of Chinese education and on improving the quality and efficiency of cross-border higher education.


Open Access Proceeding Paper

Viewpoints on the Fundamentals of Information Science

by

Hailong Ji

Comput. Sci. Math. Forum 2023, 8(1), 96; https://doi.org/10.3390/cmsf2023008096 - 08 Feb 2024

Abstract


In this paper, the author starts with a critique of the concept of information advocated by Wiener and provides new definitions for a series of fundamental concepts in information science. Furthermore, the paper presents a fresh interpretation of several fundamental issues in information science, thereby establishing a distinct and innovative foundation for the field.


Open Access Proceeding Paper

The Certainty, Influence, and Multi-Dimensional Defense of Digital Socialist Ideology

by

Jian Zheng, Yuting Xie and Yaqi Ni

Comput. Sci. Math. Forum 2023, 8(1), 95; https://doi.org/10.3390/cmsf2023008095 - 07 Feb 2024

Abstract


With the development of modern network technology, human beings have constructed a development model of digital society, and human social practice has acquired a distinctly digital character. Digital society determines the existence and development of a digital socialist ideology. At the same time, digital socialist ideology also promotes the development of the Chinese path to modernization in the new era. In the complex era of digital socialization, it is of great practical significance to elaborate on the determinacy of digital socialist ideology, to analyze the areas that bear on safeguarding digital socialist ideological security, and to explore ways of safeguarding that security from multiple perspectives.


Open Access Proceeding Paper

Semi-Supervised Implicit Augmentation for Data-Scarce VQA

by

Bhargav Dodla, Kartik Hegde and A. N. Rajagopalan

Comput. Sci. Math. Forum 2024, 9(1), 3; https://doi.org/10.3390/cmsf2024009003 - 07 Feb 2024

Abstract

Vision-language models (VLMs) have demonstrated increasing potency in solving complex vision-language tasks in the recent past. Visual question answering (VQA) is one of the primary downstream tasks for assessing the capability of VLMs, as it helps in gauging the multimodal understanding of a

[...] Read more.

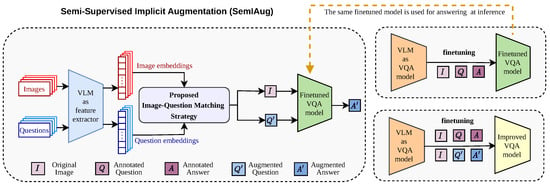

Vision-language models (VLMs) have recently shown increasing power in solving complex vision-language tasks. Visual question answering (VQA) is one of the primary downstream tasks for assessing the capability of VLMs, as it gauges a VLM's multimodal understanding when answering open-ended questions. The vast contextual information that VLMs learn during pretraining can be utilised effectively to finetune the VQA model for specific datasets. In particular, special types of VQA datasets, such as OK-VQA, A-OKVQA (outside knowledge-based), and ArtVQA (domain-specific), have relatively few images and corresponding question-answer annotations in the training set. Such datasets can be categorised as data-scarce, and the limited information available hinders effective learning by VLMs. We introduce SemIAug (Semi-Supervised Implicit Augmentation), a model- and dataset-agnostic strategy specially designed to address the challenges posed by limited data availability in domain-specific VQA datasets. SemIAug uses the annotated image-question data within the chosen dataset and augments it with meaningful new image-question associations. We show that SemIAug improves VQA performance on data-scarce datasets without requiring additional data or labels.


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))


Open Access Proceeding Paper

Frustratingly Easy Environment Discovery for Invariant Learning

by

Samira Zare and Hien Van Nguyen

Comput. Sci. Math. Forum 2024, 9(1), 2; https://doi.org/10.3390/cmsf2024009002 - 29 Jan 2024

Abstract

Standard training via empirical risk minimization may result in making predictions that overly rely on spurious correlations. This can degrade the generalization to out-of-distribution settings where these correlations no longer hold. Invariant learning has been shown to be a promising approach for identifying

[...] Read more.

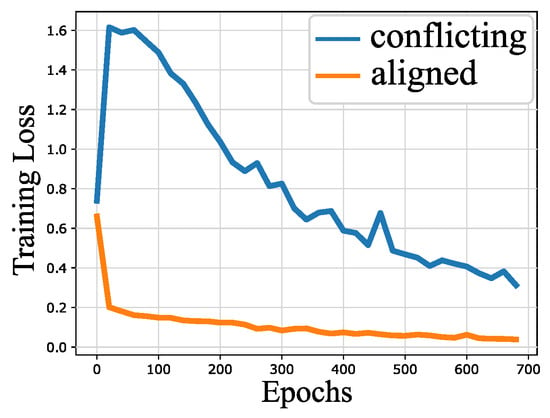

Standard training via empirical risk minimization may produce predictions that rely excessively on spurious correlations. This can degrade generalization to out-of-distribution settings where these correlations no longer hold. Invariant learning has been shown to be a promising approach for identifying predictors that ignore spurious correlations. However, an important limitation of this approach is that it assumes access to different “environments” (also known as domains), which may not always be available. This paper proposes a simple yet effective strategy for discovering maximally informative environments from a single dataset. Our frustratingly easy environment discovery (FEED) approach trains a biased reference classifier using a generalized cross-entropy loss function and partitions the dataset based on its performance. These environments can be used with various invariant learning algorithms, including Invariant Risk Minimization, Risk Extrapolation, and Group Distributionally Robust Optimization. The results indicate that FEED discovers environments with a higher group sufficiency gap than the state-of-the-art environment inference baseline and leads to improved test accuracy on the CMNIST, Waterbirds, and CelebA datasets.
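The generalized cross-entropy (GCE) loss named in the abstract has a standard closed form, L_q(p, y) = (1 - p_y^q) / q with 0 < q <= 1: it approaches ordinary cross-entropy as q -> 0 and equals 1 - p_y at q = 1. Its gradient is the cross-entropy gradient scaled by p_y^q, so hard examples are down-weighted and a classifier trained with it tends to latch onto easy, often spurious, cues. The Python sketch below computes the loss and then performs one simple partition, assuming the two environments are the samples the biased reference classifier gets right and the ones it gets wrong; the paper's exact partitioning rule may differ, and the default q = 0.7 and the function names are assumptions.

```python
from typing import List, Sequence, Tuple


def gce_loss(probs: Sequence[float], label: int, q: float = 0.7) -> float:
    """Generalized cross-entropy (1 - p_y**q) / q for one sample, with 0 < q <= 1."""
    if not 0.0 < q <= 1.0:
        raise ValueError("q must lie in (0, 1]")
    return (1.0 - probs[label] ** q) / q


def partition_by_reference(probs_list: Sequence[Sequence[float]],
                           labels: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Split sample indices by whether the biased reference classifier is correct.

    Assumption for illustration: environment A = correctly classified (bias-aligned),
    environment B = misclassified (bias-conflicting).
    """
    env_a: List[int] = []
    env_b: List[int] = []
    for i, (probs, y) in enumerate(zip(probs_list, labels)):
        pred = max(range(len(probs)), key=lambda c: probs[c])
        (env_a if pred == y else env_b).append(i)
    return env_a, env_b
```

The resulting index lists can then be handed to any invariant learning objective that expects environment labels, such as IRM or Group DRO.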


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))


Open Access Editorial

Statement of Peer Review

by

Kuan-Chuan Peng, Abhishek Aich and Ziyan Wu

Comput. Sci. Math. Forum 2024, 9(1), 1; https://doi.org/10.3390/cmsf2024009001 - 23 Jan 2024

Abstract

In submitting conference proceedings to the Computer Sciences & Mathematics Forum, the volume editors of the proceedings certify to the publisher that all papers published in this volume have been subjected to peer review administered by the volume editors [...]


(This article belongs to the Proceedings of The 2nd AAAI Workshop on Artificial Intelligence with Biased or Scarce Data (AIBSD))

Open Access Proceeding Paper

The Hermeneutics of Artificial Text

by

Rafal Maciag

Comput. Sci. Math. Forum 2023, 8(1), 94; https://doi.org/10.3390/cmsf2023008094 - 12 Jan 2024

Abstract


The spectacular achievements of so-called large language models (LLMs), a technical solution that has emerged within natural language processing (NLP), are a common experience these days. This applies in particular to the artificial text these models generate in various ways. Such text reaches a level of semantic perfection comparable, or even equal, to that of human writing. On the other hand, there is a long and extensive body of research on the role and meaning of text in human culture and society, with a very rich philosophical background gathered in the field of hermeneutics. The paper justifies the necessity of using the research background of hermeneutics to study artificial texts and proposes initial conclusions about these texts in this context. In this way, it formulates the foundations of a research area that can be called the hermeneutics of artificial text.


Open Access Proceeding Paper

Pretrained Language Models as Containers of the Discursive Knowledge

by

Rafal Maciag

Comput. Sci. Math. Forum 2023, 8(1), 93; https://doi.org/10.3390/cmsf2023008093 - 12 Jan 2024

Abstract

Discourses can be treated as instances of knowledge. The dynamic space in which the trajectories of these discourses are described can be regarded as a model of knowledge. Such a space is called a discursive space. Its scope is defined by a set

[...] Read more.

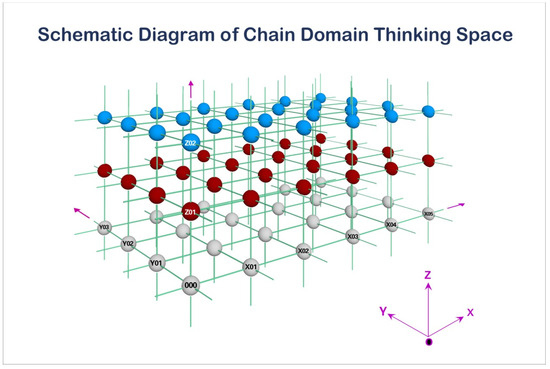

Discourses can be treated as instances of knowledge. The dynamic space in which the trajectories of these discourses are described can be regarded as a model of knowledge. Such a space is called a discursive space, and its scope is defined by a set of discourses. Constructing such a space is a serious problem; so far, the only solution has been to identify its dimensions through qualitative analysis of texts on the basis of the discourses that were identified. This paper proposes a solution that uses an extended variant of the embedding technique, which underlies neural language models (pre-trained language models and large language models) in the field of natural language processing (NLP). This technique makes it possible to create a semantic model of language in the form of a multidimensional space. The solution proposed in this article is to repeat the embedding technique at a higher level of abstraction, namely the discursive level. First, the discourses would be isolated from a prepared corpus of texts, preserving their order. Then, from these discourses, identified by names, a sequence of names would be created, forming a kind of supertext. A language model would be trained on this supertext, and this model would be a multidimensional space: a discursive space constructed for one moment in time. Repeating these steps over time would make it possible to construct the assumed dynamic space of discourses, i.e., the discursive space.
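The proposal trains a language model on a "supertext" of discourse names. As a lightweight stand-in for a neural embedding model (a substitution for illustration, not the paper's proposal), the Python sketch below builds count-based context vectors from such a sequence of names and compares them by cosine similarity, so that names occurring in similar discursive contexts end up close together. Running it on successive time slices would give one space per moment, in the spirit of the dynamic space described above. The function names and window size are assumptions.

```python
import math
from collections import defaultdict
from typing import Dict, Sequence


def context_vectors(supertext: Sequence[str], window: int = 1) -> Dict[str, Dict[str, int]]:
    """Count, for each discourse name, the names appearing within `window` positions."""
    vectors: Dict[str, Dict[str, int]] = defaultdict(lambda: defaultdict(int))
    for i, name in enumerate(supertext):
        lo, hi = max(0, i - window), min(len(supertext), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                vectors[name][supertext[j]] += 1
    return {name: dict(ctx) for name, ctx in vectors.items()}


def cosine(u: Dict[str, int], v: Dict[str, int]) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0
```

For the toy supertext `A B A B C`, names `A` and `C` both occur only next to `B`, so their cosine similarity is 1.0, while `A` and `B` share no context and score 0.0.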


Open Access Proceeding Paper

The Existence, Transcendence, and Evolution of the Subject—A Method Based on Subject Information

by

Zheng Wu

Comput. Sci. Math. Forum 2023, 8(1), 92; https://doi.org/10.3390/cmsf2023008092 - 08 Dec 2023

Abstract


Starting from the modern dilemma of the subject's existence, this paper transforms information philosophy into an ontological “subject information” and abstracts from it the basic elements of the virtual dimension and the real dimension. Then, through the alternating transformation of virtual-dimension information and real-dimension information, it explores the existence and evolution of subject information.


Open Access Proceeding Paper

How Digital Technology Can Reshape the Trust System of Engineering—Taking Beijing Daxing International Airport as an Example

by

Yiqi Wang and Dazhou Wang

Comput. Sci. Math. Forum 2023, 8(1), 91; https://doi.org/10.3390/cmsf2023008091 - 24 Oct 2023

Abstract


Digital technology has broken the traditional “individual–system” trust dichotomy and has brought a new “increment” of trust to society. Taking an engineering project as an example, the key to successful digital design, digital construction, and digital operation is that digital technology builds an inclusive trust system and coordination mechanism across the whole life cycle of the project. In this process, the integration of people, technology, and systems breaks the dimensional barrier between “man, machine and object” in the project, which not only goes beyond reliance on traditional individuals and systems but also reduces the cost of system operation and improves the efficiency of project construction.


Open Access Proceeding Paper

Systematism—The Evolution from Holistic Cognition to Systematic Understanding

by

Hongjian Yuan and Yaru Chen

Comput. Sci. Math. Forum 2023, 8(1), 90; https://doi.org/10.3390/cmsf2023008090 - 17 Oct 2023

Abstract


Starting from the ancient philosophical proposition that “the whole is greater than the sum of its parts”, this article examines how different holistic theories in different eras have responded to “1 + 1 > 2”. Any attempt to define systems or systematism, given the introduction of the concept of exchange alongside traditional holism, must ask how both can be sublimated into modern systems theory. How does systems philosophy gradually absorb and supersede traditional holism and reshape holism within the framework of systematism?


Open Access Proceeding Paper

Towards Ethical Engineering: Artificial Intelligence as an Ethical Governance Tool for Emerging Technologies

by

Dazhou Wang

Comput. Sci. Math. Forum 2023, 8(1), 76; https://doi.org/10.3390/cmsf2023008076 - 10 Oct 2023

Abstract


As a governance framework for emerging technologies, the responsible research and innovation (RRI) approach faces some fundamental conflicts, particularly between “inclusivity” and “agility”. We encounter similar difficulties when we try to apply RRI principles to the development of AI. It may therefore help to change the approach and treat AI not only as the object of ethical governance but also as an effective tool for it. This involves three main levels: first, using AI directly to solve general ethical problems; second, using AI to solve the ethical problems that AI itself raises; and third, using AI to upgrade the RRI framework. By acting at these three levels, we can promote the fusion of AI and technology ethics and move towards ethical engineering, thus pushing ethical governance to new heights. In traditional approaches to ethical governance, ethics is external, brought in by external actors, and the focus is on actors. In this new approach, however, ethics must be internalized in technology, with the focus not only on actors but also on technology itself. Its essence lies in the invention and creation of technological tools for ethical governance.


Open Access Proceeding Paper

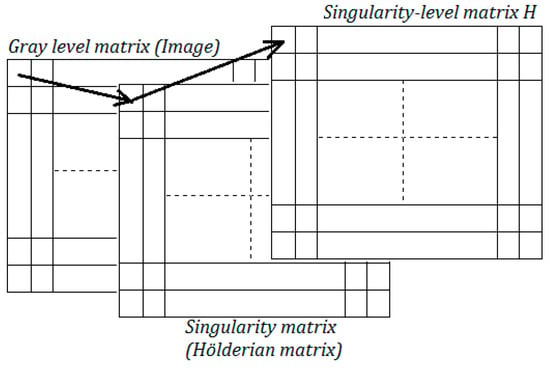

Contribution to the Characterization of the Kidney Ultrasound Image Using Singularity Levels

by

Mustapha Tahiri Alaoui and Redouan Korchiyne

Comput. Sci. Math. Forum 2023, 6(1), 12; https://doi.org/10.3390/cmsf2023006012 - 28 Sep 2023

Abstract


Efforts to improve diagnostic decision systems for the kidney have led to many methods for characterizing kidney texture in ultrasound images. Here, as a first main contribution, we propose a texture characterization method based on the singularity defined by the Hölder exponent, which is local multifractal information. The originality of our contribution lies in building a singularity-level matrix corresponding to different levels of regularity, from which we extract new texture features. As a second main contribution, we evaluate the potential of the proposed multifractal features to characterize textured ultrasound images of the kidney. Making the texture features more reproducible requires, first, a good choice of Choquet capacity for calculating the irregularities and, second, the selection of more representative regions of interest (ROIs) to analyze, obtained by carrying out an adapted virtual puncture in the kidney components. The results of supervised classification using three classes of images (young, healthy, and glomerulonephritis) are interesting and promising, with a classification accuracy of about 80%. This encourages further research to improve the results by overcoming the limitations and taking into account the recommendations made in this article.
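The pointwise Hölder exponent is commonly estimated as the slope of log μ(B_r(x)) against log r over a few window sizes, where μ is a Choquet capacity evaluated on a window B_r(x) around pixel x. The Python sketch below assumes the sum capacity (total gray level in a square window of side 2r + 1), radii 1 to 3, and equal-width quantization of the resulting α map into a fixed number of singularity levels. These choices are assumptions for illustration; the paper compares capacities, and its construction of the singularity-level matrix may differ.

```python
import math
from typing import List, Sequence

Image = List[List[float]]


def sum_capacity(img: Image, i: int, j: int, half: int) -> float:
    """Sum capacity: total intensity in the (2*half+1)-wide window around (i, j), clipped at borders."""
    h, w = len(img), len(img[0])
    return sum(img[y][x]
               for y in range(max(0, i - half), min(h, i + half + 1))
               for x in range(max(0, j - half), min(w, j + half + 1)))


def slope(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Ordinary least-squares slope of ys against xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def holder_exponent(img: Image, i: int, j: int, radii: Sequence[int] = (1, 2, 3)) -> float:
    """Estimate alpha(i, j) as the slope of log mu(window) versus log(window size)."""
    xs, ys = [], []
    for r in radii:
        mu = sum_capacity(img, i, j, r)
        if mu <= 0:
            raise ValueError("capacity must be positive; offset the image if needed")
        xs.append(math.log(2 * r + 1))
        ys.append(math.log(mu))
    return slope(xs, ys)


def holder_map(img: Image, radii: Sequence[int] = (1, 2, 3)) -> Image:
    """Hölder exponent estimate at every pixel."""
    return [[holder_exponent(img, i, j, radii) for j in range(len(img[0]))]
            for i in range(len(img))]


def singularity_levels(alpha_map: Image, n_levels: int) -> List[List[int]]:
    """Quantize alpha values into n_levels equal-width bins (level 0 = least regular)."""
    flat = [a for row in alpha_map for a in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0
    return [[min(int((a - lo) / span * n_levels), n_levels - 1) for a in row]
            for row in alpha_map]
```

For a uniform image, μ grows like (2r + 1)^2, so the estimate is 2 wherever the windows fit inside the image; irregular texture shows up as departures from that value, and texture features can then be computed on the integer level matrix.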


(This article belongs to the Proceedings of The 3rd International Day on Computer Science and Applied Mathematics)


Open Access Proceeding Paper

Research on the Uncertainty of Musical Information

by

Xiaolong Yang

Comput. Sci. Math. Forum 2023, 8(1), 89; https://doi.org/10.3390/cmsf2023008089 - 27 Sep 2023

Abstract


Against the background of the rapid development of human society today, this article approaches the concept of musical uncertainty through informatization. Drawing on the exploration of dialectics in philosophy, it further summarizes the informational nature, uncertainty, and essential attributes of music, combines these with the author's own understanding of these musical concepts, and sets out the author's research results on the uncertainty of music.


Open Access Proceeding Paper

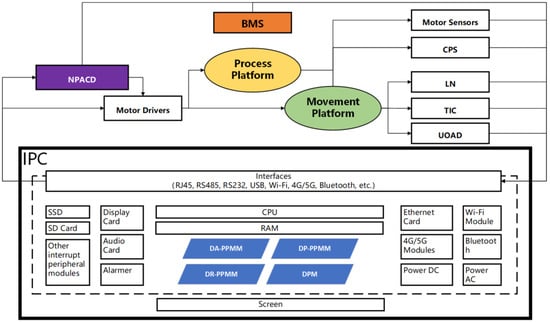

Architecture Design and Application of a Compound Robot Control System with Movement–Process Collaboration

by

Le Xiao, Qiang Li and Zhicheng Chen

Comput. Sci. Math. Forum 2023, 8(1), 59; https://doi.org/10.3390/cmsf2023008059 - 25 Sep 2023

Abstract


Movement–process collaborative compound robots are an important part of intelligent factory logistics systems, and they usually have two control systems, one on the movement platform and one on the process platform. Interaction between the two platforms often has to go through the central logistics execution system. This paper proposes a compound robot control system architecture that integrates movement control and process action control. Its hardware can be an industrial computer with movement- and process-related sensor interfaces, and its software uses multi-process management to handle internal collaboration and external data exchange. This architecture can greatly reduce the cost of compound robots and the scheduling load on the central logistics system server, simplify the development of logistics control programs, improve real-time collaboration between movement and process control, and provide strong support for the collaborative management and control of large numbers of logistics devices.
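As a hedged illustration of the local handshake such an integrated architecture enables, the Python sketch below runs a movement controller and a process controller on one machine and lets them coordinate through two message queues instead of a central logistics server. Threads stand in for the separate software processes the abstract describes, and the message names, station labels, and function names are hypothetical.

```python
import queue
import threading
from typing import List, Sequence


def movement_controller(stations: Sequence[str], to_process: "queue.Queue",
                        from_process: "queue.Queue", log: List[str]) -> None:
    """Drive to each station, hand over to the process side, and wait for completion."""
    for station in stations:
        log.append(f"move:{station}")
        to_process.put(("arrived", station))
        status, done_station = from_process.get(timeout=5)
        log.append(f"ack:{done_station}:{status}")
    to_process.put(("shutdown", None))


def process_controller(to_process: "queue.Queue", from_process: "queue.Queue",
                       log: List[str]) -> None:
    """Run the process action (e.g., loading) whenever the platform reports arrival."""
    while True:
        msg, station = to_process.get(timeout=5)
        if msg == "shutdown":
            break
        log.append(f"process:{station}")
        from_process.put(("done", station))


def run_mission(stations: Sequence[str]) -> List[str]:
    to_process: "queue.Queue" = queue.Queue()
    from_process: "queue.Queue" = queue.Queue()
    log: List[str] = []
    worker = threading.Thread(target=process_controller,
                              args=(to_process, from_process, log))
    worker.start()
    movement_controller(stations, to_process, from_process, log)
    worker.join()
    return log
```

Because each side blocks until the other replies, the log for `run_mission(["A", "B"])` is strictly ordered: move, process, acknowledge, once per station. Only mission-level results would need to be reported to the central system.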

