Journal Description

Computation

Computation is a peer-reviewed journal of computational science and engineering published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), CAPlus / SciFinder, Inspec, dblp, and other databases.

- Journal Rank: CiteScore - Q2 (Applied Mathematics)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 18 days after submission; acceptance to publication is undertaken in 4.4 days (median values for papers published in this journal in the second half of 2023).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

Impact Factor: 2.2 (2022); 5-Year Impact Factor: 2.2 (2022)

Latest Articles

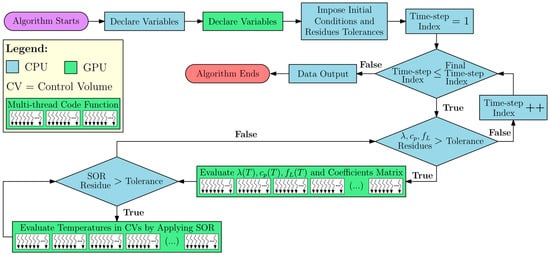

An Implementation of LASER Beam Welding Simulation on Graphics Processing Unit Using CUDA

Computation 2024, 12(4), 83; https://doi.org/10.3390/computation12040083 - 17 Apr 2024

Abstract


The maximum number of parallel threads in traditional CFD solutions is limited by the capacity of the Central Processing Unit (CPU), which is lower than that of a modern Graphics Processing Unit (GPU). In this context, the GPU allows several parallel threads to be processed simultaneously in double-precision floating-point arithmetic. This study evaluated the advantages and drawbacks of implementing LASER Beam Welding (LBW) simulations on the CUDA platform. The performance of the developed code was compared with that of three top-rated commercial codes executed on the CPU. The unsteady three-dimensional heat conduction Partial Differential Equation (PDE) was discretized in space and time using the Finite Volume Method (FVM). The Volumetric Thermal Capacitor (VTC) approach was employed to model melting and solidification. The GPU solutions were computed with an in-house code written in CUDA C, running on a Gigabyte Nvidia GeForce RTX™ 3090 video card and an MSI 4090 video card (both made in Hsinchu, Taiwan), each with 24 GB of memory. The commercial solutions were executed on an Intel® Core™ i9-12900KF CPU (made in Hillsboro, Oregon, United States of America) with a 3.6 GHz base clock and 16 cores. The results demonstrated that GPU and CPU processing achieve similar precision, but the GPU solution was significantly faster and more power-efficient, with speed-ups ranging from 75.6 to 1351.2 times relative to the CPU solutions. The in-house code also used memory more efficiently, requiring on average 3.86 times less RAM. Therefore, adopting parallelized algorithms run on GPUs can reduce CFD computational costs compared with traditional codes while maintaining high accuracy.
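To illustrate why an explicit FVM discretization of heat conduction suits GPUs, here is a minimal 1D sketch in Python/NumPy. It assumes constant diffusivity, insulated (zero-flux) ends and an optional volumetric source. It is not the authors' CUDA C code and omits the VTC melting-solidification model. Within one time step every cell update depends only on the previous step's values, so each cell could be assigned to its own GPU thread.

```python
import numpy as np

def explicit_fvm_heat_1d(T0, alpha, dx, dt, n_steps, source=None):
    """Explicit finite-volume solver for dT/dt = alpha * d2T/dx2 (+ source) in 1D.

    Each cell stores a cell-averaged temperature. Both domain ends are insulated
    (zero-flux faces), so without a source the total heat content is conserved.
    `source` is an optional volumetric heating term already divided by rho*c (K/s).
    """
    r = alpha * dt / dx**2
    if r > 0.5:
        # the explicit scheme is only stable for r <= 1/2
        raise ValueError(f"time step too large: r = {r:.3f} > 0.5")
    T = np.array(T0, dtype=float)  # copy, so the caller's array is untouched
    q = np.zeros_like(T) if source is None else np.asarray(source, dtype=float)
    for _ in range(n_steps):
        face_diff = np.diff(T)      # T[i+1] - T[i] across each interior face
        net = np.zeros_like(T)
        net[:-1] += face_diff       # cell i exchanges heat through its right face
        net[1:] -= face_diff        # cell i+1 exchanges the opposite amount through its left face
        # all cells are updated from the previous step only: one GPU thread per cell
        T = T + r * net + dt * q
    return T
```

Example with a hypothetical hot spot: `explicit_fvm_heat_1d(T0, alpha=1e-5, dx=1e-3, dt=0.04, n_steps=500)` gives r = 0.4 and spreads the initial peak while conserving its total heat.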


(This article belongs to the Special Issue 10th Anniversary of Computation—Computational Heat and Mass Transfer (ICCHMT 2023))


Open Access Article

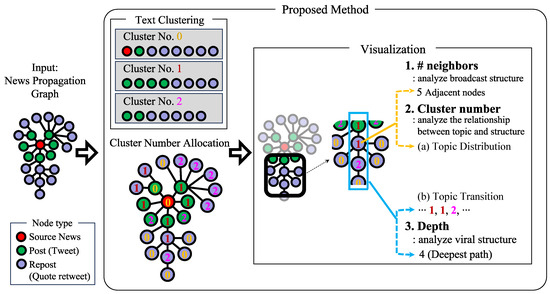

Graph-Based Interpretability for Fake News Detection through Topic- and Propagation-Aware Visualization

by

Kayato Soga, Soh Yoshida and Mitsuji Muneyasu

Computation 2024, 12(4), 82; https://doi.org/10.3390/computation12040082 - 15 Apr 2024

Abstract


Given the increasing spread of misinformation via social network services, this study addressed the critical challenge of detecting and explaining the spread of fake news. Early detection methods focused on content analysis, whereas recent approaches have exploited the distinctive propagation patterns of fake news by analyzing network graphs of news sharing. However, these accurate methods lack accountability and provide little insight into the reasoning behind their classifications. We aimed to fill this gap by elucidating the structural differences in the spread of fake and real news, with a focus on opinion consensus within these structures. We present a novel method that improves the interpretability of graph-based propagation detectors by visualizing article topics and propagation structures, using BERTopic for topic classification, and by analyzing the effect of topic agreement on propagation patterns. By applying this method to a real-world dataset and conducting a comprehensive case study, we not only demonstrated its effectiveness in identifying characteristic propagation paths but also proposed new metrics for evaluating the interpretability of detection methods. Our results provide valuable insights into the structural behavior and patterns of news propagation, contributing to the development of more transparent and explainable fake news detection systems.
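One simple way to make "topic agreement" along a propagation graph concrete is the share of sharing edges whose two endpoints carry the same topic label. The function below is a hypothetical illustration of such a measure, not the metric defined in the article. The topic labels are assumed to come from a topic model such as BERTopic, whose API is not used here.

```python
def topic_agreement(edges, topic_of):
    """Share of propagation edges whose two endpoints were assigned the same topic.

    edges    -- iterable of (parent_id, child_id) pairs from a propagation tree
    topic_of -- mapping node_id -> topic label (e.g. produced by a topic model)
    """
    edges = list(edges)
    if not edges:
        return 0.0
    agreeing = sum(1 for parent, child in edges if topic_of[parent] == topic_of[child])
    return agreeing / len(edges)
```

For example, a tree with edges (0, 1), (0, 2), (1, 3) and labels {0: 3, 1: 3, 2: 7, 3: 3} has an agreement of 2/3.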


(This article belongs to the Special Issue Computational Social Science and Complex Systems)


Open Access Article

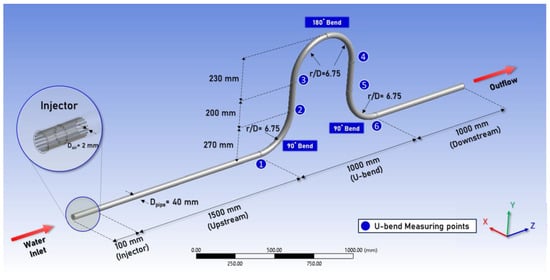

Air–Water Two-Phase Flow Dynamics Analysis in Complex U-Bend Systems through Numerical Modeling

by

Ergin Kükrer and Nurdil Eskin

Computation 2024, 12(4), 81; https://doi.org/10.3390/computation12040081 - 12 Apr 2024

Abstract


This study aims to provide insights into the intricate interactions between gas and liquid phases within flow components, which are pivotal in various industrial sectors such as nuclear reactors, oil and gas pipelines, and thermal management systems. Employing the Eulerian–Eulerian approach, our computational model incorporates interphase relations, including drag and non-drag forces, to analyze phase distribution and velocities within a complex U-bend system. The U-bend system comprises two horizontal-to-vertical bends and one vertical 180-degree elbow; its behavior is examined with respect to bend geometry and airflow rate, both of which significantly affect the multiphase flow dynamics. The study presents a detailed account of the numerical modeling techniques tailored to this complex geometry and discusses the results obtained, including detailed analyses of the local void fraction and the velocity of each phase. Experimental validation supports the reliability of the computational findings, with close agreement between computational and experimental results. Overall, the study underscores the efficacy of the Eulerian approach with interphase relations in capturing the complex behavior of multiphase flow in U-bend systems, offering valuable insights for hydraulic system design and optimization in industrial applications.
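Eulerian–Eulerian models close the interphase momentum exchange with drag correlations. The abstract does not name the correlation used. As an assumption, the sketch below uses the widely used Schiller–Naumann drag coefficient for spherical bubbles and evaluates the resulting drag force per unit volume on the gas phase in one dimension. Bubble diameter and fluid properties are inputs, not values from the study.

```python
def schiller_naumann_cd(re):
    """Schiller-Naumann drag coefficient for a sphere at particle Reynolds number re > 0."""
    if re <= 0.0:
        raise ValueError("Reynolds number must be positive")
    if re <= 1000.0:
        return 24.0 / re * (1.0 + 0.15 * re**0.687)
    return 0.44

def gas_drag_per_volume(alpha_g, rho_l, mu_l, d_b, u_g, u_l):
    """Drag force per unit volume (N/m^3) exerted by the liquid on dispersed bubbles (1D, signed).

    Positive when the liquid moves faster than the gas (u_l > u_g).
    """
    u_rel = u_l - u_g
    if u_rel == 0.0:
        return 0.0
    re = rho_l * abs(u_rel) * d_b / mu_l  # bubble Reynolds number based on slip velocity
    cd = schiller_naumann_cd(re)
    # F = (3/4) * Cd * alpha_g * rho_l * |u_rel| * u_rel / d_b
    return 0.75 * cd * alpha_g * rho_l * abs(u_rel) * u_rel / d_b
```

The two branches of the coefficient meet (to within about 0.5%) at Re = 1000, which is why the correlation is usually applied in this piecewise form.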


(This article belongs to the Special Issue 10th Anniversary of Computation—Computational Heat and Mass Transfer (ICCHMT 2023))


Open Access Article

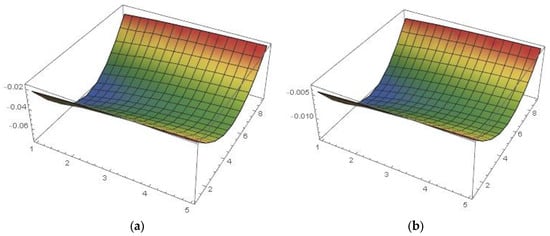

Application of an Extended Cubic B-Spline to Find the Numerical Solution of the Generalized Nonlinear Time-Fractional Klein–Gordon Equation in Mathematical Physics

by

Miguel Vivas-Cortez, M. J. Huntul, Maria Khalid, Madiha Shafiq, Muhammad Abbas and Muhammad Kashif Iqbal

Computation 2024, 12(4), 80; https://doi.org/10.3390/computation12040080 - 11 Apr 2024

Abstract


A B-spline function is a piecewise polynomial whose shape is governed by a set of control points to produce smooth curves. By adjusting these points, complicated shapes can be built and maintained. Any spline function of a given degree can be expressed as a linear combination of the B-spline basis functions of that degree. The flexibility, symmetry, and high-order accuracy of B-spline functions make them well suited to obtaining accurate numerical solutions. In this study, extended cubic B-spline (ECBS) functions are used to solve the generalized nonlinear time-fractional Klein–Gordon equation (TFKGE) numerically. The Caputo time-fractional derivative (CTFD) is approximated using standard finite difference techniques, and the space derivatives are discretized using ECBS functions. The stability and convergence of the numerical scheme are analyzed. The technique is tested on a variety of problems, and the approximate results are compared with those of existing computational schemes.
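The statement that a spline is a linear combination of B-spline basis functions can be illustrated with the standard uniform cubic B-spline. The sketch below assumes equally spaced knots and uses the ordinary cubic basis. The extended cubic B-spline used in the article adds a free parameter that is not modelled here.

```python
def cubic_bspline(t):
    """Uniform cubic B-spline centred at 0 with unit knot spacing (support [-2, 2])."""
    a = abs(t)
    if a < 1.0:
        return (4.0 - 6.0 * a**2 + 3.0 * a**3) / 6.0
    if a < 2.0:
        return (2.0 - a) ** 3 / 6.0
    return 0.0

def spline_value(coeffs, x, h=1.0, x0=0.0):
    """Evaluate s(x) = sum_j c_j * B((x - x_j) / h) with knots x_j = x0 + j * h."""
    return sum(c * cubic_bspline((x - (x0 + j * h)) / h) for j, c in enumerate(coeffs))
```

At a knot x_i only three basis functions are non-zero, so s(x_i) = (c_{i-1} + 4c_i + c_{i+1})/6. This tridiagonal relation is what makes cubic B-spline collocation lead to banded linear systems.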


(This article belongs to the Special Issue Advanced Numerical Methods for Solving Differential Equations with Applications in Science and Engineering)


Open Access Article

Efficient Numerical Solutions for Fuzzy Time Fractional Diffusion Equations Using Two Explicit Compact Finite Difference Methods

by

Belal Batiha

Computation 2024, 12(4), 79; https://doi.org/10.3390/computation12040079 - 11 Apr 2024

Abstract


This article considers an extension of classical fuzzy partial differential equations, known as fuzzy fractional partial differential equations, which can describe certain phenomena better than their classical counterparts. We focus on solving the fuzzy time-fractional diffusion equation of order 0 < α ≤ 1 using two explicit compact finite difference schemes: the compact forward time centered space (CFTCS) scheme and the compact Saulyev scheme. The time-fractional derivative is defined in the Caputo sense. The double-parametric form approach is used to transform the governing equation from an uncertain (fuzzy) form into a crisp form. A von Neumann stability analysis shows that the CFTCS scheme is conditionally stable, whereas the compact Saulyev scheme is unconditionally stable. A numerical example demonstrates the practicality of the proposed schemes.
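A common way to discretize the Caputo derivative of order 0 < α < 1 on a uniform time grid is the L1 formula. The sketch below implements that standard L1 approximation. Whether the article's compact schemes use exactly this formula is an assumption, and the fuzzy (double-parametric) part of the method is omitted.

```python
import math

def caputo_l1(u, dt, alpha):
    """L1 approximation of the Caputo derivative of order alpha (0 < alpha < 1)
    at the last time level t_n = n*dt, given samples u[0..n] on a uniform grid:

        D^alpha u(t_n) ~= dt**(-alpha) / Gamma(2 - alpha)
                          * sum_{k=0}^{n-1} b_k * (u[n-k] - u[n-k-1]),
        b_k = (k + 1)**(1 - alpha) - k**(1 - alpha)
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    n = len(u) - 1
    scale = dt ** (-alpha) / math.gamma(2.0 - alpha)
    total = 0.0
    for k in range(n):
        b_k = (k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha)
        total += b_k * (u[n - k] - u[n - k - 1])
    return scale * total
```

For u(t) = t the weights telescope and the formula is exact: it returns t_n^(1-α)/Γ(2-α), which makes a convenient sanity check.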


Open Access Review

The Study of Molecules and Processes in Solution: An Overview of Questions, Approaches and Applications

by

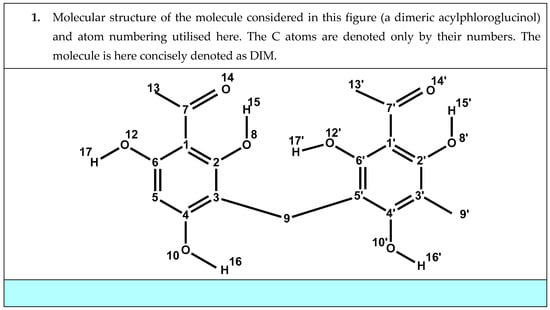

Neani Tshilande, Liliana Mammino and Mireille K. Bilonda

Computation 2024, 12(4), 78; https://doi.org/10.3390/computation12040078 - 09 Apr 2024

Abstract


Many industrial processes, several natural processes involving non-living matter, and all processes occurring within living organisms take place in solution. This means that the molecules playing active roles in these processes are present within another medium, called the solvent. The solute molecules are surrounded by solvent molecules and interact with them. Understanding the nature and strength of these interactions, and the way in which they modify the properties of the solute molecules, is important for a better understanding of chemical processes occurring in solution, including the possible roles of the solvent in those processes. Computational studies can provide a wealth of information on solute–solvent interactions and their effects. Two major models have been developed for this purpose: one treats the solvent as a polarisable continuum surrounding the solute molecule, and the other considers a certain number of explicit solvent molecules around a solute molecule. Each has its advantages and challenges, and the model is chosen according to the type of information sought for the specific system under consideration. These studies are important in many areas of chemistry research, from the investigation of processes occurring within living organisms to drug design and the design of environmentally benign solvents meant to replace less benign ones in the chemical industry, as envisaged by the principles of green chemistry. The paper presents a brief overview of the modelling approaches and of concrete studies, with reference to selected crucial investigation themes.
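The continuum picture can be illustrated with its simplest textbook case, the Born model. In this model, an ion of charge q and cavity radius a is transferred from vacuum into a dielectric of relative permittivity ε_r, giving ΔG = -(q²/(8πε₀a))(1 - 1/ε_r). This is only an illustrative stand-in for polarisable continuum models, which use molecule-shaped cavities and a self-consistent reaction field. The radius in the usage example is a hypothetical input.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m
N_A = 6.02214076e23          # Avogadro constant, 1/mol

def born_solvation_energy(charge_number, radius_m, eps_r):
    """Born solvation free energy (kJ/mol) of a spherical ion in a dielectric continuum."""
    q = charge_number * E_CHARGE
    per_ion = -(q**2) / (8.0 * math.pi * EPS0 * radius_m) * (1.0 - 1.0 / eps_r)  # J per ion
    return per_ion * N_A / 1000.0
```

For a monovalent ion with a hypothetical 1.0 Å cavity in a solvent with ε_r = 78.4, `born_solvation_energy(1, 1.0e-10, 78.4)` gives about -686 kJ/mol. The energy vanishes for ε_r = 1 (vacuum) and becomes more negative as the solvent polarity increases.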


(This article belongs to the Special Issue Calculations in Solution)


Open Access Article

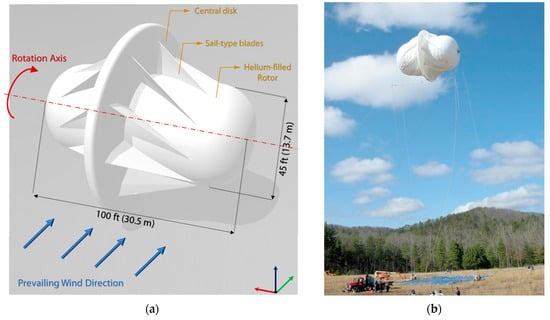

Performance Rating and Flow Analysis of an Experimental Airborne Drag-Type VAWT Employing Rotating Mesh

by

Doğan Güneş and Ergin Kükrer

Computation 2024, 12(4), 77; https://doi.org/10.3390/computation12040077 - 08 Apr 2024

Abstract


This paper presents the results of a performance analysis of an experimental airborne vertical axis wind turbine (VAWT), the MAGENN Air Rotor System (MARS). During its development phase, the company claimed that MARS could generate a power output of 100 kW at a wind speed of 12 m/s. However, no further information or numerical models supporting this claim were found in the literature. Extending our prior conference work, the main objective of this study is to assess the accuracy of the stated rated power output and to develop a comprehensive numerical model of the airflow around this unique airborne rotor configuration. The solid model of the innovative design, whose blades resemble yacht sails, was reconstructed from images on related web pages and in the literature, which report a power coefficient (Cp) of 0.21. The results cover a wind speed of 12 m/s and typical flat-terrain wind speeds (3, 5, 6, and 9 m/s) over a range of rotational velocities. Detailed calculations for the atypical blade design determined the optimal rotational velocities and an expected Tip Speed Ratio (TSR) of around 1.0. Introducing the Centroid Speed Ratio (CSR), defined as the ratio of the sail blade centroid velocity to the wind velocity, the findings indicate an average power generation potential of 90 kW at 1.4 rad/s for a 12 m/s wind and approximately 16 kW at a 300 m altitude for a 6 m/s wind.
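The rated-power claim can be put in context with the basic wind-power relation P = ½ρAv³Cp and the definition TSR = ωR/v. The helpers below are a back-of-the-envelope sketch, not the study's CFD model. The air density in the usage example is an assumed sea-level value, and A is treated as the rotor's projected (swept) area.

```python
def wind_power(rho, area, v, cp):
    """Extracted power P = 0.5 * rho * A * v**3 * Cp (W)."""
    return 0.5 * rho * area * v**3 * cp

def required_area(power, rho, v, cp):
    """Projected area needed to deliver `power` (W) at wind speed v with coefficient cp."""
    return 2.0 * power / (rho * v**3 * cp)

def tip_speed_ratio(omega, radius, v):
    """TSR = omega * R / v (omega in rad/s, R in m, v in m/s)."""
    return omega * radius / v
```

With the reported Cp = 0.21 and an assumed air density of 1.225 kg/m³, `required_area(100e3, 1.225, 12.0, 0.21)` is about 450 m², the projected area the rotor would need to deliver the claimed 100 kW at 12 m/s. Lower air density at altitude would raise this figure.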


(This article belongs to the Special Issue 10th Anniversary of Computation—Computational Heat and Mass Transfer (ICCHMT 2023))


Open Access Article

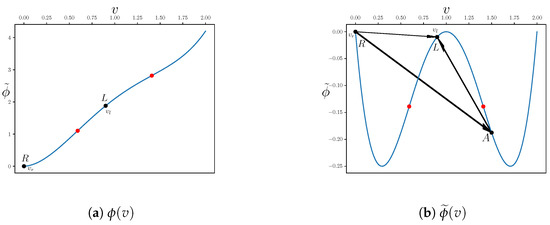

Why Stable Finite-Difference Schemes Can Converge to Different Solutions: Analysis for the Generalized Hopf Equation

by

Vladimir A. Shargatov, Anna P. Chugainova, Georgy V. Kolomiytsev, Irik I. Nasyrov, Anastasia M. Tomasheva, Sergey V. Gorkunov and Polina I. Kozhurina

Computation 2024, 12(4), 76; https://doi.org/10.3390/computation12040076 - 05 Apr 2024

Abstract


Using two families of finite-difference schemes as examples, we show that, in general, the numerical solution of the Riemann problem for the generalized Hopf equation depends on the finite-difference scheme. The numerical solutions may differ both quantitatively and qualitatively. The reason is the nonuniqueness of the solution to the Riemann problem for the generalized Hopf equation. For a flux function with two inflection points, the numerical solution becomes unique if artificial dissipation and dispersion are introduced, i.e., if the generalized Korteweg–de Vries–Burgers equation is considered. We propose a method for selecting the dissipation and dispersion coefficients that yields a physically justified, unique numerical solution independent of the difference scheme.
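As a concrete example of a finite-difference scheme for a Hopf-type equation u_t + f(u)_x = 0, here is the classic conservative Lax–Friedrichs scheme. The sketch assumes periodic boundaries. It uses a hypothetical flux f(u) = u⁴/4 - u²/2, whose second derivative 3u² - 1 vanishes at u = ±1/√3, giving two inflection points. It is not one of the scheme families analysed in the article.

```python
import numpy as np

def flux(u):
    """Hypothetical non-convex flux with two inflection points (u = +/- 1/sqrt(3))."""
    return 0.25 * u**4 - 0.5 * u**2

def lax_friedrichs(u0, dx, dt, n_steps, f=flux):
    """Conservative Lax-Friedrichs scheme for u_t + f(u)_x = 0 with periodic boundaries.

    Stable (and monotone) when (dt/dx) * max|f'(u)| <= 1.
    """
    u = np.array(u0, dtype=float)
    lam = dt / dx
    for _ in range(n_steps):
        u_right = np.roll(u, -1)  # u_{j+1}
        u_left = np.roll(u, 1)    # u_{j-1}
        u = 0.5 * (u_right + u_left) - 0.5 * lam * (f(u_right) - f(u_left))
    return u
```

Running the same Riemann data through a different scheme (or with added dissipation and dispersion terms) and comparing the wave structures is the kind of experiment the abstract describes. This scheme's strong numerical dissipation is only one possible choice.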


(This article belongs to the Special Issue Recent Advances in Numerical Simulation of Compressible Flows)


Open Access Article

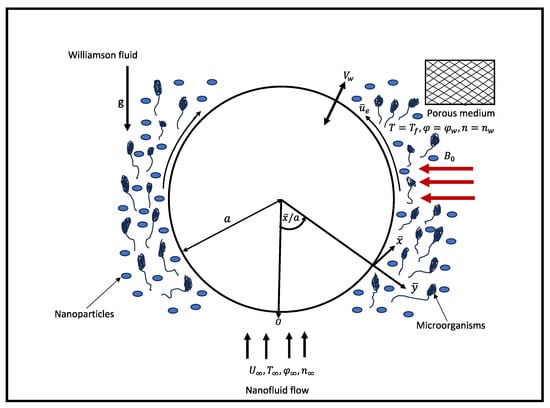

Overlapping Grid-Based Spectral Collocation Technique for Bioconvective Flow of MHD Williamson Nanofluid over a Radiative Circular Cylindrical Body with Activation Energy

by

Musawenkosi Patson Mkhatshwa

Computation 2024, 12(4), 75; https://doi.org/10.3390/computation12040075 - 05 Apr 2024

Abstract


The incorporation of motile microbes into nanofluids (NFs) is important for enhancing the thermal conductivity of various systems, including micro-fluid devices, chip-shaped micro-devices, and enzyme biosensors. The present study examines the bioconvective flow of magneto-Williamson NFs containing motile microbes past a horizontal circular cylinder placed in a porous medium, accounting for nonlinear mixed convection, thermal radiation, a heat sink/source, variable fluid properties, activation energy with chemical and microbial reactions, and Brownian motion of both nanoparticles and microbes. The flow is also subject to velocity slip, suction/injection, convective heating, and zero mass flux conditions at the boundary. The governing equations were converted to non-dimensional form using similarity variables, and the overlapping grid-based spectral collocation technique was used to obtain numerical solutions. The effects of the pertinent parameters on the flow profiles and on physical quantities of engineering interest are presented graphically and discussed. The results reveal that the NF flow is accelerated by nonlinear thermal convection, velocity slip, magnetic fields, and variable viscosity parameters but decelerated by the Williamson fluid and suction parameters. Nonlinear thermal radiation and variable thermal conductivity enhance the fluid temperature and heat transfer rate. Incorporating activation energy increases the concentration of both nanoparticles and motile microbes. The effect of microbial Brownian motion and microbial reactions on the flow quantities justifies the importance of these features in the dynamics of motile microbes.
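Spectral collocation replaces derivatives with differentiation matrices evaluated at collocation points. The sketch below builds the standard single-domain Chebyshev–Gauss–Lobatto differentiation matrix on [-1, 1], following the well-known construction in Trefethen's *Spectral Methods in MATLAB*. The overlapping-grid variant used in the article is not reproduced here.

```python
import numpy as np

def cheb(n):
    """Chebyshev points x_j = cos(pi*j/n), j = 0..n, and the (n+1)x(n+1) differentiation matrix D."""
    if n == 0:
        return np.zeros((1, 1)), np.array([1.0])
    x = np.cos(np.pi * np.arange(n + 1) / n)
    c = np.hstack([2.0, np.ones(n - 1), 2.0]) * (-1.0) ** np.arange(n + 1)
    X = np.tile(x, (n + 1, 1)).T                     # X[i, j] = x[i]
    dX = X - X.T                                     # x[i] - x[j]
    D = np.outer(c, 1.0 / c) / (dX + np.eye(n + 1))  # off-diagonal entries c_i / (c_j (x_i - x_j))
    D = D - np.diag(D.sum(axis=1))                   # diagonal = negative row sums
    return D, x
```

Because D differentiates any polynomial of degree up to n exactly at the nodes, `D @ x**3` reproduces `3 * x**2` for n ≥ 3. Boundary conditions are then imposed by replacing the first and last rows of the discretized system.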


(This article belongs to the Special Issue 10th Anniversary of Computation—Computational Heat and Mass Transfer (ICCHMT 2023))


Open Access Review

Computational Modelling and Simulation of Scaffolds for Bone Tissue Engineering

by

Haja-Sherief N. Musthafa, Jason Walker and Mariusz Domagala

Computation 2024, 12(4), 74; https://doi.org/10.3390/computation12040074 - 04 Apr 2024

Abstract

►▼

Show Figures

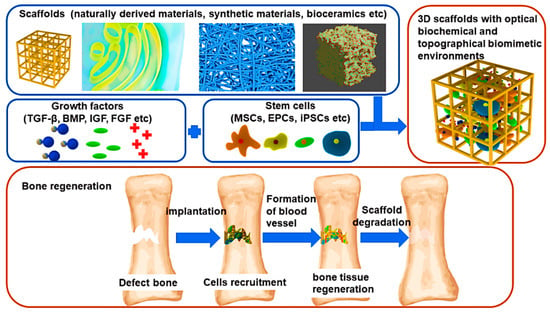

Three-dimensional porous scaffolds are substitutes for traditional bone grafts in bone tissue engineering (BTE) applications to restore and treat bone injuries and defects. The use of computational modelling is gaining momentum to predict the parameters involved in tissue healing and cell seeding procedures

[...] Read more.

Three-dimensional porous scaffolds are substitutes for traditional bone grafts in bone tissue engineering (BTE) applications to restore and treat bone injuries and defects. Computational modelling is increasingly used to predict the parameters involved in tissue healing and cell seeding procedures in perfusion bioreactors, with the ultimate goal of optimal bone tissue growth. The finite element method (FEM) and computational fluid dynamics (CFD) are the two standard methodologies used to investigate, respectively, the equivalent mechanical properties of tissue scaffolds and the flow characteristics inside them. The success of a computational simulation hinges on selecting a relevant mathematical model with proper initial and boundary conditions. This review aims to guide researchers in selecting appropriate finite element (FE) models for different materials and CFD models for different flow regimes inside perfusion bioreactors. These FEM/CFD models may thus help create efficient scaffold designs by predicting their structural properties and haemodynamic responses prior to in vitro and in vivo tissue engineering (TE) applications.
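A quantity frequently extracted from CFD simulations of scaffold perfusion is the intrinsic permeability, obtained from Darcy's law k = QμL/(AΔP). The abstract does not mention permeability explicitly, so the function below is an illustrative, assumed post-processing step. The numbers in the usage example are hypothetical.

```python
def darcy_permeability(flow_rate, viscosity, length, area, pressure_drop):
    """Intrinsic permeability k (m^2) from Darcy's law, k = Q * mu * L / (A * dP).

    flow_rate      Q  volumetric flow rate through the scaffold (m^3/s)
    viscosity      mu dynamic viscosity of the perfusion medium (Pa*s)
    length         L  scaffold length in the flow direction (m)
    area           A  cross-sectional area normal to the flow (m^2)
    pressure_drop  dP pressure difference across the scaffold (Pa)
    """
    if area <= 0.0 or pressure_drop <= 0.0:
        raise ValueError("area and pressure drop must be positive")
    return flow_rate * viscosity * length / (area * pressure_drop)
```

For a hypothetical 5 mm long scaffold with a 25 mm² cross-section, a 1 mm³/s flow of a water-like medium (1 mPa·s) and a 10 Pa pressure drop, `darcy_permeability(1e-9, 1e-3, 5e-3, 2.5e-5, 10.0)` gives about 2e-11 m².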


Open Access Article

Recent Developments in Using a Modified Transfer Matrix Method for an Automotive Exhaust Muffler Design Based on Computation Fluid Dynamics in 3D

by

Mihai Bugaru and Cosmin-Marius Vasile

Computation 2024, 12(4), 73; https://doi.org/10.3390/computation12040073 - 04 Apr 2024

Abstract

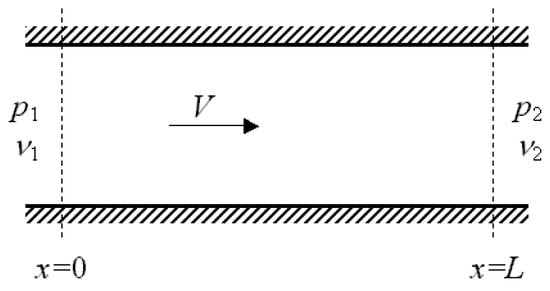


The present work investigates a newly modified transfer matrix method (MTMM) to predict the transmission loss of an automotive exhaust muffler (AEMTL). The MTMM combines a 3D-CFD tool (Computation Fluid Dynamics in 3D), namely AVL FIRE™ M Engine (process-safe 3D-CFD simulations of internal combustion engines), with the classic TMM for the exhaust muffler. For all continuous and discontinuous sections of the exhaust muffler, the cross-sectional Mach number, the temperature, and the type of flow discontinuity of the exhaust gas were taken into account to evaluate the specific elements of the acoustic quadrupole that define the MTMM coupled with AVL FIRE™ M Engine for a given exhaust muffler. The perforations of the intermediate ducts were also considered in the new MTMM (AVL FIRE™ M Engine linked with TMM) to predict the TL (transmission loss) of an automotive exhaust muffler with three expansion chambers. The TL results obtained in the 0.1–4 kHz frequency range agree with experimental results published in the literature. The TMM was improved by adding AVL FIRE™ M Engine as a valuable tool in designing automotive exhaust mufflers (AEMs).
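The classic TMM on which the MTMM builds can be sketched for the simplest case: a single expansion chamber with identical inlet and outlet pipes, plane waves, no mean flow, and uniform temperature. Each duct's four-pole matrix relates acoustic pressure and volume velocity at its two ends, and the transmission loss follows from the overall matrix. The Mach number, temperature, and perforation effects that the MTMM adds are deliberately omitted. The dimensions and air properties are hypothetical.

```python
import numpy as np

def duct_matrix(k, length, z):
    """Four-pole matrix of a uniform duct without mean flow.

    Maps the outlet state [p2, U2] to the inlet state [p1, U1]; z = rho*c/S is the
    characteristic impedance based on volume velocity U.
    """
    kl = k * length
    return np.array([[np.cos(kl), 1j * z * np.sin(kl)],
                     [1j * np.sin(kl) / z, np.cos(kl)]])

def transmission_loss(T, z_pipe):
    """TL (dB) of an element with matrix T between identical inlet and outlet pipes."""
    s = T[0, 0] + T[0, 1] / z_pipe + z_pipe * T[1, 0] + T[1, 1]
    return 20.0 * np.log10(abs(s) / 2.0)

def expansion_chamber_tl(freq, length, s_pipe, s_chamber, c=343.0, rho=1.2):
    """TL of a simple expansion chamber; area jumps are treated as p and U continuity."""
    k = 2.0 * np.pi * freq / c
    T = duct_matrix(k, length, rho * c / s_chamber)
    return transmission_loss(T, rho * c / s_pipe)
```

Elements in series are combined by multiplying their matrices in flow order (`T1 @ T2 @ ...`). For this single chamber the result matches the textbook closed form TL = 10·log10(1 + ¼(m - 1/m)²·sin²(kL)), where m is the chamber-to-pipe area ratio.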


(This article belongs to the Special Issue Experiments/Process/System Modeling/Simulation/Optimization (IC-EPSMSO 2023))


Open Access Article

Progression Learning Convolution Neural Model-Based Sign Language Recognition Using Wearable Glove Devices

by

Yijuan Liang, Chaiyan Jettanasen and Pathomthat Chiradeja

Computation 2024, 12(4), 72; https://doi.org/10.3390/computation12040072 - 03 Apr 2024

Abstract

►▼

Show Figures

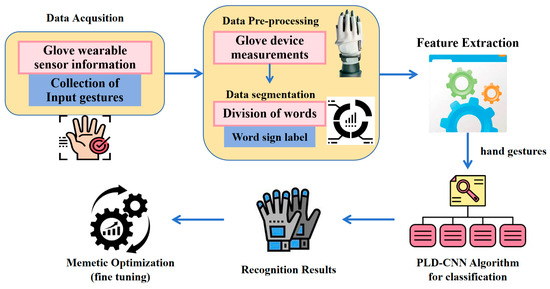

Communication among hard-of-hearing individuals presents challenges, and to facilitate communication, sign language is preferred. Many people in the deaf and hard-of-hearing communities struggle to understand sign language due to their lack of sign-mode knowledge. Contemporary researchers utilize glove and vision-based approaches to capture

[...] Read more.

Communication among hard-of-hearing individuals presents challenges, and sign language is the preferred means of facilitating it. Many people in the deaf and hard-of-hearing communities struggle to understand sign language due to a lack of sign-mode knowledge. Contemporary researchers use glove-based and vision-based approaches to capture hand movement and analyze communication; most use vision-based techniques because the glove-based approach makes individuals feel uncomfortable. However, the glove solution successfully identifies motion and hand dexterity, although it recognizes only the numbers, words, and letters being communicated and fails to identify sentences. Therefore, artificial intelligence (AI) is integrated with the sign language prediction system to identify sentence-based communication by disabled people. Here, sign language data from wearable gloves are used to analyze the recognition system’s efficiency. The collected inputs are processed using progression learning deep convolutional neural networks (PLD-CNNs). Progression learning processes sentences by dividing them into words to create a training dataset, which helps the model understand sign language sentences. A memetic optimization algorithm is used to calibrate network performance, addressing the recognition optimization problem. This process increases convergence speed and reduces translation difficulties, enhancing the overall learning process. The system was developed in MATLAB (R2021b), and its proficiency was evaluated using performance metrics. The experimental findings show that the proposed system recognizes sign language movements with excellent precision, recall, accuracy, and F1 score, making it a powerful tool for detecting gestures in general and sign-based sentences in particular.
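For reference, the four reported metrics are computed from confusion-matrix counts as follows. This is a generic sketch with hypothetical counts. For multi-class recognition they are typically computed per class (one-vs-rest) and averaged, and the averaging used in the article is not stated.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, accuracy and F1 score from binary confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "accuracy": accuracy, "f1": f1}
```

With hypothetical counts tp = 8, fp = 2, fn = 2, tn = 88, precision, recall, and F1 are all 0.8, while accuracy is 0.96. This gap is why accuracy alone can flatter a classifier on imbalanced data.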


Open Access Article

Scale-Resolving Simulation of Shock-Induced Aerobreakup of Water Droplet

by

Viola Rossano and Giuliano De Stefano

Computation 2024, 12(4), 71; https://doi.org/10.3390/computation12040071 - 03 Apr 2024

Abstract

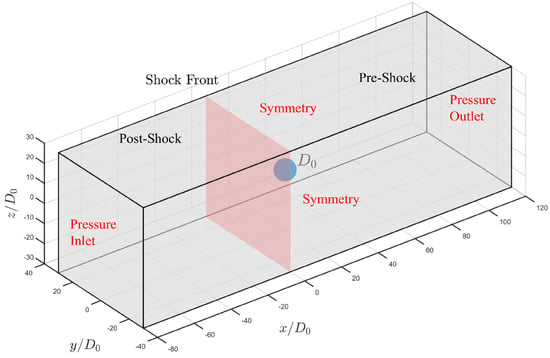


Two different scale-resolving simulation (SRS) approaches to turbulence modeling and simulation are used to predict the breakup of a spherical water droplet in air caused by the impact of a traveling plane shock wave. The compressible flow governing equations are solved by a finite volume-based numerical method, with the volume-of-fluid technique employed to track the air–water interface on a dynamically adaptive mesh. The three-dimensional analysis is performed in the shear stripping regime, examining the drift, deformation, and breakup of the droplet for a benchmark flow configuration. Comparing the present SRS results with reference experimental and numerical data, in terms of both droplet morphology and breakup dynamics, shows that the adopted computational methods have significant practical potential, as they can locally reproduce unsteady small-scale flow structures. These computational models offer viable alternatives to higher-fidelity, more costly methods for engineering simulations of complex two-phase turbulent compressible flows.
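Aerobreakup regimes such as shear stripping are conventionally classified by the Weber number of the gas flow relative to the droplet, with the Ohnesorge number accounting for liquid viscosity. The helpers below compute both. Regime thresholds are deliberately not encoded because they differ between sources, and the property values in the example are hypothetical.

```python
import math

def weber_number(rho_gas, u_rel, diameter, surface_tension):
    """Aerodynamic Weber number We = rho_g * u_rel**2 * d / sigma (gas velocity relative to the droplet)."""
    return rho_gas * u_rel**2 * diameter / surface_tension

def ohnesorge_number(mu_liquid, rho_liquid, diameter, surface_tension):
    """Ohnesorge number Oh = mu_l / sqrt(rho_l * sigma * d)."""
    return mu_liquid / math.sqrt(rho_liquid * surface_tension * diameter)
```

For example, a 1 mm water droplet (σ ≈ 0.072 N/m) in a post-shock air stream of density 1.2 kg/m³ moving at 100 m/s relative to it gives We ≈ 167 and Oh ≈ 0.0037. The low Oh means viscosity plays little role and the Weber number governs the breakup mode.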


(This article belongs to the Special Issue Recent Advances in Numerical Simulation of Compressible Flows)


Open Access Article

Impact of Ukrainian Refugees on the COVID-19 Pandemic Dynamics after 24 February 2022

by

Igor Nesteruk and Paul Brown

Computation 2024, 12(4), 70; https://doi.org/10.3390/computation12040070 - 03 Apr 2024

Abstract


The full-scale invasion of Ukraine caused an unprecedented number of refugees after 24 February 2022. To estimate the influence of this humanitarian disaster on the COVID-19 pandemic dynamics, the smoothed daily numbers of cases in Ukraine, the UK, Poland, Germany, the Republic of Moldova, and the whole world were calculated and compared with the values predicted by the generalized SIR model. In March 2022, an increase in the smoothed number of new cases was visible in the UK, Germany, and worldwide. A simple formula is proposed to estimate the effective reproduction number from the smoothed accumulated numbers of cases. The results agree with the figures presented by Johns Hopkins University and demonstrate a short-term growth in the reproduction number in the UK, Poland, Germany, Moldova, and worldwide in March 2022.
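The article compares observed data with a generalized SIR model and proposes its own formula for the effective reproduction number. Neither is reproduced here. For orientation only, the sketch below integrates the classic SIR model, in which R_eff = (β/γ)·S. The parameter values in the example are hypothetical.

```python
def sir_euler(beta, gamma, s0, i0, dt, n_steps):
    """Forward-Euler integration of the classic SIR model in population fractions.

    dS/dt = -beta*S*I,  dI/dt = beta*S*I - gamma*I,  dR/dt = gamma*I
    Returns a list of (S, I, R) tuples, starting from (s0, i0, 1 - s0 - i0).
    """
    s, i = s0, i0
    r = 1.0 - s0 - i0
    history = [(s, i, r)]
    for _ in range(n_steps):
        new_infections = beta * s * i * dt
        new_recoveries = gamma * i * dt
        s, i, r = s - new_infections, i + new_infections - new_recoveries, r + new_recoveries
        history.append((s, i, r))
    return history

def effective_reproduction_number(beta, gamma, s):
    """R_eff = (beta / gamma) * S for the classic SIR model."""
    return beta / gamma * s
```

With hypothetical β = 0.3/day and γ = 0.1/day, R_eff starts near 3 and falls as the susceptible fraction is depleted. The sum S + I + R stays equal to 1 at every step.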


(This article belongs to the Special Issue Artificial Intelligence Applications in Public Health)


Open Access Review

Overview of the Application of Physically Informed Neural Networks to the Problems of Nonlinear Fluid Flow in Porous Media

by

Nina Dieva, Damir Aminev, Marina Kravchenko and Nikolay Smirnov

Computation 2024, 12(4), 69; https://doi.org/10.3390/computation12040069 - 02 Apr 2024

Abstract

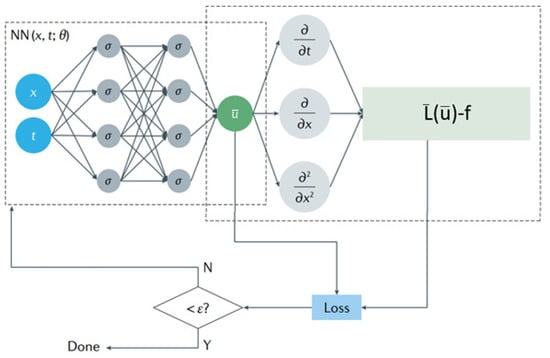


To describe unsteady multiphase flows in porous media, it is important to account for the non-Newtonian properties of fluids by including rheological laws in the hydrodynamic model. This leads to a nonlinear system of partial differential equations, which must be linearized to solve the direct problem. Constructing algorithms for the inverse problem is difficult because the numerical solution is unstable. Implicit methods reduce to matrix equations with a high-rank coefficient matrix, which requires significant computational resources. The authors investigated the use of parameterized functions (physics-informed neural networks) to solve direct and inverse problems of non-Newtonian fluid flow in porous media. Laboratory experiments on core samples and field data from a real oil field were selected as application examples. Because analytical solutions are lacking, results obtained with the finite difference method and from real experiments were used for validation.
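PINNs train a parameterized function by minimizing a loss that combines the PDE residual at collocation points with boundary (or data) residuals. The sketch below shows that loss structure without a deep-learning framework. It swaps the neural network for a polynomial, which turns the loss into a linear least-squares problem. It also applies the method to a hypothetical 1D model problem, p''(x) = -1 on (0, 1) with p(0) = p(1) = 0 (exact solution p = x(1 - x)/2), rather than to a non-Newtonian porous-media model.

```python
import numpy as np
from numpy.polynomial import polynomial as P

def physics_informed_poly_fit(degree=4, n_colloc=20):
    """Fit p(x) = sum_k a_k x**k by minimizing PDE + boundary residuals in least squares.

    Model problem (hypothetical): p''(x) = -1 on (0, 1), p(0) = p(1) = 0.
    Returns the coefficients a_0..a_degree in increasing order.
    """
    x = np.linspace(0.0, 1.0, n_colloc)
    powers = np.arange(degree + 1)
    # PDE residual rows: d2/dx2 of x**k is k*(k-1)*x**(k-2)
    pde_rows = np.array([[k * (k - 1) * xi ** (k - 2) if k >= 2 else 0.0 for k in powers]
                         for xi in x])
    pde_rhs = -np.ones(n_colloc)
    # boundary residual rows: p(0) = 0 and p(1) = 0
    bc_rows = np.array([[xb ** k for k in powers] for xb in (0.0, 1.0)])
    bc_rhs = np.zeros(2)
    A = np.vstack([pde_rows, bc_rows])
    b = np.concatenate([pde_rhs, bc_rhs])
    coeffs, *_ = np.linalg.lstsq(A, b, rcond=None)  # minimizes the summed squared residuals
    return coeffs
```

A real PINN replaces the polynomial with a neural network and the least-squares solve with gradient-based training. For inverse problems, unknown physical coefficients are added to the trainable parameters alongside a data-misfit term.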


(This article belongs to the Special Issue Recent Advances in Numerical Simulation of Compressible Flows)

Open Access Article

Semi-Online Algorithms for the Hierarchical Extensible Bin-Packing Problem and Early Work Problem

by

Yaru Yang, Man Xiao and Weidong Li

Computation 2024, 12(4), 68; https://doi.org/10.3390/computation12040068 - 01 Apr 2024

Abstract

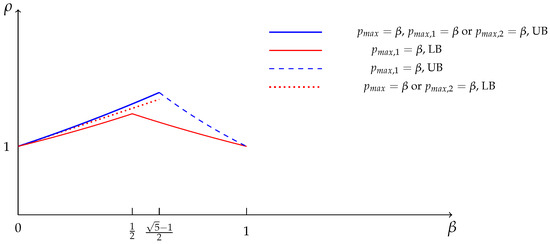

In this paper, we consider two types of semi-online problems with hierarchies. In the extensible bin-packing problem with two hierarchical bins, one bin can pack all items, while the other bin can only pack some items. The initial size of each bin can be expanded, and the goal is to minimize the total size of the two bins. For both the case in which the largest item size is given in advance and the case in which the total item size is given in advance, we provide lower bounds and propose online algorithms. In addition, we consider the related early-work-maximization problem on two hierarchical machines: one machine can process any job, while the other can only process some jobs. All jobs share a common due date, and the goal is to maximize the total early work. When the largest job size is known, we provide lower bounds and propose two online algorithms whose competitive ratios are close to the lower bounds.
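To make the objective concrete, the sketch below computes the total extended size of two hierarchical bins and compares a naive greedy rule with the brute-force offline optimum on a tiny instance. Assumptions: both bins start with a common initial size of 1, each item is a `(size, flexible)` pair where `flexible=True` means either bin may take it and `False` means only the first (all-accepting) bin may, and the function names and the greedy rule are hypothetical. The rule is purely online; it is not one of the paper's semi-online algorithms, which additionally exploit the known largest or total item size.

```python
from itertools import product


def total_size(load_1, load_2, capacity=1.0):
    """Objective: each bin is extended only when its load exceeds the initial size."""
    return max(capacity, load_1) + max(capacity, load_2)


def greedy_online(items, capacity=1.0):
    """Hypothetical purely online rule; items are (size, flexible) pairs.

    Bin index 0 accepts every item; bin index 1 accepts only flexible items.
    A flexible item goes to whichever bin grows less (ties go to bin 1).
    """
    load = [0.0, 0.0]
    for size, flexible in items:
        if not flexible:
            load[0] += size
            continue
        growth_0 = max(capacity, load[0] + size) - max(capacity, load[0])
        growth_1 = max(capacity, load[1] + size) - max(capacity, load[1])
        load[1 if growth_1 <= growth_0 else 0] += size
    return total_size(load[0], load[1], capacity)


def offline_optimum(items, capacity=1.0):
    """Brute force over all placements of flexible items (small instances only)."""
    fixed = sum(size for size, flexible in items if not flexible)
    flex = [size for size, flexible in items if flexible]
    best = float("inf")
    for choice in product((0, 1), repeat=len(flex)):
        load_0 = fixed + sum(s for s, c in zip(flex, choice) if c == 0)
        load_1 = sum(s for s, c in zip(flex, choice) if c == 1)
        best = min(best, total_size(load_0, load_1, capacity))
    return best


items = [(0.6, True), (0.6, True), (0.8, False)]
online, optimum = greedy_online(items), offline_optimum(items)
print(online, optimum, online / optimum)
```

On this instance the greedy rule puts one 0.6 item in each bin, so the later 0.8 item, which only the first bin accepts, extends that bin to 1.4 for a total of 2.4, whereas the optimum of 2.2 places both flexible items in the restricted bin. Advance knowledge about the item sequence, as in the semi-online setting, is what allows an algorithm to avoid such choices.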

(This article belongs to the Section Computational Engineering)

Open Access Article

Application of Machine Learning to Predict Blockage in Multiphase Flow

by

Nazerke Saparbayeva, Boris V. Balakin, Pavel G. Struchalin, Talal Rahman and Sergey Alyaev

Computation 2024, 12(4), 67; https://doi.org/10.3390/computation12040067 - 31 Mar 2024

Abstract

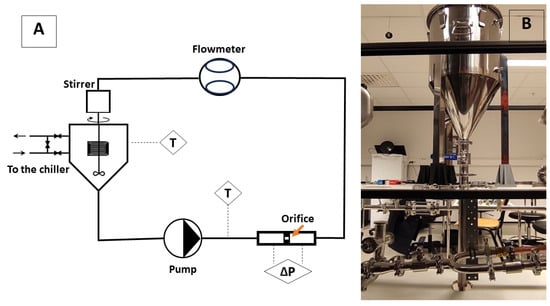

This study presents a machine learning-based approach to predicting blockage in multiphase flow with cohesive particles. The aim is to predict blockage from parameters such as the Reynolds and capillary numbers using a random forest classifier trained on experimental and simulation data. Experimental observations come from a lab-scale flow loop with ice slurry in decane. The plugging simulation is based on coupled Computational Fluid Dynamics and the Discrete Element Method (CFD-DEM). The resulting classifier demonstrated high accuracy, as measured by precision, recall, and F1-score, providing precise blockage prediction under specific flow conditions. Additionally, sensitivity analyses highlighted the model’s adaptability to variations in cohesion. Using the trained classifier, we generated a detailed machine-learning-based flow map and compared it with results from earlier literature, simulations, and experiments. This graphical representation clarifies the blockage boundaries under given conditions. The methodology’s success demonstrates the potential of advanced predictive modelling in diverse flow systems, contributing to improved blockage prediction and prevention.
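Precision, recall, and F1-score for a binary blockage classifier follow directly from confusion-matrix counts. A minimal standard-library sketch, assuming label 1 means "blockage" and 0 means "no blockage" (the labels, the toy data, and the function name are illustrative, not taken from the study):

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) treating `positive` as the blockage class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    # guard against empty denominators by reporting 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


y_true = [1, 1, 0, 0, 1, 0]  # observed: blockage / no blockage
y_pred = [1, 0, 0, 1, 1, 0]  # classifier output for the same cases
print(precision_recall_f1(y_true, y_pred))
```

Here two blockages are detected correctly, one is missed, and one is falsely predicted, so all three scores equal 2/3. In practice, scikit-learn's `sklearn.metrics.precision_recall_fscore_support` computes the same quantities.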

(This article belongs to the Special Issue 10th Anniversary of Computation—Computational Engineering)

Open Access Article

COVID-19 Image Classification: A Comparative Performance Analysis of Hand-Crafted vs. Deep Features

by

Sadiq Alinsaif

Computation 2024, 12(4), 66; https://doi.org/10.3390/computation12040066 - 30 Mar 2024

Abstract

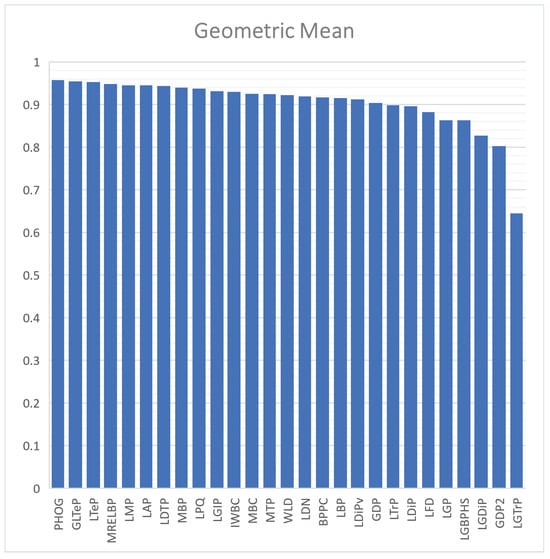

This study investigates techniques for medical image classification, focusing specifically on COVID-19 scans obtained through computed tomography (CT). First, handcrafted methods based on feature engineering are explored because they are suitable for training traditional machine learning (TML) classifiers (e.g., Support Vector Machine (SVM)) when only limited medical image datasets are available. In this context, I comprehensively evaluate and compare 27 descriptor sets. More recently, deep learning (DL) models have successfully analyzed and classified natural and medical images. However, the scarcity of well-annotated medical images, particularly those related to COVID-19, makes it challenging to train DL models from scratch. Consequently, I leverage deep features extracted from 12 pre-trained DL models for classification tasks. This work presents a comprehensive comparative analysis of TML and DL approaches to COVID-19 image classification.
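As an example of a handcrafted descriptor of the kind that feeds a TML classifier such as an SVM, the sketch below computes a basic 8-neighbour local binary pattern (LBP) histogram from a grayscale image given as nested lists. LBP is chosen only for illustration; the abstract does not list which 27 descriptor sets were evaluated, and the function name is hypothetical.

```python
def lbp_histogram(image):
    """Normalised 256-bin histogram of basic 3x3 local binary pattern codes.

    `image` is a list of equal-length rows of grayscale intensities; border
    pixels are skipped because they lack a full 8-neighbourhood.
    """
    # neighbour offsets, clockwise from the top-left; bit i is set when
    # neighbour i is at least as bright as the centre pixel
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    rows, cols = len(image), len(image[0])
    hist = [0] * 256
    count = 0
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            centre = image[r][c]
            code = 0
            for bit, (dr, dc) in enumerate(offsets):
                if image[r + dr][c + dc] >= centre:
                    code |= 1 << bit
            hist[code] += 1
            count += 1
    if count == 0:
        return [0.0] * 256
    return [h / count for h in hist]


gradient = [[4 * i + j for j in range(4)] for i in range(4)]
features = lbp_histogram(gradient)
print(max(range(256), key=lambda k: features[k]))  # dominant LBP code
```

The resulting 256-value vector can be concatenated with other descriptors and passed to an SVM or another TML classifier; the deep-feature alternative would instead take activations from a pre-trained network.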

(This article belongs to the Special Issue Computational Medical Image Analysis)

Open Access Feature Paper Article

Multi-Directional Functionally Graded Sandwich Plates: Buckling and Free Vibration Analysis with Refined Plate Models under Various Boundary Conditions

by

Lazreg Hadji, Vagelis Plevris, Royal Madan and Hassen Ait Atmane

Computation 2024, 12(4), 65; https://doi.org/10.3390/computation12040065 - 27 Mar 2024

Abstract

This study conducts buckling and free vibration analyses of multi-directional functionally graded sandwich plates subjected to various boundary conditions. Two scenarios are considered: a functionally graded (FG) skin with a homogeneous hard core, and an FG skin with a homogeneous soft core. The equations of motion are derived using Hamilton’s principle and refined plate models, which incorporate a parabolic distribution of transverse shear stresses while ensuring zero shear stress on both the upper and lower surfaces. Analytical solutions for the buckling and free vibration analyses of multi-directional FG sandwich plates under diverse boundary conditions are developed and presented, and the results are validated against the existing literature for both analyses. The composition of the metal–ceramic FG materials varies longitudinally and transversely according to a power law. Various types of sandwich plates are considered, accounting for plate symmetry and layer thicknesses. The investigation explores the influence of several parameters on buckling and free vibration behavior.
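A power-law gradation is usually combined with a rule of mixtures to obtain effective material properties. The sketch below assumes a hypothetical two-directional form, V_c(x, z) = (1/2 + z/h)^p_z (x/a)^p_x for 0 ≤ x ≤ a and -h/2 ≤ z ≤ h/2, together with the Voigt rule E = E_m + (E_c - E_m) V_c. The exact law and the layer-wise definitions the authors use for the sandwich skins may differ; the function names, exponents, and moduli (typical alumina and aluminium values) are illustrative defaults.

```python
def ceramic_fraction(x, z, a, h, p_x, p_z):
    """Hypothetical 2D power-law ceramic volume fraction on 0<=x<=a, -h/2<=z<=h/2."""
    if not (0.0 <= x <= a and -h / 2 <= z <= h / 2):
        raise ValueError("point lies outside the plate")
    # transverse factor grows from 0 (bottom, metal-rich) to 1 (top, ceramic-rich);
    # longitudinal factor grows from 0 at x = 0 to 1 at x = a
    return (0.5 + z / h) ** p_z * (x / a) ** p_x


def effective_modulus(x, z, a=1.0, h=0.1, p_x=1.0, p_z=2.0,
                      e_ceramic=380e9, e_metal=70e9):
    """Voigt rule of mixtures: E = E_m + (E_c - E_m) * V_c (moduli in Pa)."""
    v_c = ceramic_fraction(x, z, a, h, p_x, p_z)
    return e_metal + (e_ceramic - e_metal) * v_c


# modulus at the plate centre for a unit-thickness example
print(effective_modulus(0.5, 0.0, h=1.0) / 1e9, "GPa")
```

Raising `p_z` or `p_x` shifts more of the plate toward metal-rich behaviour in the corresponding direction, which is the kind of parameter whose influence on buckling loads and natural frequencies such studies examine.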

(This article belongs to the Special Issue Computational Methods in Structural Engineering)

Open Access Article

Solving a System of One-Dimensional Hyperbolic Delay Differential Equations Using the Method of Lines and Runge-Kutta Methods

by

S. Karthick, V. Subburayan and Ravi P. Agarwal

Computation 2024, 12(4), 64; https://doi.org/10.3390/computation12040064 - 27 Mar 2024

Abstract

In this paper, we consider a system of one-dimensional hyperbolic delay differential equations (HDDEs) and their corresponding initial conditions. HDDEs are a class of differential equations that involve a delay term, which represents the effect of past states on the present state. The delay term poses a challenge for the application of standard numerical methods, which usually require the evaluation of the differential equation at the current step. To overcome this challenge, various numerical methods and analytical techniques have been developed specifically for solving a system of first-order HDDEs. In this study, we investigate these challenges and present some analytical results, such as the maximum principle and stability conditions. Moreover, we examine the propagation of discontinuities in the solution, which provides a comprehensive framework for understanding its behavior. To solve this problem, we employ the method of lines, which is a technique that converts a partial differential equation into a system of ordinary differential equations (ODEs). We then use the Runge–Kutta method, which is a numerical scheme that solves ODEs with high accuracy and stability. We prove the stability and convergence of our method, and we show that the error of our solution is of the order
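The method of lines followed by Runge–Kutta time stepping can be illustrated on a single scalar model problem, u_t + a u_x = c u(x, t - τ) on 0 ≤ x ≤ 1 with a ≥ 0, a history function for t ≤ 0, and an inflow value at x = 0. The sketch below is a simplification under stated assumptions, not the authors' scheme for systems of HDDEs: it uses first-order upwind differences in space and Heun's second-order Runge–Kutta method in time, and it requires τ to be a positive integer multiple of the time step, so that every delayed value needed by the two stages is either a stored time level or given by the history function. The function name and arguments are hypothetical.

```python
def solve_delay_advection(a, c, tau, phi, g, nx=50, dt=0.01, t_end=1.0):
    """Method-of-lines sketch for u_t + a*u_x = c*u(x, t - tau) on [0, 1].

    phi(x, t): history function used for t <= 0
    g(t): inflow boundary value at x = 0 (requires a >= 0)
    Space: first-order upwind differences; time: Heun's method (RK2).
    """
    if a < 0:
        raise ValueError("upwind stencil below assumes a >= 0")
    m = round(tau / dt)  # delay measured in whole time steps
    if m < 1 or abs(m * dt - tau) > 1e-9 * max(1.0, tau):
        raise ValueError("tau must be a positive integer multiple of dt")
    dx = 1.0 / nx
    xs = [i * dx for i in range(nx + 1)]
    steps = round(t_end / dt)
    levels = [[phi(x, 0.0) for x in xs]]  # levels[k] approximates u(x, k*dt)

    def delayed(k):
        # u(x, k*dt - tau): a stored level if available, otherwise the history
        if k - m >= 0:
            return levels[k - m]
        return [phi(x, k * dt - tau) for x in xs]

    def rhs(u, u_del):
        # semi-discrete ODE system du_i/dt; node 0 is set by the boundary
        du = [0.0] * len(u)
        for i in range(1, len(u)):
            du[i] = -a * (u[i] - u[i - 1]) / dx + c * u_del[i]
        return du

    for k in range(steps):
        u = levels[k]
        k1 = rhs(u, delayed(k))
        u_pred = [ui + dt * d for ui, d in zip(u, k1)]
        u_pred[0] = g((k + 1) * dt)
        k2 = rhs(u_pred, delayed(k + 1))
        u_new = [ui + 0.5 * dt * (d1 + d2) for ui, d1, d2 in zip(u, k1, k2)]
        u_new[0] = g((k + 1) * dt)  # impose the inflow boundary value
        levels.append(u_new)
    return xs, levels[-1]


xs, u = solve_delay_advection(1.0, -0.5, 0.2, lambda x, t: 1.0, lambda t: 1.0,
                              nx=50, dt=0.01, t_end=1.0)
print(min(u), max(u))
```

Converting the PDE into the ODE system `rhs` is the method-of-lines step; any explicit Runge–Kutta scheme could replace Heun's method, but higher-order stages at fractional times would need interpolation of the stored levels. In the delay-free case, a·Δt/Δx ≤ 1 is a sufficient stability condition for this upwind/Heun pairing.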

Topics

Topic in

Algorithms, Computation, Entropy, Fractal Fract, MCA

Analytical and Numerical Methods for Stochastic Biological Systems

Topic Editors: Mehmet Yavuz, Necati Ozdemir, Mouhcine Tilioua, Yassine Sabbar

Deadline: 10 May 2024

Topic in

Axioms, Computation, MCA, Mathematics, Symmetry

Mathematical Modeling

Topic Editors: Babak Shiri, Zahra Alijani

Deadline: 31 May 2024

Topic in

Entropy, Algorithms, Computation, Fractal Fract

Computational Complex Networks

Topic Editors: Alexandre G. Evsukoff, Yilun Shang

Deadline: 30 June 2024

Topic in

Applied Sciences, Computation, Entropy, J. Imaging

Color Image Processing: Models and Methods (CIP: MM)

Topic Editors: Giuliana Ramella, Isabella Torcicollo

Deadline: 30 July 2024

Special Issues

Special Issue in

Computation

Computational Social Science and Complex Systems

Guest Editors: Minzhang Zheng, Pedro Manrique

Deadline: 30 April 2024

Special Issue in

Computation

Emerging Trends and Applications in High-Fidelity Computational Fluid Dynamics Simulation

Guest Editors: Anup Zope, Shanti Bhushan

Deadline: 15 May 2024

Special Issue in

Computation

Artificial Intelligence Applications in Public Health

Guest Editors: Dmytro Chumachenko, Sergiy Yakovlev

Deadline: 31 May 2024

Special Issue in

Computation

Signal Processing and Machine Learning in Data Science

Guest Editors: Maria Trigka, Elias Dritsas

Deadline: 30 June 2024