Collection on Theoretical and Computational Neuroscience


Editor

Topical Collection Information

Dear Colleagues,

Improving our understanding of brain function requires interdisciplinary collaboration among theoretical, computational, and experimental approaches. This collection aims to foster such interactions across the field of neuroscience.

We invite original contributions on a wide range of topics that promote experimentally testable theoretical modeling of neural function at the molecular, cellular, and circuit levels through computational and model-based approaches. Although the collection focuses primarily on theoretical and computational research, it also welcomes experimental studies that validate and test theoretical conclusions. Primarily theoretical manuscripts should be highly relevant to the neural mechanisms underlying nervous system function, while primarily experimental manuscripts should have implications for the computational analysis of nervous system function.

Manuscripts that combine theoretical and experimental approaches to investigate the physiological mechanisms underlying neuropathologies are highly encouraged. Manuscripts describing novel data analysis techniques that advance our understanding of nervous system function are likewise highly encouraged. Modeling approaches at all levels, from biophysically motivated, realistic simulations of neurons and synapses to high-level behavioral models of inference and decision-making, are also welcome.

Dr. Jose Lujan

Collection Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers are published continuously in the journal (as soon as they are accepted) and listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Brain Sciences is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2200 CHF (Swiss Francs).

Submitted papers should be well formatted and written in good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Neural modeling

- Neural computation

- Neural engineering

- Neural signal processing

- Brain–computer interfaces

- Neuroinformatics

- Neural circuits

- Machine learning

- Cognitive systems

- Perception

- Neurobiologically inspired evolutionary systems

Published Papers (12 papers)

Open Access Review

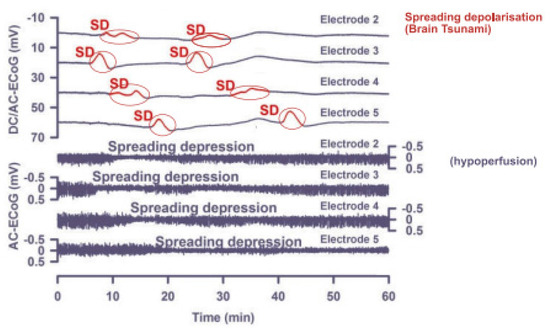

Mathematical Psychiatry: On Cortical Spreading Depression—A Review

by

Ejay Nsugbe

Viewed by 923

Abstract

Migraine with aura (MwA) is a widespread condition that can affect up to 30% of migraine patients; it manifests as a temporary visual illusion followed by a prolonged headache. It was initially characterized as a neurological disease, and the spread of accompanying electrophysiological waves, which came to be known as cortical spreading depression (CSD), was observed as part of the condition. A strong theoretical basis for a link between MwA and CSD has eventually led to an understanding of the dynamics between the two. In addition to experiment-based observations, mathematical models make an important contribution by providing a numerical means of expressing codependent neural-scale manifestations. This offers an alternative means of understanding and observing the phenomena, while helping to visualize the links between the variables and the magnitude of their contribution to the emergence and dynamic pulsing of the condition. A number of biophysical mechanisms are believed to contribute to MwA-CSD, spanning ion diffusion, membrane ionic currents, osmosis, spatial buffering, neurotransmission, gap junctions, metabolic pumping, and synaptic connections. In this review, the various mathematical models describing the condition are presented, reviewed, and contrasted; they vary in depth, perspective, and level of information presented. The review then examines links to electrophysiological data-driven manifestations from measurements such as EEG and fMRI. Finally, the concluding remarks outline a structured pathway of sub-themes that need to be investigated in order to strengthen the existing models and make them more robust, including accounting for inter-personal variability, sex and hormonal factors, and age groups, i.e., pediatrics vs. adults.

Open Access Article

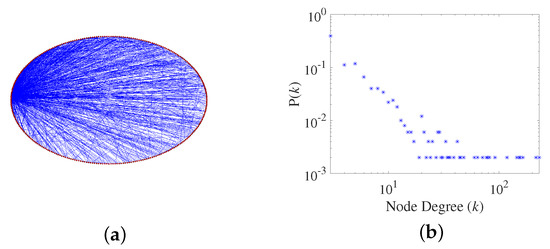

Anti-Disturbance of Scale-Free Spiking Neural Network against Impulse Noise

by

Lei Guo, Minxin Guo, Youxi Wu and Guizhi Xu

Viewed by 985

Abstract

The bio-brain exhibits robustness to external stimuli through its self-adaptive regulation and neural information processing. Drawing on these advantages of the bio-brain to investigate the robustness of a spiking neural network (SNN) is conducive to the advancement of brain-like intelligence. However, current brain-like models lack biological plausibility, and their methods for evaluating anti-disturbance performance are inadequate. To explore the self-adaptive regulation performance of a more biologically plausible brain-like model under external noise, a scale-free spiking neural network (SFSNN) is constructed in this study. The anti-disturbance ability of the SFSNN against impulse noise is then investigated, and the anti-disturbance mechanism is further discussed. Our simulation results indicate that: (i) our SFSNN has anti-disturbance ability against impulse noise, and the high-clustering SFSNN outperforms the low-clustering SFSNN in terms of anti-disturbance performance; (ii) the neural information processing in the SFSNN under external noise is clarified as a dynamic chain effect of neuron firing, synaptic weights, and topological characteristics; and (iii) our discussion suggests that synaptic plasticity is an intrinsic factor in the anti-disturbance ability, while network topology is a factor that affects the anti-disturbance ability at the level of performance.
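
The abstract does not say how the scale-free topology is generated. A common choice, assumed here purely for illustration, is Barabási–Albert preferential attachment: each new neuron connects to `m` existing neurons with probability proportional to their current degree. The Python sketch below builds such an undirected connection list; the function name and parameters are hypothetical, and it omits the spiking dynamics, synaptic plasticity, and clustering control the study relies on.

```python
import random


def barabasi_albert_edges(n_nodes: int, m: int, seed: int = 0) -> set[tuple[int, int]]:
    """Grow an undirected scale-free graph by preferential attachment (illustrative).

    Starts from a fully connected core of m + 1 nodes; each new node links to
    m distinct existing nodes chosen with probability proportional to degree.
    """
    if m < 1 or n_nodes <= m:
        raise ValueError("need 1 <= m < n_nodes")
    rng = random.Random(seed)
    edges: set[tuple[int, int]] = set()
    # Each node appears in 'pool' once per edge end it owns, so a uniform draw
    # from the pool is a degree-proportional draw.
    pool: list[int] = []
    core = m + 1
    for i in range(core):
        for j in range(i + 1, core):
            edges.add((i, j))
            pool.extend([i, j])
    for new in range(core, n_nodes):
        chosen: set[int] = set()
        while len(chosen) < m:
            chosen.add(rng.choice(pool))
        for target in chosen:
            edges.add((target, new))
            pool.extend([target, new])
    return edges


edges = barabasi_albert_edges(n_nodes=500, m=3, seed=42)
degree: dict[int, int] = {}
for a, b in edges:
    degree[a] = degree.get(a, 0) + 1
    degree[b] = degree.get(b, 0) + 1
print(len(edges), max(degree.values()))
```

Plain preferential attachment yields fairly low clustering; adding a triad-formation step (as in the Holme–Kim variant) is one way to raise it, though the abstract does not state which construction the authors used for their high- and low-clustering networks.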

Open Access Article

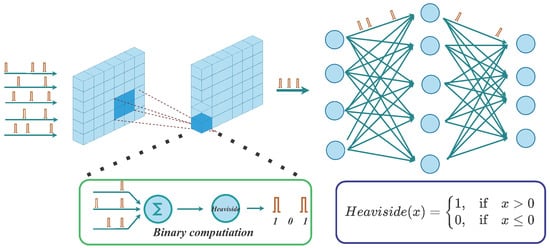

Constrain Bias Addition to Train Low-Latency Spiking Neural Networks

by

Ranxi Lin, Benzhe Dai, Yingkai Zhao, Gang Chen and Huaxiang Lu

Viewed by 1679

Abstract

In recent years, a third-generation neural network, namely the spiking neural network, has received a great deal of attention in the broad areas of machine learning and artificial intelligence. In this paper, a novel differential-based encoding method is proposed, and new spike-based learning rules for backpropagation are derived by constraining the addition of bias voltage in spiking neurons. The proposed differential encoding method can effectively exploit correlations within the data and improve the performance of the proposed model, and the new learning rule can take full advantage of the modulating effect of bias on the spike firing threshold. We evaluate the proposed model on the environmental sound dataset RWCP and the image datasets MNIST and Fashion-MNIST, and assign various conditions to test its learning ability and robustness. The experimental results demonstrate that the proposed model achieves near-optimal results with a smaller time step, maintaining the highest accuracy and robustness with less training data. On the MNIST dataset, compared with the original spiking neural network with the same network structure, we achieved a 0.39% accuracy improvement.

Open Access Article

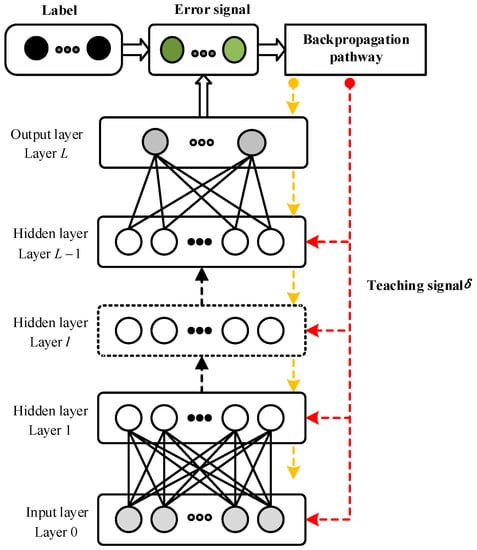

Supervised Learning Algorithm Based on Spike Train Inner Product for Deep Spiking Neural Networks

by

Xianghong Lin, Zhen Zhang and Donghao Zheng

Cited by 2 | Viewed by 1801

Abstract

By mimicking the hierarchical structure of the human brain, deep spiking neural networks (DSNNs) can gradually extract features from lower to higher levels and improve the processing of spatio-temporal information. Due to their complex hierarchical structure and implicit nonlinear mechanisms, formulating spike-train-level supervised learning methods for DSNNs remains an important problem in this research area. Based on the definition of kernel functions and the spike train inner product (STIP), as well as the idea of error backpropagation (BP), this paper first proposes a deep supervised learning algorithm for DSNNs named BP-STIP. Furthermore, to alleviate the intrinsic weight transport problem of the BP mechanism, feedback alignment (FA) and broadcast alignment (BA) mechanisms are used to optimize the error feedback mode of BP-STIP, yielding two further deep supervised learning algorithms named FA-STIP and BA-STIP. In the experiments, the effectiveness of the three proposed DSNN algorithms is verified on the MNIST handwritten digit benchmark dataset, and the influence of different kernel functions on the learning performance of DSNNs of different network scales is analyzed. Experimental results show that the FA-STIP and BA-STIP algorithms achieve 94.73% and 95.65% classification accuracy, respectively, and exhibit better learning performance and stability than the benchmark algorithm BP-STIP.
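
The spike train inner product can be illustrated with spike trains stored as arrays of spike times: the inner product sums a kernel over all pairs of spikes, and the squared distance it induces gives an error between an actual and a desired output train. The Python sketch below assumes Gaussian and Laplacian kernels with illustrative widths; it shows only the inner product and error, not the authors' BP, FA, or BA weight updates.

```python
import numpy as np


def gaussian_kernel(d, sigma=2.0):
    """Gaussian kernel on spike-time differences (width sigma is an assumption)."""
    return np.exp(-d**2 / (2 * sigma**2))


def laplacian_kernel(d, tau=2.0):
    """Laplacian kernel on spike-time differences (width tau is an assumption)."""
    return np.exp(-np.abs(d) / tau)


def stip(s_i, s_j, kernel=gaussian_kernel):
    """Inner product of two spike trains given as spike-time arrays (e.g. in ms)."""
    s_i = np.asarray(s_i, dtype=float)
    s_j = np.asarray(s_j, dtype=float)
    if s_i.size == 0 or s_j.size == 0:
        return 0.0
    diffs = s_i[:, None] - s_j[None, :]  # all pairwise spike-time differences
    return float(kernel(diffs).sum())


def spike_train_error(actual, desired, kernel=gaussian_kernel):
    """Half the squared distance induced by the inner product:
    E = 0.5 * (<a, a> - 2 <a, d> + <d, d>)."""
    return 0.5 * (stip(actual, actual, kernel)
                  - 2 * stip(actual, desired, kernel)
                  + stip(desired, desired, kernel))


desired = [5.0, 15.0, 30.0]
actual = [6.0, 14.0, 33.0]
print(spike_train_error(actual, desired))
print(spike_train_error(actual, desired, laplacian_kernel))
```

Because both kernels are positive definite, the error is never negative and is zero only when the two trains coincide, which is what makes it usable as a training objective.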

Open Access Systematic Review

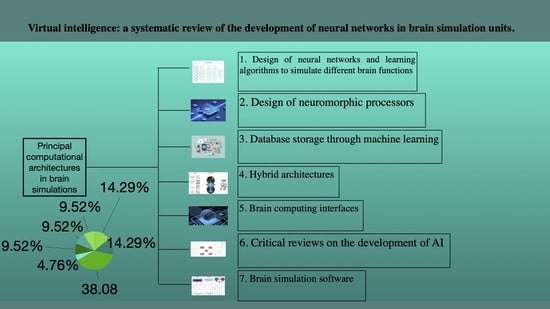

Virtual Intelligence: A Systematic Review of the Development of Neural Networks in Brain Simulation Units

by

Jesús Gerardo Zavala Hernández and Liliana Ibeth Barbosa-Santillán

Cited by 1 | Viewed by 2167

Abstract

The functioning of the brain has long been a complex and enigmatic phenomenon. From Descartes' first approaches to this organ as the vehicle of the mind to contemporary studies that consider the brain as an organ with emergent activities of primary and higher order, it has been the object of continuous exploration. A more profound study of brain functions has become possible through imaging techniques, digital platforms or simulators implemented in different programming languages, and the use of multiple processors to emulate the speed at which synaptic processes are executed in the brain. The use of various computational architectures raises innumerable questions about the possible scope of disciplines such as computational neuroscience in the study of the brain, and about the possibility of gaining deep knowledge through different devices with the support of information technology (IT). One of the main interests of cognitive science is the opportunity to develop human intelligence in a system or mechanism. This paper takes the principal articles from three databases oriented to the computational sciences (EbscoHost Web, IEEE Xplore and Compendex Engineering Village) to understand the current objectives of neural networks in studying the brain. A possible use of this kind of technology is to develop artificial intelligence (AI) systems that can replicate more complex human brain tasks (such as those involving consciousness). The results present the principal research findings and topics in developing studies of neural networks in computational neuroscience. One of the principal developments is the use of neural networks as the basis of many computational architectures, using multiple techniques such as neuromorphic chips, MRI images and brain–computer interfaces (BCI) to enhance the capacity to simulate brain activities.
This article aims to review and analyze studies on the development of different computational architectures that focus on affecting various brain activities through neural networks. The aim is to determine the orientation and main lines of research on this topic and to work toward routes that allow interdisciplinary collaboration.

Open Access Article

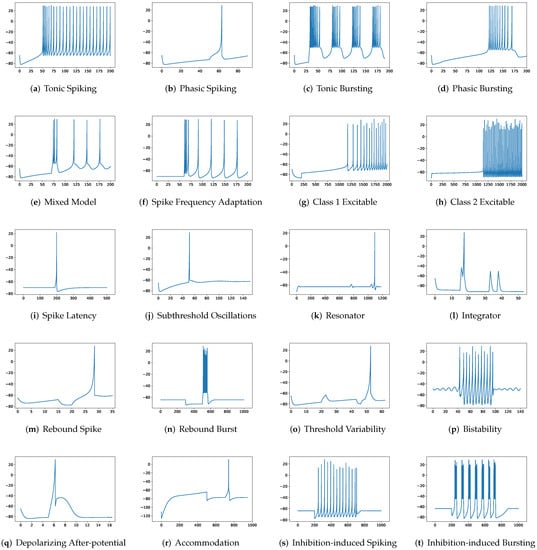

Regularized Spectral Spike Response Model: A Neuron Model for Robust Parameter Reduction

by

Yinuo Zeng, Wendi Bao, Liying Tao, Die Hu, Zonglin Yang, Liren Yang and Delong Shang

Viewed by 1689

Abstract

The modeling procedure of current biological neuron models is hindered by either hyperparameter optimization or overparameterization, which limits their application to a variety of biologically realistic tasks. This article proposes a novel neuron model called the Regularized Spectral Spike Response Model (RSSRM) to address these issues. The selection of hyperparameters is avoided by the model structure and fitting strategy, while the number of parameters is constrained by regularization techniques. Twenty firing simulation experiments indicate the superiority of RSSRM. In particular, after pruning more than 99% of its parameters, RSSRM with 100 parameters achieves an RMSE of 5.632 in membrane potential prediction, a VRD of 47.219, and an F1-score of 0.95 in spike train forecasting with correct timing (±1.4 ms), which are 25%, 99%, 55%, and 24% better than the average of other neuron models with the same number of parameters in RMSE, VRD, F1-score, and correct timing, respectively. Moreover, RSSRM with 100 parameters achieves a memory use of 10 KB and a runtime of 1 ms during inference, which is more efficient than the Izhikevich model.

Open Access Article

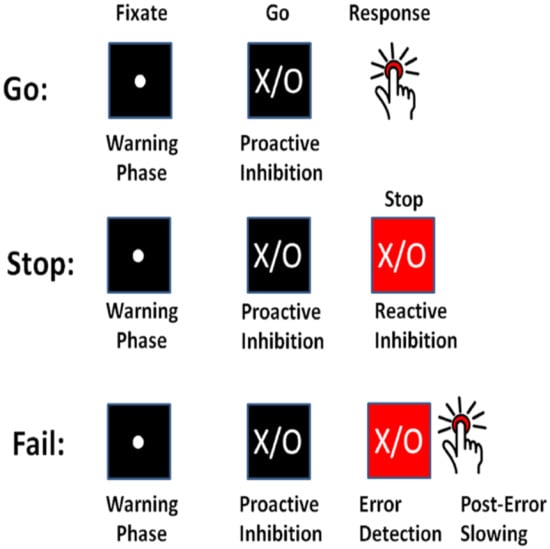

The Asymmetric Laplace Gaussian (ALG) Distribution as the Descriptive Model for the Internal Proactive Inhibition in the Standard Stop Signal Task

by

Mohsen Soltanifar, Michael Escobar, Annie Dupuis, Andre Chevrier and Russell Schachar

Cited by 2 | Viewed by 2995

Abstract

Measurements of the reactive and proactive inhibition components of response inhibition within the stop-signal paradigm have been of particular interest to researchers since the 1980s. While frequentist nonparametric and Bayesian parametric methods have been proposed to precisely estimate the entire distribution of reactive inhibition, quantified by stop signal reaction times (SSRT), there is as yet no method in the stop signal task literature to precisely estimate the entire distribution of proactive inhibition. We define proactive inhibition as the difference between go reaction times for go trials following stop trials and those following go trials, and introduce an Asymmetric Laplace Gaussian (ALG) model to describe its distribution. The proposed method rests on two assumptions: independent trial-type (go/stop) reaction times and Ex-Gaussian (ExG) models. Results indicated that the four-parameter ALG model uniquely describes the proactive inhibition distribution and its key shape features, and that its hazard function is monotonically increasing, as are those of its three-parameter ExG components. In conclusion, the four-parameter ALG model can be used for both response inhibition components, and its parameters and descriptive and shape statistics can be used to classify both components across a spectrum of clinical conditions.
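
Under the two stated assumptions, the construction can be checked numerically. If go RTs after stop trials (X) and after go trials (Y) are independent ExG variables, then X - Y is a Gaussian with mean μ1 - μ2 and variance σ1² + σ2², plus the difference of two independent exponentials, which is an asymmetric Laplace variable; this yields four parameters (μ, σ, τ1, τ2). The Python sketch below uses hypothetical parameter values in milliseconds and compares the closed-form mean and variance with a Monte Carlo sample. It reflects our reading of the model; the paper's parameterization and fitting procedure may differ.

```python
import numpy as np


def sample_exg(rng, mu, sigma, tau, size):
    """Ex-Gaussian draws: Normal(mu, sigma) plus an independent Exponential with mean tau."""
    return rng.normal(mu, sigma, size) + rng.exponential(tau, size)


def alg_moments(mu1, s1, t1, mu2, s2, t2):
    """Mean and variance of X - Y for independent X ~ ExG(mu1, s1, t1), Y ~ ExG(mu2, s2, t2).

    X - Y = Normal(mu1 - mu2, sqrt(s1**2 + s2**2)) + (E1 - E2), where E1 - E2 is
    asymmetric Laplace; the four ALG parameters are (mu1 - mu2, sqrt(s1**2 + s2**2), t1, t2).
    """
    return (mu1 - mu2) + (t1 - t2), s1**2 + s2**2 + t1**2 + t2**2


rng = np.random.default_rng(0)
n = 200_000
# Hypothetical go-RT parameters (ms): X after stop trials, Y after go trials.
x = sample_exg(rng, 420, 40, 90, n)
y = sample_exg(rng, 400, 35, 80, n)
d = x - y  # simulated proactive inhibition
mean, var = alg_moments(420, 40, 90, 400, 35, 80)
print(d.mean(), mean)
print(d.var(), var)
```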

Open Access Article

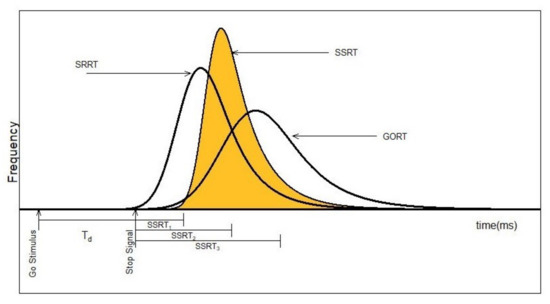

A Bayesian Mixture Modelling of Stop Signal Reaction Time Distributions: The Second Contextual Solution for the Problem of Aftereffects of Inhibition on SSRT Estimations

by

Mohsen Soltanifar, Michael Escobar, Annie Dupuis and Russell Schachar

Cited by 5 | Viewed by 2874

Abstract

The distribution of single Stop Signal Reaction Times (SSRT) in the stop signal task (SST) has been modelled with two general methods: a nonparametric method by Hans Colonius (1990) and a Bayesian parametric method by Dora Matzke, Gordon Logan and colleagues (2013). These methods assume that the preceding trial type (go/stop) has an equal impact on the SSRT distributional estimation, without addressing the relaxed assumption. This study presents the required model by considering a two-state mixture model for the SSRT distribution. It then compares the Bayesian parametric single SSRT and mixture SSRT distributions in the usual stochastic order at the individual and population levels under the ex-Gaussian (ExG) distributional format. It shows that, compared to a single SSRT distribution, the mixture SSRT distribution is more varied, more positively skewed, more leptokurtic, and larger in stochastic order. The size of these disparities also depends on the choice of weights in the mixture SSRT distribution. This study confirms that mixture SSRT indices, as a constant or as a distribution, are significantly larger than their single SSRT counterparts in the related order. This result offers a vital improvement in SSRT estimation.
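
A two-state mixture of ExG components makes the claim that the mixture SSRT distribution is "more varied" concrete: by the law of total variance, the mixture variance equals the weighted within-state variance plus a between-state term w(1 - w)(m1 - m2)². The Python sketch below assumes one component for stop trials preceded by go trials and one for those preceded by stop trials; the parameter values and the weight are hypothetical, and the Bayesian estimation itself is not shown.

```python
import math


def exg_pdf(x, mu, sigma, tau):
    """Ex-Gaussian density: Normal(mu, sigma) convolved with Exponential(mean tau)."""
    lam = 1.0 / tau
    return (lam / 2) * math.exp(lam / 2 * (2 * mu + lam * sigma**2 - 2 * x)) * \
        math.erfc((mu + lam * sigma**2 - x) / (math.sqrt(2) * sigma))


def mixture_pdf(x, w, comp_a, comp_b):
    """Two-state mixture: weight w on comp_a, 1 - w on comp_b; comps are (mu, sigma, tau)."""
    return w * exg_pdf(x, *comp_a) + (1 - w) * exg_pdf(x, *comp_b)


def exg_mean_var(mu, sigma, tau):
    return mu + tau, sigma**2 + tau**2


def mixture_mean_var(w, comp_a, comp_b):
    """Law of total variance: within-state variance plus between-state spread."""
    (m1, v1), (m2, v2) = exg_mean_var(*comp_a), exg_mean_var(*comp_b)
    mean = w * m1 + (1 - w) * m2
    var = w * v1 + (1 - w) * v2 + w * (1 - w) * (m1 - m2) ** 2
    return mean, var


after_go, after_stop = (200.0, 25.0, 40.0), (230.0, 30.0, 60.0)  # (mu, sigma, tau) in ms
w = 0.7  # hypothetical share of stop trials preceded by go trials
mean, var = mixture_mean_var(w, after_go, after_stop)
grid = [0.5 * k for k in range(3001)]  # 0 to 1500 ms in 0.5 ms steps
mass = sum(mixture_pdf(x, w, after_go, after_stop) for x in grid) * 0.5
print(mean, var, round(mass, 4))
```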

Open Access Article

A Comprehensive sLORETA Study on the Contribution of Cortical Somatomotor Regions to Motor Imagery

by

Mustafa Yazici, Mustafa Ulutas and Mukadder Okuyan

Cited by 6 | Viewed by 3350

Abstract

Brain–computer interface (BCI) is a technology that converts brain signals into commands for controlling external devices. Researchers have designed and built many interfaces and applications over the last couple of decades. In healthcare, BCI is used for prevention, detection, diagnosis, rehabilitation, and restoration. In this paper, electroencephalogram (EEG) signals are analyzed to help paralyzed people in rehabilitation. EEG signals recorded from five healthy subjects are used in this study. The sensor-level EEG signals are converted to source signals by solving the inverse problem. Then, the cortical sources are calculated using the sLORETA method at nine regions marked by a neurophysiologist. Features are extracted from the cortical sources using the common spatial pattern (CSP) method and classified by a support vector machine (SVM). Both the sensor signals and the computed cortical signals corresponding to motor imagery of the hand and foot are used to train the SVM. Signals outside the training set are then used to test the classification performance. Activity band-pass filtered at 0.1–30 Hz and in the mu rhythm band is also analyzed for the EEG signals. At the cortical level, the classification performance and recognition of the imagery improved, reaching up to 100% under some conditions. The cortical source signals in the regions contributing to motor commands are investigated and used to improve the classification of motor imagery.
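
The CSP step can be sketched as a generalized eigenvalue problem on class-average spatial covariances. The Python/NumPy sketch below whitens the composite covariance, diagonalizes one whitened class covariance, and keeps filters from both ends of the spectrum; the log-normalized variances of the filtered trials are the features that would then be passed to a classifier such as an SVM (not shown). The synthetic data, array shapes, and function names are assumptions for illustration, not the authors' sLORETA-based pipeline.

```python
import numpy as np


def class_cov(trials):
    """Mean trace-normalized spatial covariance.

    trials: (n_trials, n_channels, n_samples), assumed zero-mean (e.g. band-passed EEG).
    """
    covs = [x @ x.T / np.trace(x @ x.T) for x in trials]
    return np.mean(covs, axis=0)


def csp_filters(trials_a, trials_b, n_pairs=1):
    """Return 2 * n_pairs spatial filters (rows): first ones favor class b, last ones class a."""
    ca, cb = class_cov(trials_a), class_cov(trials_b)
    d, u = np.linalg.eigh(ca + cb)                        # composite covariance
    whiten = np.diag(d ** -0.5) @ u.T                     # maps ca + cb to the identity
    lam, basis = np.linalg.eigh(whiten @ ca @ whiten.T)   # eigenvalues ascending in [0, 1]
    filters = basis.T @ whiten                            # rows are spatial filters
    pick = np.r_[0:n_pairs, len(lam) - n_pairs:len(lam)]
    return filters[pick]


def csp_features(trials, filters):
    """Log of the normalized variance of each spatially filtered trial."""
    z = np.einsum("fc,tcs->tfs", filters, trials)
    var = z.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


rng = np.random.default_rng(0)
hand = rng.normal(size=(30, 4, 250))
foot = rng.normal(size=(30, 4, 250))
hand[:, 0, :] *= 3.0  # synthetic: extra variance on channel 0 for one class
foot[:, 1, :] *= 3.0  # and on channel 1 for the other
filters = csp_filters(hand, foot, n_pairs=1)
f_hand, f_foot = csp_features(hand, filters), csp_features(foot, filters)
print(f_hand.mean(axis=0), f_foot.mean(axis=0))
```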

Open Access Article

Estimating the Parameters of Fitzhugh–Nagumo Neurons from Neural Spiking Data

by

Resat Ozgur Doruk and Laila Abosharb

Cited by 10 | Viewed by 2816

Abstract

A theoretical and computational study on estimating the parameters of a single Fitzhugh–Nagumo model is presented. This work differs from conventional system identification in that the measured data consist only of discrete and noisy neural spiking data (spike times), which contain no amplitude information. The goal is achieved by applying a maximum likelihood estimation approach in which the likelihood function is derived from point process statistics. The firing rate of the neuron was assumed to be a nonlinear map (logistic sigmoid) of the membrane potential variable. The stimulus data were generated by a phased cosine Fourier series with fixed amplitude and frequency but a random phase, drawn anew at each repeated trial. Various values of amplitude, stimulus component size, and sample size were applied to examine the effect of the stimulus on the identification process. Results are presented in tabular and graphical form, including statistical analysis (mean and standard deviation of the estimates). We also tested our model using realistic data from previous research (H1 neurons of blowflies) and found that the estimates tend to converge.
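
The point-process likelihood at the core of this approach can be sketched as follows. The Python code below simulates a FitzHugh–Nagumo neuron with forward Euler, maps the membrane variable through a logistic sigmoid to a firing rate, draws spikes, and evaluates the inhomogeneous-Poisson log-likelihood Σ log λ(t_k) - ∫ λ(t) dt that an estimator would maximize over the model parameters. The standard FHN form, the sigmoid constants, the stimulus frequencies, and the time step are all assumptions; the optimizer and the authors' exact parameterization are not shown.

```python
import numpy as np


def simulate_fhn(a, b, tau, stim, dt, v0=-1.2, w0=-0.6):
    """Forward-Euler FitzHugh–Nagumo (assumed standard form):
    dv/dt = v - v**3/3 - w + I(t),  tau * dw/dt = v + a - b*w."""
    v = np.empty(len(stim))
    v[0] = v0
    w = w0
    for k in range(1, len(stim)):
        vk = v[k - 1]
        v[k] = vk + dt * (vk - vk**3 / 3 - w + stim[k - 1])
        w = w + dt * (vk + a - b * w) / tau
    return v


def firing_rate(v, lam_max=1.0, v_half=1.0, slope=0.2):
    """Hypothetical logistic map from the membrane variable v to a firing rate."""
    return lam_max / (1.0 + np.exp(-(v - v_half) / slope))


def log_likelihood(spike_idx, lam, dt):
    """Inhomogeneous Poisson log-likelihood on a time grid: sum log lam(t_k) - integral of lam."""
    return float(np.sum(np.log(lam[spike_idx])) - np.sum(lam) * dt)


def phased_cosine_stimulus(t, amp, freqs, rng):
    """Sum of cosines with fixed amplitude and frequencies and a fresh random phase per trial."""
    phases = rng.uniform(0, 2 * np.pi, len(freqs))
    return amp * np.cos(2 * np.pi * np.outer(t, freqs) + phases).sum(axis=1)


rng = np.random.default_rng(0)
dt = 0.05
t = np.arange(0, 500, dt)
stim = phased_cosine_stimulus(t, amp=0.4, freqs=[0.01, 0.02, 0.03], rng=rng)
v = simulate_fhn(0.7, 0.8, 12.5, stim, dt)
lam = firing_rate(v)
spikes = np.flatnonzero(rng.random(len(t)) < lam * dt)  # Bernoulli approximation per bin
print(len(spikes), log_likelihood(spikes, lam, dt))
```

In an estimation loop, `simulate_fhn` and `firing_rate` would be re-run for each candidate parameter set and the negative log-likelihood handed to a numerical optimizer.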

Open Access Article

EEG Signals Feature Extraction Based on DWT and EMD Combined with Approximate Entropy

by

Na Ji, Liang Ma, Hui Dong and Xuejun Zhang

Cited by 88 | Viewed by 6753

Abstract

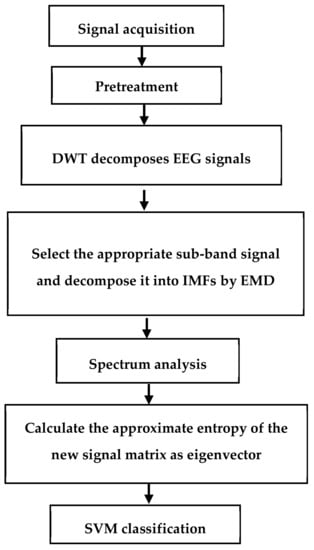

The classification recognition rate of motor imagery is a key factor in improving the performance of brain–computer interfaces (BCI). We therefore propose a feature extraction method based on discrete wavelet transform (DWT), empirical mode decomposition (EMD), and approximate entropy. First, the electroencephalogram (EEG) signal is decomposed into a series of narrow-band signals with DWT; each sub-band signal is then decomposed with EMD to obtain a set of stationary time series called intrinsic mode functions (IMFs). Second, the appropriate IMFs are selected for signal reconstruction, and the approximate entropy of the reconstructed signal is computed as the corresponding feature vector. Finally, a support vector machine (SVM) performs the classification. The proposed method solves the problem of wide frequency band coverage during EMD and further improves the classification accuracy of motor imagery EEG signals.
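
The approximate-entropy feature can be sketched directly from Pincus' definition, ApEn(m, r) = Φ^m(r) - Φ^(m+1)(r), where Φ^m averages the log fraction of length-m templates lying within tolerance r (Chebyshev distance) of each template. The NumPy sketch below assumes m = 2 and r = 0.2 times the signal's standard deviation, common defaults rather than values from the paper; the DWT, EMD, and IMF-selection steps that produce the reconstructed signal are omitted.

```python
import numpy as np


def approximate_entropy(x, m=2, r=None):
    """Pincus' approximate entropy ApEn(m, r) of a 1-D signal."""
    x = np.asarray(x, dtype=float)
    if r is None:
        r = 0.2 * np.std(x)  # common heuristic tolerance (an assumption here)

    def phi(mm):
        # All overlapping templates of length mm: shape (n - mm + 1, mm).
        templates = np.lib.stride_tricks.sliding_window_view(x, mm)
        # Chebyshev distance between every pair of templates (self-matches included).
        dist = np.max(np.abs(templates[:, None, :] - templates[None, :, :]), axis=2)
        c = np.mean(dist <= r, axis=1)  # fraction of templates within r of each template
        return np.mean(np.log(c))

    return phi(m) - phi(m + 1)


rng = np.random.default_rng(1)
t = np.arange(300)
regular = np.sin(2 * np.pi * t / 25)
irregular = rng.uniform(-1, 1, 300)
print(approximate_entropy(regular), approximate_entropy(irregular))
```

The pairwise distance matrix costs O(N²) memory, which is acceptable for short EEG epochs but would need chunking for long recordings.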

Open Access Article

Computational Modeling of the Photon Transport, Tissue Heating, and Cytochrome C Oxidase Absorption during Transcranial Near-Infrared Stimulation

by

Mahasweta Bhattacharya and Anirban Dutta

Cited by 27 | Viewed by 5442

Abstract

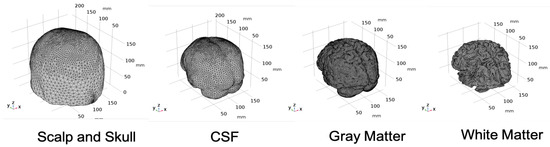

Transcranial near-infrared stimulation (tNIRS) has been proposed as a tool to modulate cortical excitability. However, the underlying mechanisms are not clear, and the heating effects on brain tissue need investigation due to increased near-infrared (NIR) absorption by water and fat. Moreover, the risk of localized heating of tissues (including the skin) during optical stimulation of the brain is a concern. Estimating localized tissue heating is challenging because of the light's interaction with the tissues' constituents, which depends on the ratio of the scattering and absorption properties of each constituent. Apart from tissue heating that can modulate cortical excitability (“photothermal effects”), the other mechanism reported in the literature is the stimulation of the mitochondria in cells, which are active in adenosine triphosphate (ATP) synthesis. In the mitochondrial respiratory chain, Complex IV, also known as cytochrome c oxidase (CCO), is the fourth unit and contains three copper atoms. The absorption peaks of CCO lie in the visible (420–450 nm and 600–700 nm) and near-infrared (760–980 nm) spectral regions, which have been shown to be promising for low-level light therapy (LLLT), also known as “photobiomodulation”. While the much higher CCO absorption peaks in the visible spectrum can be used for photobiomodulation of the skin, 810 nm has been proposed for non-invasive brain stimulation (using tNIRS) due to the optical window in the NIR spectral region. In this article, we applied a computational approach to delineate the “photothermal effects” from “photobiomodulation”, i.e., to estimate the amount of light absorbed individually by each chromophore in the brain tissue (with constant scattering) and the related tissue heating. Photon migration simulations were performed for motor cortex tNIRS based on a prior work that used a 500 mW cm⁻² light source placed on the scalp. We simulated photon migration at 630 nm and 700 nm (red spectral region) and 810 nm (near-infrared spectral region). We found a temperature increase below 0.25 °C in the scalp and a minimal temperature increase of less than 0.04 °C in the gray matter at 810 nm. Similar heating was found at 630 nm and 700 nm, which are used for LLLT, so photothermal effects are postulated to be unlikely in the brain tissue.
