Virtual and Augmented Reality Systems

(Closed)


Editors

Prof. Dr. Chang-Hun Kim


Guest Editor

Department of Computer Science & Engineering, Korea University, Seoul 02841, Korea

Interests: VR/AR; CG; visual simulation

Topical Collection Information

Dear Colleagues,

This Special Issue of Applied Sciences, entitled “Virtual and Augmented Reality Systems”, aims to present recent advances in virtual and augmented reality. Virtual and augmented reality are forms of human–computer interaction in which a real or imaginary environment is simulated and users interact with and manipulate that world. Virtual and augmented reality systems have emerged as a disruptive technology that enhances the performance of existing computer graphics techniques and tackles related intractable problems in human–computer interaction. Recent developments in VR/AR technology and hardware, such as the Oculus Rift, HTC Vive, and smartphone-based HMDs, have increased public interest in virtual reality. Many related studies have been published, covering graphical simulation, modeling, user interfaces, and AI technologies. This Special Issue is an opportunity for the scientific community to present recent high-quality research on any branch of virtual and augmented reality.

Topics of interest include but are not limited to:

- Human–computer interactions in the virtual and augmented environment;

- 3D user interaction in virtual and augmented space;

- Shared VR and AR technologies;

- Untact (contactless) technologies in virtual and augmented space;

- Graphical simulation for VR and AR systems;

- AI-based technologies for VR and AR systems;

- Immersive analytics and visualization;

- Multiuser and distributed systems;

- Graphical advancements in virtual and augmented space.

Prof. Chang-Hun Kim

Prof. Soo Kyun Kim

Guest Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as they are accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for the submission of manuscripts are available on the Instructions for Authors page. Applied Sciences is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2400 CHF (Swiss Francs).

Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Virtual reality

- Virtual reality application

- Virtual reality system

- Augmented reality

- Augmented reality application

- Augmented reality system

- Human–computer interaction

- 3D user interfaces (3DUIs)

- Multisensory experiences

- Virtual environments

- AI Problems in VR and AR

Published Papers (34 papers)

Open Access Article

Real-Time Interaction for 3D Pixel Human in Virtual Environment

by

Haoke Deng, Qimeng Zhang, Hongyu Jin and Chang-Hun Kim

Cited by 2 | Viewed by 2024

Abstract

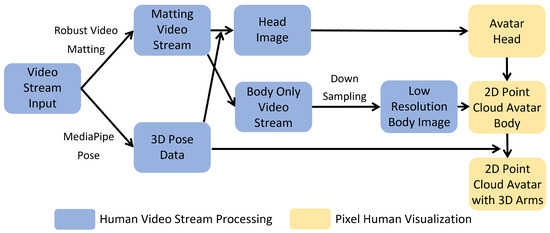


Conducting realistic interactions while communicating efficiently in online conferences is highly desirable but challenging. In this work, we propose a novel pixel-style virtual avatar, generated in real time, for interacting with virtual objects in virtual conferences. It consists of a 2D segmented head video stream for real-time facial expressions and a 3D point cloud body for realistic interactions, both of which are generated from the RGB video input of a monocular webcam. We obtain a human-only video stream with a human matting method and generate the 3D avatar’s arms with a 3D pose estimation method, which improves stereoscopic realism and the sense of interaction for conference participants when they interact with virtual objects. Our approach bridges the gap between 2D video conferences and 3D virtual avatars and combines the advantages of both. We evaluated our pixel-style avatar in a user study; the results showed that our method is more efficient than various existing avatar types.
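
A human matting method typically outputs a per-pixel alpha matte. The minimal NumPy sketch below is an illustration under stated assumptions, not the authors' pipeline: it assumes frames are float arrays in [0, 1] and that the matte has already been produced by some matting model, which is not shown. It composites the matted person over a virtual-scene background with the standard "over" operator, C = αF + (1 − α)B. The function name `composite_over` is hypothetical.

```python
import numpy as np


def composite_over(foreground: np.ndarray, alpha: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Blend a matted foreground over a background with C = alpha * F + (1 - alpha) * B.

    foreground, background: H x W x 3 float arrays with values in [0, 1].
    alpha: H x W float matte in [0, 1] (1 = person, 0 = background), assumed to come
           from a separate human-matting model that is not shown here.
    """
    if foreground.shape != background.shape:
        raise ValueError("foreground and background must have the same shape")
    a = np.clip(alpha, 0.0, 1.0)[..., np.newaxis]  # add a channel axis so the matte broadcasts over RGB
    return a * foreground + (1.0 - a) * background


# Usage: a 2 x 2 frame; the left column is the person, the right column is background.
fg = np.ones((2, 2, 3))            # white "person" pixels
bg = np.zeros((2, 2, 3))           # black virtual-scene background
matte = np.array([[1.0, 0.0],
                  [0.5, 0.0]])     # soft edge at the bottom-left pixel
out = composite_over(fg, matte, bg)
print(out[:, :, 0])
```

Fractional alpha values at the edge of the matte blend the person into the scene instead of producing a hard cut-out.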


Open Access Article

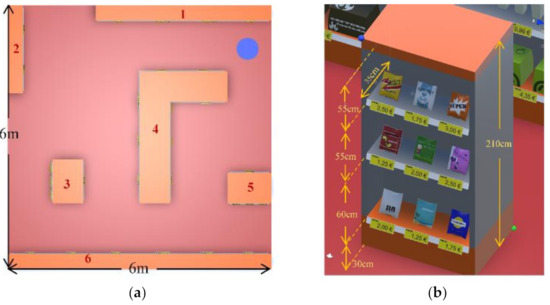

Development of Low-Fidelity Virtual Replicas of Products for Usability Testing

by

Janell S. Joyner, Aaron Kong, Julius Angelo, William He and Monifa Vaughn-Cooke

Cited by 1 | Viewed by 2480

Abstract


Designers perform early-stage formative usability tests with low-fidelity prototypes to improve the design of new products. This low-tech prototype style reduces the manufacturing resources required but limits the functions that can be assessed. Recent advances in technology enable designers to create low-fidelity 3D models for users to engage with in a virtual environment. Three-dimensional models communicate design concepts but are not often used in formative usability testing. The proposed method describes how to create a virtual replica of a product by assessing key human interaction steps, and it addresses the limitations of translating those steps into a virtual environment. In addition, the paper provides a framework for evaluating the usability of a product in a virtual setting, with a specific emphasis on low-resource online testing with the user population. A pilot study was performed to assess subjects’ experience with the proposed approach and to determine how the virtual online simulation affected performance. The study outcomes demonstrated that subjects were able to successfully interact with the virtual replica and found the simulation realistic. Designers can follow this method to perform formative usability tests earlier and incorporate subject feedback into future iterations of their designs, which can improve safety and product efficacy.


Open Access Article

A Stray Light Detection Model for VR Head-Mounted Display Based on Visual Perception

by

Hung-Chung Li, Meng-Che Tsai and Tsung-Xian Lee

Cited by 4 | Viewed by 1846

Abstract


In recent years, the general public and the technology industry have favored the stereoscopic vision, immersive experience, and real-time visual information delivery of virtual reality (VR) and augmented reality (AR). The device carrier, the head-mounted display (HMD), is recognized as one of the most promising next-generation computing and communication platforms. An HMD is a virtual-image optical display device that combines optical lens modules with binocular displays. Its visual effects are much more complex than those of a traditional display, and they also affect image quality. This research investigated the visual threshold of stray light for three kinds of VR HMD devices and proposed a qualitative model derived from psychophysical experiments and measurements of images on VR devices. The threshold data recorded in the psychophysical stray-light perception experiment were used as the training targets. VR display images captured by a wide-angle camera were processed through a series of image-processing steps to extract variables in the region of interest. Machine learning was then used to establish an evaluation method for stray light as perceived by the human eye. Four supervised learning algorithms, K-Nearest Neighbor (KNN), Logistic Regression (LR), Support Vector Machine (SVM), and Random Forest (RF), were compared, and the accuracy of the established model was about 90% for all four. The results also showed that different threshold percentages can be used to label data as required, which suggests that finer-grained inspection specifications may be feasible in the future. This research aimed to provide a fast and effective qualitative stray-light evaluation method to serve as a basis for future HMD optical system design and quality control. Stray-light evaluation is thus expected to become one of the critical indicators of image quality and to be applicable to VR and AR content design.
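
Of the four classifiers compared, k-nearest neighbours is the simplest to show in full. The pure-Python sketch below assumes each captured image has already been reduced to a small numeric feature vector (the abstract does not list the actual features) and that labels are 1 for "stray light perceived" and 0 otherwise. The feature names and values are made up for illustration and are not the study's data.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training samples (Euclidean distance)."""
    dists = sorted((math.dist(x, query), y) for x, y in zip(train_x, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]


def accuracy(train_x, train_y, test_x, test_y, k=3):
    """Fraction of test samples whose predicted label matches the true label."""
    hits = sum(knn_predict(train_x, train_y, q, k) == y for q, y in zip(test_x, test_y))
    return hits / len(test_y)


# Hypothetical features per captured image: (stray-light luminance ratio, stray-light area fraction)
train_x = [(0.05, 0.10), (0.08, 0.12), (0.30, 0.40), (0.35, 0.45), (0.06, 0.08), (0.32, 0.50)]
train_y = [0, 0, 1, 1, 0, 1]   # 1 = perceived by observers, 0 = not perceived
test_x = [(0.07, 0.09), (0.33, 0.42)]
test_y = [0, 1]
print(accuracy(train_x, train_y, test_x, test_y))
```

An odd `k` avoids ties in a two-class vote. The other three algorithms would be swapped in behind the same `accuracy` comparison.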


Open Access Article

Smart Factory Using Virtual Reality and Online Multi-User: Towards a Metaverse for Experimental Frameworks

by

Luis Omar Alpala, Darío J. Quiroga-Parra, Juan Carlos Torres and Diego H. Peluffo-Ordóñez

Cited by 51 | Viewed by 7313

Abstract


Virtual reality (VR) has been brought closer to the general public over the past decade as it has become increasingly available on desktop and mobile platforms. As a result, consumer-grade VR may redefine how people learn by creating engaging “hands-on” training experiences. Today, VR applications leverage rich interactivity in virtual environments without real-world consequences to optimize training programs in companies and educational institutions. The main objective of this article was therefore to improve collaboration and communication practices in 3D virtual worlds using VR and the metaverse, focusing on the educational and production sectors in the smart factory. A key premise of our work is that the characteristics of the real environment can be replicated in a virtual world through digital twins, in which new, configurable, innovative, and valuable ways of working and learning collaboratively can be created using avatar models. To this end, we present a proposal for an experimental framework that constitutes a crucial first step in formalizing collaboration in virtual environments through VR-powered metaverses. The VR system includes functional components, object-oriented configurations, an advanced core, interfaces, and an online multi-user system. We present the first application case of the framework with VR in a metaverse, focused on the smart factory, which showcases the most relevant Industry 4.0 technologies. Functionality tests were carried out with users and evaluated through usability metrics, which showed satisfactory results regarding the framework’s potential for educational and commercial use. Finally, the experimental results show that a commercial software framework for VR games can accelerate the development of metaverse experiments that connect users from different parts of the world in real time.
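
The abstract does not name the usability metrics that were used. Purely as an assumed example, the widely used System Usability Scale (SUS) is scored as follows. There are ten items, each answered on a 1 to 5 scale. Odd-numbered (positively worded) items contribute response − 1, and even-numbered (negatively worded) items contribute 5 − response. The sum is then multiplied by 2.5 to give a score from 0 to 100. The responses below are hypothetical.

```python
def sus_score(responses):
    """Compute a System Usability Scale score (0-100) from ten 1-5 Likert responses.

    Responses are in questionnaire order, so item 1 is at index 0.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("responses must be on a 1-5 scale")
    total = 0
    for index, r in enumerate(responses):
        if index % 2 == 0:      # items 1, 3, 5, 7, 9: positively worded
            total += r - 1
        else:                   # items 2, 4, 6, 8, 10: negatively worded
            total += 5 - r
    return total * 2.5


# Usage: one participant's hypothetical answers
print(sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]))
```

Per-participant scores would then be averaged across users to summarize a framework's perceived usability.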


Open Access Article

Full-Body Motion Capture-Based Virtual Reality Multi-Remote Collaboration System

by

Eunchong Ha, Gongkyu Byeon and Sunjin Yu

Cited by 6 | Viewed by 4195

Abstract


Various realistic collaboration technologies have emerged in the context of the COVID-19 pandemic. However, because existing virtual reality (VR) collaboration systems generally use inverse kinematics driven by a head-mounted display and controllers, the user and character cannot be accurately matched, and the VR experience is therefore less immersive. In this study, we propose a VR remote collaboration system that uses motion capture to improve immersion. In the system, a user wearing motion capture equipment drives a VR character that performs the same movements as the user. However, errors can occur in the virtual environment when the body size of the motion capture user differs from that of the virtual character. To reduce this error, a technique that synchronizes the size of the character with the user’s body was implemented and tested. The experimental results show that the average error between the height of the test subject and that of the virtual character was 0.465 cm. To verify the feasibility of the motion-capture-based VR remote collaboration system, we confirmed that three motion capture users could collaborate remotely using a Photon server.
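
The abstract says the character's size is synchronized with the user's body but does not say how. One simple approach is uniform scaling. The user's height is measured from motion capture, divided by the character's rest height, and the character's joints are scaled about a root joint at floor level. This is shown here as a hypothetical sketch, not the authors' technique. The joint names, the y-up convention, and the values are all assumptions.

```python
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


def scale_character(joints: Dict[str, Vec3], root: str,
                    user_height: float, character_height: float) -> Dict[str, Vec3]:
    """Uniformly scale all joint positions about the root joint so the character matches the user's height."""
    if character_height <= 0:
        raise ValueError("character_height must be positive")
    s = user_height / character_height
    rx, ry, rz = joints[root]
    return {name: (rx + s * (x - rx), ry + s * (y - ry), rz + s * (z - rz))
            for name, (x, y, z) in joints.items()}


def height_error(user_height: float, joints: Dict[str, Vec3], root: str, top: str) -> float:
    """Absolute difference between the user's height and the character's root-to-top height (y is up)."""
    return abs(user_height - (joints[top][1] - joints[root][1]))


# Usage with a hypothetical three-joint character (metres): 1.80 m tall, user 1.72 m tall.
joints = {"feet": (0.0, 0.0, 0.0), "hips": (0.0, 0.9, 0.0), "head_top": (0.0, 1.8, 0.0)}
scaled = scale_character(joints, root="feet", user_height=1.72, character_height=1.8)
print(round(scaled["head_top"][1], 3), height_error(1.72, scaled, "feet", "head_top"))
```

Uniform scaling preserves the character's proportions. Matching individual limb lengths would need per-bone scale factors instead.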


Open Access Article

An Adaptive UI Based on User-Satisfaction Prediction in Mixed Reality

by

Yujin Choi and Yoon Sang Kim

Viewed by 1598

Abstract


As mixed reality (MR) attracts growing attention, various studies on user satisfaction in MR have been conducted. The user interface (UI) is one of the key factors that affect interaction satisfaction in MR. On conventional platforms such as mobile devices and personal computers, various studies have examined adaptive UIs, and such studies have recently been conducted in MR environments as well. However, few studies have provided an adaptive UI based on interaction satisfaction. Therefore, in this paper, we propose a method that provides an adaptive UI in MR based on interaction-satisfaction prediction. The proposed method predicts interaction satisfaction from interaction information (gaze, hand, head, and object) and adapts the UI according to the predicted satisfaction. To develop the method, an experiment was performed to collect data, and a user-satisfaction-prediction model was built from the collected data. Next, to evaluate the method, an application that provides an adaptive UI using the developed user-satisfaction-prediction model was implemented. Experimental results with this application confirmed that the proposed method could improve user satisfaction compared with the conventional method.
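
The abstract says satisfaction is predicted from gaze, hand, head, and object information, and that the UI adapts to the prediction, but it gives neither the model nor the adaptation rule. The sketch below assumes a logistic model with hand-picked weights. It also assumes a rule that switches to an enlarged, nearer layout when predicted satisfaction falls below a threshold. The feature names, weights, threshold, and layout names are all hypothetical and do not come from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class InteractionFeatures:
    gaze_dwell_s: float        # hypothetical: time spent looking at the target UI element
    hand_retries: int          # hypothetical: failed hand-selection attempts
    head_rotation_deg: float   # hypothetical: head rotation needed to reach the element
    object_distance_m: float   # hypothetical: distance from the user to the UI object


# Hypothetical weights; a real model would be fitted to experimental data.
WEIGHTS = {"gaze_dwell_s": -0.8, "hand_retries": -0.9,
           "head_rotation_deg": -0.03, "object_distance_m": -0.5}
BIAS = 3.0


def predict_satisfaction(f: InteractionFeatures) -> float:
    """Return a predicted probability (0-1) that the user is satisfied with the interaction."""
    z = BIAS + sum(w * getattr(f, name) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def choose_layout(f: InteractionFeatures, threshold: float = 0.5) -> str:
    """Hypothetical adaptation rule: enlarge the UI and bring it closer when satisfaction is predicted to be low."""
    return "default" if predict_satisfaction(f) >= threshold else "enlarged_near"


# Usage: a smooth interaction versus a struggling one.
good = InteractionFeatures(gaze_dwell_s=0.5, hand_retries=0, head_rotation_deg=10.0, object_distance_m=0.6)
poor = InteractionFeatures(gaze_dwell_s=2.0, hand_retries=3, head_rotation_deg=60.0, object_distance_m=1.5)
print(choose_layout(good), choose_layout(poor))
```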


Open Access Article

Autostereoscopic 3D Display System for 3D Medical Images

by

Dongwoo Kang, Jin-Ho Choi and Hyoseok Hwang

Cited by 7 | Viewed by 5272

Abstract

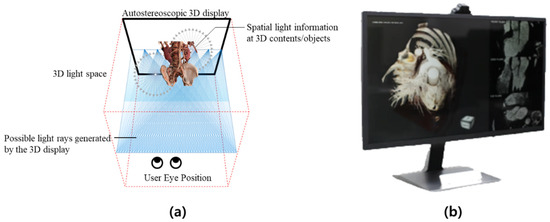


Recent advances in autostereoscopic three-dimensional (3D) display systems have led to innovations in consumer electronics and vehicle systems (e.g., head-up displays). However, medical images with stereoscopic depth provided by 3D displays have yet to be developed sufficiently for widespread adoption in diagnostics. Indeed, many stereoscopic 3D displays necessitate special 3D glasses that are unsuitable for clinical environments. This paper proposes a novel glasses-free 3D autostereoscopic display system based on an eye tracking algorithm and explores its viability as a 3D navigator for cardiac computed tomography (CT) images. The proposed method uses a slit-barrier with a backlight unit, which is combined with an eye tracking method that exploits multiple machine learning techniques to display 3D images. To obtain high-quality 3D images with minimal crosstalk, the light field 3D directional subpixel rendering method combined with the eye tracking module is applied using a user’s 3D eye positions. Three-dimensional coronary CT angiography images were volume rendered to investigate the performance of the autostereoscopic 3D display systems. The proposed system was trialed by expert readers, who identified key artery structures faster than with a conventional two-dimensional display without reporting any discomfort or 3D fatigue. With the proposed autostereoscopic 3D display systems, the 3D medical image navigator system has the potential to facilitate faster diagnoses with improved accuracy.
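
The system combines a slit barrier with eye tracking so that each subpixel reaches the intended eye. The geometric core of that idea can be sketched in one dimension. Trace the ray from a tracked eye position to a subpixel, then check whether it crosses the barrier plane inside a slit opening. This is a simplified illustration under assumed geometry, not the authors' light field directional subpixel rendering. The panel sits at z = 0, the barrier at z = `gap`, and the eyes at z = `eye_z`. Slits of width `aperture` repeat every `pitch`, and all numbers are made up.

```python
def visible_through_slit(subpixel_x: float, eye_x: float, eye_z: float,
                         gap: float, pitch: float, aperture: float) -> bool:
    """True if the ray from the eye at (eye_x, eye_z) to the subpixel at (subpixel_x, 0)
    crosses the barrier plane z = gap inside a slit.

    Slits are assumed to span [k * pitch, k * pitch + aperture) for every integer k.
    """
    # Intersect the eye-to-subpixel ray with the barrier plane by linear interpolation.
    x_at_barrier = subpixel_x + (eye_x - subpixel_x) * gap / eye_z
    # Python's % returns a non-negative result for a positive pitch, even for negative x.
    return x_at_barrier % pitch < aperture


def assign_view(subpixel_x, left_eye_x, right_eye_x, eye_z, gap, pitch, aperture):
    """Label a subpixel by which tracked eye can see it: 'L', 'R', 'both' (crosstalk), or 'none'."""
    left = visible_through_slit(subpixel_x, left_eye_x, eye_z, gap, pitch, aperture)
    right = visible_through_slit(subpixel_x, right_eye_x, eye_z, gap, pitch, aperture)
    if left and right:
        return "both"
    if left:
        return "L"
    if right:
        return "R"
    return "none"


# Usage with made-up geometry in millimetres: eyes 65 mm apart at 600 mm,
# barrier 3 mm in front of the panel, 0.1 mm subpixels, 0.2 mm slit pitch.
labels = [assign_view(i * 0.1, -32.5, 32.5, 600.0, 3.0, 0.2, 0.1) for i in range(4)]
print(labels)
```

When the tracker reports new eye positions, the same test is simply re-run, so the left/right assignment follows the viewer. Subpixels labelled "both" would be sources of crosstalk.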


Open Access Article

Benefit Analysis of Gamified Augmented Reality Navigation System

by

Chun-I Lee

Cited by 1 | Viewed by 2522

Abstract

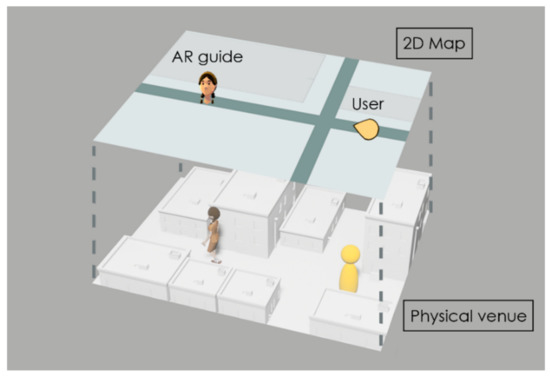


Augmented reality (AR) technology has received much attention in recent years. Users can view AR content through mobile devices, meaning that AR technology can be easily incorporated into applications in various fields. The auxiliary role of AR in exhibitions, and its influence on and benefits for the behavior of exhibition visitors, are worth investigating. To understand whether gamified AR and general AR navigation systems affect visitor behavior, we designed an AR app for an art exhibition. Using GPS and AR technology, the app presents a virtual guide that provides friendly guide services and helps visitors navigate outdoor paths and alleys in the exhibition venue, showing them the best route. The AR navigation system has three primary functions: (1) it allows visitors to scan exhibit labels to access detailed text and audio introductions, (2) it offers a game mode and a free mode for navigation, and (3) it collects data on the exhibition-viewing behavior associated with each mode and uploads them to the cloud for analysis. The results of the experiment revealed no significant differences in exhibition-viewing time or distance travelled between the two modes. However, the paths in the game mode were more regular, which means that participants were more likely to view the exhibition as instructed with the aid of gamified AR. This insight is useful for controlling crowd flow.
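
The app records GPS data, and the study compares the distance travelled in each mode, but the abstract does not say how distance was computed. A common approach is to sum the great-circle (haversine) distances between consecutive GPS fixes. The sketch below makes three assumptions: a spherical Earth with a mean radius of 6,371 km, fixes given in decimal degrees, and no GPS noise filtering, which real traces would need.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def path_length_m(track):
    """Total distance travelled along a list of (lat, lon) fixes, summing consecutive segments."""
    return sum(haversine_m(*p, *q) for p, q in zip(track, track[1:]))


# Usage: one degree of longitude along the equator is roughly 111.2 km.
print(round(haversine_m(0.0, 0.0, 0.0, 1.0), 1))
```

Per-visitor path lengths computed this way could then be compared between the game mode and the free mode.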


Open Access Article

Virtual Reality Metaverse System Supplementing Remote Education Methods: Based on Aircraft Maintenance Simulation

by

Hyeonju Lee, Donghyun Woo and Sunjin Yu

Cited by 84 | Viewed by 11422

Abstract

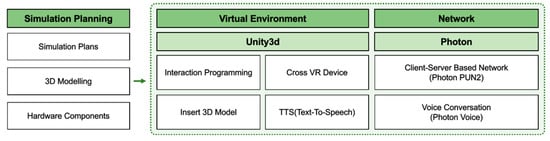


Due to the COVID-19 pandemic, there has been a shift from in-person to remote education, with most students taking classes via video meetings. This change discourages students from participating actively in class. In particular, video education has limitations in replacing practical classes, which require both theoretical and empirical knowledge. In this study, we propose a system that incorporates virtual reality and metaverse methods into the classroom to compensate for the shortcomings of existing remote models of practical education. Based on the proposed system, we developed an aircraft maintenance simulation and conducted an experiment comparing our system with a video training method. To measure educational effectiveness, knowledge-acquisition and retention tests were conducted, and presence was investigated through survey responses. The results of the experiment show that the group using the proposed system scored higher than the video training group on both knowledge tests. Because responses to the presence questionnaire confirmed that participants felt a sense of spatial presence, the usability of the proposed system was judged to be appropriate.
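
The abstract reports that the VR group scored higher on both knowledge tests but does not name the statistical test. Purely as an illustration, Welch's t statistic compares two group means without assuming equal variances. Its degrees of freedom come from the Welch–Satterthwaite equation. The scores below are made up. A p-value would then come from the t distribution with those degrees of freedom, for example via SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent samples."""
    va = variance(a) / len(a)   # squared standard error of group a (sample variance / n)
    vb = variance(b) / len(b)   # squared standard error of group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Usage with hypothetical test scores (not the study's data).
vr_group = [8, 9, 10]
video_group = [5, 6, 7]
t, df = welch_t(vr_group, video_group)
print(round(t, 4), round(df, 2))
```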


Open Access Article

Visual Simulation of Turbulent Foams by Incorporating the Angular Momentum of Foam Particles into the Projective Framework

by

Ki-Hoon Kim, Jung Lee, Chang-Hun Kim and Jong-Hyun Kim

Cited by 1 | Viewed by 2322

Abstract


In this paper, we propose an angular momentum-based advection technique that can express turbulent foam effects. Because foam particles are strongly bound to the motion of the underlying fluid, their motion is viscous, and clumping sometimes occurs. This problem is a decisive factor that makes realistic foam effects difficult to express. Since foam particles are a secondary effect that depends on the motion of the underlying water, exaggerating the foam or making it more lively inevitably requires tuning the motion of the underlying water and then readjusting the foam particles. This process is cumbersome: readjusting the foam effects requires changing the motion of the underlying water, and such scenes are difficult to produce because the water and foam effects must change at the same time. In this paper, we present a method that maintains an angular momentum-based force from water particles without tuning the motion of the underlying water. Based on this force, we restore the lost turbulent flow through additional advection of the foam particles. In addition, our method can be integrated with screen-space projection frameworks, allowing us to fully embrace the advantages of that approach. Our method improves the turbulence of foam particles by minimizing their viscous motion without tuning the motion of the underlying water, so that lively foam effects can be expressed.
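
The abstract does not give the formulation of the angular momentum-based force. The 2D sketch below is a simplified illustration under our own assumptions, not the authors' method. It estimates the local angular velocity of neighbouring water particles about their centroid as ω = L / I, where L = Σ m (r_x v_y − r_y v_x) and I = Σ m |r|². It then applies an extra advection step that rotates a foam particle about that centroid by ω Δt. Neighbour search, kernel weighting, and the screen-space projection framework are omitted, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def centroid(points: List[Vec2]) -> Vec2:
    """Mean position of a non-empty list of 2D points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def local_angular_velocity(pivot: Vec2, positions: List[Vec2],
                           velocities: List[Vec2], masses: List[float]) -> float:
    """Angular velocity (rad/s) of neighbouring water particles about `pivot`, estimated as L / I."""
    L = 0.0  # total angular momentum (scalar z component in 2D)
    I = 0.0  # total moment of inertia about the pivot
    for (px, py), (vx, vy), m in zip(positions, velocities, masses):
        rx, ry = px - pivot[0], py - pivot[1]
        L += m * (rx * vy - ry * vx)
        I += m * (rx * rx + ry * ry)
    return L / I if I > 0.0 else 0.0


def swirl_advect(foam: Vec2, pivot: Vec2, omega: float, dt: float) -> Vec2:
    """Extra advection step: rotate the foam particle about `pivot` by the angle omega * dt."""
    c, s = math.cos(omega * dt), math.sin(omega * dt)
    dx, dy = foam[0] - pivot[0], foam[1] - pivot[1]
    return (pivot[0] + c * dx - s * dy, pivot[1] + s * dx + c * dy)


# Usage: four water particles in rigid rotation at 2 rad/s about the origin.
positions = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
velocities = [(-2.0 * y, 2.0 * x) for x, y in positions]   # v = omega x r
masses = [1.0] * 4
pivot = centroid(positions)
omega = local_angular_velocity(pivot, positions, velocities, masses)
foam = swirl_advect((0.5, 0.0), pivot, omega, dt=math.pi / 4)
print(round(omega, 3), (round(foam[0], 3), round(foam[1], 3)))
```

Because the swirl is computed from the water particles but applied only to the foam, the underlying water motion is left untouched, which mirrors the goal stated in the abstract.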


Open Access Article

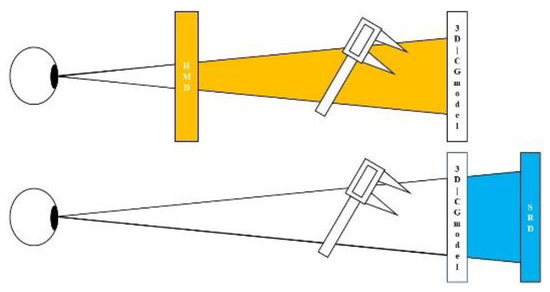

Comparison of the Observation Errors of Augmented and Spatial Reality Systems

by

Masataka Ariwa, Tomoki Itamiya, So Koizumi and Tetsutaro Yamaguchi

Cited by 2 | Viewed by 2382

Abstract


The use of 3D technologies such as virtual reality (VR) and augmented reality (AR) has intensified in recent years. The mainstream AR devices in use today are head-mounted displays (HMDs), which, due to specification limitations, may not perform to their full potential within a distance of 1.0 m. The spatial reality display (SRD) is another system that enables stereoscopic vision with the naked eye; its recommended working distance is 30.0~75.0 cm. In a medical context, it is crucial to evaluate the observation accuracy of each device within 1.0 m. Here, 3D-CG models were created from dental models, and the observation errors of 3D-CG models displayed within 1.0 m by the HMD and the SRD were verified. The measurement error results showed that the HMD model differed significantly from the control model (Model) under some conditions, whereas the SRD model had the same measurement accuracy as the Model. The measured errors were 0.29~1.92 mm for the HMD and 0.02~0.59 mm for the SRD. Visual analog scale scores for distinctness were significantly higher for the SRD than for the HMD. Three-dimensionality did not show any relationship with measurement error. In conclusion, the measured values show a specification limitation for using HMDs within 1.0 m. In the future, it will be essential to consider the characteristics of each device when selecting AR devices. Here, we evaluated the accuracy of 3D-CG models displayed in space using two different types of AR systems.
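
The reported ranges (0.29~1.92 mm for the HMD, 0.02~0.59 mm for the SRD) summarize per-measurement errors. Assuming each error is the absolute difference between a distance measured on the displayed 3D-CG model and the corresponding reference distance, the arithmetic is sketched below. The landmark names and values are hypothetical, not the study's measurements.

```python
def absolute_errors(measured_mm, reference_mm):
    """Absolute error |measured - reference| for each landmark distance, in millimetres."""
    return {k: abs(measured_mm[k] - reference_mm[k]) for k in reference_mm}


def error_range(errors):
    """Return the (min, max) error, the form in which the abstract reports its results."""
    values = list(errors.values())
    return min(values), max(values)


# Hypothetical reference distances and distances measured on a displayed model.
reference = {"incisor_width": 8.50, "molar_width": 10.20, "arch_depth": 25.00}
displayed = {"incisor_width": 8.95, "molar_width": 9.60, "arch_depth": 26.10}
lo, hi = error_range(absolute_errors(displayed, reference))
print(f"{lo:.2f}~{hi:.2f} mm")
```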


Open Access Article

Learning First Aid with a Video Game

by

Cristina Rebollo, Cristina Gasch, Inmaculada Remolar and Daniel Delgado

Cited by 2 | Viewed by 3628

Abstract


Any citizen can be involved in a situation that requires basic first aid knowledge. For this reason, it is important to be trained in this kind of activity. Serious games have been presented as a good option for integrating entertainment into the coaching process. This work presents a video game for mobile platforms that facilitates education and training in the PWA (Protect, Warn, Aid) first aid protocol. Users have to overcome a series of challenges that bring theoretical concepts closer to practice. Augmented Reality technology has been used to make it easy to change the gameplay point of view. To make each game look different, neural networks have been implemented to make the behavior of the Non-Playable Characters autonomous. Finally, to evaluate the quality and playability of the application, as well as motivation and content learning, several experiments were carried out with a sample of 50 people aged between 18 and 26. The results confirm the playability and attractiveness of the video game, an increase in interest in learning first aid, and better retention of the concepts covered in the game. The results support that this application facilitates and improves the learning of first aid protocols, making it more enjoyable, attractive, and practical.


Open Access Article

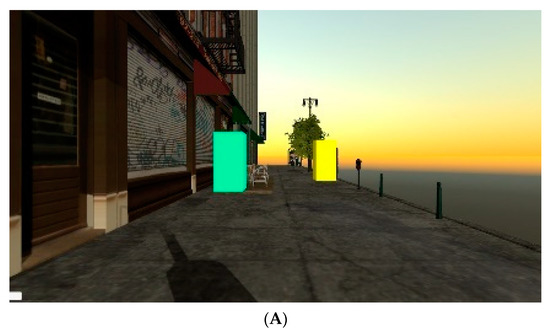

Judgments of Object Size and Distance across Different Virtual Reality Environments: A Preliminary Study

by

Hannah Park, Nafiseh Faghihi, Manish Dixit, Jyotsna Vaid and Ann McNamara

Cited by 4 | Viewed by 2385

Abstract


Emerging technologies offer the potential to expand the domain of the future workforce to extreme environments, such as outer space and alien terrains. To understand how humans navigate in such environments, which lack familiar spatial cues, this study examined spatial perception in three types of environments simulated using virtual reality. We examined participants’ ability to estimate the size and distance of stimuli under conditions of minimal, moderate, or maximum visual cues, corresponding to an environment simulating outer space, an alien terrain, or a typical cityscape, respectively. The findings show underestimation of distance in both the maximum-cue and the minimum-cue environments but a tendency toward overestimation of distance in the moderate environment. We further observed that depth estimation was substantially better in the minimum-cue environment than in the other two environments. However, height was estimated more accurately in the environment with maximum cues (cityscape) than in the environment with minimum cues (outer space). More generally, our results suggest that familiar visual cues facilitated better estimation of size and distance than unfamiliar cues. In fact, the presence of unfamiliar and perhaps misleading visual cues (characterizing the alien terrain environment) was more disruptive to distance and size perception than a total absence of visual cues. The findings have implications for training workers to better adapt to extreme environments.
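
The abstract does not state how under- and overestimation were quantified. One common measure is the ratio of estimated to actual distance, where a ratio below 1 indicates underestimation and above 1 indicates overestimation. The sketch below assumes that measure and a hypothetical ±5% tolerance band for "accurate". The distances are made up and only echo the qualitative pattern in the abstract.

```python
from statistics import mean


def estimation_ratio(estimates, actuals):
    """Mean ratio of estimated to actual distance; < 1 indicates underestimation, > 1 overestimation."""
    return mean(e / a for e, a in zip(estimates, actuals))


def classify(ratio, tolerance=0.05):
    """Label a mean ratio as under-, over-, or accurate estimation within a hypothetical tolerance."""
    if ratio < 1 - tolerance:
        return "underestimation"
    if ratio > 1 + tolerance:
        return "overestimation"
    return "accurate"


# Hypothetical data in metres (not the study's results).
actual = [5.0, 10.0, 20.0]
outer_space_estimates = [4.0, 8.0, 15.0]
alien_terrain_estimates = [6.0, 11.0, 23.0]
print(classify(estimation_ratio(outer_space_estimates, actual)),
      classify(estimation_ratio(alien_terrain_estimates, actual)))
```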


Open Access Article

Virtual Reality Research: Design Virtual Education System for Epidemic (COVID-19) Knowledge to Public

by

Yongkang Xing, Zhanti Liang, Conor Fahy, Jethro Shell, Kexin Guan, Yuxi Liu and Qian Zhang

Cited by 7 | Viewed by 3390

Abstract


Advances in information and communication technologies have created a range of new products and services for the well-being of society. Virtual Reality (VR) technology has shown enormous potential in educational, commercial, and medical fields. The recent COVID-19 outbreak highlighted poor global performance in communicating epidemic knowledge to the public. Considering the potential of VR, the research begins by analyzing how VR technology can be used to improve public education about COVID-19. The research uses Virtual Storytelling Technology (VST) to promote enthusiasm for user participation. A plot-based VR education system is proposed to provide immersive, exploratory educational experiences. The system includes three primary modules: the Tutorial Module, the Preparation Module, and the Investigation Module. To avoid confusing users, the research avoids highly complicated professional medical content and uses interactive, entertaining methods to improve user participation. To evaluate the performance efficiency of the system, we conducted performance evaluations and a user study with 80 participants. The experimental results show that, compared with traditional education, the VR education system can be used as an effective educational tool for fundamental knowledge about the epidemic (COVID-19). VR technology can help government agencies and public organizations increase public understanding of the spread of the epidemic (COVID-19).

Full article

►▼

Show Figures

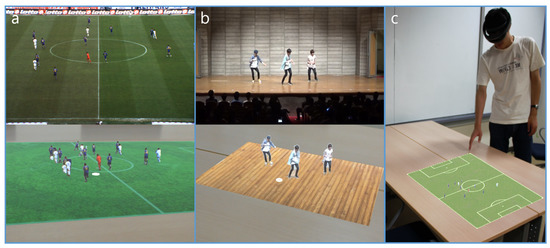

Open Access | Feature Paper | Article

MonoMR: Synthesizing Pseudo-2.5D Mixed Reality Content from Monocular Videos

by

Dong-Hyun Hwang and Hideki Koike

Viewed by 2389

Abstract

MonoMR is a system that synthesizes pseudo-2.5D content from monocular videos for mixed reality (MR) head-mounted displays (HMDs). Unlike conventional systems that require multiple cameras, MonoMR can be used by casual end-users to generate MR content from a single camera. To synthesize the content, the system first detects people in the video sequence with a deep neural network; each detected person’s pseudo-3D position is then estimated through a homography matrix by a novel algorithm that we propose. Finally, the person’s texture is extracted using a background subtraction algorithm and placed at the estimated 3D position. The synthesized content can be played on an MR HMD, and users can freely change their viewpoint and the content’s position. To evaluate the efficiency and interactive potential of MonoMR, we conducted performance evaluations and a user study with 12 participants. Moreover, we demonstrated the feasibility and usability of MonoMR for generating pseudo-2.5D content through three example application scenarios.
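
The abstract does not spell out the position-estimation algorithm. As a minimal sketch of the general idea only, assume a precomputed 3×3 homography `H` that maps image pixels to ground-plane coordinates, and assume the bottom-center of a detected person's bounding box approximates where the feet touch the ground; applying `H` in homogeneous coordinates then gives a pseudo-3D floor position. The function names and the foot-point heuristic are hypothetical, not MonoMR's implementation.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Box = Tuple[float, float, float, float]  # (left, top, width, height) in pixels


def apply_homography(H: Matrix3, u: float, v: float) -> Tuple[float, float]:
    """Map an image pixel (u, v) to ground-plane coordinates (x, y) with a 3x3 homography."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to return to Cartesian ground coordinates.
    return x / w, y / w


def foot_point(box: Box) -> Tuple[float, float]:
    """Hypothetical heuristic: the bottom-center of the box is where the feet meet the floor."""
    left, top, width, height = box
    return left + width / 2.0, top + height


def person_ground_position(H: Matrix3, box: Box) -> Tuple[float, float]:
    """Estimate a detected person's floor position from their bounding box."""
    u, v = foot_point(box)
    return apply_homography(H, u, v)
```

Dividing by the third homogeneous coordinate is what makes this a projective mapping rather than an affine one; with `H` estimated from four or more known floor points, the same function can place each detected person on the floor plane frame by frame.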

Open Access | Article

An ARCore-Based Augmented Reality Campus Navigation System

by

Fangfang Lu, Hao Zhou, Lingling Guo, Jingjing Chen and Licheng Pei

Cited by 11 | Viewed by 10217

Abstract

Currently, the route-planning functions of 2D/3D campus navigation systems on the market cannot process indoor and outdoor localization information simultaneously, and their UI experience is limited by the service platforms they run on. This paper designs an ARCore-based augmented reality campus navigation system to address these problems. First, the proposed system uses ARCore to augment real scenes with 3D information. Second, a visual-inertial odometry algorithm is proposed for real-time localization and map generation on mobile devices. Finally, rich Unity3D scripts are designed to enhance users’ autonomy and enjoyment during navigation. Indoor and outdoor navigation experiments were carried out at the Lingang campus of Shanghai University of Electric Power. Compared with Gaode’s AR outdoor navigation system, the proposed system achieves more precise outdoor localization by running visual-inertial odometry on the mobile phone, and it overlays 3D information on the real scene, enriching the user’s interactive experience. Furthermore, four groups of students were selected for system testing and evaluation. Compared with traditional systems such as Gaode map or Internet media, the experimental results show that our system could improve the effectiveness and usability of learning on campus.
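
The abstract does not say which route-planning algorithm the system uses. One common way to handle indoor and outdoor waypoints together is to put both in a single graph and run a shortest-path search over it. The sketch below assumes Dijkstra's algorithm, directed edges weighted by walking distance in metres, and a hypothetical set of campus nodes; none of these names come from the paper.

```python
import heapq
from typing import Dict, List, Tuple

# Node -> list of (neighbour, walking distance in metres). Directed for simplicity.
Graph = Dict[str, List[Tuple[str, float]]]

# Hypothetical waypoints: outdoor nodes lead to building entrances, which lead to indoor nodes.
CAMPUS: Graph = {
    "gate": [("library_door", 120.0), ("lab_door", 200.0)],
    "library_door": [("lab_door", 60.0), ("library_f1", 5.0)],
    "lab_door": [("lab_f1", 5.0)],
    "lab_f1": [("lab_room_204", 30.0)],
    "library_f1": [],
}


def shortest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm over one graph that mixes outdoor and indoor waypoints."""
    dist = {start: 0.0}
    prev: Dict[str, str] = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue  # stale queue entry
        visited.add(node)
        if node == goal:
            break
        for nbr, weight in graph.get(node, []):
            candidate = d + weight
            if candidate < dist.get(nbr, float("inf")):
                dist[nbr] = candidate
                prev[nbr] = node
                heapq.heappush(queue, (candidate, nbr))
    if goal not in dist:
        return float("inf"), []
    # Walk the predecessor links back from the goal to rebuild the route.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return dist[goal], path[::-1]
```

Because indoor and outdoor nodes live in the same graph, a single query such as `shortest_route(CAMPUS, "gate", "lab_room_204")` can return a route that crosses from the outdoor path network into a building without switching systems.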

Open Access | Article

A Novel Anatomy Education Method Using a Spatial Reality Display Capable of Stereoscopic Imaging with the Naked Eye

by

Tomoki Itamiya, Masahiro To, Takeshi Oguchi, Shinya Fuchida, Masato Matsuo, Iwao Hasegawa, Hiromasa Kawana and Katsuhiko Kimoto

Cited by 7 | Viewed by 3379

Abstract

Several efforts have been made to use virtual reality (VR) and augmented reality (AR) for medical and dental education and surgical support. Current methods still require users to wear devices such as head-mounted displays (HMDs) and smart glasses, which pose challenges for hygiene management and long-term use. Additionally, the user’s inter-pupillary distance must be measured and entered into the device settings each time to display 3D images accurately, which is impractical for daily use. We developed and implemented a novel anatomy education method using a spatial reality display capable of stereoscopic viewing with the naked eye, without an HMD or smart glasses. In this study, we developed two new applications: (1) a head and neck anatomy education application, which can display 3D-CG models of the skeleton and blood vessels of the head and neck region using 3D human body data available free of charge from public research institutes, and (2) a DICOM image autostereoscopic viewer, which can automatically convert 2D CT/MRI/CBCT image data into 3D-CG models. In total, 104 students at the School of Dentistry experienced and evaluated the system, and the results suggest its usefulness. A stereoscopic display that does not require a head-mounted display is highly useful and promising for anatomy education.

Full article

►▼

Show Figures

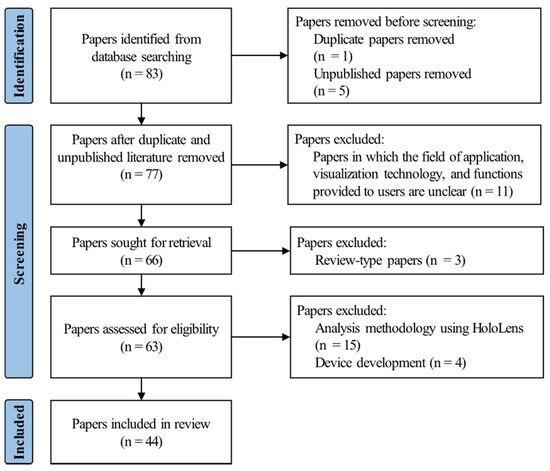

Open Access | Review

Review of Microsoft HoloLens Applications over the Past Five Years

by

Sebeom Park, Shokhrukh Bokijonov and Yosoon Choi

Cited by 72 | Viewed by 11199

Abstract

Since Microsoft HoloLens first appeared in 2016, it has been used in various industries. This study reviews academic papers on the applications of HoloLens in several industries. The review summarizes the results of 44 papers (dated between January 2016 and December 2020) and outlines the research trends in applying HoloLens to different industries. This study found that HoloLens is employed in medical and surgical aids and systems, medical education and simulation, industrial engineering, architecture, civil engineering, and other engineering fields. The findings contribute towards classifying the current uses of HoloLens in various industries and identifying the types of visualization techniques and functions employed.

Open Access | Article

Effects of the Weight and Balance of Head-Mounted Displays on Physical Load

by

Kodai Ito, Mitsunori Tada, Hiroyasu Ujike and Keiichiro Hyodo

Cited by 16 | Viewed by 3122

Abstract

To maximize user experience in VR environments, optimizing the comfort of head-mounted displays (HMDs) is essential. To date, few studies have investigated the fatigue induced by wearing commercially available HMDs. Here, we focus on the effects of HMD weight and balance on the physical load experienced by the user. We conducted an experiment in which participants completed a shooting game while wearing HMDs with different weights and balances. Afterwards, the participants completed questionnaires to assess their levels of discomfort and fatigue. The results show that the weight of the HMD affects user fatigue, and that the degree of fatigue depends on the position of the center of mass. They also suggest that the torque at the neck joint corresponds to the physical load imparted by the HMD. These results indicate that improving HMD comfort requires considering both weight reduction and weight balance during HMD design.
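
The abstract links physical load to torque at the neck joint. As a simplified static sketch (not the authors' biomechanical model), assume the neck acts as a pivot and each mass, such as the HMD body or a rear counterweight, sits at a signed horizontal lever arm from it, positive toward the face; the gravitational torque the neck must resist is then tau = g * sum(m_i * x_i). The masses and offsets in the test are hypothetical.

```python
from typing import Iterable, Tuple

G = 9.81  # gravitational acceleration, m/s^2


def neck_torque(masses: Iterable[Tuple[float, float]]) -> float:
    """Static gravitational torque (N*m) about an assumed neck pivot.

    Each entry is (mass_kg, horizontal_offset_m); positive offsets lie in front of
    the pivot, so a positive result tends to flex the head forward.
    """
    return G * sum(m * x for m, x in masses)


def combined_center_of_mass(masses: Iterable[Tuple[float, float]]) -> float:
    """Horizontal position (m) of the combined center of mass relative to the pivot."""
    items = list(masses)
    total = sum(m for m, _ in items)
    if total <= 0:
        raise ValueError("total mass must be positive")
    return sum(m * x for m, x in items) / total
```

In this toy model, adding a 0.2 kg counterweight 0.10 m behind the pivot to a 0.5 kg HMD whose mass sits 0.10 m in front lowers the torque from about 0.49 N*m to about 0.29 N*m even though total weight rises, which is one way to see why the abstract stresses balance as well as weight reduction.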

Open Access | Review

A Narrative Review of Virtual Reality Applications for the Treatment of Post-Traumatic Stress Disorder

by

Sorelle Audrey Kamkuimo, Benoît Girard and Bob-Antoine J. Menelas

Cited by 4 | Viewed by 2788

Abstract

Virtual reality (VR) technologies allow for the creation of 3D environments that can be exploited at the human level, maximizing humans’ use of perceptual skills through their sensory channels and enabling them to actively influence the course of events in the virtual environment (VE). As such, they constitute a significant asset in the treatment of post-traumatic stress disorder (PTSD) via exposure therapy. In this article, we review the VR tools that have been developed to date for the treatment of PTSD. The article analyzes how VR technologies can be exploited from a sensorimotor and interactive perspective. The findings suggest that existing tools emphasize sensory stimulation to the detriment of interaction. Finally, we propose new ideas for better integrating sensorimotor activities and interaction into VR exposure therapy for PTSD.

Full article

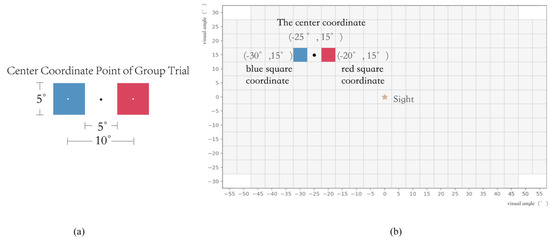

Open Access | Article

Study on Hand–Eye Coordination Area with Bare-Hand Click Interaction in Virtual Reality

by

Xiaozhou Zhou, Yu Jin, Lesong Jia and Chengqi Xue

Cited by 6 | Viewed by 2265

Abstract

In virtual reality, users’ input and output interactions take place in three-dimensional space, and bare-hand clicking is one of the most common interaction methods. Apart from device limitations, bare-hand click interaction in virtual reality involves head, eye, and hand movements. Consequently, click performance varies with target location in the binocular field of view. In this study, we explored the optimal interaction area for hand–eye coordination within the binocular field of view in a 3D virtual environment (VE). We conducted a bare-hand click experiment in a VE and combined the click performance data, namely click accuracy and click duration, following a gradient descent method. The experimental results show that click performance is significantly influenced by the area in which the target is located, and that the performance data and subjective click preferences are highly consistent. Combining reaction time and click accuracy, the optimal operating area for bare-hand clicking in virtual reality spans horizontally from 20° to the left to 30° to the right, and vertically from 15° upward to 20° downward. These results have implications for guidelines and applications in bare-hand click interface design in the proximal space of virtual reality.
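
The reported optimal area can be turned into a simple layout check for interface designers. This sketch assumes a head-centered frame with x to the right, y up, and z forward, measures azimuth and elevation from the forward direction, and treats the bounds as inclusive; these conventions and the function names are assumptions, and only the angular limits come from the abstract.

```python
import math
from typing import Tuple

# Optimal bare-hand click area reported in the abstract, in degrees.
H_LEFT, H_RIGHT = 20.0, 30.0  # 20 deg to the left, 30 deg to the right
V_UP, V_DOWN = 15.0, 20.0     # 15 deg upward, 20 deg downward


def target_angles(x: float, y: float, z: float) -> Tuple[float, float]:
    """Azimuth and elevation (degrees) of a target in an assumed head frame:
    x to the right, y up, z forward along the viewing direction."""
    azimuth = math.degrees(math.atan2(x, z))                    # positive = right
    elevation = math.degrees(math.atan2(y, math.hypot(x, z)))  # positive = up
    return azimuth, elevation


def in_optimal_click_area(x: float, y: float, z: float) -> bool:
    """True if the target falls inside the reported optimal click region."""
    az, el = target_angles(x, y, z)
    return -H_LEFT <= az <= H_RIGHT and -V_DOWN <= el <= V_UP
```

A layout tool could call `in_optimal_click_area` for each button position and flag those that fall outside the region, for example a target 25° to the left, which the study's bounds exclude while the same offset to the right is accepted.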

Open Access | Article

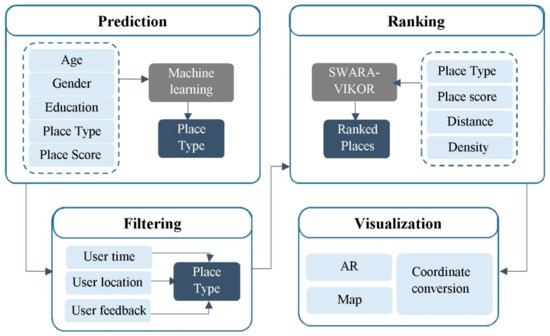

Personalized Augmented Reality Based Tourism System: Big Data and User Demographic Contexts

by

Soheil Rezaee, Abolghasem Sadeghi-Niaraki, Maryam Shakeri and Soo-Mi Choi

Cited by 10 | Viewed by 2591

Abstract

A lack of the required data resources is one of the challenges to adopting augmented reality (AR) for providing appropriate services to users, even though the amount of spatial information produced by people is increasing daily. This research aims to design a personalized AR-based tourism system that retrieves big data according to users’ demographic contexts in order to enrich the AR data source in tourism. The research is conducted in two main steps. First, the type of tourist attraction that interests the user is predicted from the user’s demographic context (age, gender, and education level) using a machine learning method. Second, the appropriate data for the user are extracted from the big data by considering the time, distance, popularity, and neighborhood of the tourist places, using the VIKOR and SWAR decision-making methods. The results show that the decision tree outperforms the SVM method by about 6% in predicting the type of tourist attraction. In addition, the user study of the system shows overall participant satisfaction of about 55% for ease of use and about 56% for system usefulness.
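
The abstract names VIKOR for ranking candidate places by criteria such as time, distance, and popularity. Below is a minimal sketch of the standard VIKOR ranking step, assuming the criterion weights are already available (the abstract pairs VIKOR with SWAR, whose weighting step is not shown here) and assuming each criterion is flagged as benefit (higher is better) or cost (lower is better). It omits VIKOR's acceptable-advantage and stability checks, and the example criteria and numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def vikor(matrix: Sequence[Sequence[float]], weights: Sequence[float],
          benefit: Sequence[bool], v: float = 0.5) -> Tuple[List[float], List[int]]:
    """Rank alternatives (rows) over criteria (columns) with VIKOR.

    Returns the compromise scores Q (lower is better) and the alternative
    indices sorted from best to worst.
    """
    n_crit = len(weights)
    best, worst = [], []
    for j in range(n_crit):
        column = [row[j] for row in matrix]
        hi, lo = max(column), min(column)
        best.append(hi if benefit[j] else lo)
        worst.append(lo if benefit[j] else hi)

    S, R = [], []  # group utility and individual regret per alternative
    for row in matrix:
        terms = []
        for j in range(n_crit):
            span = best[j] - worst[j]
            # A criterion on which all alternatives tie carries no information.
            gap = 0.0 if span == 0 else (best[j] - row[j]) / span
            terms.append(weights[j] * gap)
        S.append(sum(terms))
        R.append(max(terms))

    def scaled(x: float, lo: float, hi: float) -> float:
        return 0.0 if hi == lo else (x - lo) / (hi - lo)

    s_min, s_max, r_min, r_max = min(S), max(S), min(R), max(R)
    # v weights the "majority of criteria" strategy against the worst-case regret.
    Q = [v * scaled(s, s_min, s_max) + (1 - v) * scaled(r, r_min, r_max)
         for s, r in zip(S, R)]
    ranking = sorted(range(len(Q)), key=lambda i: Q[i])
    return Q, ranking
```

For instance, with hypothetical columns popularity (benefit), distance in km (cost), and rating (benefit), a place that is most popular, nearest, and best rated scores Q = 0 and ranks first.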

Open Access | Article

Augmented Reality and Machine Learning Incorporation Using YOLOv3 and ARKit

by

Huy Le, Minh Nguyen, Wei Qi Yan and Hoa Nguyen

Cited by 12 | Viewed by 5549

Abstract

Augmented reality is one of the fastest growing fields, receiving increased funding over the last few years as people realise the potential benefits of rendering virtual information in the real world. Most of today’s marker-based augmented reality applications use local feature detection and tracking techniques. The disadvantage of these techniques is that the markers must be modified to suit the specific classification algorithms, or else detection accuracy is low. Machine learning is an ideal solution to overcome these drawbacks of image processing in augmented reality applications. However, traditional data annotation requires extensive time and labour, as it is usually done manually. This study incorporates machine learning to detect and track augmented reality marker targets in an application using deep neural networks. We first implement an auto-generated dataset tool, which is used to prepare the machine learning dataset. The final iOS prototype application incorporates object detection, object tracking and augmented reality. The machine learning model is trained to recognise the differences between targets using YOLOv3, one of the best-known YOLO object detection methods. The final product uses ARKit, Apple’s toolkit for developing augmented reality applications.
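
The abstract says an auto-generated dataset tool replaces manual annotation but does not describe how it works. A minimal sketch of one common approach, assuming markers are pasted onto background images at randomly chosen positions: because the placement is known, the bounding box is known by construction and can be written directly in the YOLO text label format (class, then center x, center y, width, height, each normalized by image size). The image compositing itself is omitted, and the function names are hypothetical.

```python
import random
from typing import Tuple


def random_placement(marker_w: int, marker_h: int, img_w: int, img_h: int,
                     rng: random.Random) -> Tuple[int, int]:
    """Pick a top-left corner so a pasted marker lies fully inside the background."""
    if marker_w > img_w or marker_h > img_h:
        raise ValueError("marker does not fit in the background image")
    return rng.randint(0, img_w - marker_w), rng.randint(0, img_h - marker_h)


def to_yolo_label(class_id: int, left: float, top: float, width: float, height: float,
                  img_w: int, img_h: int) -> str:
    """Convert a pixel-space box (left, top, width, height) into a YOLO label line:
    'class x_center y_center width height', all normalized to [0, 1]."""
    if img_w <= 0 or img_h <= 0:
        raise ValueError("image size must be positive")
    xc = (left + width / 2.0) / img_w
    yc = (top + height / 2.0) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {width / img_w:.6f} {height / img_h:.6f}"
```

A generator loop would call `random_placement`, paste the marker there, and save `to_yolo_label(...)` next to the image, so every synthetic sample is labelled exactly without manual work.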

Open Access | Feature Paper | Article

Recognition of Customers’ Impulsivity from Behavioral Patterns in Virtual Reality

by

Masoud Moghaddasi, Javier Marín-Morales, Jaikishan Khatri, Jaime Guixeres, Irene Alice Chicchi Giglioli and Mariano Alcañiz

Cited by 7 | Viewed by 2721

Abstract

Virtual reality (VR) in retailing (V-commerce) has been shown to enhance the consumer experience. This technology is therefore useful for studying behavioral patterns, as it offers the opportunity to infer customers’ personality traits from their behavior. This study aims to recognize impulsivity from behavioral patterns. To this end, 60 subjects performed three tasks (one exploration task and two planned tasks) in a virtual market. Four noninvasive signals available in commercial VR devices (eye-tracking, navigation, posture, and interactions) were recorded, and a set of features was extracted and categorized into zonal, general, kinematic, temporal, and spatial types. These features were fed into a support vector machine classifier to recognize the subjects’ impulsivity, as assessed by the I-8 questionnaire, achieving an accuracy of 87%. The results suggest that, while the exploration task can reveal general impulsivity, other subscales such as perseverance and sensation-seeking are more closely related to the planned tasks. The results also show that posture and interaction are the most informative signals. Our findings support recognizing customer impulsivity using sensors incorporated into commercial VR devices. Such information could enable a personalized shopping experience in future virtual shops.
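
The abstract groups features into zonal, general, kinematic, temporal, and spatial types without defining them. As an illustration only, the sketch below computes a few plausible kinematic features (path length, duration, mean and peak speed) from a time-stamped position trace of the kind a headset's navigation signal provides; the feature set, trace format, and names are assumptions. Feature vectors like this would then be passed to a classifier such as the SVM mentioned in the abstract.

```python
import math
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, float, float, float]  # (t_seconds, x, y, z)


def kinematic_features(samples: Sequence[Sample]) -> Dict[str, float]:
    """Hypothetical navigation features from a time-stamped position trace."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    path = 0.0
    speeds: List[float] = []
    for (t0, *p0), (t1, *p1) in zip(samples, samples[1:]):
        step = math.dist(p0, p1)  # Euclidean distance between consecutive positions
        path += step
        dt = t1 - t0
        if dt > 0:
            speeds.append(step / dt)
    duration = samples[-1][0] - samples[0][0]
    return {
        "path_length": path,
        "duration": duration,
        "mean_speed": path / duration if duration > 0 else 0.0,
        "max_speed": max(speeds, default=0.0),
    }
```

Keeping each feature a plain number makes it straightforward to concatenate features from several signals (navigation, posture, interactions) into one vector per subject before classification.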

Open Access | Article

Virtual Marker Technique to Enhance User Interactions in a Marker-Based AR System

by

Boyang Liu and Jiro Tanaka

Cited by 5 | Viewed by 3660

Abstract

In marker-based augmented reality (AR) systems, markers are usually relatively independent and predefined by the system creator. Users can only use these predefined markers to build certain specified content, so such systems usually lack flexibility and do not allow users to create content freely. In this paper, we propose a virtual marker technique to build a marker-based AR system framework in which multiple AR markers, both virtual and physical, work together. Information from multiple markers can be merged, and virtual markers are used to provide user-defined information. We conducted a pilot study to evaluate this multi-marker cooperation framework based on virtual markers. The pilot study shows that the virtual marker technique does not significantly increase users’ time or operational burden, while improving their cognitive experience.

Open Access | Article

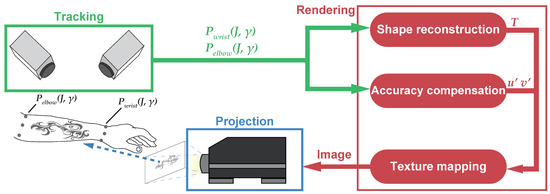

High-Speed Dynamic Projection Mapping onto Human Arm with Realistic Skin Deformation

by

Hao-Lun Peng and Yoshihiro Watanabe

Cited by 6 | Viewed by 4310

Abstract

Dynamic projection mapping, which adapts the projection to a moving object’s position and shape, is fundamental to augmented reality that follows changes on a target surface. For instance, augmenting the surface of the human arm via dynamic projection mapping can enhance applications in fashion, user interfaces, prototyping, education, medical assistance, and other fields. For such applications, however, conventional methods neglect skin deformation and have high latency between motion and projection, causing noticeable misalignment between the target arm surface and the projected images. These problems degrade the user experience and limit the development of further applications. We propose a system for high-speed dynamic projection mapping onto a rapidly moving human arm with realistic skin deformation. With the developed system, the user does not perceive any misalignment between the arm surface and the projected images. First, we combine a state-of-the-art parametric deformable surface model with efficient regression-based accuracy compensation to represent skin deformation. Through this compensation, we modify the texture coordinates to achieve fast and accurate image generation for projection mapping based on joint tracking. Second, we develop a high-speed system whose latency between motion and projection is below 10 ms, which is generally imperceptible to human vision. Compared with conventional methods, the proposed system provides more realistic experiences and increases the applicability of dynamic projection mapping.
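
To see why the sub-10 ms latency matters, a first-order estimate (ignoring motion prediction and the skin-deformation model) is that the projected image lags the arm by the distance the arm travels during the motion-to-projection latency: error = speed * latency. Conveniently, speed in m/s times latency in ms gives millimetres. The speeds and tolerances in the test are illustrative assumptions, not values from the paper.

```python
def misalignment_mm(surface_speed_m_per_s: float, latency_ms: float) -> float:
    """First-order projection misalignment: distance the surface moves during the
    motion-to-projection latency. (m/s) * (ms) = mm."""
    return surface_speed_m_per_s * latency_ms


def max_latency_ms(surface_speed_m_per_s: float, tolerance_mm: float) -> float:
    """Largest latency that keeps the first-order misalignment within tolerance."""
    if surface_speed_m_per_s <= 0:
        raise ValueError("speed must be positive")
    return tolerance_mm / surface_speed_m_per_s
```

Under this estimate, an arm moving at 1 m/s drifts about 10 mm from its projection at 10 ms of latency but about 100 mm at 100 ms, which is why cutting latency matters as much as modelling deformation.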

Open Access | Editor’s Choice | Article

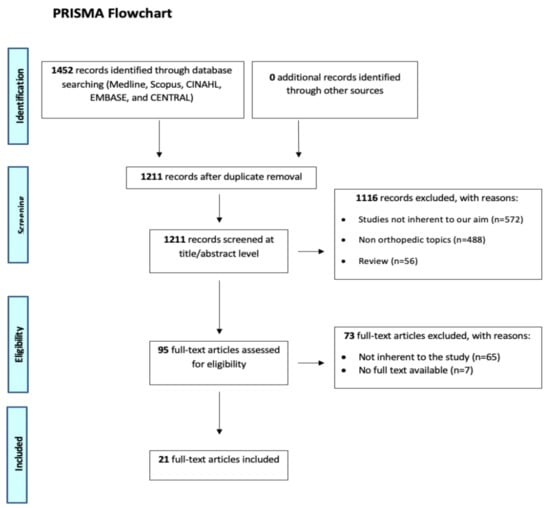

Augmented Reality, Virtual Reality and Artificial Intelligence in Orthopedic Surgery: A Systematic Review

by

Umile Giuseppe Longo, Sergio De Salvatore, Vincenzo Candela, Giuliano Zollo, Giovanni Calabrese, Sara Fioravanti, Lucia Giannone, Anna Marchetti, Maria Grazia De Marinis and Vincenzo Denaro

Cited by 24 | Viewed by 4795

Abstract

Background: The application of virtual and augmented reality technologies to orthopaedic surgery training and practice aims to increase the safety and accuracy of procedures and to reduce complications and costs. The purpose of this systematic review is to summarise the present literature on this topic while providing a detailed analysis of current flaws and benefits. Methods: A comprehensive search of the PubMed, Cochrane, CINAHL, and Embase databases was conducted from inception to February 2021. The Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines were used to improve the reporting of the review. The Cochrane Risk of Bias Tool and the Methodological Index for Non-Randomized Studies (MINORS) were used to assess the quality and potential bias of the included randomized and non-randomized controlled trials, respectively. Results: Virtual reality has proven revolutionary for both resident training and preoperative planning. Thanks to augmented reality, orthopaedic surgeons could carry out procedures faster and more accurately, improving overall safety. Artificial intelligence (AI) is a promising technology with limitless potential, but its current use in orthopaedic surgery is limited to preoperative diagnosis. Conclusions: Extended reality technologies have the potential to reform orthopaedic training and practice, providing an opportunity for unidirectional growth towards a patient-centred approach.

Open Access | Article

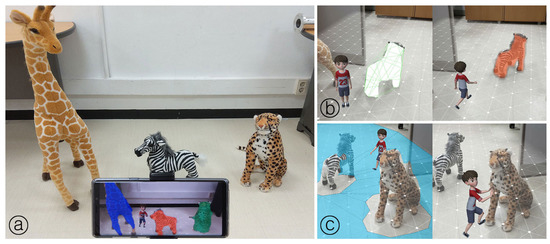

Silhouettes from Real Objects Enable Realistic Interactions with a Virtual Human in Mobile Augmented Reality

by

Hanseob Kim, Ghazanfar Ali, Andréas Pastor, Myungho Lee, Gerard J. Kim and Jae-In Hwang

Cited by 3 | Viewed by 3119

Abstract

Realistic interactions with real objects (e.g., animals, toys, robots) in an augmented reality (AR) environment enhance the user experience. Common AR apps on the market achieve realistic interactions by superimposing pre-modeled virtual proxies on the real objects in the AR environment, so that the user perceives interaction with the virtual proxies as interaction with the real objects. However, handling environmental changes, shape deformation, and view updates is not trivial. Our proposed method instead uses the dynamic silhouette of a real object to enable realistic interactions. The approach is practical, lightweight, and requires no additional hardware besides the device camera. As a case study, we designed a mobile AR application for interacting with real animal dolls, in which a virtual human performs four types of realistic interactions. The results demonstrated that our method remains stable under shape deformation and view updates without requiring pre-modeled virtual proxies. We also conducted a pilot study using our approach and found significant improvements in user perception of spatial awareness and presence for realistic interactions with a virtual human.

Open Access | Review

Multimodal Interaction Systems Based on Internet of Things and Augmented Reality: A Systematic Literature Review

by

Joo Chan Kim, Teemu H. Laine and Christer Åhlund

Cited by 27 | Viewed by 6980

Abstract

Technology developments have expanded the diversity of interaction modalities that can be used by an agent (either a human or a machine) to interact with a computer system. This expansion has created the need for more natural and user-friendly interfaces in order to achieve effective user experience and usability. To accomplish this goal, a system can provide an agent with more than one interaction modality; such a system is referred to as a multimodal interaction (MI) system. The Internet of Things (IoT) and augmented reality (AR) are popular technologies that allow interaction systems to combine the real-world context of the agent with immersive AR content. However, although MI systems have been extensively studied, only a few studies have reviewed MI systems that use IoT and AR. Therefore, this paper presents an in-depth review of studies that proposed various MI systems utilizing IoT and AR. A total of 23 studies were identified and analyzed through a rigorous systematic literature review protocol. The results of our analysis of MI system architectures, the relationships between system components, input/output interaction modalities, and open research challenges are presented and discussed to summarize the findings and identify future research and development avenues for researchers and MI developers.

Open Access | Article

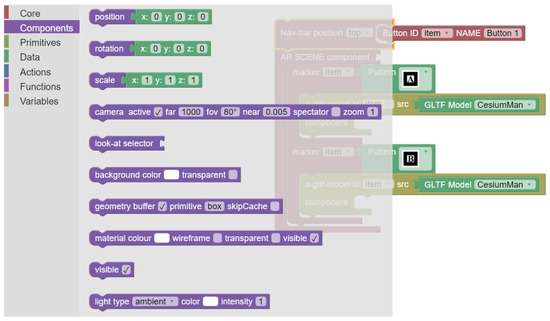

BlocklyXR: An Interactive Extended Reality Toolkit for Digital Storytelling

by

Kwanghee Jung, Vinh T. Nguyen and Jaehoon Lee

Cited by 25 | Viewed by 4135

Abstract

Traditional in-app virtual reality (VR)/augmented reality (AR) applications pose a challenge in reaching users because of their dependency on operating systems (Android, iOS). Moreover, it is difficult for general users to create their own VR/AR applications and realize their creative ideas without advanced programming skills. This paper addresses these issues by proposing an interactive extended reality toolkit named BlocklyXR. The objective of this research is to provide general users with a visual programming environment for building extended reality applications for digital storytelling. The contextual design was generated from real-world map data retrieved from Mapbox GL. ThreeJS was used to set up and render 3D environments and to control animations. A block-based programming approach was adopted to let users design their own stories. The capability of BlocklyXR was illustrated with a use case in which users were able to replicate the existing PalmitoAR with the block-based authoring toolkit, with less programming effort. The technology acceptance model was used to evaluate the adoption and use of the toolkit. The findings showed that visual design and task–technology fit had significantly positive effects on user motivation factors (perceived ease of use and perceived usefulness). In turn, perceived usefulness had a statistically significant positive effect on intention to use, whereas perceived ease of use had no significant impact on intention to use. Study implications and future research directions are discussed.

Full article

►▼

Show Figures

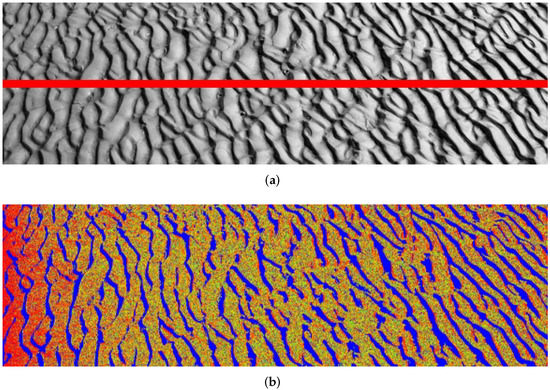

Open Access | Article

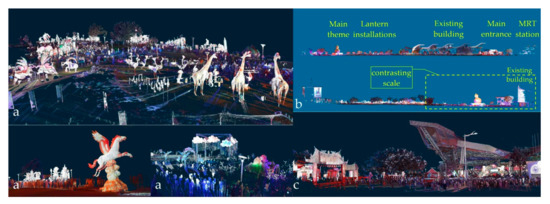

Situated AR Simulations of a Lantern Festival Using a Smartphone and LiDAR-Based 3D Models

by

Naai-Jung Shih, Pei-Huang Diao, Yi-Ting Qiu and Tzu-Yu Chen

Cited by 10 | Viewed by 3242

Abstract

A lantern festival was 3D-scanned to elucidate its unique complexity and cultural identity in terms of Intangible Cultural Heritage (ICH). Three augmented reality (AR) instancing scenarios, ranging from a single interaction to the entire site, were applied to the converted scan data, including forward additive instancing and interactions with a predefined model layout. The novelty and contributions of this study are threefold: documentation, development of an AR app for situated tasks, and AR verification. We presented ready-made and customized smartphone apps for AR verification that extend the model’s elaboration to different site contexts. Both were applied to assess their feasibility for restructuring and managing the scene. The apps were implemented under homogeneous and heterogeneous combinations of contexts, ranging from an as-built event description to a remote site, as a sustainable cultural effort. A second reconstruction from screenshots, in an AR loop process of interaction, reconstruction, and confirmation verification, was also made to study the manipulated result in 3D prints.

Open Access | Article

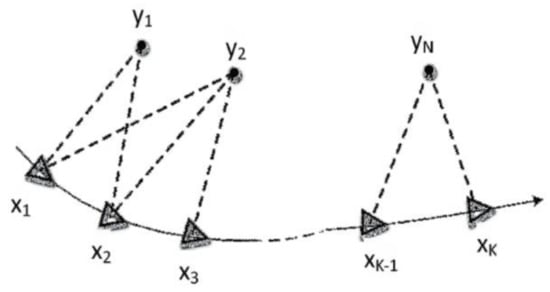

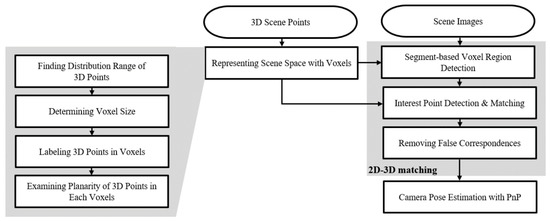

Voxel-Based Scene Representation for Camera Pose Estimation of a Single RGB Image

by

Sangyoon Lee, Hyunki Hong and Changkyoung Eem

Cited by 3 | Viewed by 2142

Abstract

Deep learning has been utilized in end-to-end camera pose estimation. To improve performance, we introduce a camera pose estimation method based on a 2D–3D matching scheme with two convolutional neural networks (CNNs). The scene is divided into voxels whose size and number are computed according to the scene volume and the number of 3D points. Within each voxel, we extract inlier points from the 3D point set using random sample consensus (RANSAC)-based plane fitting to obtain a set of interest points forming a major plane. These points are then reprojected onto the image using the ground-truth camera pose, after which a polygonal region is identified for each voxel using the convex hull. We designed a training dataset for 2D–3D matching consisting of inlier 3D points, correspondences across image pairs, and the voxel regions in the image. On this dataset, we trained a hierarchical learning structure of two CNNs to detect the voxel regions and obtain the locations and descriptors of the interest points. Following successful 2D–3D matching, the camera pose was estimated using an n-point pose solver within RANSAC. The experimental results show that our method estimates the camera pose more precisely than previous end-to-end estimators.
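The per-voxel preprocessing described in this abstract (RANSAC plane fitting, reprojection with the ground-truth pose, and a convex-hull region per voxel) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the inlier distance threshold, the iteration count, and the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical, and the pose is assumed to be a world-to-camera rotation `R` with translation `t`. The voxel sizing rule and the CNN stages are not shown because the abstract does not specify them.

```python
import random
from typing import List, Sequence, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]  # row-major 3x3 rotation (assumed convention)


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ransac_plane_inliers(points: Sequence[Vec3], threshold: float = 0.01,
                         iterations: int = 200, seed: int = 0) -> List[Vec3]:
    """Basic RANSAC: hypothesise planes from random 3-point samples and keep
    the largest set of points within `threshold` of one of them."""
    pts = list(points)
    rng = random.Random(seed)
    best: List[Vec3] = []
    if len(pts) < 3:
        return best
    for _ in range(iterations):
        p0, p1, p2 = rng.sample(pts, 3)
        n = _cross(_sub(p1, p0), _sub(p2, p0))
        norm = _dot(n, n) ** 0.5
        if norm < 1e-12:  # collinear sample defines no plane
            continue
        n = (n[0] / norm, n[1] / norm, n[2] / norm)
        inliers = [p for p in pts if abs(_dot(n, _sub(p, p0))) <= threshold]
        if len(inliers) > len(best):
            best = inliers
    return best


def project(points: Sequence[Vec3], R: Mat3, t: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> List[Vec2]:
    """Pinhole projection with a world-to-camera pose (R, t);
    points at or behind the camera plane are dropped."""
    out: List[Vec2] = []
    for X in points:
        xc, yc, zc = (_dot(R[i], X) + t[i] for i in range(3))
        if zc <= 0:
            continue
        out.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return out


def convex_hull(points: Sequence[Vec2]) -> List[Vec2]:
    """Andrew's monotone chain; returns hull vertices, dropping collinear ones."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def turn(o: Vec2, a: Vec2, b: Vec2) -> float:
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower: List[Vec2] = []
    for p in pts:
        while len(lower) >= 2 and turn(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Vec2] = []
    for p in reversed(pts):
        while len(upper) >= 2 and turn(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def voxel_region(voxel_points: Sequence[Vec3], R: Mat3, t: Vec3,
                 fx: float, fy: float, cx: float, cy: float) -> List[Vec2]:
    """Plane inliers of one voxel -> reprojected 2D points -> polygonal region."""
    inliers = ransac_plane_inliers(voxel_points)
    return convex_hull(project(inliers, R, t, fx, fy, cx, cy))
```

The seeded random generator keeps the sketch deterministic; a real pipeline would also derive the iteration count from the expected inlier ratio rather than fixing it.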


Open Access Article

“Blurry Touch Finger”: Touch-Based Interaction for Mobile Virtual Reality with Clip-on Lenses

by

Youngwon Ryan Kim, Suhan Park and Gerard J. Kim

Cited by 1 | Viewed by 2574

Abstract


In this paper, we propose and explore a touch-screen-based interaction technique, called the “Blurry Touch Finger”, for EasyVR, a mobile VR platform with non-isolating flip-on glasses that leave the screen accessible to the fingers. We demonstrate that, with the proposed technique, the user is able to accurately select virtual objects seen through the lenses directly with the fingers, even though the fingers are blurred and physically block the target object. This is possible owing to binocular rivalry, which renders the fingertips semi-transparent. We carried out a first-stage basic evaluation assessing the object selection performance and general usability of Blurry Touch Finger. The study revealed that, for objects with screen-space sizes greater than about 0.5 cm, the selection performance and usability of Blurry Touch Finger, as applied in the EasyVR configuration, were comparable to or higher than those of both the conventional head-directed and hand/controller-based ray-casting selection methods. However, for smaller objects, well below the size of the fingertip, touch-based selection performed worse and was less usable due to the familiar fat-finger problem and difficulty with stereoscopic focus.
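The size finding above depends on an object's physical size on the screen, which can be derived from its pixel size and the display density. The sketch below is a hypothetical illustration, not the EasyVR implementation: it hit-tests a touch point against screen-space rectangles and flags targets below the roughly 0.5 cm size the study reports. The `ScreenObject` type, the function names, the back-to-front ordering, and the DPI value are assumptions, and the sketch simply treats the touch as landing in the same screen coordinates where the object is drawn; the abstract does not describe how a touch is mapped to the two stereo views.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

CM_PER_INCH = 2.54
MIN_TOUCH_SIZE_CM = 0.5  # approximate threshold reported in the abstract


@dataclass
class ScreenObject:
    name: str
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float  # width, pixels
    h: float  # height, pixels


def px_to_cm(px: float, dpi: float) -> float:
    """Convert a length in pixels to centimetres on a display of the given DPI."""
    return px / dpi * CM_PER_INCH


def select_by_touch(objects: Sequence[ScreenObject],
                    tx: float, ty: float) -> Optional[ScreenObject]:
    """Return the object whose rectangle contains the touch point.
    Objects are assumed ordered back to front, so the last hit is frontmost."""
    hit: Optional[ScreenObject] = None
    for obj in objects:
        if obj.x <= tx <= obj.x + obj.w and obj.y <= ty <= obj.y + obj.h:
            hit = obj
    return hit


def large_enough_for_touch(obj: ScreenObject, dpi: float) -> bool:
    """True if the object's smaller side meets the ~0.5 cm touch threshold."""
    return min(px_to_cm(obj.w, dpi), px_to_cm(obj.h, dpi)) >= MIN_TOUCH_SIZE_CM
```

On a hypothetical 400-DPI screen, 0.5 cm corresponds to about 79 px, so a 100 px target would pass the check and a 40 px target would not.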


Open Access Article

Enhancing English-Learning Performance through a Simulation Classroom for EFL Students Using Augmented Reality—A Junior High School Case Study

by

Yuh-Shihng Chang, Chao-Nan Chen and Chia-Ling Liao

Cited by 26 | Viewed by 6809

Abstract


In non-English-speaking countries, students learning EFL (English as a Foreign Language) without a “real” learning environment mostly show poor English-learning performance. To improve the English-learning effectiveness of EFL students, we propose the use of augmented reality (AR) to support situational classroom learning and conduct teaching experiments for situational English learning. The purpose of this study is to examine whether the learning performance of EFL students can be enhanced using augmented reality within a situational context. The learning performance of the experimental group is validated by means of the attention, relevance, confidence, and satisfaction (ARCS) model. According to statistical analysis, the experimental teaching method is much more effective than the traditional teaching method used with the control group. The learning performance of the experimental group students is clearly enhanced, and EFL students’ feedback on using AR is positive. The experimental results reveal that (1) students can concentrate more on practicing spoken English as a foreign language; (2) the real-life AR scenarios enhanced student confidence in learning English; and (3) applying AR teaching materials in situational context classes can provide near-real-life scenarios and improve the learning satisfaction of students.
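The ARCS-based validation can be illustrated by showing how questionnaire responses might be rolled up into the four ARCS dimensions before the experimental and control groups are compared. The abstract does not give the questionnaire items, the Likert scale, or the statistical test, so the item-to-dimension mapping (`ARCS_ITEMS`) and the function names below are hypothetical; this is a sketch of the aggregation step only, not the study's analysis.

```python
from statistics import mean
from typing import Dict, List, Sequence

# Hypothetical questionnaire layout: item indices belonging to each ARCS dimension.
ARCS_ITEMS: Dict[str, List[int]] = {
    "attention": [0, 1, 2],
    "relevance": [3, 4, 5],
    "confidence": [6, 7, 8],
    "satisfaction": [9, 10, 11],
}


def arcs_scores(responses: Sequence[int],
                items: Dict[str, List[int]] = ARCS_ITEMS) -> Dict[str, float]:
    """Average one student's Likert responses within each ARCS dimension."""
    return {dim: mean(responses[i] for i in idx) for dim, idx in items.items()}


def group_means(group: Sequence[Sequence[int]],
                items: Dict[str, List[int]] = ARCS_ITEMS) -> Dict[str, float]:
    """Average the per-student dimension scores over a whole group."""
    per_student = [arcs_scores(r, items) for r in group]
    return {dim: mean(s[dim] for s in per_student) for dim in items}
```

Computing `group_means` separately for the experimental and control groups yields the per-dimension figures that a significance test would then compare; which test is appropriate depends on the study design, which the abstract does not state.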
