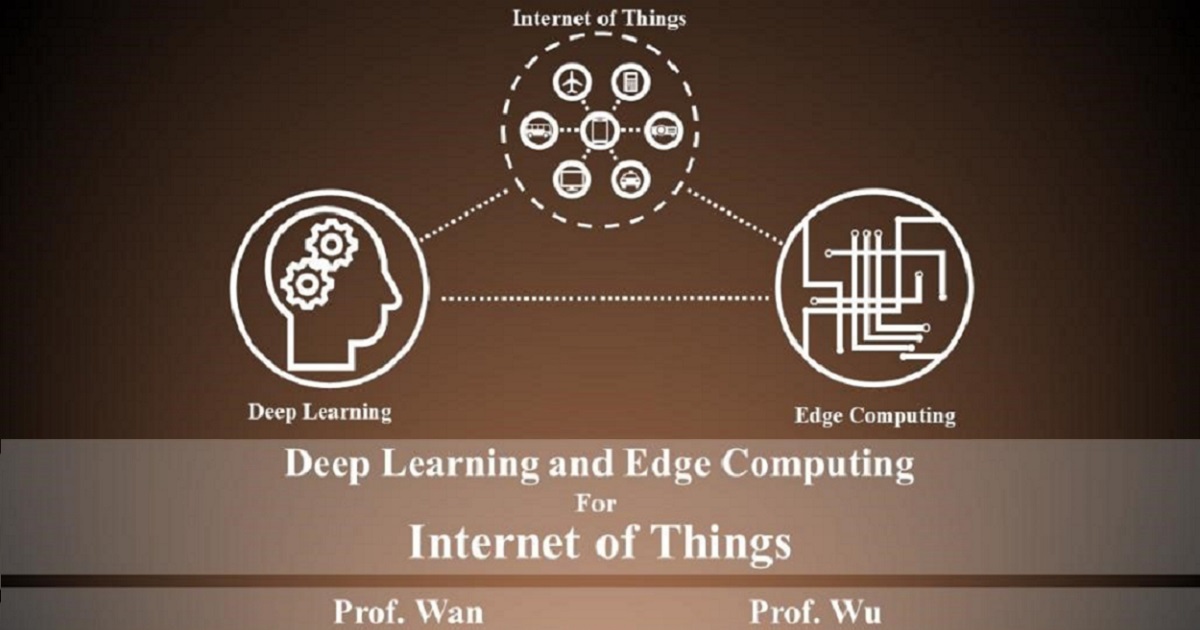

Deep Learning and Edge Computing for Internet of Things

A special issue of Applied Sciences (ISSN 2076-3417). This special issue belongs to the section "Computing and Artificial Intelligence".

Deadline for manuscript submissions: 31 August 2024

Special Issue Editors

Interests: deep learning; internet of things; edge computing

Interests: computer vision; artificial intelligence; multimedia computing; intelligent water conservancy

Special Issue Information

Dear Colleagues,

The evolution of 5G and Internet of Things (IoT) technologies is leading to ubiquitous connections among humans and their environment, with applications in autopilot transportation, mobile e-commerce, unmanned vehicles and healthcare bringing revolutionary changes to our daily lives. Moreover, the computing environment is required to support an increasing range of functionality: multi-sensory data processing and analysis, complex systems control strategies, and, ultimately, artificial intelligence.

After several years of development, edge computing for deep learning has shown its practical value in the IoT environment. Pushing computing resources to the edge, in closer proximity to devices, enables low-latency service delivery for safety-related and other applications. However, edge computing still has abundant untapped potential for deep learning. Systems should leverage awareness of the surrounding environment and attach more importance to edge-edge intelligence collaboration and edge-cloud communication. Additionally, computation systems should provide more support for services such as edge AI in order to optimize the computing process, including smart scheduling, privacy protection and environmental awareness.

Overall, edge computing is predicted to be one of the most useful tools for the ubiquitous IoT environment. This Special Issue aims to explore recent advances in edge computing technologies.

The topics of interest for this Special Issue include, but are not limited to:

- Hardware-software design approaches for edge computing and processing.

- Frameworks and models for edge-computing-enabled IoT.

- Multi-agent planning and coordination for edge computing.

- Testbed and simulation tools for smart edge computing.

- Intelligent computation allocation and offloading.

- Application case studies for the smart edge computing environment.

- Security and privacy for smart edge computing systems.

- Energy-efficient and green computing for edge-computing-enabled IoT.

- Network optimization and communication protocols for edge AI.

- Edge machine-learning architectures dealing with sensor and signal variabilities.

Prof. Dr. Shaohua Wan

Dr. Yirui Wu

Guest Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the special issue website. Research articles, review articles and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Applied Sciences is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2400 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- edge computing

- deep learning

- Internet of Things

- computational intelligence

- computing systems