Image-Based Plant Disease Identification by Deep Learning Meta-Architectures

Abstract

1. Introduction

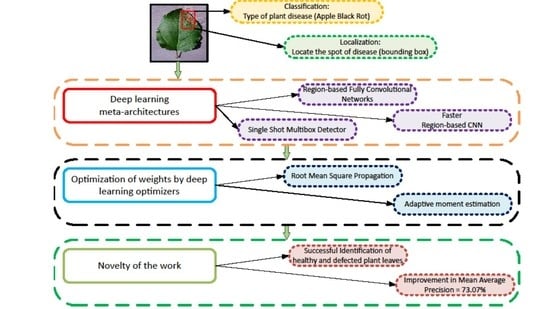

- A comprehensive study of deep learning meta-architectures has been conducted for the identification of disease in several plant species infected by fungi, infection, virus, and bacteria.

- An attempt has been made to improve the performance of DL meta-architectures specifically for plant disease recognition/identification tasks by using three different state-of-the-art DL optimization methods: Stochastic Gradient Descent (SGD) with Momentum, Adaptive Moment Estimation (Adam), and Root Mean Square Propagation (RMSProp).

- The weights obtained after the training of the DL models could also be used for the other datasets related to plant disease.

2. Materials and Methods

2.1. Generalized Framework

2.2. Dataset Selection

2.3. Annotation of the Training Dataset

2.4. Deep Learning Meta-Architectures

2.4.1. Single Shot MultiBox Detector (SSD)

2.4.2. Faster Region-based Convolutional Neural Network (Faster-RCNN)

2.4.3. Region-based Fully Convolutional Networks (R-FCN)

2.5. Deep Learning Optimizers

2.5.1. Stochastic Gradient Descent (SGD) with Momentum

2.5.2. Root Mean Square Propagation (RMSProp)

2.5.3. Adaptive Moment Estimation (Adam)

2.6. Experimental Setup

2.7. Performance Metric

3. Results and Discussion

3.1. Performance of Deep Learning Meta-Architectures

3.1.1. SSD Architecture

3.1.2. Faster-RCNN Architecture

3.1.3. R-FCN Architecture

3.2. Overall Remarks for SSD, Faster-RCNN, and R-FCN Architectures

- The SSD model achieved the highest mAP among all the DL meta-architectures. This can be attributed to the structure of the SSD model, which produces a fixed-size set of predicted boxes and scores at each feature-map position where a kernel is applied. Convolutional layers are appended to the end of the base network and predict detections at multiple scales [31]. The predicted box offsets at each feature-map location are measured relative to the default box positions and are produced by convolutional filters rather than by an intermediate fully connected layer.

- Another significant distinction of the SSD model, compared to the region-based networks, is that during training the ground-truth box information has to be assigned to specific outputs within the fixed set of detector outputs. The network decides which default box should be matched with each ground-truth box during the training stage, which is known as the matching strategy in SSD. Thereby, the use of several convolutional bounding-box outputs attached to feature maps at the top of the network made this model successful compared to the region-based methods.

- The base network SSD combined with the Inception-v2 feature extractor performed better than Faster-RCNN combined with the same feature extractor. Moreover, Table 4 shows that the base network Faster-RCNN with the ResNet-101 feature extractor achieved a relatively higher mAP than with the Inception model.

- The R-FCN model achieved a lower mAP than the SSD and Faster-RCNN (with ResNet-101) architectures.

- More interestingly, the SSD architecture was able to detect a few of the classes that were completely undetected by the Faster-RCNN and R-FCN models (as shown in Table 4).

- Following the proposed methodology presented in Section 2, the SSD with Inception-v2 and Faster RCNN with ResNet-101 models achieved the highest mAP among all the other DL meta-architectures. Therefore, they were selected for the next stage of this research.
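The matching strategy mentioned above can be illustrated with a minimal sketch. It follows the two-step rule described in the SSD paper [31]: each ground-truth box is first matched to the default box with the highest Jaccard overlap, and every remaining default box is then matched to a ground-truth box if their overlap exceeds a threshold (0.5 in [31]). The function names and the box coordinates below are hypothetical and serve only as an illustration; this is not the training code used in this study.

```python
def iou(a, b):
    """Jaccard overlap of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_default_boxes(default_boxes, gt_boxes, threshold=0.5):
    """Return, for each default box, the index of its matched ground-truth box or None."""
    matches = [None] * len(default_boxes)
    if not default_boxes:
        return matches

    # Step 1: every ground-truth box claims the default box it overlaps most,
    # so that no ground-truth box is left without a positive match.
    for g, gt in enumerate(gt_boxes):
        best_d = max(range(len(default_boxes)), key=lambda d: iou(default_boxes[d], gt))
        matches[best_d] = g

    # Step 2: any still-unmatched default box becomes positive if its best
    # overlap with a ground-truth box exceeds the threshold.
    for d, box in enumerate(default_boxes):
        if matches[d] is not None:
            continue
        best_g, best_overlap = None, 0.0
        for g, gt in enumerate(gt_boxes):
            overlap = iou(box, gt)
            if overlap > best_overlap:
                best_g, best_overlap = g, overlap
        if best_overlap > threshold:
            matches[d] = best_g
    return matches


# Hypothetical default boxes and ground-truth boxes in normalized coordinates.
default_boxes = [(0.0, 0.0, 0.5, 0.5), (0.25, 0.25, 0.75, 0.75),
                 (0.5, 0.5, 1.0, 1.0), (0.0, 0.5, 0.5, 1.0)]
gt_boxes = [(0.3, 0.3, 0.8, 0.8), (0.0, 0.0, 0.2, 0.2)]
print(match_default_boxes(default_boxes, gt_boxes))
```

Default boxes left as `None` are treated as background during training, which is why several default boxes can be positive for the same leaf while most remain negative.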

3.3. Performance Improvement by DL Optimization Algorithms

- The Faster-RCNN model with ResNet-101, when trained with the RMSProp and Adam optimizers (59.41% and 48.63% mAP, respectively; Table 4), failed to improve on the overall mAP obtained with the SGD with momentum optimizer (60.92%).

- On the other hand, the SSD model achieved 66.51% mAP when it was trained with the SGD with momentum optimizer. Its mAP increased by 2.38 percentage points (to 68.89%) with the RMSProp optimizer, and by a further 4.18 percentage points (to 73.07%) when the weights of the SSD model were optimized by the Adam algorithm (Table 4).

- It was also observed that when the SSD model was trained with the Adam optimizer, the average precision (AP) of several leaf categories improved significantly, which resulted in the highest mAP of 73.07%. The AP of disease classes such as Apple black rot, Apple cedar rust, and Tomato early blight increased to more than 50%. The AP of a few other classes also improved but remained below 50%, including Tomato target spot, Tomato bacterial spot, Potato late blight, Potato early blight, Pepper bacterial spot, and Peach bacterial spot. The AP of the Corn gray leaf spot class, which was negligible when the model was trained with the SGD with momentum and RMSProp optimizers, also improved. However, further improvement in AP should be considered in future research.

- Figure 15 presents the change in AP for each class of the PlantVillage dataset when the SSD model was trained with each of the three DL optimizers.

- A summary of the mAP achieved by DL meta-architectures trained with different optimization algorithms is presented in Figure 16.
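The mAP values reported in Table 4 are consistent with an unweighted mean of the per-class AP over the 38 annotated classes, where a class with no reported AP ("-") contributes 0. This reading is inferred from the table rather than stated explicitly, so the following is only a sketch under that assumption; the function name is hypothetical. The AP values are copied from the Faster-RCNN ResNet-50 column and the SSD Inception-v2 (Adam) column of Table 4.

```python
NUM_CLASSES = 38  # number of annotated class labels in Table 4


def mean_average_precision(ap_by_class, num_classes=NUM_CLASSES):
    """Unweighted mean of per-class AP (%); classes without an AP count as 0."""
    if len(ap_by_class) > num_classes:
        raise ValueError("more AP values than classes")
    return sum(ap_by_class.values()) / num_classes


# Faster-RCNN with ResNet-50: only four classes have a reported AP.
faster_rcnn_resnet50_ap = {
    "A_Blk_Rot": 89.69, "A_C_Rust": 65.33, "A_Healthy": 99.97, "Straw_Scorch": 98.34,
}

# SSD with Inception-v2 trained with Adam: all 38 classes have a reported AP.
ssd_adam_ap = {
    "A_Scab": 27.27, "A_Blk_Rot": 54.55, "A_C_Rust": 54.55, "A_Healthy": 90.91,
    "B_Healthy": 100, "Ch_Healthy": 90.91, "Ch_Mildew": 45.45, "Corn_Rust": 99.92,
    "Corn_Healthy": 90.91, "Corn_Blight": 54.29, "Corn_Spot": 33.13,
    "G_Blk_Rot": 72.73, "G_Blk_Measles": 99.87, "Grp_Healthy": 100,
    "Grp_Blight": 72.73, "O_HLBing": 90.91, "Pec_Bact_Spot": 27.23,
    "Pec_Healthy": 100, "Pep_Bact_Spot": 36.27, "Pep_Healthy": 90.91,
    "Po_E_Blight": 26.84, "Po_Healthy": 90.91, "Po_L_Blight": 44.92,
    "Ras_Healthy": 100, "Soy_Healthy": 90.91, "Sq_Powdery": 81.82,
    "Straw_Healthy": 100, "Straw_Scorch": 72.69, "Tom_Bact_Spot": 36.28,
    "Tom_E_Blight": 54.45, "Tom_Healthy": 100, "Tom_L_Blight": 90.77,
    "Tom_L_Mold": 63.64, "Tom_Sept": 90.91, "Tom_Sp_Mite": 90.91,
    "Tom_Target": 45.4, "Tom_Mosaic": 63.64, "Tom_Curl": 100,
}

print(f"Faster-RCNN ResNet-50 mAP: {mean_average_precision(faster_rcnn_resnet50_ap):.2f}%")
print(f"SSD Inception-v2 (Adam) mAP: {mean_average_precision(ssd_adam_ap):.2f}%")
```

Under this assumption, the two columns reproduce the tabulated mAP values of 9.30% and 73.07%, which also explains why architectures that miss many classes score a low mAP despite high AP on the classes they do detect.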

4. Conclusions and Future Work

- The pipeline, checkpoints, and weights of the trained and tested DL models can be reused through transfer learning in future research related to plant disease detection.

- Various factors affecting the performance of the best-suited DL architecture, such as data augmentation techniques, batch size, and aspect ratios, should be investigated.

- Although all the classes of the PlantVillage dataset were identified by the proposed methodology, a few of them achieved a low average precision. Therefore, modifications to the DL networks could be proposed in the future to further improve the mean average precision.

- This research could also benefit robotic systems that identify/classify healthy and unhealthy crops in real time, which would contribute to agricultural automation.

Author Contributions

Funding

Conflicts of Interest

References

1. Sankaran, S.; Mishra, A.; Ehsani, R.; Davis, C. A review of advanced techniques for detecting plant diseases. Comput. Electron. Agric. 2010, 72, 1–13.

2. Duro, D.C.; Franklin, S.E.; Dubé, M.G. A comparison of pixel-based and object-based image analysis with selected machine learning algorithms for the classification of agricultural landscapes using SPOT-5 HRG imagery. Remote Sens. Environ. 2012, 118, 259–272.

3. Yamamoto, K.; Guo, W.; Yoshioka, Y.; Ninomiya, S. On plant detection of intact tomato fruits using image analysis and machine learning methods. Sensors 2014, 14, 12191–12206.

4. Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29.

5. Kocić, J.; Jovičić, N.; Drndarević, V. An end-to-end deep neural network for autonomous driving designed for embedded automotive platforms. Sensors 2019, 19, 2064.

6. Saleem, M.H.; Potgieter, J.; Arif, K.M. Plant disease detection and classification by deep learning. Plants 2019, 8, 468.

7. Adhikari, S.P.; Yang, H.; Kim, H. Learning semantic graphics using convolutional encoder-decoder network for autonomous weeding in paddy field. Front. Plant Sci. 2019, 10, 1404.

8. Olsen, A.; Konovalov, D.A.; Philippa, B.; Ridd, P.; Wood, J.C.; Johns, J.; Banks, W.; Girgenti, B.; Kenny, O.; Whinney, J. DeepWeeds: A multiclass weed species image dataset for deep learning. Sci. Rep. 2019, 9, 1–12.

9. Marani, R.; Milella, A.; Petitti, A.; Reina, G. Deep neural networks for grape bunch segmentation in natural images from a consumer-grade camera. Precis. Agric. 2020, 1–27.

10. Wan, S.; Goudos, S. Faster R-CNN for multi-class fruit detection using a robotic vision system. Comput. Netw. 2020, 168, 107036.

11. Ampatzidis, Y.; Partel, V. UAV-based high throughput phenotyping in citrus utilizing multispectral imaging and artificial intelligence. Remote Sens. 2019, 11, 410.

12. Fuentes-Pacheco, J.; Torres-Olivares, J.; Roman-Rangel, E.; Cervantes, S.; Juarez-Lopez, P.; Hermosillo-Valadez, J.; Rendón-Mancha, J.M. Fig Plant Segmentation from Aerial Images Using a Deep Convolutional Encoder-Decoder Network. Remote Sens. 2019, 11, 1157.

13. Quiroz, I.A.; Alférez, G.H. Image recognition of Legacy blueberries in a Chilean smart farm through deep learning. Comput. Electron. Agric. 2020, 168, 105044.

14. Wu, C.; Zeng, R.; Pan, J.; Wang, C.C.; Liu, Y.-J. Plant phenotyping by deep-learning-based planner for multi-robots. IEEE Robot. Autom. Lett. 2019, 4, 3113–3120.

15. Saleem, M.H.; Potgieter, J.; Arif, K.M. Plant Disease Classification: A Comparative Evaluation of Convolutional Neural Networks and Deep Learning Optimizers. Plants 2020, 9, 1319.

16. Chen, J.; Liu, Q.; Gao, L. Visual Tea Leaf Disease Recognition Using a Convolutional Neural Network Model. Symmetry 2019, 11, 343.

17. Kamal, K.; Yin, Z.; Wu, M.; Wu, Z. Depthwise separable convolution architectures for plant disease classification. Comput. Electron. Agric. 2019, 165, 104948.

18. Karthik, R.; Hariharan, M.; Anand, S.; Mathikshara, P.; Johnson, A.; Menaka, R. Attention embedded residual CNN for disease detection in tomato leaves. Appl. Soft Comput. 2020, 86, 105933.

19. Geetharamani, G.; Pandian, A. Identification of plant leaf diseases using a nine-layer deep convolutional neural network. Comput. Electr. Eng. 2019, 76, 323–338.

20. Vaishnnave, M.; Devi, K.S.; Ganeshkumar, P. Automatic method for classification of groundnut diseases using deep convolutional neural network. Soft Comput. 2020, 24, 16347–16360.

21. Mohanty, S.P.; Hughes, D.P.; Salathé, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

22. Too, E.C.; Yujian, L.; Njuki, S.; Yingchun, L. A comparative study of fine-tuning deep learning models for plant disease identification. Comput. Electron. Agric. 2019, 161, 272–279.

23. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE conference on computer vision and pattern recognition (CVPR), Columbus, OH, USA, 24–27 June 2014; pp. 580–587.

24. Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022.

25. Gutierrez, A.; Ansuategi, A.; Susperregi, L.; Tubío, C.; Rankić, I.; Lenža, L. A Benchmarking of Learning Strategies for Pest Detection and Identification on Tomato Plants for Autonomous Scouting Robots Using Internal Databases. J. Sens. 2019, 2019.

26. Ramcharan, A.; McCloskey, P.; Baranowski, K.; Mbilinyi, N.; Mrisho, L.; Ndalahwa, M.; Legg, J.; Hughes, D.P. A mobile-based deep learning model for cassava disease diagnosis. Front. Plant Sci. 2019, 10, 272.

27. Ji, M.; Zhang, K.; Wu, Q.; Deng, Z. Multi-label learning for crop leaf diseases recognition and severity estimation based on convolutional neural networks. Soft Comput. 2020, 24, 15327–15340.

28. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in neural information processing systems (NIPS 2012), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

29. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European conference on computer vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

30. Hughes, D.; Salathé, M. An open access repository of images on plant health to enable the development of mobile disease diagnostics. arXiv 2015, arXiv:1511.08060.

31. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European conference on computer vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

32. Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the 2017 IEEE conference on computer vision and pattern recognition (CVPR), Hawaii Convention Center, Honolulu, Hawaii, 21–26 July 2017; pp. 7310–7311.

33. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in neural information processing systems (NIPS), Montreal Convention Center, Montreal, QC, Canada, 7–10 December 2015; pp. 91–99.

34. Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in neural information processing systems (NIPS), International Barcelona Convention Center, Barcelona, Spain, 5–10 December 2016; pp. 379–387.

35. Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

36. Hinton, G.; Srivastava, N.; Swersky, K. Neural Networks for Machine Learning. Available online: http://www.cs.toronto.edu/~hinton/coursera/lecture6/lec6.pdf (accessed on 7 October 2020).

37. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

38. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

39. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, inception-resnet and the impact of residual connections on learning. arXiv 2016, arXiv:1602.07261.

40. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

41. Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

42. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

43. Brahimi, M.; Arsenovic, M.; Laraba, S.; Sladojevic, S.; Boukhalfa, K.; Moussaoui, A. Deep learning for plant diseases: Detection and saliency map visualisation. In Human and Machine Learning; Springer: Berlin, Germany, 2018; pp. 93–117.

| Classes of PlantVillage Dataset | Disease Cause | Annotation Label | Training Images | Validation Images | Testing Images |
|---|---|---|---|---|---|
| Apple Scab | Fungi | A_Scab | 441 | 126 | 63 |
| Apple Black Rot | Fungi | A_Blk_Rot | 435 | 124 | 62 |
| Apple Cedar Rust | Fungi | A_C_Rust | 192 | 55 | 28 |
| Apple Healthy | - | A_Healthy | 1151 | 329 | 165 |
| Blueberry Healthy | - | B_Healthy | 1051 | 300 | 151 |
| Cherry Healthy | - | Ch_Healthy | 598 | 171 | 85 |
| Cherry Powdery Mildew | Fungi | Ch_Mildew | 736 | 210 | 106 |
| Corn (maize) Common rust | Fungi | Corn_Rust | 835 | 238 | 119 |
| Corn (maize) Healthy | - | Corn_Healthy | 813 | 233 | 116 |
| Corn (maize) Northern Leaf Blight | Fungi | Corn_Blight | 690 | 197 | 98 |
| Corn (maize) Gray leaf spot | Fungi | Corn_Spot | 360 | 102 | 52 |
| Grape Black Rot | Fungi | G_Blk_Rot | 826 | 236 | 118 |
| Grape (Black Measles) | Fungi | G_Blk_Measles | 968 | 277 | 138 |
| Grape Healthy | - | Grp_Healthy | 296 | 85 | 42 |
| Grape Leaf Blight (Isariopsis Leaf Spot) | Fungi | Grp_Blight | 753 | 215 | 108 |
| Orange Huanglongbing (Citrus greening) | Bacteria | O_HLBing | 3855 | 1101 | 551 |
| Peach Bacterial Spot | Bacteria | Pec_Bact_Spot | 1608 | 459 | 230 |
| Peach Healthy | - | Pec_Healthy | 252 | 72 | 36 |
| Pepper Bell Bacterial Spot | Bacteria | Pep_Bact_Spot | 698 | 199 | 100 |
| Pepper Bell Healthy | - | Pep_Healthy | 1034 | 297 | 147 |
| Potato Early Blight | Fungi | Po_E_Blight | 700 | 200 | 100 |
| Potato Healthy | - | Po_Healthy | 107 | 30 | 15 |
| Potato Late Blight | Infection | Po_L_Blight | 700 | 200 | 100 |
| Raspberry Healthy | - | Ras_Healthy | 260 | 74 | 37 |
| Soybean Healthy | - | Soy_Healthy | 3563 | 1018 | 509 |
| Squash Powdery Mildew | Fungi | Sq_Powdery | 1285 | 367 | 183 |
| Strawberry Healthy | - | Straw_Healthy | 319 | 91 | 46 |
| Strawberry Leaf Scorch | Fungi | Straw_Scorch | 776 | 222 | 111 |
| Tomato Bacterial Spot | Bacteria | Tom_Bact_Spot | 1488 | 426 | 213 |
| Tomato Early Blight | Fungi | Tom_E_Blight | 700 | 200 | 100 |
| Tomato Healthy | - | Tom_Healthy | 1114 | 318 | 159 |
| Tomato Late Blight | Infection | Tom_L_Blight | 1336 | 382 | 191 |
| Tomato Leaf Mold | Fungi | Tom_L_Mold | 667 | 190 | 95 |
| Tomato Septoria leaf Spot | Fungi | Tom_Sept | 1240 | 354 | 177 |
| Tomato Spider Mites | Mite | Tom_Sp_Mite | 1174 | 335 | 167 |
| Tomato Target Spot | Fungi | Tom_Target | 984 | 280 | 140 |
| Tomato Mosaic Virus | Virus | Tom_Mosaic | 262 | 74 | 37 |
| Tomato Yellow Leaf Curl Virus | Virus | Tom_Curl | 3750 | 1071 | 536 |
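
The image counts in the table above are consistent with an approximately 70/20/10 split of each class into training, validation, and testing subsets (for example, Apple Scab: 441/126/63 out of 630 images). How the split was actually performed is not stated here, so the following is only a minimal sketch of one way to obtain such a per-class split; the function name, the fixed seed, and the file names are hypothetical.

```python
import random


def split_class_images(image_paths, train_frac=0.7, val_frac=0.2, seed=0):
    """Shuffle the images of one class and split them into train/val/test lists."""
    paths = list(image_paths)
    random.Random(seed).shuffle(paths)  # fixed seed keeps the split reproducible
    n_train = round(train_frac * len(paths))
    n_val = round(val_frac * len(paths))
    train = paths[:n_train]
    val = paths[n_train:n_train + n_val]
    test = paths[n_train + n_val:]  # the remainder goes to the test subset
    return train, val, test


# Hypothetical file names standing in for the 630 Apple Scab images.
apple_scab = [f"apple_scab_{i:04d}.jpg" for i in range(630)]
train, val, test = split_class_images(apple_scab)
print(len(train), len(val), len(test))
```

Splitting each class separately keeps the class proportions the same across the three subsets, which matters here because the class sizes range from about 150 to more than 5000 images.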

| Base Networks | Feature Extraction Methods | mAP (%) for COCO Dataset |
|---|---|---|
| SSD | Inception v2 | 24 |
| Faster-RCNN | Inception v2 | 28 |
| Faster-RCNN | ResNet-50 | 30 |
| Faster-RCNN | ResNet-101 | 32 |
| Faster-RCNN | Inception-ResNet v2 | 37 |
| R-FCN | ResNet-101 | 30 |

| DL Optimizers | lr | Momentum | β1 / decay | β2 | ε |
|---|---|---|---|---|---|
| SGD with Momentum (default) | 0.01 | 0.9 | - | - | - |
| SGD with Momentum (modified) | 0.0003 | 0.9 | - | - | - |
| Adam (default) | 0.001 | - | 0.9 | 0.999 | 1 × 10⁻⁸ |
| Adam (modified) | 0.0002 | - | 0.9 | 0.9997 | 1 × 10⁻³ |
| RMSProp (default) | 0.001 | 0.0 | 0.9 | - | 1 × 10⁻⁸ |
| RMSProp (modified) | 0.0004 | 0.0 | 0.95 | - | 1 × 10⁻² |
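
To make the role of these hyperparameters concrete, the following sketch implements the textbook update rules of the three optimizers [35,36,37] for a single scalar weight, using the "modified" values from the table above as defaults. It assumes that the unlabeled columns are β1 (or the RMSProp decay rate), β2, and ε, which matches the standard Adam defaults in the table. The toy objective, the function names, and the number of steps are hypothetical; this is not the training configuration of the detection models.

```python
import math


def sgd_momentum_step(w, grad, state, lr=0.0003, momentum=0.9):
    """SGD with momentum: accumulate a velocity, then step along it."""
    state["v"] = momentum * state.get("v", 0.0) + grad
    return w - lr * state["v"]


def rmsprop_step(w, grad, state, lr=0.0004, decay=0.95, eps=1e-2):
    """RMSProp: scale the step by a running average of squared gradients."""
    state["s"] = decay * state.get("s", 0.0) + (1.0 - decay) * grad * grad
    return w - lr * grad / (math.sqrt(state["s"]) + eps)


def adam_step(w, grad, state, lr=0.0002, beta1=0.9, beta2=0.9997, eps=1e-3):
    """Adam: bias-corrected first and second moment estimates."""
    state["t"] = state.get("t", 0) + 1
    state["m"] = beta1 * state.get("m", 0.0) + (1.0 - beta1) * grad
    state["v"] = beta2 * state.get("v", 0.0) + (1.0 - beta2) * grad * grad
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    return w - lr * m_hat / (math.sqrt(v_hat) + eps)


def minimise(step_fn, steps=2000, w0=0.0, target=3.0):
    """Minimise the toy loss (w - target)^2 with the given update rule."""
    w, state = w0, {}
    for _ in range(steps):
        grad = 2.0 * (w - target)  # derivative of (w - target)^2
        w = step_fn(w, grad, state)
    return w


for name, step_fn in [("SGD with momentum", sgd_momentum_step),
                      ("RMSProp", rmsprop_step),
                      ("Adam", adam_step)]:
    w = minimise(step_fn)
    print(f"{name}: w = {w:.4f}, loss = {(w - 3.0) ** 2:.4f}")
```

On this toy problem, RMSProp and Adam move by roughly the learning rate per step regardless of the gradient magnitude, whereas the SGD step scales with the gradient; behaviour on a detection loss can differ substantially, so the comparison is illustrative only.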

| Annotated Class Labels | R-FCN ResNet-101 (SGD with Momentum) | Faster-RCNN ResNet-50 (SGD with Momentum) | Faster-RCNN Inception ResNet-v2 (SGD with Momentum) | Faster-RCNN Inception-v2 (SGD with Momentum) | Faster-RCNN ResNet-101 (SGD with Momentum) | Faster-RCNN ResNet-101 (RMSProp) | Faster-RCNN ResNet-101 (Adam) | SSD Inception-v2 (SGD with Momentum) | SSD Inception-v2 (RMSProp) | SSD Inception-v2 (Adam) |
|---|---|---|---|---|---|---|---|---|---|---|
| A_Scab | 90.61 | - | 90.04 | 31.22 | 61.37 | 99.83 | 22.93 | 27.24 | 36.16 | 27.27 |
| A_Blk_Rot | 47.36 | 89.69 | - | 24.6 | 41.82 | 57.41 | 43.82 | 45.42 | 45.45 | 54.55 |
| A_C_Rust | 90.24 | 65.33 | - | 7.27 | 34.98 | 91.64 | 35.48 | 45.45 | 45.45 | 54.55 |
| A_Healthy | 90.85 | 99.97 | 2.19 | 75.91 | 43.42 | 6.71 | 18.13 | 90.91 | 100 | 90.91 |
| B_Healthy | 99.97 | - | 100 | 85.58 | 90 | 22.77 | 88.8 | 100 | 90.91 | 100 |
| Ch_Healthy | 79.22 | - | - | 87.32 | 75.69 | 99.84 | 63.66 | 90.86 | 90.91 | 90.91 |
| Ch_Mildew | 40.46 | - | - | 99.62 | 99.55 | 95.39 | 98.87 | 36.36 | 45.45 | 45.45 |
| Corn_Rust | 0.76 | - | - | 99.85 | 99.89 | 100 | 99.63 | 90.91 | 99.89 | 99.92 |
| Corn_Healthy | - | - | - | 100 | 99.96 | 76.13 | 90.87 | 90.91 | 90.91 | 90.91 |
| Corn_Blight | 42.57 | - | - | 51.01 | 66.34 | 93.53 | 96.09 | 45.45 | 54.55 | 54.29 |
| Corn_Spot | 0.31 | - | - | 1.15 | 20.56 | 31.83 | 10.95 | 0.73 | 5.62 | 33.13 |
| G_Blk_Rot | 7 | - | - | 1.16 | 53.9 | 53.87 | 0.05 | 71.26 | 72.73 | 72.73 |
| G_Blk_Measles | 0.09 | - | - | 100 | 100 | 100 | 99.61 | 100 | 90.91 | 99.87 |
| Grp_Healthy | - | - | - | 99.59 | 99.3 | 98.86 | 90.55 | 100 | 90.91 | 100 |
| Grp_Blight | 0.21 | - | - | 72.73 | 99.93 | 94.29 | 81.06 | 72.73 | 72.73 | 72.73 |
| O_HLBing | 12.74 | - | - | 99.99 | 90.91 | 98.14 | 90.91 | 90.91 | 90.91 | 90.91 |
| Pec_Bact_Spot | 0.28 | - | - | 14.23 | 40.23 | 74.16 | - | 9.09 | 18.14 | 27.23 |
| Pec_Healthy | - | - | - | 8.21 | 34.11 | 42.93 | 5.34 | 90.91 | 99.28 | 100 |
| Pep_Bact_Spot | 6.8 | - | - | 1.86 | 2.58 | 35.61 | - | 9.09 | 18.11 | 36.27 |
| Pep_Healthy | 50.95 | - | - | 6.45 | 2.11 | 19.08 | 2.33 | 90.91 | 90.8 | 90.91 |
| Po_E_Blight | 59.95 | - | - | - | 2.51 | 32 | - | 9.09 | 9.09 | 26.84 |
| Po_Healthy | - | - | - | - | - | 0.18 | - | 90.91 | 90.61 | 90.91 |
| Po_L_Blight | 94.77 | - | - | - | - | 1.79 | - | 29.16 | 43.81 | 44.92 |
| Ras_Healthy | 0.23 | - | - | 0.33 | 1.6 | 9.53 | 1.14 | 90.91 | 100 | 100 |
| Soy_Healthy | 88.11 | - | - | 26.03 | 59.43 | 9.6 | 3.35 | 90.91 | 90.13 | 90.91 |
| Sq_Powdery | 99.46 | - | - | 52.68 | 99.4 | 5.65 | 54.61 | 81.82 | 90.91 | 81.82 |
| Straw_Healthy | 99.3 | - | - | 53.33 | 18.07 | 1.1 | 62.53 | 100 | 90.91 | 100 |
| Straw_Scorch | 100 | 98.34 | - | 72.62 | 70.47 | 86.45 | 6.94 | 72.66 | 72.7 | 72.69 |
| Tom_Bact_Spot | 98.85 | - | 99.93 | 0.29 | 2.3 | 5.57 | - | 18.03 | 26.67 | 36.28 |
| Tom_E_Blight | - | - | - | 7.04 | 39.38 | 64.2 | 11.59 | 27.12 | 36.36 | 54.45 |
| Tom_Healthy | 0.2 | - | - | 100 | 87.13 | 49.65 | 100 | 100 | 100 | 100 |
| Tom_L_Blight | - | - | - | 99.96 | 99.96 | 95.92 | 90.36 | 81.79 | 81.72 | 90.77 |
| Tom_L_Mold | 3.87 | - | - | 96.21 | 98.7 | 99.55 | 82.21 | 45.41 | 63.6 | 63.64 |
| Tom_Sept | - | - | - | 99.56 | 95.24 | 100 | 99.83 | 90.86 | 90.88 | 90.91 |
| Tom_Sp_Mite | 85.12 | - | - | 98.52 | 98.06 | 98.73 | 99.9 | 90.88 | 90.88 | 90.91 |
| Tom_Target | 1.12 | - | - | 61.56 | 99.98 | 83.77 | 96.41 | 35.81 | 35.24 | 45.4 |
| Tom_Mosaic | 98.15 | - | - | 9.8 | 85.98 | 22.17 | - | 72.73 | 54.55 | 63.64 |
| Tom_Curl | 85.48 | - | - | 99.68 | 99.98 | 99.86 | 100 | 100 | 100 | 100 |
| mAP (%) | 41.45 | 9.30 | 7.69 | 51.19 | 60.92 | 59.41 | 48.63 | 66.51 | 68.89 | 73.07 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Saleem, M.H.; Khanchi, S.; Potgieter, J.; Arif, K.M. Image-Based Plant Disease Identification by Deep Learning Meta-Architectures. Plants 2020, 9, 1451. https://doi.org/10.3390/plants9111451