1. Introduction

Wildfires pose serious hazards to ecological systems and human safety. To prevent fire accidents, fire risk assessment, fire potential mapping, and fire surveillance systems aim to support forest management and to prevent forest fires or detect them at an initial stage. Wildfire risk assessment can be defined as a combination of fire likelihood, intensity, and effects [1]. Risk assessment therefore also contributes to identifying optimal locations for installing fire detection sensors. Fire detection methods based on physical sensors or vision-based algorithms have been widely studied. In the past, most traditional detection systems were based on physical sensors. However, in recent decades, various kinds of vision-based security and surveillance systems have been developed. Combined with computer vision and image processing methods, they can achieve accurate early fire detection, protecting human life and enhancing environmental security [2].

Various methods have been applied for wildfire risk assessment [3], which aims to minimize threats to life, property, and natural resources. These include empirical or statistical studies of ignition and large-fire patterns [4] and simulation modelling, in which probabilities are estimated using Monte Carlo sampling of weather data [5]. Both empirical and simulation studies play an important role in understanding the factors that contribute to the severity and spread of fires. Empirical studies collect data through the observation and analysis of real-world fire events and their impact on human and ecological systems. This type of research is crucial for understanding the factors that inform spatial planning and the development of policies aimed at increasing preparedness for large fires, and it provides valuable insights into the human and ecological aspects of fire risk that can guide more effective and sustainable fire management strategies. However, such studies are often site-specific. Wildfire simulation models, on the other hand, use physical models to estimate fire behaviour parameters and are required for fine-scale burn probability mapping [6,7]. Integrating spatial information from both empirical and simulation modelling makes it possible to account for the interplay between the human and biophysical factors that drive wildfire risk, producing a more comprehensive understanding of these drivers and enabling better decisions on mitigation and preparedness. By combining the strengths of both approaches, a more holistic and accurate assessment of wildfire risk can be achieved [3].

The mapping of wildfire likelihood and potential fire hazards is used to identify forest areas with higher fire risk, where surveillance or monitoring systems can be installed. According to recent review studies, terrestrial systems have high accuracy and quick response times but limited coverage. To address the limitation of single-point sensors, extensive networks of ground sensors as well as aerial and satellite-based systems have been proposed as alternatives. These systems provide improved coverage and have demonstrated high accuracy and quick response times. While terrestrial and aerial systems can detect fires at an early stage, satellite-based imaging sensors such as MODIS can detect small fires, but typically only within 2 to 4 h after observation [2]. Given the importance of early fire detection and broad coverage, this study considers the use of aerial surveillance systems.

Traditional forest fire surveillance systems capture real-time scenes and provide continuous monitoring, but blind spots always exist if the cameras have a limited field of view (FOV). This problem can be addressed by employing either a sensor network or omnidirectional cameras with a wider FOV. Early methods for achieving omnidirectional detection used PTZ (pan/tilt/zoom) cameras or multiple camera clusters [8]. However, their shortcomings, such as the slow movement of the PTZ mechanism and the additional installation and maintenance costs, restrict their wider application. Later, cameras with omnidirectional vision sensors, such as parabolic mirrors or fisheye lenses, became increasingly popular owing to their suitability for real-time applications and their low cost [9,10,11]. In recent years, autonomous unmanned aerial vehicles (UAVs) for early fire detection and firefighting have been introduced. The goal of aerial systems is to provide a more comprehensive and precise understanding of fires from an aerial perspective. To achieve this, UAVs integrate a variety of remote sensing technologies with computer vision techniques based on either machine learning or deep learning [12]. They mostly use ultra-high-resolution optical or infrared cameras and integrate various sensors for navigation and communication. More recently, 360-degree remote sensing systems have been proposed to capture images with an unrestricted field of view and enable early fire detection.

Traditional wildfire detection methods that utilize optical sensors have long relied on features related to the physical properties of fire, including its colour, motion, spectral, spatial, temporal, and textural characteristics. These features are combined and analysed to detect and locate wildfires [2,8]. Unlike traditional methods that rely on handcrafted features, deep learning techniques can automatically learn and extract intricate feature representations, which has led to improved performance in various detection and classification tasks [2,13,14,15,16]. Barmpoutis et al. [17] went a step further by combining deep learning with higher-level multidimensional texture analysis based on higher-order linear dynamical systems, aiming to enhance the analysis of complex patterns and structures in the data and thereby improve early fire detection. More recently, vision transformers [18,19,20,21,22], inspired by the transformer model developed for natural language processing [23], have been employed for various applications, including fire detection and fire classification. Attention layers have been utilized in different ways by vision transformers. For example, Barmpoutis et al. [20] investigated the use of a spatial and multiscale feature enhancement module; Xu et al. [21] designed a fused axial attention module capturing local and global spatial interactions; and Tu et al. [22] introduced a multiaxis attention model that utilizes global–local spatial interactions. Focusing on fire detection, Ghali et al. [24] used two vision transformer-based models, namely TransUNet and MedT, which extract both global and local features in order to reduce fire-pixel misclassifications. In another study, a multihead attention mechanism and an augmentation strategy were applied to remote sensing classification, including environmental monitoring and forest detection [25]. An extended approach [26], using a deep ensemble learning method that combines two vision transformers with a deep convolutional model, was employed to first classify wildfires in aerial images and then segment wildfire regions. Other researchers focused on distinguishing clouds from smoke. More specifically, Li et al. [27] used an attention model, a recursive bidirectional feature pyramid network (RBiFPN), as the backbone network of the YOLOv5 framework, improving the detection accuracy of wildfire smoke. These methods have contributed significantly to increasing fire detection accuracy.

In this paper, given the urgent need to protect forest ecosystems, 360-degree sensors mounted on UAVs and vision transformers are used for early fire detection. More specifically, this paper makes the following contributions:

Fire risk assessment for a Mediterranean suburban forest named Seich Sou is performed.

A model (FIRE-mDT) that combines ResNet-50 and a multiscale deformable transformer model is adapted and introduced for early and accurate fire detection as well as for fire location and propagation estimation.

A dataset of 60 images of a real fire event that occurred on 13 July 2021 in the suburban Seich Sou Forest was created. This case was used to evaluate fire detection performance in a real scenario.

Further validation was performed using the “Fire detection 360-degree dataset” [17].

2. Mediterranean Suburban Fires

The current trend of climate change in the Mediterranean region, which causes more intense summer droughts and more frequent extreme weather events, has resulted in an increased number of annual forest fires in the region during the last decades. More specifically, fire occurrence has doubled over the last century, both in the number of fires (Figure 1) and in burned area (Figure 2) [28]. Comparing the number of fires in 2021 with the 2011–2020 average shows that, although the Iberian Peninsula countries recorded fewer fires in 2021 than their 2011–2020 average, there is still a noticeable trend of increasing fire occurrence and burned area in the Mediterranean countries. This highlights the need for continued vigilance and efforts to mitigate wildfire risk in these regions, as well as the need to develop effective early fire detection systems [29]. It is worth mentioning that 2021 was a particularly devastating year for forest fires, with many experts attributing the severity of the fires to a combination of climate change, extreme weather events, and the impacts of the COVID-19 pandemic on land management practices.

An analysis of forest fire risk in the Mediterranean basin projects a notable increase in the number of weeks with fire risk in all Mediterranean land areas between 2030 and 2060. This increase is estimated at 2 to 6 weeks, with a significant proportion of it classified as extreme fire risk. This implies a heightened likelihood of more intense and destructive fires in the region, with potentially far-reaching impacts on both the environment and local communities [30]. In addition, according to a 2022 study [31], instances of extreme wildfires are forecast to increase by 14 percent by 2030 and by 30 percent by 2050.

In addition, to explore the evolution of research on forest and suburban fires in the Mediterranean region, we conducted a bibliometric study.

Figure 3 and Figure 4 show the trend in the number of articles published between 2000 and 2021. The findings reveal an increase in the number of publications in both fields over the past 20 years. However, the number of published articles on Mediterranean fires is higher than that on suburban fires. Narrowing the Mediterranean fire results to the suburban fire research area yielded just 16 articles published since 2000. Thus, although suburban forest fires pose a serious threat to both urban and rural communities, since the combination of dense vegetation and close proximity to homes and businesses can lead to rapid spread and significant damage, the bibliometric results indicate that research on Mediterranean and suburban fires is still evolving. In addition, Figure 5, which shows the ratio of the number of articles on suburban fires to the number of articles on Mediterranean fires, indicates that Mediterranean suburban fires have been insufficiently investigated.

3. Materials and Methods

The focus of this study was the suburban forest of Thessaloniki known as Seich Sou. This forest is considered one of the most significant suburban forests in Greece and hosts a diverse range of plant and animal life. However, in 1997, the forest experienced a devastating wildfire that destroyed more than half of its area. The impact of the 1997 wildfire has been far-reaching, affecting not only the forest ecosystem but also the local community [33]. The data used in the present study were captured at various sites in the Thessaloniki metropolitan area. First, a fire risk assessment was performed; then, a UAV equipped with 360-degree cameras was used for forest surveillance.

3.1. Suburban Forest Fire Risk Assessment

Wildfire risk assessment requires Geographic Information System (GIS) technology or aerial monitoring [34] to create, manage, and provide datasets related to fuels, land cover, and topography [35]. Modelling fire behaviour also requires raster datasets such as canopy cover, elevation, fuel, slope, and aspect. Additionally, stand-scale features such as crown bulk density, stand height, and canopy base height are needed to characterize the canopy. Meteorological and fuel moisture data, as well as an ignition prediction raster, are also crucial components. Some of these data, such as topography and weather conditions, can be easily obtained from public databases. Others, such as canopy structure and fuels, require more advanced methods, such as remote sensing (LiDAR), spatial data analysis, and statistical tools, together with ground observations from forest records, to be generated with high accuracy [36].

3.1.1. Vegetation

Based on an examination of land cover, the Seich Sou forest may be classified into three primary forest classes: (1) Pinus brutia forest from previous replanting, (2) evergreen Ostryo-Carpinion (pseudomaquis) vegetation, and (3) the burned portion of the forest, with vegetation that developed after the 1997 fire (Figure 6). The land cover in the area is primarily forest land (89.7%), followed by cropland (6.6%) and pastures (1.3%). The remaining 2.4% consists of the ring road and a few other special areas. The average age of the Seich Sou forest is about 70 years, and the dominant tree species is Pinus brutia L.

3.1.2. Distance from Buildings and Urban Fabric

The forest has very long boundaries with the city of Thessaloniki, a fact which significantly increases the threat of cross-boundary fire transmission.

3.1.3. Topography

Topography is described by datasets of altitude, slope, and aspect. Elevation regulates the adiabatic changes in temperature and humidity. Together with aspect, slope is required for calculating the direct effects on fire spread, adjusting fuel moisture, and converting spread velocities and directions. With a mean elevation of 306.3 m above sea level, a maximum of 569.5 m, and a minimum of 56.8 m, the forest’s relief can be described as hilly to semi-mountainous and relatively steep, with a mean slope of 26.2% and a dominant aspect of SE–S–SW (Figure 7).
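To illustrate how slope and aspect layers such as those summarized above can be derived from an elevation raster, a minimal Python sketch follows. It assumes a north-up digital elevation model (DEM) with square cells and uses central differences via numpy.gradient; the function name slope_aspect is hypothetical, and GIS packages often use other finite-difference kernels (e.g., Horn's method), so their values may differ slightly.

```python
import numpy as np

def slope_aspect(dem: np.ndarray, cell_size: float):
    """Slope (percent) and aspect (degrees clockwise from north) of a DEM.

    Assumptions: rows run north -> south, columns run west -> east, square
    cells of `cell_size` metres; derivatives are central differences.
    Aspect is meaningless on perfectly flat cells.
    """
    dz_drow, dz_dcol = np.gradient(dem.astype(float), cell_size)
    dz_dx = dz_dcol      # elevation change per metre towards the east
    dz_dy = -dz_drow     # towards the north (row index grows southwards)
    slope_pct = 100.0 * np.hypot(dz_dx, dz_dy)
    # Aspect = compass direction of steepest descent, i.e. of (-dz_dx, -dz_dy)
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    return slope_pct, aspect
```

A south-facing plane that drops 2 m per 10 m cell towards the south, for example, yields a slope of 20% and an aspect of 180°, consistent with the SE–S–SW exposure reported for the forest.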

3.1.4. Surface Fuel

Grasslands and forest stands burn differently across the elevation gradient. Vegetation density and height, the mixture of grass, trees, and shrubs, and dead vegetation fuel strongly influence the probability of ignition and the spread of fire. The vegetation of the forest mainly has a low moisture content. Furthermore, the bark beetle Tomicus piniperda has caused a severe insect infestation since May 2019 [37], which was discovered through the necrosis of several pine trees (Pinus brutia L.). More than 300 hectares of forest have been lost to the infestation, which is still active today. To slow and stop the spread of the infestation, extensive selective logging has recently been carried out and affected trees have been removed from the forest. Many dead trees are still present in the forest, increasing the dead fuel load.

3.1.5. Weather Data

The climate of the region is a typical Mediterranean climate, with hot, dry summers and mild winters, as shown by the available meteorological data (Figure 8). The average annual rainfall in the area is 444.5 mm, with the highest levels in winter (December) and a secondary peak in spring (May). The average annual temperature is 15.9 °C, with an average maximum temperature of 20.4 °C and an average minimum temperature of 10.1 °C. The dominant wind direction during the summer (June, July, August) is N–NW, and the wind speed exceeds 3.4 m/s on 33 days during the summer. These summer weather conditions increase the fire risk.
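Summer wind statistics of this kind could be derived from daily station records along the lines of the following sketch. The record format (date, daily mean wind speed in m/s), the use of daily means, and the eight 45-degree compass sectors are assumptions for illustration, and the function names are hypothetical.

```python
from datetime import date
from typing import Iterable, Tuple

SUMMER_MONTHS = {6, 7, 8}  # June, July, August
SECTORS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]

def windy_summer_days(records: Iterable[Tuple[date, float]], threshold: float = 3.4) -> int:
    """Count summer days whose (assumed daily mean) wind speed in m/s exceeds `threshold`."""
    return sum(1 for day, speed in records if day.month in SUMMER_MONTHS and speed > threshold)

def dominant_sector(directions_deg: Iterable[float]) -> str:
    """Most frequent of eight 45-degree compass sectors for wind directions in degrees."""
    counts = {s: 0 for s in SECTORS}
    for d in directions_deg:
        # Shift by 22.5 deg so that each sector is centred on its compass point
        idx = int(((d % 360.0) + 22.5) // 45.0) % 8
        counts[SECTORS[idx]] += 1
    return max(counts, key=counts.get)
```

Using a strict inequality mirrors the "exceeds 3.4 m/s" criterion; a day at exactly 3.4 m/s is not counted.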

To conclude, the fire risk parameters analysed above, as well as other studies [38], suggest that Seich Sou, one of the most important suburban forests in Greece, is prone to fires. The rapid spread of fires in suburban forest areas can cause significant damage to both property and wildlife. Thus, implementing a machine-learning-based early fire detection system in suburban forests can provide the surveillance necessary for prompt fire detection and contribute to reducing the harm caused by forest fires. Furthermore, early fire detection is crucial for reducing the costs of wildfire suppression (Table 1), as firefighting resources can be mobilized quickly, preventing the fire from spreading and becoming more difficult and expensive to manage.

3.2. Forest Surveillance Using Omnidirectional Cameras and Deep Vision Transformers

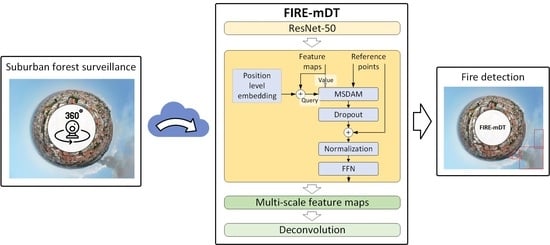

The proposed model (FIRE-mDT), which aims to detect fires at an early stage in 360-degree data, combines a ResNet-50 backbone with a deformable transformer encoder-based feature extraction module [40] (Figure 9). The proposed model was applied to stereographic projections, as these perform well at minimizing distortions.
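One way to obtain a stereographic ("little planet") view from an equirectangular 360-degree frame is sketched below. It assumes that the projection is centred on the nadir (straight below the UAV), that row 0 of the panorama is the zenith and the panorama spans 360° of longitude, and it uses nearest-neighbour sampling. The function name and the extent parameter are hypothetical; this is not the FIRE-mDT preprocessing code.

```python
import numpy as np

def equirect_to_stereographic(pano: np.ndarray, out_size: int, extent: float = 2.0) -> np.ndarray:
    """Nadir-centred stereographic view of an equirectangular panorama (H, W[, C]).

    `extent` is the half-width of the output plane in units of the sphere
    radius; extent = 2 places the horizon at the edge midpoints.
    """
    h, w = pano.shape[:2]
    # Plane coordinates of output pixel centres, in units of the sphere radius
    coords = ((np.arange(out_size) + 0.5) / out_size * 2.0 - 1.0) * extent
    u, v = np.meshgrid(coords, coords)        # u varies along columns, v along rows
    r = np.hypot(u, v)
    # Inverse stereographic projection (r = 2 tan(theta / 2)): angle from the nadir
    theta = 2.0 * np.arctan(r / 2.0)
    lat = theta - np.pi / 2.0                 # -pi/2 at the nadir, 0 at the horizon
    lon = np.arctan2(v, u)                    # azimuth around the nadir
    rows = np.clip((np.pi / 2.0 - lat) / np.pi * h, 0, h - 1).astype(int)
    cols = np.clip((lon + np.pi) / (2.0 * np.pi) * w, 0, w - 1).astype(int)
    return pano[rows, cols]
```

The stereographic mapping is conformal, so it preserves local shapes, which is one reason such projections keep distortion of small fire and smoke regions low; bilinear instead of nearest-neighbour sampling would give smoother output.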

The detection transformer (DETR) [41] is a cutting-edge end-to-end detector; however, it requires a significant amount of memory, both for training and for use in real-time scenarios. More specifically, DETR has a large memory footprint due to its composition of a convolutional backbone, six encoders and decoders, and a prediction head, as well as the need to store the self-attention weights within each multihead self-attention layer. Therefore, for more efficient early fire detection, the deformable DETR is preferred over the original detection transformer [42]. The deformable DETR computes attention weights more efficiently, since it calculates them only at a small set of sampling locations rather than at every pixel of the feature map. This permits training and testing the model with high-resolution data on standard GPUs, making it more accessible and convenient to use. Furthermore, extensive experiments have shown its performance to be superior to that of Faster R-CNN [43], making the deformable DETR a promising solution for early fire detection.
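To make the memory argument concrete, the sketch below counts the attention weights per head for dense self-attention and for multiscale deformable attention. It assumes one query per feature-map pixel and a fixed number of sampling points per level (K = 4 in the example is an assumption borrowed from the common Deformable DETR default); projection weights and activations are ignored, and the function names are hypothetical.

```python
def dense_attention_weights(h: int, w: int) -> int:
    """Attention weights per head for full self-attention over an h x w feature map."""
    n = h * w
    return n * n  # every query attends to every key

def deformable_attention_weights(level_shapes, n_points: int) -> int:
    """Attention weights per head for multiscale deformable attention.

    Each query (one per pixel, summed over all levels) attends to
    `n_points` sampling locations on each of the L levels.
    """
    n_queries = sum(h * w for h, w in level_shapes)
    return n_queries * len(level_shapes) * n_points
```

For a single 64 × 128 feature map, dense attention stores 8192² ≈ 6.7 × 10⁷ weights per head, whereas deformable attention with four sampling points stores 8192 × 4 = 32,768: the cost grows linearly rather than quadratically with the number of pixels.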

The FIRE-mDT model is designed to retain high-level semantic information while maintaining feature resolution, which is achieved through the combination of the ResNet-50 backbone and the deformable transformer module. Setting the stride and dilation of the final stage of the backbone to 1 and 2, respectively, allows FIRE-mDT to extract higher-level semantic information without further reducing the spatial resolution. The final three feature maps are then prepared for the encoder: the first two are upsampled by a factor of two, and the third is encoded through a convolutional layer. The resulting multiscale feature maps are concatenated, group normalized, and finally passed into the deformable transformer encoder for multiscale feature extraction. The result of this process is the multiscale deformable attention feature map, which incorporates the adaptive spatial features added by the self-attention mechanism in the encoder. In this way, the model takes into account both local and global dependencies, which are enhanced through the self-attention mechanism.
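The effect of the stride and dilation setting can be checked with the standard convolution output-size formula. The sketch below assumes a torchvision-style ResNet-50 layout (7 × 7 stride-2 stem, 3 × 3 stride-2 max pooling, and a stride-2 3 × 3 convolution at the start of stages 3 to 5) and tracks a single spatial dimension; it is an illustration with hypothetical function names, not the FIRE-mDT code.

```python
def conv_out(n: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output length of a convolution or pooling layer along one spatial axis."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

def resnet50_stage_sizes(n: int, dilate_last: bool = True):
    """Spatial size of the C2..C5 feature maps for an input side of length n."""
    n = conv_out(n, 7, stride=2, padding=3)      # stem convolution, stride 2
    c2 = conv_out(n, 3, stride=2, padding=1)     # max pooling -> overall stride 4
    c3 = conv_out(c2, 3, stride=2, padding=1)    # overall stride 8
    c4 = conv_out(c3, 3, stride=2, padding=1)    # overall stride 16
    if dilate_last:
        # Stride 1, dilation 2 (padding 2): resolution is kept at stride 16
        c5 = conv_out(c4, 3, stride=1, padding=2, dilation=2)
    else:
        c5 = conv_out(c4, 3, stride=2, padding=1)  # default: overall stride 32
    return c2, c3, c4, c5
```

For a 512-pixel side, the last stage yields 32 positions instead of 16, so the final feature map keeps twice the resolution along each axis. In torchvision, this configuration corresponds to the replace_stride_with_dilation=[False, False, True] option of the ResNet constructor.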

The deformable transformer encoder, a key component of the FIRE-mDT model, enhances the input feature maps with positional encodings and level information, resulting in the query vector $Q$. $Q$ is then used as input, along with the feature maps and reference points, to the multiscale deformable attention module (MSDAM), which extracts a multiscale deformable attention feature map. To generate this map, the MSDAM first computes value, weight, and location tensors and then employs them in the multiscale deformable attention function. The $q$-th element of the deformable attention feature $y$ at a single head $m$ is expressed as follows:

$$y_q = \sum_{k=1}^{K} A_{mqk} \, W'_m \, x\left(p_q + \Delta p_{mqk}\right)$$

where $q$, $m$, and $k$ index the components of the output deformable attention feature $y$, the attention head, and the sampling offsets, respectively. $z_q$, an element of $Q$, is the query feature from which the sampling offsets and attention weights are predicted. Furthermore, $p_q$ and $\Delta p_{mqk}$ denote the position of the reference point of $z_q$ and one of its $K$ corresponding sampling offsets in head $m$, respectively; $A_{mqk}$ is the attention weight of that sampling point, with $\sum_{k=1}^{K} A_{mqk} = 1$; $W'_m$ is the value projection of head $m$; and $x(\cdot)$ denotes the input feature map sampled at a (generally fractional) location. The numbers of sampling offsets and attention heads are denoted by $K$ and $M$, respectively. Afterwards, the input feature maps are combined with the deformable attention feature map and then processed through a feedforward network (FFN).
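A minimal NumPy sketch of the single-head, single-scale form of the equation above for one query is given below. The sampling offsets and attention logits are passed in directly (in Deformable DETR they are predicted from the query feature $z_q$ by linear layers), bilinear interpolation with zero padding is assumed for $x(\cdot)$, and all names are hypothetical.

```python
import numpy as np

def bilinear_sample(x: np.ndarray, px: float, py: float) -> np.ndarray:
    """Bilinearly sample feature map x (H, W, C) at continuous location (px, py).

    Neighbours outside the map contribute zeros (zero padding).
    """
    h, w, c = x.shape
    x0, y0 = int(np.floor(px)), int(np.floor(py))
    out = np.zeros(c)
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= xx < w and 0 <= yy < h:
                weight = (1.0 - abs(px - xx)) * (1.0 - abs(py - yy))
                out += weight * x[yy, xx]
    return out

def deformable_attention_head(x, ref_point, offsets, logits, w_value):
    """y_q = sum_k A_mqk * W'_m x(p_q + dp_mqk) for one query and one head.

    x: (H, W, C) feature map; ref_point: (px, py) reference point p_q;
    offsets: (K, 2) sampling offsets; logits: (K,) unnormalised attention scores;
    w_value: (C, C_v) value projection W'_m.
    """
    logits = np.asarray(logits, dtype=float)
    a = np.exp(logits - logits.max())
    a /= a.sum()                               # softmax: weights A_mqk sum to 1
    samples = np.stack([
        bilinear_sample(x, ref_point[0] + dx, ref_point[1] + dy)
        for dx, dy in offsets
    ])                                         # (K, C)
    values = samples @ w_value                 # (K, C_v)
    return a @ values                          # weighted sum over the K points
```

Only $K$ locations per query are touched, which is the source of the memory savings discussed for the deformable DETR; the multiscale variant repeats the inner sum over feature levels.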

Additionally, the FIRE-mDT model includes a final step that refines the output of the deformable encoder. This step involves a deconvolution layer and normalization, followed by feature fusion that merges multiple features and passes them through a convolutional layer. The final layer of the model is a regressor that outputs the fire detection results. To optimize model performance, we used a focal loss [44], which assigns higher weights to difficult examples by down-weighting well-classified ones, aiming to improve the precision of the model predictions. Optimization was carried out using the Adam optimizer together with a mean teacher method [45], which aims to improve the robustness of the model. The learning rate was set to 0.5 × 10⁻⁴ and decreased by a factor of 0.5 after 50 epochs. The model was trained on a single NVIDIA GeForce RTX 3090 GPU for 80 epochs with a batch size of 16.
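The loss, teacher update, and learning-rate schedule described above can be sketched as follows. The focal loss parameters (α = 0.25 and γ = 2, the values recommended in the original focal loss paper) and the teacher decay of 0.999 are assumptions, since the text does not state them; epochs are counted from 0, and the function names are hypothetical.

```python
import numpy as np

def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss -alpha_t (1 - p_t)^gamma log(p_t), averaged over elements.

    p: predicted fire probabilities; y: 0/1 labels. alpha and gamma are assumed values.
    """
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    y = np.asarray(y)
    p_t = np.where(y == 1, p, 1.0 - p)               # probability of the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)
    # (1 - p_t)^gamma shrinks the loss of easy (high p_t) examples
    return float(np.mean(-alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)))

def ema_update(teacher: dict, student: dict, decay: float = 0.999) -> dict:
    """Mean teacher: teacher weights are an exponential moving average of student weights."""
    return {k: decay * teacher[k] + (1.0 - decay) * student[k] for k in teacher}

def learning_rate(epoch: int, base_lr: float = 0.5e-4, step: int = 50, factor: float = 0.5) -> float:
    """Step schedule: base_lr for the first `step` epochs, then multiplied by `factor`."""
    return base_lr * (factor if epoch >= step else 1.0)
```

With γ = 0 and α = 0.5, the focal loss reduces to half the ordinary binary cross-entropy, which is a convenient sanity check.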

3.3. Dataset Description and Evaluation Metrics

For the evaluation of the proposed framework, we used two different datasets. The first dataset includes sixty 360-degree stereographic projection images of the fire event which occurred on 13 July 2021 in the suburban Seich Sou Forest in Thessaloniki. The dataset comprises fifty-eight images captured from the early stages to the late stages of the fire on 13 July 2021 and two images captured after the fire incident. In this fire incident, 90 acres of forest land were burned and 66 firefighters, 22 firefighting vehicles, three Canadair firefighting planes, and two helicopters were mobilized. This dataset was used for the evaluation of the proposed framework in a real fire scenario.

In addition, we used a publicly available dataset named “Fire detection 360-degree dataset” in order to compare the effectiveness of our proposed approach with other state-of-the-art methods. This dataset contains both synthetic and real fire data.

For the training of the proposed FIRE-mDT model, we used the Corsican Fire Database (CFDB) [46,47]. In addition to the flame annotations provided in the dataset, smoke annotations were also created in this study. To further increase the variability of the training dataset and make the model more robust, data augmentation was applied: random modifications such as rotation, flipping, and scaling were made to the training images to increase the diversity of the training data and help the model generalize to different conditions and scenarios.
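A paired image/mask augmentation of the kind described (flipping, rotation, scaling) is sketched below. To stay self-contained, it assumes rotations in multiples of 90° and a centred nearest-neighbour zoom with factors between 1 and 1.5; the actual angles and scale ranges used for training are not specified in the text, and the function name is hypothetical.

```python
import numpy as np

def augment(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator):
    """Apply the same random flip, 90-degree rotation and zoom to an image (H, W, C)
    and its label mask (H, W), so that annotations stay aligned with the pixels."""
    if rng.random() < 0.5:                           # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    k = int(rng.integers(0, 4))                      # rotate by k * 90 degrees
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    s = float(rng.uniform(1.0, 1.5))                 # zoom factor >= 1 (crop back to size)
    h, w = mask.shape
    # Nearest-neighbour source indices for a centred zoom that keeps the output size
    rows = np.clip(((np.arange(h) + 0.5 - h / 2) / s + h / 2).astype(int), 0, h - 1)
    cols = np.clip(((np.arange(w) + 0.5 - w / 2) / s + w / 2).astype(int), 0, w - 1)
    return image[np.ix_(rows, cols)], mask[np.ix_(rows, cols)]
```

Nearest-neighbour sampling is used for the mask so that label values are never interpolated into non-integer classes.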

In order to gauge the effectiveness of the proposed fire detection model, two evaluation metrics were employed:

F-score [48] and mean intersection over union (mIoU) [49]. The intersection over union, also known as the Jaccard index, is a well-established metric that quantifies the overlap between the detected fire region and the ground truth. It is calculated by dividing the area of overlap between the two regions by the area of their union. This metric indicates how accurately the model localizes fire in an image. More specifically, the mIoU is defined as follows:

$$\mathrm{mIoU} = \frac{1}{N} \sum_{i=1}^{N} \frac{|P_i \cap G_i|}{|P_i \cup G_i|}$$

where $P_i$ and $G_i$ are the detected fire region and the ground-truth region of the $i$-th evaluated sample, respectively, and $N$ is the number of evaluated samples.
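A direct implementation of the IoU and mIoU definitions above for binary masks follows. Treating a pair of empty masks as IoU = 1 is an assumption (conventions differ between benchmarks), and the function names are hypothetical.

```python
import numpy as np

def iou(pred, gt) -> float:
    """Jaccard index |P ∩ G| / |P ∪ G| of two boolean masks (1.0 if both are empty)."""
    pred, gt = np.asarray(pred, dtype=bool), np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, gt).sum() / union)

def mean_iou(preds, gts) -> float:
    """Average IoU over paired predicted and ground-truth masks."""
    return float(np.mean([iou(p, g) for p, g in zip(preds, gts)]))
```

For example, a 2 × 2 detection inside a 2 × 3 ground-truth region overlaps in 4 pixels out of a union of 6, giving an IoU of 2/3.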

The F-score is a widely adopted evaluation metric that assesses the accuracy of a fire detection model. It is calculated as the harmonic mean of precision and recall. Precision is the proportion of correctly identified fire regions among all detected regions, while recall is the proportion of correctly identified fire regions among all actual fire regions. To calculate precision and recall, we counted the correctly detected fire images (true positives, TP), the missed fire images (false negatives, FN), and the images that include false positives (FP). Because it considers both precision and recall, the F-score provides a more comprehensive evaluation of the model’s performance than either metric alone and helps to identify areas where improvement may be necessary. More specifically, the F-score is defined as follows:

$$F = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}$$
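The F-score definition above translates directly into code. The function name is hypothetical, and mapping zero-division cases to 0 is an assumption.

```python
def precision_recall_f(tp: int, fp: int, fn: int):
    """Precision, recall and F-score (harmonic mean) from image-level counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f = 2 * precision * recall / (precision + recall)
    return precision, recall, f
```

Algebraically, the F-score equals 2TP / (2TP + FP + FN), so true negatives do not affect it.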

4. Results and Discussion

The evaluation of the proposed fire detection methodology is presented in this section. The experimental evaluation has two main objectives: first, to show the performance of the fire detection model during the Thessaloniki fire event on 13 July 2021; second, to demonstrate the advantage of the proposed framework using 360-degree data over state-of-the-art approaches.

4.1. Case Study of the “Seich Sou” Suburban Forest of Thessaloniki

This study took place in the Seich Sou suburban forest of Thessaloniki, where a fire detection study of the fire event of 13 July 2021 (Figure 10) was performed. For the evaluation of the proposed framework, we created a 360-degree dataset consisting of sixty 360-degree equirectangular images of the Thessaloniki suburban forest fire event. As in the previous study [17], we used a 360-degree camera equipped with GPS and mounted on an unmanned aerial vehicle (UAV) to capture 360-degree images. The camera used in this study had a CMOS sensor with a 1/2.3-inch sensor size.

The proposed model was applied to the 360-degree images of the fire event in Seich Sou, achieving an F-score of 91.6%. To estimate the F-score and evaluate the effectiveness of the proposed fire detection algorithm, we counted the correctly detected images, i.e., images with at least one accurately detected fire region (true positives); the images correctly classified as containing no fire (true negatives); the missed fire images (false negatives); and the images that erroneously contained at least one detected fire region (false positives). The proposed model achieved forty-nine true positive images (Figure 11), correctly identifying regions of fire, as well as two true negative images, correctly identifying the absence of fire. However, there were also five false negative images, in which the model failed to detect the presence of fire (Figure 12). In addition, four images had at least one false positive fire region detected in a nonfire region. Overall, these results highlight both the strengths and the weaknesses of the proposed model: with a relatively high true positive rate and only four images containing false alarms, the framework handles both false negatives and false alarms well, indicating a high potential for practical use.
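As a consistency check of the image-level evaluation above, substituting the reported counts (TP = 49, FP = 4, FN = 5; the two true negatives do not enter the F-score) into the precision, recall, and F-score definitions of Section 3.3 reproduces the reported value. The four counts also sum to the 60 images of the dataset.

```latex
\begin{aligned}
\mathrm{Precision} &= \frac{49}{49 + 4} = \frac{49}{53} \approx 0.925, \\
\mathrm{Recall}    &= \frac{49}{49 + 5} = \frac{49}{54} \approx 0.907, \\
F &= \frac{2\,TP}{2\,TP + FP + FN} = \frac{98}{98 + 4 + 5} = \frac{98}{107} \approx 0.916 .
\end{aligned}
```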

In addition, we investigated the effectiveness of multiscale deformable attention feature maps for fire detection by comparing the use of a single deformable attention feature map with that of multiple deformable attention feature maps. The use of multiscale feature maps resulted in a slight improvement in the F-score, with an increase of 1.4. The results further showed that higher-level features attained better precision, while lower-level features obtained better recall.

Furthermore, using the altitude of the 360-degree camera (in the range of 18 m to 28 m) together with the GPS coordinates (latitude and longitude) of the UAV, the location of the fire was readily estimated, supporting fire management and planning (Figure 13 and Figure 14). In addition, capturing consecutive images enables the estimation of fire propagation. In this incident, the fire services were able to contain the fire quickly, owing to the combination of low wind conditions and their prompt response; as a result, the fire was successfully controlled and did not propagate significantly.
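The text does not detail how the fire location was computed. The sketch below shows one simple possibility under explicitly stated assumptions: flat terrain at the level from which the camera altitude is measured, an equirectangular frame whose column 0 points to geographic north and whose row 0 is the zenith, and a local flat-Earth conversion from metres to degrees. All names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)

def pixel_to_angles(col: float, row: float, width: int, height: int):
    """Azimuth (deg clockwise from north) and depression angle (deg below the horizon)
    of an equirectangular pixel. Assumes column 0 faces north and row 0 is the zenith."""
    azimuth = (col + 0.5) / width * 360.0
    elevation = 90.0 - (row + 0.5) / height * 180.0
    return azimuth % 360.0, -elevation

def locate_fire(lat: float, lon: float, altitude_m: float, azimuth_deg: float, depression_deg: float):
    """Estimate the fire's latitude, longitude and ground distance from the UAV position
    and viewing direction, assuming flat terrain below the camera."""
    if depression_deg <= 0:
        raise ValueError("the target must lie below the horizon")
    ground_dist = altitude_m / math.tan(math.radians(depression_deg))
    az = math.radians(azimuth_deg)
    d_north, d_east = ground_dist * math.cos(az), ground_dist * math.sin(az)
    # Small-offset conversion from metres to degrees (adequate over short ranges)
    dlat = math.degrees(d_north / EARTH_RADIUS_M)
    dlon = math.degrees(d_east / (EARTH_RADIUS_M * math.cos(math.radians(lat))))
    return lat + dlat, lon + dlon, ground_dist
```

For example, at 20 m altitude a fire pixel 45° below the horizon lies about 20 m from the point beneath the UAV. At shallow depression angles, the estimate becomes very sensitive to angle and terrain errors, so a digital elevation model would be needed for reliable ranging over hilly terrain such as Seich Sou.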

Furthermore, the Fire Information for Resource Management System (FIRMS) was used to analyse MODIS and Visible Infrared Imaging Radiometer Suite (VIIRS) data for this fire event. It is worth mentioning that MODIS captures data in 36 spectral bands, while VIIRS provides 22 spectral bands. For the suburban fire on 13 July 2021, neither MODIS (Figure 13) nor VIIRS (Figure 14) located the fire precisely, as both indicated flames on both sides of the Thessaloniki ring road. In reality, the fire started only on the upper side of the road. Such mislocalization could significantly affect operational fire management and rescue planning. In terms of latency, MODIS fire products are typically generated and delivered to fire management partners within 2–4 h of MODIS data collection under nearly optimal conditions. In contrast, terrestrial and aerial systems can detect fires at a very early stage with much shorter latency, providing fire management teams with critical information in time to respond quickly to fire incidents. These systems therefore offer a significant advantage over MODIS in the speed and effectiveness of detecting early signs of a fire, allowing fire management teams to take proactive measures to prevent fires from spreading.

4.2. Comparison Evaluation

In this study, the proposed fire detection algorithm was evaluated on the “Fire detection 360-degree dataset” [17]. This dataset comprises 150 omnidirectional (360-degree) images of forest and urban areas, containing both synthetic and real fire events. To benchmark the proposed framework against state-of-the-art methods, its evaluation results were compared with those of seven other methods. The comparison is presented in Table 2. The proposed method is compared against the SSD [50], FireNet [51], YOLO v3 [52], Faster R-CNN [43], Faster R-CNN/gVLAD encoding [13], U-Net [53], and DeepLab [17] architectures and outperforms all of them, which we attribute mainly to the integrated deformable transformer encoder. More specifically, the proposed system achieves an F-score of 95%, an improvement of up to 0.6% over the second-best approach, which combines two DeepLab v3+ models with an adaptive post-validation method. Notably, in contrast to the DeepLab approach [17], the proposed model is a single-stage, end-to-end approach. Similarly, the proposed model achieves an mIoU of 78.4%, compared with 77.1% for the second-best approach.
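For reference, the two reported metrics can be computed from pixel-level confusion counts, as in the following minimal sketch. It assumes binary masks (1 = fire, 0 = non-fire) flattened to sequences and defines mIoU as the mean of the fire and non-fire IoU. The exact averaging used in this study and in [17] (for example per image or over the whole dataset) may differ, and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # fire pixels predicted as fire
    fp: int  # non-fire pixels predicted as fire
    fn: int  # fire pixels predicted as non-fire
    tn: int  # non-fire pixels predicted as non-fire


def confusion(pred, truth):
    """Pixel-level confusion counts for flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of pixels")
    tp = sum(1 for p, t in zip(pred, truth) if p and t)
    fp = sum(1 for p, t in zip(pred, truth) if p and not t)
    fn = sum(1 for p, t in zip(pred, truth) if not p and t)
    tn = len(pred) - tp - fp - fn
    return Confusion(tp, fp, fn, tn)


def _ratio(num, den):
    # Convention assumed in this sketch: an empty denominator yields 0.0.
    return num / den if den else 0.0


def f_score(c):
    # F1 = 2PR / (P + R), written equivalently as 2TP / (2TP + FP + FN).
    return _ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn)


def mean_iou(c):
    # IoU per class, then the mean over the two classes (fire, non-fire).
    iou_fire = _ratio(c.tp, c.tp + c.fp + c.fn)
    iou_background = _ratio(c.tn, c.tn + c.fn + c.fp)
    return (iou_fire + iou_background) / 2.0
```

Because both metrics are computed from the same counts, false positives (for example, clouds or bright areas) and false negatives (for example, extinguished regions or thin smoke) lower the F-score and the fire-class IoU together.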

4.3. Discussion

Over recent decades, areas where urban and wildland regions meet or intermingle have expanded significantly around the world, and these areas face a high risk of forest fires. The interaction of many anthropogenic ignition sources and combustible forest vegetation with human infrastructure creates a fire-prone environment in which property and human lives are directly threatened. Thus, forest fire risk assessment and monitoring approaches need to be combined for effective prevention and early detection of forest fires. Fire risk assessment can help identify forests at risk and the optimal locations for installing fire detection sensors, while fire detection contributes to the protection of forests and can significantly reduce the area of forest land destroyed by fires.

Compared with traditional narrow field-of-view sensors, omnidirectional sensors provide a broader field of view from one or several viewpoints, supplying richer visual information about the recorded area without blind spots. Wide field-of-view or omnidirectional images can be acquired by rotating cameras or by multiple camera clusters. Rotating cameras can only capture omnidirectional images of static scenes and therefore cannot be used for real-time applications.

An omnidirectional camera can be used in real-time applications, which allows security problems to be addressed as they occur. In addition, replacing multiple narrow field-of-view cameras with a single omnidirectional camera can reduce installation and maintenance costs. Although camera clusters can provide relatively high-resolution omnidirectional images, advances in sensor resolution and video data storage have made wide coverage with rich detail increasingly affordable with 360-degree sensors.

Recently, 360-degree optical cameras have been suggested as a flexible and cost-effective remote sensing option for early fire detection. In this study, a vision transformer is introduced for early fire detection. In addition, we extended the evaluation of these cameras, applying omnidirectional sensors to a real forest fire scenario for the first time. The proposed single-stage model appears robust, indicating that the framework has the potential to significantly enhance early forest fire detection. In the case study of the Seich Sou fire event on 13 July 2021, the proposed framework achieved an F-score of 91.6%. The false negatives can be partly attributed to the fact that the forest fire had already been extinguished and was inactive during the later stages of image acquisition. As a result, some regions that had previously been on fire appeared as nonfire regions in the images, producing false negatives. Conversely, some nonfire regions, such as clouds or bright areas, may have been mistakenly identified as fire regions, producing false positives. The thinness of the smoke also made it harder for the model to detect fire regions accurately, contributing to both false negatives and false positives. Importantly, the proposed fire detection model outperforms other state-of-the-art methods on a publicly available dataset, which highlights the effectiveness and potential of the proposed approach for early fire detection.

On the other hand, deploying and maintaining a UAV-mounted 360-degree camera for fire surveillance involves several potential costs and challenges. The initial cost of acquiring and maintaining the necessary equipment, including the UAV itself, the 360-degree camera, sensors, software, and other accessories, can be high. The UAV’s limited battery life also constrains extended surveillance missions, which require multiple batteries and flights. In addition, weather conditions such as high winds, rain, and snow can affect the operation of the UAV and the 360-degree camera system and necessitate additional maintenance. Operating the UAV system also involves inherent safety risks, since incidents can damage the equipment or endanger personnel or the public. Personnel operating or maintaining the UAV system therefore need specialized training and certification. Moreover, the data collected by the 360-degree camera must be stored and analysed, which may require additional resources and infrastructure, and the UAV must be serviced regularly to keep it operating at peak performance.

Finally, the proposed methodology for early fire detection, including the conversion of equirectangular images to stereographic projections, achieves a processing speed of 5.3 frames per second (FPS). This speed is considered adequate for real-time applications, as it enables the methodology to keep up with rapidly changing conditions and to support quick decisions based on its detections. This is particularly important in fire detection, where early detection can be critical to preventing or mitigating the spread of fires.
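The equirectangular-to-stereographic conversion mentioned above can be illustrated with a minimal sketch. It assumes a nadir-centred view obtained by inverse stereographic projection from the zenith onto the unit sphere, followed by nearest-neighbour sampling of the panorama. The function name `equirect_to_stereographic` and its parameters `out_size` and `max_angle_deg` (the angular radius from nadir covered along the image axes) are hypothetical. The projection centre, orientation, and interpolation used in this study may differ.

```python
import numpy as np


def equirect_to_stereographic(equi, out_size=512, max_angle_deg=120.0):
    """Resample an equirectangular panorama (H x W or H x W x C) to a
    nadir-centred stereographic view of size out_size x out_size
    using nearest-neighbour sampling."""
    h, w = equi.shape[:2]
    # A sphere point at angle a from nadir lands at plane radius tan(a / 2).
    r_max = np.tan(np.radians(max_angle_deg) / 2.0)
    # Plane coordinates of every output pixel centre, spanning [-r_max, r_max].
    coords = ((np.arange(out_size) + 0.5) / out_size * 2.0 - 1.0) * r_max
    X, Y = np.meshgrid(coords, coords)
    r2 = X**2 + Y**2
    # Inverse stereographic projection from the zenith (0, 0, 1) onto the unit sphere.
    x = 2.0 * X / (1.0 + r2)
    y = 2.0 * Y / (1.0 + r2)
    z = (r2 - 1.0) / (1.0 + r2)
    lat = np.arcsin(np.clip(z, -1.0, 1.0))  # elevation, -pi/2 at nadir
    lon = np.arctan2(y, x)                  # azimuth in [-pi, pi]
    # Spherical angles -> equirectangular pixel indices (row 0 = zenith).
    col = ((lon + np.pi) / (2.0 * np.pi) * w).astype(int) % w
    row = np.clip(((np.pi / 2.0 - lat) / np.pi * h).astype(int), 0, h - 1)
    return equi[row, col]
```

Because the `row`/`col` lookup depends only on the panorama size and the chosen view, a real-time pipeline could compute it once and reuse it for every frame. Bilinear interpolation (for example, OpenCV's `cv2.remap` with precomputed maps) would give smoother output than the nearest-neighbour sampling used here.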