Deep Learning Approaches for Wildland Fires Remote Sensing: Classification, Detection, and Segmentation

Abstract

1. Introduction

- We explore and analyze recent advanced methods (between 2017 and 2022) for wildfire recognition, detection, and segmentation based on deep learning including vision transformers using aerial and ground images.

- We present the most widely used public datasets for forest fire classification, detection, and segmentation tasks.

- We discuss various challenges related to these tasks, highlighting the interpretability of deep learning models, data labeling, and preprocessing.

2. Deep Learning Approaches for Wildland Fire Classification

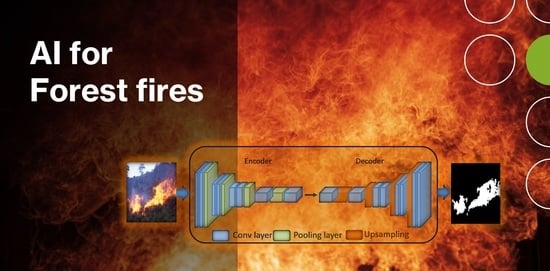

- Convolutional layers extract features from the input data. Activation functions are then applied to introduce nonlinearity into the network, allowing it to model more complex relationships. Numerous activation functions are used in the literature, such as ReLU (Rectified Linear Unit) [34], PReLU (parametric ReLU) [35], LReLU (Leaky ReLU) [36], and Sigmoid. The output of this layer is called a feature map or activation map.

- Pooling layers take the feature maps as input and reduce their size. Max-pooling and average pooling are the most widely used pooling methods [37].

- Fully connected layers convert the output of the feature extraction stage into a 1-D vector and predict the labels of objects in the input image by computing a confidence score.
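The three stages listed above can be illustrated on a toy example. The following is a minimal sketch in plain Python, not an implementation from any of the reviewed works: the 4 × 4 input values, the function names, and the 2 × 2 non-overlapping pooling window are all assumptions chosen for illustration, and the learned convolution itself is replaced by a fixed matrix standing in for a convolutional layer's output.

```python
import math


def relu(x):
    """ReLU: keep positive values, set negative values to zero."""
    return max(0.0, x)


def leaky_relu(x, slope=0.01):
    """Leaky ReLU: small fixed slope for negative inputs.
    PReLU has the same form, but the slope is learned during training."""
    return x if x > 0 else slope * x


def sigmoid(x):
    """Sigmoid: squashes any real value into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def average(values):
    return sum(values) / len(values)


def pool2x2(feature_map, reduce_fn):
    """Non-overlapping 2x2 pooling over a 2-D list with even height and width."""
    pooled = []
    for r in range(0, len(feature_map), 2):
        row = []
        for c in range(0, len(feature_map[0]), 2):
            window = [
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            ]
            row.append(reduce_fn(window))
        pooled.append(row)
    return pooled


# Hypothetical output of a convolutional layer (illustrative values only).
conv_output = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, 1.0, 0.0],
    [0.0, 1.0, -1.0, 2.0],
    [3.0, -4.0, 6.0, -0.5],
]

# Activation: apply ReLU element-wise to obtain the activation map.
activation_map = [[relu(v) for v in row] for row in conv_output]

# Pooling: halve each spatial dimension with max or average pooling.
max_pooled = pool2x2(activation_map, max)
avg_pooled = pool2x2(activation_map, average)

# Flattening: the 1-D vector that a fully connected layer would receive.
flattened = [v for row in max_pooled for v in row]

print(max_pooled)  # [[4.0, 1.0], [3.0, 6.0]]
print(avg_pooled)  # [[1.5, 0.375], [1.0, 2.0]]
print(flattened)   # [4.0, 1.0, 3.0, 6.0]
```

Max-pooling keeps the strongest response in each window, whereas average pooling smooths it, which is why the two pooled maps differ for the same activation map.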

3. Deep Learning Approaches for Wildland Fire Detection

3.1. One Stage Detectors

- Batch normalization.

- Image size change: 448 × 448 instead of 224 × 224 used by Yolo v1.

- Use of anchor boxes to predict multiple objects.

- Use of multi-scale training with image sizes ranging from 320 × 320 to 608 × 608.
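Two of the items in this list, anchor boxes and multi-scale training, lend themselves to a short illustration. The sketch below is a simplified reading under stated assumptions rather than the Yolo implementation: it assumes a network downsampling factor of 32 (so that the candidate training sizes are the multiples of 32 between 320 and 608), and the anchor shapes and function names are hypothetical.

```python
import random

STRIDE = 32  # assumption: overall downsampling factor of the detector

# Hypothetical anchor shapes as (width, height) in pixels.
HYPOTHETICAL_ANCHORS = [(30, 60), (90, 90), (200, 120)]


def multiscale_sizes(min_size=320, max_size=608, stride=STRIDE):
    """Square input sizes available for multi-scale training."""
    return list(range(min_size, max_size + 1, stride))


def pick_training_size(rng):
    """Draw one input size at random, as done periodically during training."""
    return rng.choice(multiscale_sizes())


def grid_cells(image_size, stride=STRIDE):
    """Number of prediction cells along one side of the output grid."""
    return image_size // stride


def shape_iou(box_wh, anchor_wh):
    """IoU of two boxes that share the same centre (shape comparison only)."""
    inter = min(box_wh[0], anchor_wh[0]) * min(box_wh[1], anchor_wh[1])
    union = box_wh[0] * box_wh[1] + anchor_wh[0] * anchor_wh[1] - inter
    return inter / union


def best_anchor(box_wh, anchors):
    """Index of the anchor whose shape overlaps the box the most."""
    return max(range(len(anchors)), key=lambda i: shape_iou(box_wh, anchors[i]))


print(multiscale_sizes())                            # 320, 352, ..., 608
print(grid_cells(320), grid_cells(608))              # 10 19
print(best_anchor((80, 100), HYPOTHETICAL_ANCHORS))  # 1
print(pick_training_size(random.Random(0)))          # one of the sizes above
```

Because each grid cell predicts offsets relative to several anchors, a cell can report more than one object, and changing the input size only changes the number of grid cells, not the network weights.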

3.2. Two Stage Detectors

4. Deep Learning Approaches for Forest Fire Segmentation

| Ref. | Methodology | Object Segmented | Dataset | Results (%) |
|---|---|---|---|---|
| [112] | SFEwAN-SD | Flame | Private: 560 images | F1-score = 90.31 |
| [116] | Encoder-decoder based on FusionNet | Flame | CorsicanFire, FiSmo: 212 images | Accuracy = 97.46 |
| [114] | CNN based on SqueezeNet | Flame | Private: various videos | FP = 5.00 |
| [119] | U-Net | Flame | CorsicanFire: 419 images | Accuracy = 97.09 |
| [47] | U-Net | Flame | FLAME: 5137 images | F1-score = 87.70 |
| [121] | wUUNet | Flame | Private: 6250 images | Accuracy = 95.34 |
| [122] | U-Net, U-Net, EfficientSeg | Flame | CorsicanFire: 1135 images | F1-score = 95.00 |
| [126] | SFBSNet | Flame | CorsicanFire: 1135 images | IoU = 90.76 |
| [127] | Deep-RegSeg | Flame | CorsicanFire: 1135 images | F1-score = 94.46 |
| [128] | DeepLab v3+ | Flame | CorsicanFire: 1775 images | Accuracy = 97.67 |
| [129] | DeepLab v3+ + validation approach | Flame/Smoke | Fire detection 360-degree dataset: 150 360-degree images | F1-score = 94.60 |
| [130] | DeepLab v3+ with Xception | Flame | CorsicanFire: 1775 images | Accuracy = 98.48 |
| [131] | DeepLab v3+ | Flame | CorsicanFire, FLAME, private: 4241 images | Accuracy = 98.70 |
| [132] | SqueezeNet, U-Net, Quad-Tree search | Flame | CorsicanFire, private: 2470 images | Accuracy = 95.80 |
| [133] | FireDGWF | Flame/Smoke | Private: 4856 images | Accuracy = 99.60 |
| [134] | U-Net, DeepLab v3+, FCN, PSPNet | Flame | FLAME: 4200 images | Accuracy = 99.91 |
| [135] | ATT Squeeze U-Net | Flame | CorsicanFire, private: 6135 images | Accuracy = 90.67 |
| [136] | Encoder-decoder with attention mechanism | Flame | CorsicanFire: 1135 images + various non-fire images | Accuracy = 98.02 |
| [137] | TransUNet, MedT | Flame | CorsicanFire: 1135 images | F1-score = 97.70 |
| [60] | TransUNet, TransFire | Flame | FLAME: 2003 images | F1-score = 99.90 |
| [74] | MaskSU R-CNN | Flame | FLAME: 8000 images | F1-score = 90.30 |
| [138] | Improved DeepLab v3+ with MobileNet v3 | Flame | FLAME: 2003 images | Accuracy = 92.46 |
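The results in this table are reported with different metrics (accuracy, F1-score, IoU, and false positive rate), so they are not directly comparable across rows. As a hedged illustration, the sketch below computes the standard pixel-wise definitions of these metrics on a toy pair of binary masks; the masks and function names are assumptions made for this example, and the reviewed works may compute their scores differently (for instance, averaged per image).

```python
def confusion_counts(pred_mask, true_mask):
    """Count pixel-wise TP, FP, FN, TN for two binary masks of equal shape."""
    tp = fp = fn = tn = 0
    for pred_row, true_row in zip(pred_mask, true_mask):
        for p, t in zip(pred_row, true_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def iou(tp, fp, fn):
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def false_positive_rate(fp, tn):
    denom = fp + tn
    return fp / denom if denom else 0.0


# Toy ground-truth and predicted flame masks (1 = fire pixel); illustrative only.
true_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
pred_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

tp, fp, fn, tn = confusion_counts(pred_mask, true_mask)
print(accuracy(tp, fp, fn, tn))     # 0.875
print(f1_score(tp, fp, fn))         # about 0.667
print(iou(tp, fp, fn))              # 0.5
print(false_positive_rate(fp, tn))  # about 0.077
```

In this toy case the accuracy is well above the F1-score and IoU because background pixels dominate the image, which is one reason to read accuracy figures for small fire regions with care.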

5. Datasets

- BowFire (Best of both worlds Fire detection) [153,154] is a public fire dataset. It consists of 226 images (119 fire images and 107 non-fire images) with different resolutions, as shown in Figure 4. The images depict emergencies in various fire situations (forest, burning buildings, car accidents, industrial fires, etc.) as well as fire-like objects such as yellow or red objects and sunsets. The dataset includes both forest and non-forest images; note, however, that the non-forest images were filtered out to ensure the performance and reliability of the trained wildfire models. BowFire also contains the corresponding masks of the fire/non-fire images for the fire segmentation task, as shown in Figure 5.

- FLAME (Fire Luminosity Airborne-Based Machine Learning Evaluation) dataset [47,155] consists of aerial images and raw heat-map footage collected by thermal and visible-spectrum cameras onboard two drones (Phantom 3 Professional and Matrice 200). It contains four types of videos: green-hot palette, normal spectrum, fusion, and white-hot. It includes 48,010 RGB aerial images (with a resolution of 254 × 254 pix.), divided into 17,855 images without fire and 30,155 images with fire for the wildfire classification task, as illustrated in Figure 6. It also comprises 2003 RGB images with a resolution of 3480 × 2160 pix. and their corresponding masks for the fire segmentation task, as depicted in Figure 7.

- CorsicanFire dataset [45,156] consists of NIR (near infrared) and RGB images. The NIR images are collected with a longer exposure/integration time. CorsicanFire includes a large number of fire images with many resolutions (1135 RGB images and their corresponding masks) that are widely used in the context of fire segmentation. It describes visual information about the fire, such as color (orange, white-yellow, and red), fire distance, brightness, smoke presence, and weather conditions. Figure 8 shows CorsicanFire dataset samples and their corresponding binary masks.

- FD-dataset [157,158] is composed of two datasets, BowFire and dataset-1 [9] (the latter contains 31 videos: 14 fire videos and 17 non-fire videos), together with fire/non-fire images collected from the internet. It contains 50,000 images with numerous resolutions (25,000 images with fire and 25,000 images without fire) describing various fire incidents such as red elements, burning clouds, and glare lights. It also includes fire-like objects such as sunset and sunrise, as illustrated in Figure 9. This dataset consists of both forest and non-forest images, but only the forest images were selected for training the forest fire models in order to improve their performance.

- ForestryImages [159] is a public dataset proposed by the University of Georgia’s Center for Invasive Species and Ecosystem Health. It contains a large number of images (317,921 images with numerous resolutions) covering different image categories such as forest fire (44,606 images), forest pests (57,844 images), insects (103,472 images), diseases (30,858 images), trees (45,921 images), plants (149,806 images), wildlife (18,298 images), etc. as shown in Figure 10.

- Firesense dataset [161] is a public dataset developed within the “FIRESENSE - Fire Detection and Management through a Multi-Sensor Network for the Protection of Cultural Heritage Areas from the Risk of Fire and Extreme Weather (FP7-ENV-244088)” project to train and test smoke/fire detection algorithms. It contains eleven fire videos, thirteen smoke videos, and twenty-five non-fire/smoke videos. Figure 12 depicts Firesense dataset samples.

- FiSmo is a public fire detection dataset developed by Cazzolato et al. [118] in 2017. It contains annotated image and video data: 9448 images with multiple resolutions and 158 videos acquired from the web. The video data are annotated with three labels: fire, non-fire, and ignore. The image data is collected from four datasets: Flickr-FireSmoke [163] (5556 images: 527 fire/smoke images, 1077 fire images, 369 smoke images, and 3583 non-fire/smoke images), Flickr-Fire [163] (2000 images: 1000 fire images and 1000 non-fire images), BowFire, and SmokeBlock [164,165] (1666 images: 832 smoke images and 834 non-smoke images). Figure 14 presents FiSmo fire detection dataset samples. FiSmo comprises forest and non-forest images, but the non-forest images are generally removed to improve the efficiency of the wildfire classification models.

- DeepFire dataset [64,162] was developed to address the problem of wildland fire recognition. It comprises RGB aerial images with a resolution of 250 × 250 pix. downloaded from various research sites using many keywords such as forest, forest fires, mountain, and mountain fires, as depicted in Figure 15. It includes a total of 1900 images, of which 950 belong to the fire class and 950 to the non-fire class.

- The FIRE dataset is a public dataset developed by Saied et al. [69] during the NASA Space Apps Challenge in 2018 for the fire recognition task. It comprises two folders (fireimages and non-fireimages). The first folder consists of 755 fire images with various resolutions, some of which include dense smoke. The second consists of 244 non-fire images such as animals, trees, waterfalls, rivers, grasses, people, roads, lakes, and forests. Figure 16 presents some examples from the FIRE dataset.

- FLAME2 dataset [72,73] is a public wildfire dataset collected in November 2021 during a prescribed fire in an open canopy pine forest in Northern Arizona. It contains IR/RGB images and videos recorded with a Mavic 2 Enterprise Advanced dual RGB/IR camera and was labeled by two human experts. It contains 53,451 RGB images (25,434 Fire/Smoke images, 14,317 Fire/non-smoke images, and 13,700 non-fire/non-smoke images) with a resolution of 254 × 254 pix. extracted from seven pairs of RGB videos with a resolution of 1920 × 1080 pix. or 3840 × 2160 pix. It also includes seven IR videos with a resolution of 640 × 512 pix. Figure 17 shows some examples from the FLAME2 dataset.
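The class counts reported for these datasets differ considerably in balance, which matters when a classifier is trained on them. The sketch below, using only the fire/non-fire counts stated in the descriptions above, computes the fire fraction and inverse-frequency class weights. The weighting scheme (total divided by the number of classes times the class count) is one common choice assumed here for illustration, not a method attributed to the dataset authors.

```python
# Fire / non-fire image counts as stated in the dataset descriptions above.
DATASET_CLASS_COUNTS = {
    "BowFire": {"fire": 119, "non-fire": 107},
    "FLAME": {"fire": 30155, "non-fire": 17855},
    "DeepFire": {"fire": 950, "non-fire": 950},
    "FIRE": {"fire": 755, "non-fire": 244},
}


def fire_fraction(counts):
    """Share of images that contain fire."""
    return counts["fire"] / sum(counts.values())


def class_weights(counts):
    """Inverse-frequency weights: total / (number of classes * class count).
    A balanced dataset yields a weight of 1.0 for every class."""
    total = sum(counts.values())
    return {label: total / (len(counts) * n) for label, n in counts.items()}


for name, counts in DATASET_CLASS_COUNTS.items():
    weights = class_weights(counts)
    print(
        f"{name}: {sum(counts.values())} images, "
        f"fire fraction = {fire_fraction(counts):.3f}, "
        f"weights = {{fire: {weights['fire']:.3f}, "
        f"non-fire: {weights['non-fire']:.3f}}}"
    )
```

With these counts, DeepFire is exactly balanced, whereas the FIRE dataset has roughly three fire images for every non-fire image, so its non-fire class receives the larger weight.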

6. Discussion

6.1. Data Collection and Preprocessing

6.2. Model Results Discussion

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicles |
| DL | Deep Learning |
| ML | Machine Learning |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| PReLU | Parametric ReLU |
| LReLU | Leaky ReLU |
| DCNN | Deep Convolutional Neural Network |
| LBP | Local Binary Patterns |
| CycleGAN | Cycle-consistent Generative Adversarial Network |
| KNN | K-Nearest Neighbors |
| SVM | Support Vector Machine |
| NCA | Neighborhood Component Analysis |
| RNN | Recurrent Neural Network |
| Yolo | You only look once |
| AP | Average Precision |
| SSD | Single Shot MultiBox Detector |
| mAP | mean Average Precision |
| PANet | Path Aggregation Network |
| FPN | Feature Pyramid Network |
| CSPNet | Cross Stage Partial Network |
| BiFPN | Bi-directional Feature Pyramid Network |
| RPN | Region Proposal Network |
| FP | False Positive rate |
| ASPP | Atrous Spatial Pyramid Pooling |
| IR | Infrared |
| FPS | Frames per second |
| MedT | Medical Transformer |
| BowFire | Best of both worlds Fire detection |
| FLAME | Fire Luminosity Airborne-based Machine learning Evaluation |
| NIR | Near infrared |

References

- Gaur, A.; Singh, A.; Kumar, A.; Kulkarni, K.S.; Lala, S.; Kapoor, K.; Srivastava, V.; Kumar, A.; Mukhopadhyay, S.C. Fire Sensing Technologies: A Review. IEEE Sens. J. 2019, 19, 3191–3202.

- Çelik, T.; Demirel, H. Fire detection in video sequences using a generic color model. Fire Saf. J. 2009, 44, 147–158.

- Toulouse, T.; Rossi, L.; Celik, T.; Akhloufi, M. Automatic fire pixel detection using image processing: A comparative analysis of rule-based and machine learning-based methods. Signal Image Video Process. 2016, 10, 647–654.

- Rossi, L.; Molinier, T.; Akhloufi, M.; Tison, Y.; Pieri, A. A 3D vision system for the measurement of the rate of spread and the height of fire fronts. Meas. Sci. Technol. 2010, 21, 105501.

- Rossi, L.; Akhloufi, M. Dynamic Fire 3D Modeling Using a Real-Time Stereovision System. In Proceedings of the Technological Developments in Education and Automation, Barcelona, Spain, 5–7 July 2010; pp. 33–38.

- Cruz, H.; Eckert, M.; Meneses, J.; Martínez, J.F. Efficient Forest Fire Detection Index for Application in Unmanned Aerial Systems (UASs). Sensors 2016, 16, 893.

- Mueller, M.; Karasev, P.; Kolesov, I.; Tannenbaum, A. Optical Flow Estimation for Flame Detection in Videos. IEEE Trans. Image Process. 2013, 22, 2786–2797.

- Dimitropoulos, K.; Barmpoutis, P.; Grammalidis, N. Spatio-Temporal Flame Modeling and Dynamic Texture Analysis for Automatic Video-Based Fire Detection. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 339–351.

- Foggia, P.; Saggese, A.; Vento, M. Real-Time Fire Detection for Video-Surveillance Applications Using a Combination of Experts Based on Color, Shape, and Motion. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1545–1556.

- Ghali, R.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Recent Advances in Fire Detection and Monitoring Systems: A Review. In Proceedings of the 18th International Conference on Sciences of Electronics, Technologies of Information and Telecommunications (SETIT’18), Hammamet, Tunisia, 20–22 December 2018; Volume 1, pp. 332–340.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video Flame and Smoke Based Fire Detection Algorithms: A Literature Review. Fire Technol. 2020, 56, 1943–1980.

- Mahmoud, M.A.I.; Ren, H. Forest fire detection and identification using image processing and SVM. J. Inf. Process. Syst. 2019, 15, 159–168.

- Van Hamme, D.; Veelaert, P.; Philips, W.; Teelen, K. Fire detection in color images using Markov random fields. In Proceedings of the Advanced Concepts for Intelligent Vision Systems, Sydney, Australia, 13–16 December 2010; pp. 88–97.

- Bedo, M.V.N.; de Oliveira, W.D.; Cazzolato, M.T.; Costa, A.F.; Blanco, G.; Rodrigues, J.F.; Traina, A.J.; Traina, C. Fire detection from social media images by means of instance-based learning. In Proceedings of the Enterprise Information Systems, Barcelona, Spain, 7–9 October 2015; pp. 23–44.

- Ko, B.; Cheong, K.H.; Nam, J.Y. Early fire detection algorithm based on irregular patterns of flames and hierarchical Bayesian Networks. Fire Saf. J. 2010, 45, 262–270.

- Ren, B. Neural Network Machine Translation Model Based on Deep Learning Technology. In Proceedings of the Application of Intelligent Systems in Multi-Modal Information Analytics, Online, 23 April 2022; pp. 643–649.

- McCoy, J.; Rawal, A.; Rawat, D.B.; Sadler, B.M. Ensemble Deep Learning for Sustainable Multimodal UAV Classification. IEEE Trans. Intell. Transp. Syst. 2022, 1–10.

- Zhang, Y.; Kwong, S.; Xu, L.; Zhao, T. Advances in Deep-Learning-Based Sensing, Imaging, and Video Processing. Sensors 2022, 22, 6192.

- Hazra, A.; Choudhary, P.; Sheetal Singh, M. Recent Advances in Deep Learning Techniques and Its Applications: An Overview. In Proceedings of the Advances in Biomedical Engineering and Technology, Werdanyeh, Lebanon, 7–9 October 2021; pp. 103–122.

- Seo, P.H.; Nagrani, A.; Arnab, A.; Schmid, C. End-to-End Generative Pretraining for Multimodal Video Captioning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17959–17968.

- Wang, Y.; Yue, Y.; Lin, Y.; Jiang, H.; Lai, Z.; Kulikov, V.; Orlov, N.; Shi, H.; Huang, G. AdaFocus V2: End-to-End Training of Spatial Dynamic Networks for Video Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 24–28 June 2022; pp. 20062–20072.

- Cui, J.; Qiu, H.; Chen, D.; Stone, P.; Zhu, Y. Coopernaut: End-to-End Driving With Cooperative Perception for Networked Vehicles. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–20 June 2022; pp. 17252–17262.

- Ait Nasser, A.; Akhloufi, M.A. A Review of Recent Advances in Deep Learning Models for Chest Disease Detection Using Radiography. Diagnostics 2023, 13, 159.

- Mahoro, E.; Akhloufi, M.A. Applying Deep Learning for Breast Cancer Detection in Radiology. Curr. Oncol. 2022, 29, 8767–8793.

- Zhao, Z.Q.; Zheng, P.; Xu, S.T.; Wu, X. Object Detection With Deep Learning: A Review. IEEE Trans. Neural Net. Learn. Syst. 2019, 30, 3212–3232.

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Signal Process. 2022, 190, 108309.

- Akhloufi, M.A.; Couturier, A.; Castro, N.A. Unmanned Aerial Vehicles for Wildland Fires: Sensing, Perception, Cooperation and Assistance. Drones 2021, 5, 15.

- Barmpoutis, P.; Papaioannou, P.; Dimitropoulos, K.; Grammalidis, N. A Review on Early Forest Fire Detection Systems Using Optical Remote Sensing. Sensors 2020, 20, 6442.

- Geetha, S.; Abhishek, C.; Akshayanat, C. Machine vision based fire detection techniques: A survey. Fire Technol. 2021, 57, 591–623.

- Bot, K.; Borges, J.G. A Systematic Review of Applications of Machine Learning Techniques for Wildfire Management Decision Support. Inventions 2022, 7, 15.

- Cruz, H.; Gualotuña, T.; Pinillos, M.; Marcillo, D.; Jácome, S.; Fonseca C., E.R. Machine Learning and Color Treatment for the Forest Fire and Smoke Detection Systems and Algorithms, a Recent Literature Review. In Proceedings of the Artificial Intelligence, Computer and Software Engineering Advances, Quito, Ecuador, 26–30 October 2020; pp. 109–120.

- Chaturvedi, S.; Khanna, P.; Ojha, A. A survey on vision-based outdoor smoke detection techniques for environmental safety. ISPRS J. Photogramm. Remote. Sens. 2022, 185, 158–187.

- Liu, Y.H. Feature Extraction and Image Recognition with Convolutional Neural Networks. J. Phys. Conf. Ser. 2018, 1087, 062032.

- Hara, K.; Saito, D.; Shouno, H. Analysis of function of rectified linear unit used in deep learning. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Killarney, Ireland, 12–17 July 2015; pp. 1–8.

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the ICML, Atlanta, GA, USA, 16–21 June 2013; p. 3.

- Jin, X.; Xu, C.; Feng, J.; Wei, Y.; Xiong, J.; Yan, S. Deep Learning with S-Shaped Rectified Linear Activation Units. AAAI Conf. Artif. Intell. 2016, 30, 1737–1743.

- Boureau, Y.L.; Bach, F.; LeCun, Y.; Ponce, J. Learning mid-level features for recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2559–2566.

- Lee, W.; Kim, S.; Lee, Y.T.; Lee, H.W.; Choi, M. Deep neural networks for wild fire detection with unmanned aerial vehicle. In Proceedings of the IEEE International Conference on Consumer Electronics (ICCE), Berlin, Germany, 3–6 September 2017; pp. 252–253.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper With Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 5–10 December 2012; pp. 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency Detection and Deep Learning-Based Wildfire Identification in UAV Imagery. Sensors 2018, 18, 712.

- Srinivas, K.; Dua, M. Fog Computing and Deep CNN Based Efficient Approach to Early Forest Fire Detection with Unmanned Aerial Vehicles. In Inventive Computation Technologies; Smys, S., Bestak, R., Rocha, Á., Eds.; Springer International Publishing: Coimbatore, India, 29–30 August 2019; pp. 646–652.

- Wang, Y.; Dang, L.; Ren, J. Forest fire image recognition based on convolutional neural network. J. Algorithms Comput. Technol. 2019, 13, 1748302619887689.

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. Computer vision for wildfire research: An evolving image dataset for processing and analysis. Fire Saf. J. 2017, 92, 188–194.

- Chen, Y.; Zhang, Y.; Xin, J.; Wang, G.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. UAV Image-based Forest Fire Detection Approach Using Convolutional Neural Network. In Proceedings of the 14th IEEE Conference on Industrial Electronics and Applications (ICIEA), Xi’an, China, 19–21 June 2019; pp. 2118–2123.

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. Aerial imagery pile burn detection using deep learning: The FLAME dataset. Comput. Net. 2021, 193, 108001.

- Chollet, F. Xception: Deep Learning With Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Arteaga, B.; Diaz, M.; Jojoa, M. Deep Learning Applied to Forest Fire Detection. In Proceedings of the IEEE International Symposium on Signal Processing and Information Technology (ISSPIT), Louisville, KY, USA, 9–11 December 2020; pp. 1–6.

- Rahul, M.; Shiva Saketh, K.; Sanjeet, A.; Srinivas Naik, N. Early Detection of Forest Fire using Deep Learning. In Proceedings of the IEEE region 10 conference (TENCON), Osaka, Japan, 16–19 November 2020; pp. 1136–1140.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2020, 142, 112975.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Park, M.; Tran, D.Q.; Jung, D.; Park, S. Wildfire-Detection Method Using DenseNet and CycleGAN Data Augmentation-Based Remote Camera Imagery. Remote Sens. 2020, 12, 3715.

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired Image-To-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2223–2232.

- Wu, H.; Li, H.; Shamsoshoara, A.; Razi, A.; Afghah, F. Transfer Learning for Wildfire Identification in UAV Imagery. In Proceedings of the 54th Annual Conference on Information Sciences and Systems (CISS), Princeton, NJ, USA, 18–20 March 2020; pp. 1–6.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Tang, Y.; Feng, H.; Chen, J.; Chen, Y. ForestResNet: A Deep Learning Algorithm for Forest Image Classification. J. Phys. Conf. Ser. 2021, 2024, 012053.

- Dutta, S.; Ghosh, S. Forest Fire Detection Using Combined Architecture of Separable Convolution and Image Processing. In Proceedings of the 1st International Conference on Artificial Intelligence and Data Analytics (CAIDA), Riyadh, Saudi Arabia, 6–7 April 2021; pp. 36–41.

- Ghali, R.; Akhloufi, M.A.; Mseddi, W.S. Deep Learning and Transformer Approaches for UAV-Based Wildfire Detection and Segmentation. Sensors 2022, 22, 1977.

- Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Treneska, S.; Stojkoska, B.R. Wildfire detection from UAV collected images using transfer learning. In Proceedings of the 18th International Conference on Informatics and Information Technologies, Xi’an, China, 12–14 March 2021; pp. 6–7.

- Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- Dogan, S.; Datta Barua, P.; Kutlu, H.; Baygin, M.; Fujita, H.; Tuncer, T.; Acharya, U. Automated accurate fire detection system using ensemble pretrained residual network. Expert Syst. Appl. 2022, 203, 117407.

- Yandouzi, M.; Grari, M.; Idrissi, I.; Boukabous, M.; Moussaoui, O.; Ghoumid, K.; Elmiad, A.K.E. Forest Fires Detection using Deep Transfer Learning. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 2022, 13, 268–275.

- Ghosh, R.; Kumar, A. A hybrid deep learning model by combining convolutional neural network and recurrent neural network to detect forest fire. Multimed. Tools Appl. 2022, 81, 38643–38660.

- Vento, M.; Foggia, P.; Tortorella, F.; Percannella, G.; Ritrovato, P.; Saggese, A.; Greco, L.; Carletti, V.; Greco, A.; Vigilante, V.; et al. MIVIA Fire/Smoke Detection Dataset. Available online: https://mivia.unisa.it/datasets/video-analysis-datasets/ (accessed on 5 January 2023).

- Saied, A. Fire Dataset. Available online: https://www.kaggle.com/datasets/phylake1337/fire-dataset?select=fire_dataset%2C+06.11.2021 (accessed on 5 January 2023).

- Zheng, S.; Gao, P.; Wang, W.; Zou, X. A Highly Accurate Forest Fire Prediction Model Based on an Improved Dynamic Convolutional Neural Network. Appl. Sci. 2022, 12, 6721.

- K. Mohammed, R. A real-time forest fire and smoke detection system using deep learning. Int. J. Nonlinear Anal. Appl. 2022, 13, 2053–2063.

- Chen, X.; Hopkins, B.; Wang, H.; O’Neill, L.; Afghah, F.; Razi, A.; Fulé, P.; Coen, J.; Rowell, E.; Watts, A. Wildland Fire Detection and Monitoring Using a Drone-Collected RGB/IR Image Dataset. IEEE Access 2022, 10, 121301–121317.

- Hopkins, B.; O’Neill, L.; Afghah, F.; Razi, A.; Rowell, E.; Watts, A.; Fule, P.; Coen, J. FLAME2 Dataset. Available online: https://dx.doi.org/10.21227/swyw-6j78 (accessed on 5 January 2023).

- Guan, Z.; Miao, X.; Mu, Y.; Sun, Q.; Ye, Q.; Gao, D. Forest Fire Segmentation from Aerial Imagery Data Using an Improved Instance Segmentation Model. Remote Sens. 2022, 14, 3159.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318. [Google Scholar] [CrossRef] [Green Version]

- Jiao, Z.; Zhang, Y.; Xin, J.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. A Deep Learning Based Forest Fire Detection Approach Using UAV and Yolo v3. In Proceedings of the 1st International Conference on Industrial Artificial Intelligence (IAI), Shenyang, China, 22–26 July 2019; pp. 1–5. [Google Scholar]

- Wu, S.; Zhang, L. Using Popular Object Detection Methods for Real Time Forest Fire Detection. In Proceedings of the 11th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 8–9 December 2018; pp. 280–284. [Google Scholar]

- Jiao, Z.; Zhang, Y.; Mu, L.; Xin, J.; Jiao, S.; Liu, H.; Liu, D. A Yolo v3-based Learning Strategy for Real-time UAV-based Forest Fire Detection. In Proceedings of the Chinese Control Furthermore, Decision Conference (CCDC), Hefei, China, 22–24 August 2020; pp. 4963–4967. [Google Scholar]

- Tang, Z.; Liu, X.; Chen, H.; Hupy, J.; Yang, B. Deep Learning Based Wildfire Event Object Detection from 4K Aerial Images Acquired by UAS. AI 2020, 1, 166–179. [Google Scholar] [CrossRef]

- Xu, R.; Lin, H.; Lu, K.; Cao, L.; Liu, Y. A Forest Fire Detection System Based on Ensemble Learning. Forests 2021, 12, 217. [Google Scholar] [CrossRef]

- Wang, S.; Zhao, J.; Ta, N.; Zhao, X.; Xiao, M.; Wei, H. A real-time deep learning forest fire monitoring algorithm based on an improved Pruned+ KD model. J. Real-Time Image Process. 2021, 18, 2319–2329. [Google Scholar] [CrossRef]

- Kasyap, V.L.; Sumathi, D.; Alluri, K.; Reddy Ch, P.; Thilakarathne, N.; Shafi, R.M. Early detection of forest fire using mixed learning techniques and UAV. Comput. Intell. Neurosci. 2022, 2022, 3170244. [Google Scholar] [CrossRef]

- Mseddi, W.S.; Ghali, R.; Jmal, M.; Attia, R. Fire Detection and Segmentation using Yolo v5 and U-Net. In Proceedings of the 29th European Signal Processing Conference (EUSIPCO), Dublin, Ireland, 23–27 August 2021; pp. 741–745. [Google Scholar]

- Zhao, L.; Zhi, L.; Zhao, C.; Zheng, W. Fire-YOLO: A Small Target Object Detection Method for Fire Inspection. Sustainability 2022, 14, 4930. [Google Scholar] [CrossRef]

- Xue, Z.; Lin, H.; Wang, F. A Small Target Forest Fire Detection Model Based on Yolo v5 Improvement. Forests 2022, 13, 1332. [Google Scholar] [CrossRef]

- Xue, Q.; Lin, H.; Wang, F. FCDM: An Improved Forest Fire Classification and Detection Model Based on Yolo v5. Forests 2022, 13, 2129. [Google Scholar] [CrossRef]

- Barmpoutis, P.; Dimitropoulos, K.; Kaza, K.; Grammalidis, N. Fire Detection from Images Using Faster R-CNN and Multidimensional Texture Analysis. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 8301–8305. [Google Scholar]

- Lin, J.; Lin, H.; Wang, F. STPM_SAHI: A Small-Target Forest Fire Detection Model Based on Swin Transformer and Slicing Aided Hyper Inference. Forests 2022, 13, 1603. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolo v3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.; Liao, H.M. Yolo v4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G.; Stoken, A.; Chaurasia, A.; Borovec, J.; Chanvichet, V.; Kwon, Y.; TaoXie, S.; Changyu, L.; Abhiram, V.; Skalski, P.; et al. Yolo v5. Available online: https://github.com/ultralytics/yolov5 (accessed on 5 January 2023).

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2022, 82, 1–33.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the Computer Vision—ECCV 2014, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Pincott, J.; Tien, P.W.; Wei, S.; Kaiser Calautit, J. Development and evaluation of a vision-based transfer learning approach for indoor fire and smoke detection. Build. Serv. Eng. Res. Technol. 2022, 43, 319–332.

- Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A New Backbone That Can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 13–19 June 2020; pp. 390–391.

- Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. PANet: Few-Shot Image Semantic Segmentation With Prototype Alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9197–9206.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. Adv. Neural Inf. Process. Syst. 2015, 28, 91–99.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

- Minaee, S.; Boykov, Y.; Porikli, F.; Plaza, A.; Kehtarnavaz, N.; Terzopoulos, D. Image Segmentation Using Deep Learning: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3523–3542.

- Toulouse, T.; Rossi, L.; Akhloufi, M.; Celik, T.; Maldague, X. Benchmarking of wildland fire colour segmentation algorithms. IET Image Process. 2015, 9, 1064–1072.

- Gonzalez, A.; Zuniga, M.D.; Nikulin, C.; Carvajal, G.; Cardenas, D.G.; Pedraza, M.A.; Fernandez, C.A.; Munoz, R.I.; Castro, N.A.; Rosales, B.F.; et al. Accurate fire detection through fully convolutional network. In Proceedings of the 7th Latin American Conference on Networked and Electronic Media (LACNEM), Valparaiso, Chile, 6–7 November 2017; pp. 1–6.

- Frizzi, S.; Kaabi, R.; Bouchouicha, M.; Ginoux, J.M.; Moreau, E.; Fnaiech, F. Convolutional neural network for video fire and smoke detection. In Proceedings of the IECON 2016—42nd Annual Conference of the IEEE Industrial Electronics Society, Florence, Italy, 24–27 October 2016; pp. 877–882.

- Wang, G.; Zhang, Y.; Qu, Y.; Chen, Y.; Maqsood, H. Early Forest Fire Region Segmentation Based on Deep Learning. In Proceedings of the Chinese Control and Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 6237–6241.

- Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <1MB model size. arXiv 2016, arXiv:1602.07360.

- Choi, H.S.; Jeon, M.; Song, K.; Kang, M. Semantic fire segmentation model based on convolutional neural network for outdoor image. Fire Technol. 2021, 57, 3005–3019.

- Quan, T.M.; Hildebrand, D.G.C.; Jeong, W.K. FusionNet: A Deep Fully Residual Convolutional Neural Network for Image Segmentation in Connectomics. Front. Comput. Sci. 2021, 3, 34.

- Cazzolato, M.T.; Avalhais, L.P.S.; Chino, D.Y.T.; Ramos, J.S.; Souza, J.A.; Rodrigues-Jr, J.F.; Traina, A.J.M. FiSmo: A Compilation of Datasets from Emergency Situations for Fire and Smoke Analysis. In Proceedings of the SBBD2017—SBBD Satellite Events of the 32nd Brazilian Symposium on Databases—DSW (Dataset Showcase Workshop), Uberlandia, MG, Brazil, 2–5 October 2017; pp. 213–223.

- Akhloufi, M.A.; Tokime, R.B.; Elassady, H. Wildland fires detection and segmentation using deep learning. In Proceedings of the Pattern Recognition and Tracking XXIX, Orlando, FL, USA, 18–19 April 2018; p. 106490B.

- Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Québec City, QC, Canada, 14 September 2017; pp. 240–248.

- Bochkov, V.S.; Kataeva, L.Y. wUUNet: Advanced Fully Convolutional Neural Network for Multiclass Fire Segmentation. Symmetry 2021, 13, 98.

- Ghali, R.; Akhloufi, M.A.; Jmal, M.; Mseddi, W.S.; Attia, R. Forest Fires Segmentation using Deep Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 2109–2114.

- Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaiane, O.R.; Jagersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

- Yesilkaynak, V.B.; Sahin, Y.H.; Unal, G.B. EfficientSeg: An Efficient Semantic Segmentation Network. arXiv 2020, arXiv:2009.06469.

- Dzigal, D.; Akagic, A.; Buza, E.; Brdjanin, A.; Dardagan, N. Forest Fire Detection based on Color Spaces Combination. In Proceedings of the 11th International Conference on Electrical and Electronics Engineering (ELECO), Bursa, Turkey, 28–30 November 2019; pp. 595–599.

- Song, K.; Choi, H.S.; Kang, M. Squeezed fire binary segmentation model using convolutional neural network for outdoor images on embedded device. Mach. Vis. Appl. 2021, 32, 1–12.

- Ghali, R.; Akhloufi, M.A.; Souidene Mseddi, W.; Jmal, M. Wildfire Segmentation Using Deep-RegSeg Semantic Segmentation Architecture. In Proceedings of the 19th International Conference on Content-Based Multimedia Indexing, New York, NY, USA, 14–16 September 2022; pp. 149–154.

- Harkat, H.; Nascimento, J.; Bernardino, A. Fire segmentation using a DeepLabv3+ architecture. In Proceedings of the Image and Signal Processing for Remote Sensing XXVI, Online, 21–25 September 2020; pp. 134–145.

- Barmpoutis, P.; Stathaki, T.; Dimitropoulos, K.; Grammalidis, N. Early Fire Detection Based on Aerial 360-Degree Sensors, Deep Convolution Neural Networks and Exploitation of Fire Dynamic Textures. Remote Sens. 2020, 12, 3177.

- Harkat, H.; Nascimento, J.M.; Bernardino, A. Fire Detection using Residual Deeplabv3+ Model. In Proceedings of the 2021 Telecoms Conference (ConfTELE), Leiria, Portugal, 11–12 February 2021; pp. 1–6.

- Harkat, H.; Nascimento, J.M.P.; Bernardino, A.; Thariq Ahmed, H.F. Assessing the Impact of the Loss Function and Encoder Architecture for Fire Aerial Images Segmentation Using Deeplabv3+. Remote Sens. 2022, 14, 2023.

- Perrolas, G.; Niknejad, M.; Ribeiro, R.; Bernardino, A. Scalable Fire and Smoke Segmentation from Aerial Images Using Convolutional Neural Networks and Quad-Tree Search. Sensors 2022, 22, 1701.

- Pan, J.; Ou, X.; Xu, L. A Collaborative Region Detection and Grading Framework for Forest Fire Smoke Using Weakly Supervised Fine Segmentation and Lightweight Faster-RCNN. Forests 2021, 12, 768.

- Wang, Z.; Peng, T.; Lu, Z. Comparative Research on Forest Fire Image Segmentation Algorithms Based on Fully Convolutional Neural Networks. Forests 2022, 13, 1133.

- Zhang, J.; Zhu, H.; Wang, P.; Ling, X. ATT Squeeze U-Net: A Lightweight Network for Forest Fire Detection and Recognition. IEEE Access 2021, 9, 10858–10870.

- Niknejad, M.; Bernardino, A. Attention on Classification for Fire Segmentation. In Proceedings of the 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Virtually, 13–15 December 2021; pp. 616–621.

- Ghali, R.; Akhloufi, M.A.; Jmal, M.; Souidene Mseddi, W.; Attia, R. Wildfire Segmentation Using Deep Vision Transformers. Remote Sens. 2021, 13, 3527.

- Li, M.; Zhang, Y.; Mu, L.; Xin, J.; Yu, Z.; Jiao, S.; Liu, H.; Xie, G.; Yingmin, Y. A Real-time Fire Segmentation Method Based on A Deep Learning Approach. IFAC-PapersOnLine 2022, 55, 145–150.

- Chen, L.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 6409–6418.

- He, K.; Gkioxari, G.; Dollar, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Gillioz, A.; Casas, J.; Mugellini, E.; Khaled, O.A. Overview of the Transformer-based Models for NLP Tasks. In Proceedings of the 15th Conference on Computer Science and Information Systems (FedCSIS), Sofia, Bulgaria, 6–9 September 2020; pp. 179–183.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

- Yang, F.; Yang, H.; Fu, J.; Lu, H.; Guo, B. Learning Texture Transformer Network for Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 5791–5800.

- Ye, L.; Rochan, M.; Liu, Z.; Wang, Y. Cross-Modal Self-Attention Network for Referring Image Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 10502–10511.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

- Valanarasu, J.M.J.; Oza, P.; Hacihaliloglu, I.; Patel, V.M. Medical Transformer: Gated Axial-Attention for Medical Image Segmentation. arXiv 2021, arXiv:2102.10662.

- Chino, D.Y.T.; Avalhais, L.P.S.; Rodrigues, J.F.; Traina, A.J.M. BoWFire: Detection of Fire in Still Images by Integrating Pixel Color and Texture Analysis. In Proceedings of the 2015 28th SIBGRAPI Conference on Graphics, Patterns and Images, Salvador, Brazil, 26–29 August 2015; pp. 95–102.

- de Oliveira, W.D. BowFire Dataset. Available online: https://bitbucket.org/gbdi/bowfire-dataset/src/master/ (accessed on 5 January 2023).

- Shamsoshoara, A.; Afghah, F.; Razi, A.; Zheng, L.; Fulé, P.Z.; Blasch, E. FLAME Dataset. Available online: https://ieee-dataport.org/open-access/flame-dataset-aerial-imagery-pile-burn-detection-using-drones-UAVs (accessed on 5 January 2023).

- Toulouse, T.; Rossi, L.; Campana, A.; Celik, T.; Akhloufi, M.A. CorsicanFire Dataset. Available online: https://feuxdeforet.universita.corsica/article.php?id_art=2133&id_rub=572&id_menu=0&id_cat=0&id_site=33&lang=en (accessed on 5 January 2023).

- Li, S.; Yan, Q.; Liu, P. An Efficient Fire Detection Method Based on Multiscale Feature Extraction, Implicit Deep Supervision and Channel Attention Mechanism. IEEE Trans. Image Process. 2020, 29, 8467–8475.

- Li, S.; Yan, Q.; Liu, P. FD-Dataset. Available online: http://www.nnmtl.cn/EFDNet/ (accessed on 5 January 2023).

- The University of Georgia’s Center for Invasive Species and Ecosystem Health. ForestryImages Dataset. Available online: https://www.forestryimages.org/ (accessed on 5 January 2023).

- Cetin, E. VisiFire Dataset. Available online: http://signal.ee.bilkent.edu.tr/VisiFire// (accessed on 5 January 2023).

- Grammalidis, N.; Dimitropoulos, K.; Cetin, E. Firesense Dataset. Available online: https://zenodo.org/record/836749#.YumkVL2ZPIU/ (accessed on 5 January 2023).

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire Dataset. Available online: https://www.kaggle.com/datasets/alik05/forest-fire-dataset (accessed on 5 January 2023).

- Flickr Team. Flickr-FireSmoke and Flickr-Fire Datasets. Available online: https://www.flickr.com/ (accessed on 5 January 2023).

- Cazzolato, M.T.; Bedo, M.V.N.; Costa, A.F.; de Souza, J.A.; Traina, C.; Rodrigues, J.F.; Traina, A.J.M. Unveiling Smoke in Social Images with the SmokeBlock Approach. In Proceedings of the 31st Annual ACM Symposium on Applied Computing, Pisa, Italy, 4–8 April 2016; pp. 49–54.

- Cazzolato, M.T.; Bedo, M.V.N.; Costa, A.F.; de Souza, J.A.; Traina, C.; Rodrigues, J.F.; Traina, A.J.M. SmokeBlock Dataset. Available online: https://goo.gl/uW7LxW/ (accessed on 5 January 2023).

| Ref. | Methodology | Object Detected | Dataset | Results (%) |
|---|---|---|---|---|
| [38] | AlexNet, GoogleNet, VGG13 | Flame | Private: 23,053 images | Accuracy = 99.00 |
| [42] | Fire_Net | Flame/Smoke | UAV_Fire: 3561 images | Accuracy = 98.00 |
| [43] | Deep CNN | Flame | Private: 2964 images | Accuracy = 95.70 |
| [44] | AlexNet with an adaptive pooling method | Flame | CorsicanFire: 500 images | Accuracy = 93.75 |
| [47] | XCeption | Flame | FLAME: 47,992 images | Accuracy = 76.23 |
| [49] | ResNet152 | Flame | Private: 1800 images | Accuracy = 99.56 |
| [50] | Modified ResNet50 | Flame | Private: numerous images | Accuracy = 92.27 |
| [51] | Inception v3 | Flame | CorsicanFire: 500 images | Accuracy = 98.60 |
| [53] | DenseNet | Flame | Private: 6345 images | Accuracy = 98.27 |
| [56] | MobileNet v2 | Flame | Private: 2096 images | Accuracy = 99.70 |
| [58] | ForestResNet | Flame | Private: 175 images | Accuracy = 92.00 |
| [59] | Simple CNN and image processing technique | Flame | FLAME: 8481 images | Sensitivity = 98.10 |
| [60] | EfficientNet-B5, DenseNet-201 | Flame | FLAME: 48,010 images | Accuracy = 85.12 |
| [62] | ResNet50 | Flame | FLAME: 47,992 images | Accuracy = 88.01 |
| [63] | FT-ResNet50 | Flame | FLAME: 31,501 images | Accuracy = 79.48 |
| [64] | VGG19 | Flame | DeepFire: 1900 images | Accuracy = 95.00 |
| [65] | ResNet19, ResNet50, ResNet101, InceptionResNet v2, NCA, SVM | Flame | DeepFire & Fire: 1650 images | Accuracy = 99.15 |
| [66] | VGG16, ResNet50, MobileNet, VGG19, NASNetMobile, InceptionResNet v2, Xception, Inception v3, ResNet50 v2, DenseNet, MobileNet v2 | Flame | Private: 4661 images | Accuracy = 99.94 |
| [67] | CNN, RNN | Flame | FIRE: 1000 images; Mivia: 15,750 images | Accuracy = 99.10; Accuracy = 99.62 |
| [70] | DCN_Fire | Flame/Smoke | Private: 1860 images | Accuracy = 98.30 |
| [71] | InceptionResNet v2 | Flame/Smoke | Private: 1102 images | Accuracy = 99.90 |
| [72] | Xception, LeNet5, VGG16, MobileNet v2, ResNet18 | Flame | FLAME2: 53,451 images | F1-score = 99.92 |
| [74] | DSA-ResNet | Flame | FLAME: 8000 images | Accuracy = 93.65 |
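
The Results column in the table above mixes accuracy, sensitivity, and F1-score. As a reading aid, the following minimal sketch shows how these quantities are commonly derived from binary fire/non-fire confusion counts. The function name and the example counts are hypothetical and do not come from any of the reviewed papers; multiply the returned fractions by 100 to compare with the percentages in the table.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute common binary-classification metrics from confusion counts.

    tp/fp/tn/fn are true/false positives/negatives, with "fire" taken
    as the positive class (an assumption made for this illustration).
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Sensitivity is also called recall or true positive rate.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "sensitivity": sensitivity,
        "f1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
```

Because accuracy counts true negatives while F1-score does not, the two can diverge on imbalanced datasets, which is worth keeping in mind when comparing rows that report different metrics.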

| Ref. | Methodology | Object Detected | Dataset | Results (%) |
|---|---|---|---|---|
| [77] | Modified Yolo v3 | Flame/Smoke | Private: various images & videos | Accuracy = 83.00 |
| [78] | Faster R-CNN, Yolo v1,2,3, SSD | Flame/Smoke | Private: 1000 images | Accuracy = 99.88 |
| [79] | Yolo v3 | Flame | Private UAV data | Precision = 84.00 |
| [80] | ARSB, zoom, Yolo v3 | Flame | Private: 1400 4K images | mAP = 67.00 |
| [81] | Yolo v5, EfficientDet, EfficientNet | Flame | BowFire, FD-dataset, ForestryImages, VisiFire | AP = 79.00 |
| [82] | Yolo v4 with MobileNet v3 | Flame/Smoke | Private: 1844 images | Accuracy = 99.35 |
| [83] | Yolo v4 tiny | Flame | Private: more than 100 images | Accuracy = 91.00 |
| [84] | Yolo v5, U-Net | Flame | CorsicanFire and fire-like objects images: 1300 images | Accuracy = 99.60 |
| [85] | Fire-YOLO | Flame/Smoke | Private: 19,819 images | F1-score = 91.50 |
| [86] | Yolo v5, CBAM, BiFPN, SPPFP | Flame | Private: 3320 images | mAP = 70.30 |
| [87] | FCDM | Flame | Private: 544 images | mAP = 86.90 |
| [88] | Faster R-CNN with multidimensional texture analysis method | Flame | CorsicanFire, Pascal VOC: 1050 images | F1-score = 99.70 |
| [89] | STPM_SAHI | Flame | Private: 3167 images | AP = 89.40 |
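
The AP and mAP values in the table above rest on matching predicted boxes to ground-truth boxes by intersection over union (IoU). The sketch below illustrates that building block only. The box format `(x_min, y_min, x_max, y_max)`, the function names, and the 0.5 threshold are assumptions chosen for illustration; the thresholds used by the individual papers are not stated in this table.

```python
def box_area(box):
    """Area of an axis-aligned box given as (x_min, y_min, x_max, y_max)."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def box_iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred, truth, threshold=0.5):
    """A prediction counts as a hit when its IoU reaches the threshold."""
    return box_iou(pred, truth) >= threshold


# Hypothetical predicted and ground-truth flame boxes.
iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
```

Average precision then summarizes the precision/recall trade-off obtained when predictions are ranked by confidence and scored with this matching rule, and mAP averages it over classes.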

| Ref. | Dataset Name | RGB/IR | Image Type | Fire Area | Number of Images/Videos | Labeling Type |
|---|---|---|---|---|---|---|
| [153,154] | BowFire | RGB | Terrestrial | Urban/Forest | 226 images: 119 fire images and 107 non-fire images; 226 binary masks | Classification, Segmentation |
| [47,155] | FLAME | RGB/LWIR | Aerial | Forest | 48,010 images: 17,855 fire images and 30,155 non-fire images; 2003 binary masks | Classification, Segmentation |
| [45,156] | CorsicanFire | RGB/NIR | Terrestrial | Forest | 1135 images and their corresponding binary masks | Segmentation |
| [157,158] | FD-dataset | RGB | Terrestrial | Urban/Forest | 31 videos: 14 fire videos and 17 non-fire videos; 50,000 images: 25,000 fire images and 25,000 non-fire images | Classification |
| [159] | ForestryImages | RGB | Terrestrial | Forest | 317,921 images | Classification |
| [160] | VisiFire | RGB | Terrestrial | Urban/Forest | 12 videos | Classification |
| [161] | Firesense | RGB | Terrestrial | Urban/Forest | 29 videos: 11 fire videos, 13 smoke videos, and 25 non-fire/smoke videos | Classification |
| [68] | MIVIA | RGB | Terrestrial | Urban/Forest | 31 videos: 17 fire videos and 14 non-fire videos | Classification |
| [118] | FiSmo | RGB | Terrestrial | Urban/Forest | 9448 images and 158 videos | Classification |
| [64,162] | DeepFire | RGB | Terrestrial | Forest | 1900 images: 950 fire images and 950 non-fire images | Classification |
| [69] | FIRE | RGB | Terrestrial | Forest | 999 images: 755 fire images and 244 non-fire images | Classification |
| [72,73] | FLAME2 | RGB/LWIR | Aerial | Forest | 53,451 images: 25,434 fire images, 14,317 fire/non-smoke images, and 13,700 non-fire images | Classification |

| Task | Ref. | Data Augmentation Techniques |
|---|---|---|
| Wildfire Classification | [38] | Crop, horizontal/vertical flip |
| | [49] | Crop, rotation |
| | [51] | Crop |
| | [53] | Horizontal flip, rotation, zoom rotation, brightness, CycleGAN |
| | [56] | Shift, rotation, flip, blur, varying illumination intensity |
| | [58] | Crop, horizontal flip |
| | [60] | Rotation, shear, zoom, shift |
| | [62] | Horizontal flip, rotation |
| | [63] | Mix-up, rotation, flip |
| | [66] | Rotation, horizontal/vertical mirroring, Gaussian blur, pixel level augmentation |
| | [67] | Horizontal/vertical flip, zoom |
| Wildfire Detection | [84] | Translation, image scale, mosaic, mix-up, horizontal flip |
| Wildfire Segmentation | [60,122,127,137] | Horizontal flip, rotation |
| | [116,126] | Left/right symmetry |
| | [131] | Translation, rotation, horizontal/vertical reflection, left/right reflection |
| | [134] | Flip, rotation, crop, noise |
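
Flip and rotation recur across all three tasks in the table above. For segmentation, any geometric transform has to be applied identically to the image and to its binary mask, otherwise the labels no longer line up with the pixels. The sketch below illustrates this under simplifying assumptions: images are plain nested lists, rotation is restricted to multiples of 90 degrees, and all function names are hypothetical. It is kept dependency-free for clarity; it does not reproduce the pipeline of any reviewed paper.

```python
import random


def hflip(grid):
    """Mirror a 2-D grid (list of rows) left to right."""
    return [row[::-1] for row in grid]


def rot90(grid):
    """Rotate a 2-D grid by 90 degrees counter-clockwise."""
    return [list(col) for col in zip(*grid)][::-1]


def augment_pair(image, mask, rng):
    """Apply one random flip and rotation to an image and its mask together."""
    if rng.random() < 0.5:
        image, mask = hflip(image), hflip(mask)
    # Rotate both by the same randomly chosen multiple of 90 degrees.
    for _ in range(rng.randrange(4)):
        image, mask = rot90(image), rot90(mask)
    return image, mask


# Toy single-channel "image" and a mask marking values above 0.5.
image = [[0.9, 0.1, 0.8], [0.2, 0.7, 0.3]]
mask = [[1 if v > 0.5 else 0 for v in row] for row in image]
aug_image, aug_mask = augment_pair(image, mask, random.Random(0))
```

Photometric transforms listed in the table, such as brightness changes, blur, or noise, would be applied to the image only, since they do not move pixels.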

| Task | Ref. | Methodology | Configuration | Inference Speed (FPS) |
|---|---|---|---|---|
| Wildfire Classification | [38] | GoogLeNet | 3 NVIDIA GTX Titan X GPUs | 24.79 |
| | [60] | EfficientNet-B5, DenseNet201 | NVIDIA GeForce RTX 2080Ti GPU | 55.55 |
| | [63] | FT-ResNet50 | NVIDIA GeForce RTX 2080Ti GPU | 18.10 |
| Wildfire Detection | [77] | Modified Yolo v3 | Drone with NVIDIA 4-Plus-1 ARM Cortex-A15 | 3.20 |
| | [81] | Yolo v5, EfficientDet, EfficientNet | NVIDIA GTX 2080Ti GPU | 14.97 |
| | [82] | Yolo v4 with MobileNet v3 | NVIDIA Jetson Xavier NX GPU | 19.76 |
| | [86] | Yolo v5, CBAM, BiFPN, SPPFP | NVIDIA GeForce GTX 1070 GPU | 44.10 |
| | [87] | FCDM | NVIDIA GeForce RTX 3060 GPU | 64.00 |
| | [89] | STPM_SAHI | NVIDIA RTX 3050Ti GPU | 19.22 |
| Wildfire Segmentation | [60] | TransUNet | NVIDIA V100-SXM2 GPU | 1.96 |
| | | TransFire | | 1.00 |
| | [137] | TransUNet | NVIDIA GeForce RTX 2080Ti GPU | 0.83 |
| | | MedT | | 0.37 |
| | [112] | SFEwAN-SD | NVIDIA GTX 970 MSI GPU | 25.64 |
| | [121] | wUUNet | NVIDIA RTX 2070 GPU | 63.00 |
| | [127] | Deep-RegSeg | NVIDIA Tesla T4 GPU | 6.25 |
| | [131] | DeepLab v3+ | NVIDIA GeForce RTX 3090 GPU | 0.98 |
| | [133] | FireDGWF | 2 NVIDIA GTX 1080Ti GPUs | 6.62 |
| | [134] | U-Net | NVIDIA GeForce RTX 2080Ti GPU | 1.22 |
| | | DeepLab v3+ | | 1.47 |
| | | FCN | | 2.33 |
| | | PSPNet | | 2.04 |
| | [135] | ATT Squeeze U-Net | NVIDIA GeForce GTX 1070 GPU | 0.65 |
| | [138] | Improved DeepLab v3+ with MobileNet v3 | NVIDIA RTX 2080 Ti GPU | 24.00 |
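
The table above reports throughput in frames per second (FPS). How each paper measured it is not described here, so the following is only a generic sketch of one common way to time a model and to convert FPS into per-frame latency. The `infer` callable, the warm-up count, and the dummy workload are all hypothetical placeholders.

```python
import time


def measure_fps(infer, frames, warmup=2):
    """Estimate frames per second of `infer` over a list of frames.

    A few warm-up calls are made first so that one-off start-up costs
    do not distort the measurement.
    """
    for frame in frames[:warmup]:
        infer(frame)
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return len(frames) / max(elapsed, 1e-12)


def fps_to_latency_ms(fps):
    """Convert throughput in FPS to average per-frame latency in milliseconds."""
    return 1000.0 / fps


# Dummy workload standing in for a model's forward pass.
dummy_frames = [[1, 2, 3]] * 50
fps = measure_fps(lambda frame: sum(frame), dummy_frames)
```

Read this way, the 64.00 FPS listed for FCDM corresponds to roughly 15.6 ms per frame, whereas the 0.37 FPS listed for MedT corresponds to about 2.7 s per frame. The figures were obtained on different hardware and input sizes, so they are not directly comparable across rows.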

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ghali, R.; Akhloufi, M.A. Deep Learning Approaches for Wildland Fires Remote Sensing: Classification, Detection, and Segmentation. Remote Sens. 2023, 15, 1821. https://doi.org/10.3390/rs15071821