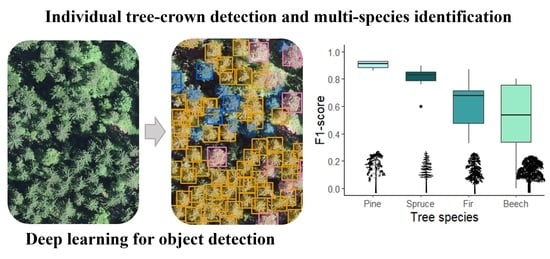

Individual Tree-Crown Detection and Species Identification in Heterogeneous Forests Using Aerial RGB Imagery and Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data

2.2.1. Aerial RGB Imagery

2.2.2. Training and Validation Data

2.2.3. Test Data

2.3. CNN-Based Tree Species Mapping

2.4. Model Performance Assessment

3. Results

3.1. CNN Performance for Single-Species Detection Models

3.2. CNN Performance for Multi-Species Detection Models

3.3. Effect of Training Data Augmentation and Forest Stand Conditions on Model Performance

4. Discussion

4.1. Significance of the Study

4.2. Performance of CNNs in Detecting Individual Tree Species with Single-Species Models

4.3. Performance of CNNs in Detecting Multiple Tree Species in Multi-Species Models

4.4. Model Generalization

4.5. Reference Data and Application

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References


| Scientific Name | Common Name | Training | Validation | Test | Total |
|---|---|---|---|---|---|
| Picea abies | Norway spruce | 3113 | 346 | 497 | 3956 |
| Abies alba | Silver fir | 2137 | 238 | 128 | 2503 |
| Pinus sylvestris | Scots pine | 539 | 60 | 26 | 625 |
| Fagus sylvatica | European beech | 3763 | 418 | 172 | 4353 |
| Total | | 9552 | 1062 | 823 | 11,437 |
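
The counts in the table above are internally consistent, and they imply that for every species the non-test crowns were divided roughly 90:10 between training and validation (this ratio is inferred from the counts, not stated in this excerpt). The short Python sketch below, using a dictionary layout of our own, recomputes the row and column totals and that training share:

```python
# Crown counts per species, copied from the table above: (training, validation, test).
# The dictionary layout is illustrative, not taken from the authors' code.
COUNTS = {
    "Picea abies": (3113, 346, 497),
    "Abies alba": (2137, 238, 128),
    "Pinus sylvestris": (539, 60, 26),
    "Fagus sylvatica": (3763, 418, 172),
}


def split_summary(counts):
    """Return per-species totals, the share of non-test crowns used for
    training, and the column totals (training, validation, test)."""
    rows = {}
    col_totals = [0, 0, 0]
    for species, (train, val, test) in counts.items():
        rows[species] = {
            "total": train + val + test,
            # fraction of the training + validation pool assigned to training
            "train_share": round(train / (train + val), 2),
        }
        for i, n in enumerate((train, val, test)):
            col_totals[i] += n
    return rows, col_totals


rows, col_totals = split_summary(COUNTS)
for species, info in rows.items():
    print(f"{species:18s} total={info['total']:5d} train share={info['train_share']:.2f}")
print("column totals:", col_totals, "grand total:", sum(col_totals))
```

Running it reports a training share of 0.90 for all four species and column totals of 9552, 1062 and 823 (11,437 crowns in total), matching the table.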

| Model | Detected sp. | Precision | Recall | F1 Score | AP | TP | FP | FN |
|---|---|---|---|---|---|---|---|---|
| Spruce | Spruce | 0.93 | 0.80 | 0.86 | 0.78 | 189 | 15 | 48 |
| Fir | Fir | 0.81 | 0.86 | 0.84 | 0.78 | 110 | 25 | 18 |
| Pine | Pine | 0.50 | 0.73 | 0.59 | 0.57 | 19 | 19 | 7 |
| Beech | Beech | 0.77 | 0.74 | 0.75 | 0.64 | 126 | 38 | 46 |
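
Precision, recall and F1 follow directly from the TP, FP and FN columns: precision = TP/(TP+FP), recall = TP/(TP+FN), and F1 is their harmonic mean. A minimal Python sketch (function and variable names are illustrative) that recomputes them for the single-species models:

```python
def detection_scores(tp, fp, fn):
    """Precision, recall and F1 from detection counts, rounded to 2 decimals.

    precision = TP / (TP + FP)  share of predicted crowns that are correct
    recall    = TP / (TP + FN)  share of reference crowns that were found
    F1        = 2PR / (P + R)   harmonic mean of precision and recall
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return round(precision, 2), round(recall, 2), round(f1, 2)


# (TP, FP, FN) of the single-species models, copied from the table above
SINGLE_SPECIES = {
    "Spruce": (189, 15, 48),
    "Fir": (110, 25, 18),
    "Pine": (19, 19, 7),
    "Beech": (126, 38, 46),
}

for model, counts in SINGLE_SPECIES.items():
    p, r, f1 = detection_scores(*counts)
    print(f"{model:6s} P={p:.2f} R={r:.2f} F1={f1:.2f}")
```

Rounded to two decimals, this reproduces the listed precision, recall and F1 values, except that the beech counts (126 TP, 46 FN) give a recall of 0.73 where the table lists 0.74.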

| Model | Detected sp. | Precision | Recall | F1 Score | AP | TP | FP | FN |
|---|---|---|---|---|---|---|---|---|
| Spruce-fir | Spruce | 0.91 | 0.79 | 0.85 | 0.77 | 188 | 18 | 49 |
| | Fir | 0.94 | 0.38 | 0.54 | 0.37 | 49 | 3 | 79 |
| Spruce-fir-pine | Spruce | 0.96 | 0.68 | 0.79 | 0.66 | 160 | 6 | 77 |
| | Fir | 0.83 | 0.63 | 0.72 | 0.58 | 81 | 17 | 47 |
| | Pine | 1.00 | 0.85 | 0.92 | 0.85 | 22 | 0 | 4 |
| Spruce-fir-beech | Spruce | 0.94 | 0.80 | 0.86 | 0.78 | 189 | 12 | 48 |
| | Fir | 0.95 | 0.44 | 0.60 | 0.42 | 56 | 3 | 72 |
| | Beech | 0.97 | 0.40 | 0.56 | 0.39 | 66 | 2 | 105 |
| Spruce-fir-pine-beech | Spruce | 0.99 | 0.63 | 0.77 | 0.63 | 150 | 1 | 87 |
| | Fir | 1.00 | 0.38 | 0.55 | 0.38 | 48 | 0 | 80 |
| | Pine | 0.96 | 0.88 | 0.92 | 0.88 | 23 | 1 | 3 |
| | Beech | 0.93 | 0.30 | 0.46 | 0.29 | 52 | 4 | 121 |
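
Unlike precision and recall, AP cannot be recomputed from the TP, FP and FN columns alone, because it integrates precision over the ranked list of detections. The excerpt does not state which AP protocol (IoU matching threshold, interpolation scheme) was used; the sketch below assumes PASCAL VOC-style all-point interpolation and uses a made-up list of five detections purely for illustration:

```python
def average_precision(is_tp_sorted, n_reference):
    """All-point interpolated AP (PASCAL VOC-style); an assumed protocol.

    is_tp_sorted: detections sorted by descending confidence; True if the
                  detection was matched to a reference crown (TP), else FP.
    n_reference:  number of reference crowns of that species (TP + FN).
    """
    if n_reference == 0:
        return 0.0
    precisions, recalls = [], []
    tp = fp = 0
    for hit in is_tp_sorted:
        if hit:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_reference)

    # Interpolate: precision at each rank becomes the best precision at any
    # equal or higher recall, i.e. a running maximum from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the interpolated curve: precision times each recall step.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical ranked detections for one species with 5 reference crowns
print(round(average_precision([True, True, False, True, False], n_reference=5), 3))
# 0.55 (the highest recall reached here is 0.6)
```

Because precision never exceeds 1, AP can be at most the highest recall a model reaches. This is why rows with near-perfect precision but low recall in the table above, such as fir in the spruce-fir model (precision 0.94, recall 0.38), end up with an AP (0.37) close to their recall.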

| Detected sp. | Spruce-Fir (Non-Aug) | Spruce-Fir (Aug) | Spruce-Fir-Pine (Non-Aug) | Spruce-Fir-Pine (Aug) | Spruce-Fir-Beech (Non-Aug) | Spruce-Fir-Beech (Aug) | Spruce-Fir-Pine-Beech (Non-Aug) | Spruce-Fir-Pine-Beech (Aug) |
|---|---|---|---|---|---|---|---|---|
| All species | 0.70 | 0.81 | 0.81 | 0.69 | 0.68 | 0.71 | 0.67 | 0.73 |
| Spruce | 0.85 | 0.87 | 0.79 | 0.67 | 0.86 | 0.86 | 0.77 | 0.85 |
| Fir | 0.54 | 0.74 | 0.72 | 0.65 | 0.60 | 0.75 | 0.55 | 0.80 |
| Pine | - | - | 0.92 | 0.84 | - | - | 0.92 | 0.39 |
| Beech | - | - | - | - | 0.55 | 0.37 | 0.46 | 0.49 |
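
The non-augmented columns of this table reproduce, to within 0.01, the F1 scores of the multi-species models in the previous table, and each augmented column sits next to its non-augmented counterpart. A short Python sketch (data layout ours, values copied from the table) that computes the per-species change when training data augmentation is used:

```python
# (non-augmented, augmented) scores per model and species, copied from the table.
# Species not included in a model are simply omitted.
SCORES = {
    "Spruce-Fir": {"Spruce": (0.85, 0.87), "Fir": (0.54, 0.74)},
    "Spruce-Fir-Pine": {"Spruce": (0.79, 0.67), "Fir": (0.72, 0.65), "Pine": (0.92, 0.84)},
    "Spruce-Fir-Beech": {"Spruce": (0.86, 0.86), "Fir": (0.60, 0.75), "Beech": (0.55, 0.37)},
    "Spruce-Fir-Pine-Beech": {
        "Spruce": (0.77, 0.85),
        "Fir": (0.55, 0.80),
        "Pine": (0.92, 0.39),
        "Beech": (0.46, 0.49),
    },
}


def augmentation_deltas(scores):
    """Return {(model, species): augmented minus non-augmented}, rounded to 2 decimals."""
    return {
        (model, species): round(aug - non_aug, 2)
        for model, per_species in scores.items()
        for species, (non_aug, aug) in per_species.items()
    }


deltas = augmentation_deltas(SCORES)
# List the changes from the largest loss to the largest gain
for (model, species), d in sorted(deltas.items(), key=lambda kv: kv[1]):
    print(f"{model:22s} {species:6s} {d:+.2f}")
```

Sorting the changes makes the mixed effect of augmentation explicit: fir gains in three of the four models (up to +0.25 in the four-species model), whereas pine loses in both models that include it, most sharply (-0.53) in the spruce-fir-pine-beech model. The "All species" row is left out, because it does not equal the plain average of the species rows and its aggregation rule is not given in this excerpt.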

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Beloiu, M.; Heinzmann, L.; Rehush, N.; Gessler, A.; Griess, V.C. Individual Tree-Crown Detection and Species Identification in Heterogeneous Forests Using Aerial RGB Imagery and Deep Learning. Remote Sens. 2023, 15, 1463. https://doi.org/10.3390/rs15051463
