Author Contributions

Conceptualization, J.X. and X.Y.; methodology, J.X.; software, J.X.; validation, J.X., X.Y. and C.L.; formal analysis, J.X.; investigation, J.X. and C.L.; resources, J.X. and X.Y.; data curation, J.X. and X.Y.; writing—original draft preparation, J.X.; writing—review and editing, J.X., X.Y. and C.L.; visualization, J.X.; supervision, X.Y.; project administration, J.X.; funding acquisition, X.Y. All authors have read and agreed to the published version of the manuscript.

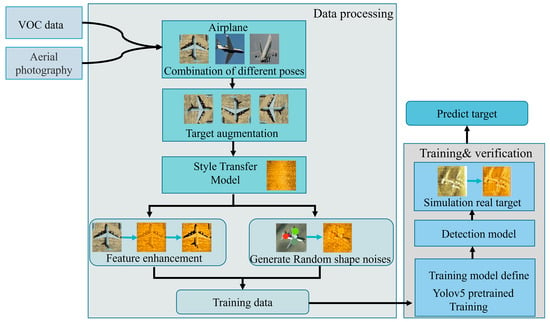

Figure 1.

Data processing to generate training data.

Figure 2.

Our process framework.

Figure 3.

Real sonar images. (a) Airplane in an inclined pose. (b) Airplane in a right pose. (c) Shipwreck in a lateral pose. (d) Shipwreck in a frontal pose.

Figure 4.

Optical images of the same target in multiple attitudes.

Figure 5.

Data expansion for a single target.

Figure 6.

Transfer of an optical target to a sonar-style target using an original sonar image. (a) Original sonar image. (b) Optical image. (c) Traditional style transfer image. (d) Feature-enhanced style transfer image.

Figure 7.

Example of shadow and reflector on a real sonar target.

Figure 8.

Frequency analysis of the original sonar image. (a) Grayscale image of the original sonar target. (b) Fourier transform of the sonar image. (c) Gaussian filter in the frequency domain. (d) Gaussian-filtered image.
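
The frequency analysis named in this caption (Fourier transform, Gaussian filter in the frequency domain, filtered image) can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `gaussian_lowpass` and the parameter `sigma` are assumptions, and the paper's actual filter settings are not given here.

```python
import numpy as np

def gaussian_lowpass(image, sigma):
    """Filter a 2-D grayscale image with a Gaussian mask in the frequency domain.

    `sigma` (hypothetical parameter) is the mask width in frequency bins.
    Returns the centered spectrum, the mask, and the filtered image.
    """
    rows, cols = image.shape
    # Centered spectrum: the zero-frequency bin moves to index (rows//2, cols//2).
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # Squared distance of every frequency bin from the spectrum center.
    v = np.arange(rows) - rows // 2
    u = np.arange(cols) - cols // 2
    dist2 = v[:, None] ** 2 + u[None, :] ** 2
    # Gaussian mask: 1 at the center (mean preserved), decaying for high frequencies.
    mask = np.exp(-dist2 / (2.0 * sigma ** 2))
    # Undo the shift and return to the spatial domain.
    filtered = np.fft.ifft2(np.fft.ifftshift(spectrum * mask)).real
    return spectrum, mask, filtered
```

The three return values correspond loosely to panels (b), (c), and (d); `np.log1p(np.abs(spectrum))` is a common way to display the spectrum.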

Figure 9.

Frequency analysis of a style transfer sample image. (a) Grayscale image obtained using style transfer directly. (b) Fourier transform of the sonar image. (c) Gaussian filter in the frequency domain. (d) Gaussian-filtered image.

Figure 10.

Style transfer process. (a) General style transfer process. (b) Feature enhancement based on style transfer.

Figure 11.

Frequency analysis of an enhanced style transfer sample image. (a) Grayscale image obtained using style transfer directly. (b) Fourier transform of the sonar image. (c) Gaussian filter in the frequency domain. (d) Gaussian-filtered image.

Figure 12.

Examples of incomplete targets. (a) Sediment on target. (b) Defective target.

Figure 13.

Target with excessive noise. (a) Excessive noise on the target. (b) Style transfer applied to the target.

Figure 14.

Examples of real sonar images.

Figure 15.

Training indicators. (a) Precision during training. (b) Recall during training. (c) Object loss, the error caused by confidence. (d) Class loss, the error caused by the target's type.

Figure 16.

Detection results on validation data. (a) Simulated incomplete target. (b) Style transfer target. (c) Detection result in sonar background.

Figure 17.

Comparison of style models on the training and real data.

Figure 18.

Real data verification process.

Figure 19.

Wreckage simulation process and detection result.

Figure 20.

Different sizes of the same target. (a) Target size is (32, 32). (b) Target size is (64, 64). (c) Target size is (128, 128).

Figure 21.

Detection on real data (without style transfer).

Table 1.

Amount of original and augmented data by target type.

| Segment No. | Class | Original Dataset | Augmentation Dataset |
|---|---|---|---|
| 1 | Aeroplane | 739 | 1591 |
| 2 | Bicycle | 254 | 557 |
| 3 | Car | 781 | 1698 |
| 4 | Person | 650 | 1390 |
| 5 | Ship | 583 | 1289 |

Table 2.

Three sonar styles applied in our style transfer process.

Table 3.

Noise quantities and type mapping.

Table 4.

Style transfer result on Table 3.

Table 5.

Performance comparison with existing methods.

| Model | Precision | Recall | mAP (IOU = 0.5) |
|---|---|---|---|
| StyleBank + fastrcnn [4] | 0.860 | 0.705 | 0.786 |
| Whitening and Coloring Transform [3] | 0.875 | 0.836 | 0.75 |
| Improved style transfer + YOLOv5 [2] | 0.853 | 0.945 | 0.876 |
| Our method 1: fast style + YOLOv5 + shape noise | 0.899 | 0.861 | 0.865 |
| Our method 2: fast style + Faster R-CNN + shape noise | 0.919 | 0.792 | 0.882 |
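
The precision and recall columns, and the IOU = 0.5 threshold in the mAP column, rest on matching predicted boxes to ground-truth boxes by intersection over union. The sketch below illustrates that matching under stated assumptions: boxes are `(x1, y1, x2, y2)` tuples, class labels and confidence ranking are ignored, and the helper names `iou` and `precision_recall` are hypothetical. It does not reproduce the evaluation code behind the reported numbers, and a full mAP additionally averages precision over recall levels and classes.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Overlap is zero when the boxes do not intersect.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, threshold=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes."""
    unmatched = list(ground_truth)
    true_pos = 0
    for pred in predictions:
        # Best remaining ground-truth box for this prediction, if any.
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)  # each ground-truth box is matched at most once
            true_pos += 1
    precision = true_pos / len(predictions) if predictions else 0.0
    recall = true_pos / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

For example, with ground truth `[(0, 0, 10, 10), (20, 20, 30, 30)]` and predictions `[(0, 0, 10, 8), (50, 50, 60, 60)]`, one prediction matches (IoU 0.8), so both precision and recall are 0.5.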

Table 6.

Comparison of models in precision and recall.

| Model | Precision | Recall |
|---|---|---|
| fast style + YOLOv5 + shape noise | 0.899 | 0.861 |
| fast style + Faster R-CNN + shape noise | 0.919 | 0.792 |
| fast style + YOLOv5 + shape noise and mix real data | 0.957 | 0.944 |
| fast style + YOLOv5 + gauss noise | 0.868 | 0.819 |
| fast style + YOLOv5 + salt and pepper noise | 0.870 | 0.833 |
| fast style + YOLOv5 | 0.873 | 0.764 |
| StyleBank + YOLOv5 | 0.755 | 0.563 |
| fast style + Faster R-CNN | 0.809 | 0.764 |

Table 7.

Detection results with two datasets.

| Model | Dataset | Precision | Recall |
|---|---|---|---|
| style transfer + YOLOv5 | remote sensing images + VOC2007 | 0.873 | 0.764 |
| style transfer + YOLOv5 | remote sensing images | 0.815 | 0.736 |

Table 8.

Detection confidence for 4 types of noise (tested on validation data).

| Noise Quantity \ Noise Type | Line | Point | Rectangle | Mixed |
|---|---|---|---|---|
| 0 | 0.954 | | | |
| 4 | 0.945 | 0.921 | 0.946 | 0.959 |
| 6 | 0.954 | 0.931 | 0.956 | 0.972 |
| 8 | 0.967 | 0.954 | 0.961 | 0.978 |
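
The noise types (line, point, rectangle, mixed) and quantities (0, 4, 6, 8) in this table suggest a simple way to simulate incomplete targets. The following is only a sketch of the idea: the function `add_shape_noise`, the `size` parameter, the zero fill value, and the random placement are all assumptions, since the shapes, sizes, and intensities actually used are not specified in these captions.

```python
import numpy as np

def add_shape_noise(image, kind, quantity, size=6, seed=0):
    """Blank out `quantity` random line, point, or rectangle regions of a 2-D image.

    `kind` is "line", "point", "rectangle", or "mixed" (random choice per shape).
    All parameter names and defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    out = image.copy()  # leave the input image untouched
    rows, cols = out.shape
    for _ in range(quantity):
        shape = rng.choice(["line", "point", "rectangle"]) if kind == "mixed" else kind
        r = int(rng.integers(0, rows))
        c = int(rng.integers(0, cols))
        if shape == "point":
            out[r, c] = 0
        elif shape == "line":
            out[r, c:c + size] = 0            # horizontal stroke, clipped at the border
        elif shape == "rectangle":
            out[r:r + size, c:c + size] = 0   # square patch, clipped at the border
        else:
            raise ValueError(f"unknown noise kind: {kind!r}")
    return out
```

Calling `add_shape_noise(target, "mixed", 8)` on a grayscale target crop would correspond to the last row and column of the table, under the assumptions above.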

Table 9.

Noise types and style transfer images.

Table 10.

Detection results for 3 types of noise.

| Model | Precision | Recall |
|---|---|---|
| fast style + YOLOv5 + shape noise | 0.899 | 0.861 |
| fast style + YOLOv5 + gauss noise | 0.868 | 0.819 |
| fast style + YOLOv5 + salt and pepper noise | 0.870 | 0.833 |

Table 11.

Detection results for 2 style models.

| Model | Precision | Recall |
|---|---|---|
| fast style + YOLOv5 | 0.873 | 0.764 |
| StyleBank + YOLOv5 | 0.755 | 0.563 |