Fast Segmentation and Dynamic Monitoring of Time-Lapse 3D GPR Data Based on U-Net

Abstract

1. Introduction

2. Experiment and Methods

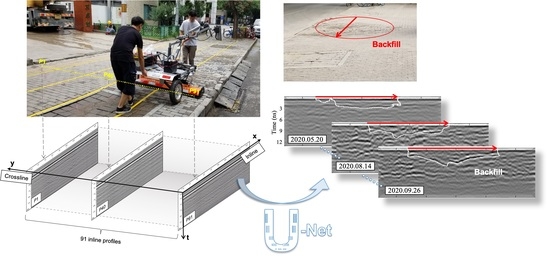

2.1. Field Experiment and Data

2.2. U-Net Structure and Model Training

- Contracting path: This path captures the context information. It has four layers; each layer consists of two identical 3 × 3 convolutions, each followed by a rectified linear unit (ReLU) activation function, and downsampling is performed by a 2 × 2 max pooling operation. The number of feature channels is doubled after each downsampling step. In total, four max pooling operations are performed along this path to extract feature information from the sample.

- Expansive path: This is the upsampling path used to localize the target. At each step, a 2 × 2 convolution (up-convolution) upsamples the feature map and halves the number of feature channels, and then two 3 × 3 convolutions are applied, each followed by a ReLU. In the last layer, a 1 × 1 convolution maps each feature vector to the prediction classes, completing the segmentation and producing output data of the same size as the input data.
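To make the channel and size bookkeeping of the two paths concrete, the sketch below traces feature-map shapes through a U-Net with four downsampling steps. It is a minimal illustration, not the authors' implementation. It assumes padded ("same") 3 × 3 convolutions so that the output size equals the input size, as stated above; a base width of 64 channels, a single input channel and two output classes, which are defaults borrowed from the original U-Net paper rather than values given here; and the skip-connection concatenation from that original design. The function name `unet_shapes` is hypothetical.

```python
def unet_shapes(height, width, in_channels=1, base_channels=64,
                depth=4, num_classes=2):
    """Trace (stage, channels, height, width) through a U-Net.

    Assumes padded 3x3 convolutions (spatial size preserved), 2x2 max
    pooling with stride 2, and 2x2 up-convolutions with stride 2.
    """
    if height % 2 ** depth or width % 2 ** depth:
        raise ValueError("input sides must be divisible by 2**depth")
    shapes = [("input", in_channels, height, width)]
    channels, h, w = base_channels, height, width
    skip_sizes = []
    # Contracting path: two 3x3 conv + ReLU per layer, then 2x2 max pooling.
    for level in range(depth):
        shapes.append((f"down{level + 1}", channels, h, w))
        skip_sizes.append((h, w))      # kept for the skip connection
        h, w = h // 2, w // 2          # max pooling halves each side
        channels *= 2                  # channels double after downsampling
    # Bottom of the "U": two more 3x3 convs at the coarsest resolution.
    shapes.append(("bottleneck", channels, h, w))
    # Expansive path: the 2x2 up-convolution doubles each side and halves the
    # channels; the result is concatenated with the matching contracting-path
    # map, and two 3x3 convs reduce it back to `channels`.
    for level in reversed(range(depth)):
        channels //= 2
        h, w = h * 2, w * 2
        assert (h, w) == skip_sizes[level], "skip connection size mismatch"
        shapes.append((f"up{level + 1}", channels, h, w))
    # Final 1x1 convolution maps each feature vector to class scores.
    shapes.append(("output", num_classes, h, w))
    return shapes


for stage in unet_shapes(256, 256):
    print(stage)
```

For a 256 × 256 input this reaches 1024 channels at 16 × 16 in the bottleneck and returns a 2 × 256 × 256 output. The divisibility check reflects the four halvings: with unpadded convolutions, as in the original U-Net, the output would instead be smaller than the input.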

2.3. Data Segmentation

2.4. Accuracy Evaluation

- , good result;

- , unsatisfactory result, and the segmentation is invalid.

2.5. Mask Comparison

3. Results

3.1. Training and Segmentation

- When , a good prediction is obtained: the target region is successfully segmented and the borders fit well (yellow arrows in Figure 8a–d). In a few cases, such as the one indicated by the red arrow on the right in Figure 8b, the U-Net output is better than the manual ground truth. At times the borders of the segmentation masks differ slightly from the manual ground truth (red arrows in Figure 8c,d), but the error is acceptable, at less than one wavelength.

- When , the segmentation results are poor: some segmentation maps are incomplete and their boundaries are incorrect, as indicated by the red arrows in Figure 8e,f.
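The one-wavelength tolerance for border errors can be expressed in metres. In a low-loss, non-magnetic medium the wavelength is λ = c / (f √εr). The sketch below uses the 2 GHz center frequency of the antenna array; the relative permittivity εr is not given in this section and must be supplied by the reader, so the value 9 in the example is purely an illustrative assumption, not a measured property of the backfill. The helper names are hypothetical.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def wavelength_m(frequency_hz, rel_permittivity):
    """Wavelength in a low-loss, non-magnetic medium: c / (f * sqrt(eps_r))."""
    if frequency_hz <= 0 or rel_permittivity < 1:
        raise ValueError("need f > 0 and eps_r >= 1")
    return C_M_PER_S / (frequency_hz * math.sqrt(rel_permittivity))


def within_one_wavelength(border_error_m, frequency_hz, rel_permittivity):
    """True if a border offset is smaller than one wavelength in the medium."""
    return abs(border_error_m) < wavelength_m(frequency_hz, rel_permittivity)


print(f"{wavelength_m(2e9, 1.0):.3f} m in air")
print(f"{wavelength_m(2e9, 9.0):.3f} m for an assumed eps_r of 9")
```

At 2 GHz the wavelength is about 0.150 m in air and about 0.050 m for εr = 9, so the tolerance tightens as the medium becomes more dielectric.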

3.2. Monitoring

- June 4: As shown in Figure 11a, the left and right borders change on the blue masks from Y = 1.95 m to Y = 2.25 m, and the bottom borders change on the yellow masks from Y = 2.35 m to Y = 3.35 m.

- August 8: As shown in Figure 11b, the lateral boundaries change significantly from Y = 2.10 m to Y = 2.65 m and from Y = 3.00 m to Y = 4.50 m (yellow masks). The bottom borders also change from Y = 2.10 m to Y = 2.65 m (yellow masks) and from Y = 3.90 m to Y = 4.40 m (blue masks).
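The comparisons above distinguish lateral (left and right) border changes from bottom border changes at each Y position. A minimal sketch of that bookkeeping follows, assuming each date's segmentation is stored as a list of co-registered 2D binary slices, one per Y position, with rows ordered by depth; the 0.05 m slice spacing is an assumption chosen because the Y values quoted above are multiples of 0.05 m. The helpers `borders` and `border_changes` are hypothetical, and the blue/yellow mask coloring is not modeled.

```python
def borders(mask):
    """Return (left, right, bottom) indices of the foreground in one 2D slice.

    `mask` is a list of rows ordered by depth (top to bottom), each row a
    list of 0/1 values across the section. Returns None for an empty slice.
    """
    cells = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not cells:
        return None
    cols = [c for _, c in cells]
    return min(cols), max(cols), max(r for r, _ in cells)


def border_changes(vol_a, vol_b, y0=0.0, dy=0.05, tol=0):
    """Y positions where lateral or bottom borders differ between two dates.

    vol_a, vol_b: lists of co-registered 2D masks, one per Y slice.
    A border counts as changed when it moves by more than `tol` cells.
    """
    if len(vol_a) != len(vol_b):
        raise ValueError("volumes must have the same number of Y slices")
    lateral, bottom = [], []
    for k, (slice_a, slice_b) in enumerate(zip(vol_a, vol_b)):
        ba, bb = borders(slice_a), borders(slice_b)
        if ba is None or bb is None:
            continue  # target missing on one date: not a border comparison
        y = round(y0 + k * dy, 3)
        if abs(ba[0] - bb[0]) > tol or abs(ba[1] - bb[1]) > tol:
            lateral.append(y)
        if abs(ba[2] - bb[2]) > tol:
            bottom.append(y)
    return lateral, bottom
```

The `tol` parameter lets small border jitter, for example offsets below one wavelength, be ignored when deciding whether the pit has actually changed.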

4. Discussion

4.1. Did the Backfill Pit Really Change?

4.2. Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Datasets | Date | Datasets | Date | Datasets | Date |
|---|---|---|---|---|---|
| (a) | 05.20 | (f) | 08.02 | (k) | 08.30 |
| (b) | 06.04 | (g) | 08.05 | (l) | 09.05 |
| (c) | 06.18 | (h) | 08.08 | (m) | 09.12 |
| (d) | 07.22 | (i) | 08.14 | (n) | 09.26 |
| (e) | 07.30 | (j) | 08.22 | (o) | 10.25 |

Appendix A.1. Raw Data and Misalignments

Appendix A.2. Antenna Lifting Test

Appendix A.3. Preprocessing on Inline Profiles

Appendix A.4. Three-Dimensional Time Zero Normalization

Appendix A.5. Three-Dimensional Data Combination and Imaging


| Parameter | Value |
|---|---|
| Antenna type | Ground-coupled antenna arrays |
| Number of channels | 30 |
| Center frequency | 2 GHz |
| Channel spacing (crossline) | 0.05 m |
| Scan spacing (inline) | 0.02 m |
| Sampling points | 512 |
| Time window | 15 ns |
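A few derived quantities follow directly from the acquisition parameters. The sketch below assumes that the 512 sampling points divide the 15 ns time window into equal intervals and measures the swath between the outermost channel positions; both are assumptions about how the parameters are defined, and the function names are hypothetical.

```python
TIME_WINDOW_NS = 15.0      # time window from the parameter table
SAMPLES_PER_TRACE = 512    # sampling points
NUM_CHANNELS = 30
CHANNEL_SPACING_M = 0.05   # crossline
SCAN_SPACING_M = 0.02      # inline


def sampling_interval_ns(window_ns=TIME_WINDOW_NS, samples=SAMPLES_PER_TRACE):
    # Assumption: the window is divided into `samples` equal intervals.
    return window_ns / samples


def swath_width_m(channels=NUM_CHANNELS, spacing_m=CHANNEL_SPACING_M):
    # Distance between the outermost channel positions.
    return (channels - 1) * spacing_m


dt = sampling_interval_ns()
print(f"sampling interval: {dt:.4f} ns, rate: {1 / dt:.1f} GHz")
print(f"swath (outer channels): {swath_width_m():.2f} m")
print(f"scans per metre inline: {1 / SCAN_SPACING_M:.0f}")
```

Under these assumptions each sample spans about 0.0293 ns (roughly 34 GHz sampling), far above twice the 2 GHz center frequency, and a 2 ns time shift corresponds to about 68 samples.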

| Step | Processing Method | Parameter/Object |
|---|---|---|
| Time zero correction | Antenna lifting test | 2 ns |
| Background removal (BGR) | Sliding window | 150 scans |
| Frequency filtering | Butterworth filter | 500–1500 MHz |
| 3D time zero normalization | Correlation | Direct-coupling |
| 3D data combination | Correlation | Overlapping data |
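Three of these steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' processing code: time zero correction is modeled as dropping the samples recorded before an assumed 2 ns time zero; background removal subtracts, from each trace, the mean trace of a 150-scan window along the inline; and the correlation steps are modeled as an integer-lag search that maximizes the cross-correlation between a reference trace (for example, a direct-coupling wavelet or an overlapping trace) and another trace. All function names are hypothetical. The Butterworth band-pass step is omitted here; one option would be `scipy.signal.butter` with `btype="bandpass"`.

```python
def shift_time_zero(trace, t0_ns, dt_ns):
    """Drop the samples recorded before time zero, rounded to whole samples."""
    return trace[round(t0_ns / dt_ns):]


def remove_background(traces, window=150):
    """Sliding-window background removal along the inline direction.

    traces: list of equal-length traces, one per scan. From each trace we
    subtract the mean of `window` neighbouring scans (fewer if the profile is
    shorter), with the window shifted inward at the profile ends.
    """
    if window < 1:
        raise ValueError("window must be at least one scan")
    n = len(traces)
    out = []
    for i in range(n):
        lo = max(0, i - window // 2)
        hi = min(n, lo + window)
        lo = max(0, hi - window)
        count = hi - lo
        mean = [sum(traces[k][j] for k in range(lo, hi)) / count
                for j in range(len(traces[i]))]
        out.append([x - m for x, m in zip(traces[i], mean)])
    return out


def best_lag(reference, trace, max_lag):
    """Integer lag maximising sum(reference[j] * trace[j + lag]).

    A positive lag means `trace` arrives later than `reference`; shifting
    `trace` back by that many samples aligns the two.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(reference[j] * trace[j + lag]
                    for j in range(len(reference))
                    if 0 <= j + lag < len(trace))
        if score > best_score:
            best, best_score = lag, score
    return best
```

Subtracting a local rather than a global mean trace removes horizontally continuous ringing and direct-coupling energy while keeping the pit's lateral edges, which vary from scan to scan. The brute-force loops favor clarity over speed.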

| | Training Dataset | Testing Dataset |
|---|---|---|
| Number | 249 | 52 |
| Sampling points of GPR data | 16,434,000 | 3,432,000 |
| Proportion of total data | 19.5% | 7.1% |
| Data acquisition date | 20 May 2019–26 September 2019 | 25 October 2019 |
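A quick consistency check on the dataset table: dividing the sampling points by the number of samples gives the size of each sample. The sketch assumes every sample in a set has the same number of points, which the table does not state explicitly; the helper name is hypothetical.

```python
def points_per_sample(total_points, num_samples):
    """Sampling points per sample, assuming all samples have equal size."""
    if total_points % num_samples:
        raise ValueError("total is not a whole multiple of the sample count")
    return total_points // num_samples


print(points_per_sample(16_434_000, 249))  # training set -> 66000
print(points_per_sample(3_432_000, 52))    # testing set -> 66000
```

Both sets divide evenly into 66,000 sampling points per sample, consistent with equally sized training and testing samples.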


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shang, K.; Zhang, F.; Song, A.; Ling, J.; Xiao, J.; Zhang, Z.; Qian, R. Fast Segmentation and Dynamic Monitoring of Time-Lapse 3D GPR Data Based on U-Net. Remote Sens. 2022, 14, 4190. https://doi.org/10.3390/rs14174190


Chicago/Turabian style: Shang, Ke, Feizhou Zhang, Ao Song, Jianyu Ling, Jiwen Xiao, Zihan Zhang, and Rongyi Qian. 2022. "Fast Segmentation and Dynamic Monitoring of Time-Lapse 3D GPR Data Based on U-Net." Remote Sensing 14, no. 17: 4190. https://doi.org/10.3390/rs14174190