1. Introduction

Activities related to cultural heritage (CH) documentation represent a challenging assignment, characterised by several constraints and specifications necessary to meet the requirements of heritage documentation, often dealing with limited resources. Thanks to technological developments in the last few decades, several instruments and techniques have become available that facilitate the completion of tasks associated with CH documentation. These topics have been a central research asset for the overall community of experts, especially in the geomatics field.

An important research theme, which has been stressed by several authors, is connected to the possibility of using low-cost and commercial off-the-shelf (COTS) sensors to tackle CH documentation, optimising the ratio between cost and effectiveness of the results (in terms of metric and geometric accuracy, level of detail, and overall quality of the acquired information). The optimization of resources has also been stressed by the commissioner of the documentation process [1,2], due to a general lack of resources in the CH domain, in terms of both funding and expertise. Accordingly, the need to develop more sustainable and effective solutions to complete the documentation process is both developer- and user-oriented.

Nevertheless, another aspect to be considered is related to the overall democratization of the documentation process, due to the broader involvement of non-expert users in the process.

Over the last few decades, the miniaturization of electro-mechanical components and sensors has been leveraged by systems with increased computational power. This sort of second digital revolution has been supported by various technological enhancements, resulting in a wider panorama of instruments and solutions for the documentation process in general, and in the CH domain in particular.

Furthermore, the general decrease in the retail price of these electronic components is another crucial factor for their diffusion. Finally, in the field of portable devices, the evolution mentioned above has also been paired with a constant growth of computational power and overall performance of smartphones and tablets, resulting in the enhancement of computer vision (CV) algorithms and general optimizations of the data processing workflow for these kinds of applications.

In the field of 3D documentation, the use of commercial and low-cost solutions has been well described in the literature, in which different types of sensors have been tested with diverse results.

A well-known experience in this sense was related to the “Project Tango”, released by Google LLC in 2014 and closed in 2017. The project foresaw the development of ad hoc devices capable of retrieving their position and orientation in a 3D environment for augmented reality (AR), motion tracking, and navigation purposes. Devices from “Project Tango” have been tested by several researchers across a broad spectrum of applications; for example, Tomaštík et al. [3] have evaluated the possibility of using Tango technology for forest inventory tasks to achieve results comparable with other 3D recording methods, such as terrestrial laser scanning (TLS) and photogrammetry, and showed that the results were highly influenced by the scanning methodology adopted during field acquisition. Hyyppä et al. [4] have performed similar tests for forest inventory, comparing the results achieved with Tango technology and Microsoft Kinect.

Several tests have also been performed using Tango technology for indoor and outdoor positioning. Nguyen and Luo [5] have assessed the positional accuracy of the Tango platform in indoor environments considering different scene conditions, reporting accuracies ranging from centimetres to metres. Marques et al. [6] have proposed an enhanced extension of the Tango localization system, through the use of fiducial markers.

Concerning the use of Tango technology for indoor scanning, Zlatanova and Diakité [7] and Froehlich et al. [8] have performed preliminary analyses regarding the possibilities offered by Tango devices for the creation of 3D models based on real-world environments.

On the other hand, the first version of Microsoft Kinect was released at the end of 2010, designed to be used for gaming purposes with the Xbox console series. It contains different embedded sensors, which are used to analyse and track the movements of players. Nevertheless, this device has also achieved widespread use for other purposes aside from the gaming world, especially in the fields of robotics, geomatics, and CV.

Part of the literature regarding the Kinect is dedicated to deeper analysis of this device, particularly in terms of its main specifications and the achievable accuracies. Smisek et al. [9] have analysed the resolution of the depth measurement of the Kinect and its accuracy and proposed a calibration procedure for the overall system. Khoshelham [10] has investigated the accuracy and the quality of Kinect depth data acquired with different strategies and at different distances from the object of interest.

The Kinect was considered a game-changer, especially in the fields of robotics and CV. A review of the use of Kinect for CV applications can be found in Han et al. [11], while more information on its use for robotics applications can be found in the studies of El-Laithy et al. [12] and Fankhauser et al. [13]. Concerning its use for 3D documentation tasks, more information can be found in the studies of Mankoff and Russo [14], Lachat et al. [15], Fankhauser et al. [13], and Kersten et al. [16].

The technology developed with the Kinect converged in the Microsoft HoloLens project, which was first released for developers in March 2016. The Microsoft HoloLens is a pair of smart glasses developed for use in mixed reality applications. Despite this primary use, the Microsoft HoloLens can also be deployed for the acquisition of 3D reality-based models. Trotta et al. [17] have tested different solutions for enhancement of the 3D data acquired by HoloLens and evaluated the accuracy of the achieved models; Weinmann et al. [18] have compared the accuracy of HoloLens with a TLS down-sampled data set for indoor 3D mapping, confirming the possibility of using this device for fast surveying applications. Furthermore, the same research group [19] has recently published a detailed review of the possibilities provided by the HoloLens for the completion of these tasks.

Another promising class of sensors tested by several research groups is the so-called time-of-flight (ToF) camera, which can be used to generate point clouds [20,21]. These devices are typically characterized by just a few thousand pixels, a maximum unambiguous measurement range of up to thirty meters, and small dimensions. Their main advantages, with respect to other 3D measurement techniques, include the possibility of acquiring data at video frame rates and of obtaining 3D point clouds without scanning and from just one point of view. ToF cameras usually deliver a range image and an amplitude image with infrared modulation intensities at video frame rates: the range image (or depth image) contains, for each pixel, the measured radial distance between the considered pixel and its projection onto the observed object, while the amplitude image contains, for each pixel, the strength of the signal reflected by the object. In some cases, an intensity image is also delivered, which represents the mean of the total light incident on the sensor (i.e., reflected modulated signal and background light of the observed scene). As reported in [21], these sensors have been employed successfully for 3D documentation, presenting satisfactory results in terms of the final products and related accuracy. At present, the main applications of ToF cameras are related to robot navigation [22], security and monitoring [23], and logistics [24].

A wider analysis of the possibilities offered by the use of personal devices in the production of metric 3D data has also been conducted within the context of a European Union-funded project: the REPLICATE project [25].

Finally, in 2020, Apple released two products embedding new LiDAR sensors, representing a novelty in the world of personal devices: the iPad Pro and the iPhone 12 Pro.

The integration of LiDAR into a consumer-grade personal device generated a lot of hype among both consumers and professional operators. However, at the time of writing, only a few technical articles have been published concerning the use of these two devices for fast surveying applications; therefore, the achievable accuracy using these devices is yet to be confirmed. Vogt et al. [26] have tested the possibilities offered by the latest Apple devices for industrial 3D scanning, focusing both on the LiDAR system and the frontal TrueDepth camera. The authors tested the two sensors on small objects (LEGO bricks) and compared the results with an industrial 3D scanning system. In particular, they focused on the impact of the colour of the selected bricks during the scanning process and the overall scanning accuracy. The authors concluded that, at the current state of development, the possibilities offered by these devices may not be sufficient for most industrial applications; however, they may fit specific applications with lower requirements in terms of accuracy.

Murtiyoso et al. [27] have presented an initial assessment regarding the use of Apple LiDAR for heritage documentation purposes. Two applications enabling the acquisition of point clouds with the iPad Pro were tested in three different scenarios: (i) small–medium objects; (ii) building exterior façades; and (iii) indoor mapping. In all three scenarios, the data acquired with the Apple device were compared with a TLS or close range photogrammetry (CRP) reference data set. The authors highlighted that one of the main issues connected with this solution for heritage documentation is related to the high noise of the acquired point cloud; however, with ad hoc strategies, the use of Apple LiDAR in this field can lead to promising results.

Gollob et al. [28] have proposed some considerations regarding the use of Apple LiDAR for forest inventory purposes. Three different applications were tested implementing an ad hoc acquisition strategy, and the results were compared with other, more consolidated range-based techniques and traditional measurement approaches for tree diameter estimation. The authors concluded that the iPad LiDAR can provide faster and more flexible techniques, compared to conventional measurement approaches; however, in this case, high accuracies and spatial resolutions are yet to be achieved.

Luetzenburg et al. [29] have evaluated the use of the iPhone 12 Pro, exploiting different apps for the 3D survey of rocks and cliffs for geological purposes, and stressed the high potential and competitiveness of the miniaturized technology integrated into a smartphone. The authors of previous research work dedicated to the preliminary assessment of the Apple LiDAR capabilities have agreed on the fact that the implementation of this technology in consumer-grade personal devices represents a ground-breaking innovation and that promising developments are to be expected in the near future, confirming the outcomes of a preliminary assessment of Apple devices equipped with LiDAR sensors carried out by the same research group that authored this manuscript [30].

King et al. [31] have tested the ability of iPhone 12 Pro LiDAR for monitoring snow depth changes over time in small areas (up to 5 m²); they analysed the effectiveness of the LiDAR device under low-temperature conditions (−20 °C), in comparison with ruler measurements, stressing the low-cost aspect and portability of the device.

Tavani et al. [32] have evaluated the iPhone 12 Pro’s global navigation satellite system (GNSS) accuracy, inertial measurement unit (IMU) and magnetometer effectiveness, photo and video capabilities, and LiDAR sensor for geological studies. The iPhone 12 Pro GNSS receiver is able to reach an accuracy of a few metres within seconds, which makes it competitive with other mobile devices that need minutes to achieve the same accuracy. Regarding the LiDAR module, the 3D Scanner App, EveryPoint, and Pix4Dcatch were tested for the mapping of indoor and outdoor test areas and in a real-case scenario for surveying a rock cliff, stressing the advantage of user-friendly iOS applications for metric survey and the portability of the device in the field; however, the authors also reported drawbacks with respect to the online processing of some apps (e.g., Pix4Dcatch), which requires the data to be uploaded to the cloud, and reported a ‘doming effect’ in the geometric reconstruction of the scene.

Díaz-Vilariño et al. [33] have observed the capability of the LiDAR sensor embedded in the iPad Pro for 3D modelling in two consecutive rooms and an outdoor scenario, comparing the Apple point clouds with TLS and building information modelling (BIM) models considering surface coverage, as well as local and global precision. They stressed the inability of Apple’s LiDAR devices to capture small-scale objects, as well as their suitability only for small environments; furthermore, they reported that the LiDAR is not influenced by lighting conditions.

Balado et al. [34] have tested the performance of the iPad Pro LiDAR, in comparison to two other LiDAR sensors, for surveying CH in the open air; the authors stressed the good compromise between low cost and achievable results for the iPad Pro, even if the obtained point cloud was found not to be suitable for stone individualization on the basis of curvature analysis and connected components algorithm.

The LiDAR embedded in the iPhone 12 Pro has also been tested for human body measurements [35], stressing the potential of this technology in manifold domains.

2. Materials and Methods

The research presented in this work aims to test two different Apple devices: the iPad Pro (4th generation), released in March 2020, and the iPhone 12 Pro, released seven months later in October 2020. The devices employed for the tests presented in this publication were equipped as follows. The iPad Pro [36] weighs 684 g and is characterised by a 12.9′′ Retina display, an A12Z Bionic chip with 64-bit architecture, 8-core GPU, 8-core CPU, 16-core Neural Engine, 8 GB RAM, 512 GB capacity, and iOS 14.4.2 software version; it is equipped with two RGB rear cameras (a 12 MP wide camera and a 10 MP ultra-wide camera) and a LiDAR sensor. The iPhone 12 Pro [37] has a 6.1′′ screen, an A14 Bionic chip (5 nm process) with 6-core CPU, 4-core GPU, 16-core Neural Engine, 6 GB RAM, 256 GB capacity, and iOS 14.7.1 software version; it has three RGB 12 MP rear cameras (wide, ultra-wide, and zoom) and a LiDAR sensor, with a total weight of 187 g. In particular, the A14 Bionic chip and 16-core Neural Engine integrated into the iPhone 12 Pro improve its performance, in terms of speed, while also allowing it to save energy. Being personal devices, both of these platforms are portable and lightweight but present a few noticeable differences in specifications. The iPad Pro has a bigger screen, allowing one to better observe the acquisition process in real-time, while the iPhone 12 Pro is lighter and the A14 Bionic chip performs better than the A12Z mounted on the iPad Pro. As far as the price range is concerned, the cost of the iPad Pro is around EUR 1700 for the Long-Term Evolution (LTE) version, while the iPhone 12 Pro costs around EUR 1100 (as of January 2022).

Only a few official technical specifications are available for the laser sensors embedded in the latest Apple products; however, according to a literature review (e.g., Murtiyoso et al. [27]), the sensor is a solid-state LiDAR (SSL), a type of LiDAR that, in contrast with traditional LiDAR systems, avoids the use of mechanical motorized parts to ensure higher scalability and reliability, especially for autonomous vehicle and robotics applications [38,39]. As described by Aijazi et al. [40], 3D SSL is a new technology that relies on silicon chips without moving parts, characterized by a longer operational life and smaller sizes than traditional LiDAR, which uses rotating mechanisms. More specifically, different authors [27,28,29] have suggested that the LiDAR embedded in Apple devices uses an emitter composed of a vertical-cavity surface-emitting laser (VCSEL) sending laser pulses with a diffraction optics element (DOE), enabling miniaturization of the sensor; meanwhile, the receiver is a single-photon avalanche diode (SPAD). The LiDAR is based on a direct time-of-flight (dToF) technology with a near infra-red (NIR) complementary metal-oxide semiconductor (CMOS) image sensor (CIS) for the SPADs. Each sensor can directly measure the time between the emission of the light and the detection of the received light [41]. The Sony CIS combined with the VCSEL from Lumentum, integrated into both the iPhone 12 Pro and iPad Pro, features a global matrix composed of 576 points, emitted alternately in 4 different arrays, each of which consists of 144 points; the global matrix is composed of 9 sub-matrices, each of them with 8 dots per column and 8 dots per row [29,42]. The points measured by the LiDAR sensor combine information acquired by the cameras and motion sensors integrated into Apple devices, supported by the CV algorithm of the Bionic chip [43]. The declared range of the LiDAR sensor is 5 m, and Luetzenburg et al. [29] have concluded that there are no differences between the LiDAR sensors embedded in the iPad and iPhone.
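As a rough illustration of the direct time-of-flight principle and of the dot-pattern figures quoted above, the following minimal Python sketch converts a round-trip time into a range and cross-checks the dot counts. The function and constant names are hypothetical, and the idealised relation d = c·t/2 ignores any calibration or signal processing that the actual sensor may apply.

```python
# Speed of light in vacuum (m/s); exact by SI definition.
SPEED_OF_LIGHT = 299_792_458.0


def dtof_range(round_trip_time_s: float) -> float:
    """Idealised dToF range: the pulse travels to the target and back,
    so the one-way distance is half the round-trip path."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


def round_trip_time(range_m: float) -> float:
    """Inverse relation: time needed for a pulse to cover 2 * range."""
    return 2.0 * range_m / SPEED_OF_LIGHT


# Dot-pattern bookkeeping, using only the figures reported in the text.
SUB_MATRICES = 9
DOTS_PER_SUB_MATRIX = 8 * 8      # 8 dots per row, 8 dots per column
ARRAYS = 4
DOTS_PER_ARRAY = 144

TOTAL_DOTS = SUB_MATRICES * DOTS_PER_SUB_MATRIX

if __name__ == "__main__":
    t = round_trip_time(5.0)     # declared maximum range of 5 m
    print(f"round trip for 5 m: {t * 1e9:.1f} ns")
    print(f"total dots: {TOTAL_DOTS}")
```

Under these assumptions, a target at the declared 5 m range corresponds to a round-trip time of roughly 33 ns, and both descriptions of the pattern (9 sub-matrices of 64 dots, or 4 arrays of 144 dots) give the same total of 576 points.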

2.1. Available Applications for Metric Survey Purposes

Thanks to Apple’s ARKit [44], the depth information obtained from the LiDAR sensor is converted into points. These data are used in combination with the motion tracking sensors and data coming from the camera (as orientation and position) for different uses enabled by different apps, including for measurements (Measure), improvement of camera auto-focus in low-light conditions (Camera), entertainment and gaming (e.g., Hello LiDAR: Pro AR Filters, Playground AR), medical purposes (e.g., Complete Anatomy Platform 2020, Seeing AI), interior design (e.g., Ikea Place), or survey operations (e.g., Magicplan, RoomScan, Canvas: Pocket 3D Room Scanner). In all of these applications, the LiDAR assists in the positioning of AR objects in the framed real scene in AR apps or provides measurements of real objects framed by the RGB camera.

The App Store lists an increasing number of applications exploiting the LiDAR sensor, but few are dedicated to retrieving 3D point clouds (Appendix A, Table A1), which can be exported and further processed for surveying. Especially after the launch of the iPad Pro and the iPhone 12 Pro on the market, several applications have been designed and released to exploit the possibilities offered by the sensors integrated into these mobile devices to measure 3D scenes and provide coloured 3D models, not only relying on the LiDAR sensors embedded in these devices but also exploiting the data derived from other embedded sensors. Some applications are solely based on a photogrammetric approach, using the back-facing camera of the devices (e.g., Scanner 3D Qlone), others use the TrueDepth front-facing camera (e.g., 3D Scan Anything), while a considerable number of applications are based on the LiDAR sensor (e.g., SiteScape—LiDAR 3D Scanner, EveryPoint, Sakura 3D SCAN, Scaniverse—LiDAR 3D Scanner, Modelar—3D LiDAR Scanner, Pix4Dcatch: 3D scanner, Polycam—LiDAR & 3D Scanner, Heges 3D Scanner, Lidar Scanner 3D, 3D Scanner App, RTAB-Map—3D LiDAR Scanner, and Forge—LiDAR 3D Scanner). Therefore, an in-depth search of the App Store was carried out in September 2021 (keeping in mind that the development of these solutions is relatively rapid and constantly evolving), in order to find all the applications using the integrated LiDAR to produce 3D models for metric documentation purposes. These applications were then installed and tested to assess their main characteristics and, finally, a selected number of apps were tested more in-depth. These applications are reported in Appendix A, Table A1. Various parameters were considered in the analysis of the available applications, including:

Licensing (free, open-source, commercial);

Type of acquired data (point cloud, 3D meshes, etc.);

Ease of use;

Level of user’s control on the acquisition settings (number of customizable parameters).

Neither the underlying algorithm adopted by each application nor the different products that can be generated are always reported in its description on the online store or in the related documentation. Moreover, a relevant group of applications claims to exploit the LiDAR sensor to generate 3D models, but the role of the LiDAR sensor is not always clear and specific tests are required to assess how these applications work. All of them seem to exploit the LiDAR sensor to record the geometric data and the RGB camera to colorize the 3D model/point cloud, but some of them rely on a photogrammetric approach (e.g., Pix4Dcatch and Polycam) and, therefore, can also be used on other devices without requiring an Apple device with integrated LiDAR sensor. Regarding the camera position and attitude estimation of the device while reconstructing the 3D model, only RTAB-Map—3D LiDAR Scanner (https://introlab.github.io/rtabmap/, accessed on 14 January 2022) is declared to be based on a simultaneous localization and mapping (SLAM) algorithm. All of the listed applications (except for Heges 3D Scanner) were released starting from 2020, when the iPad Pro and iPhone 12 Pro with integrated LiDAR sensor were released, and are subject to constant updates, indicating the high interest in this new technology. Most of them are free of charge and support the most common interoperable file extensions for 3D models, such as .e57, .ply, .obj, and .las.

After some preliminary tests, we decided to focus on three selected iOS applications to further evaluate the performances of the LiDAR sensors: SiteScape version 1.0.12 by SiteScape Inc. [45], EveryPoint version 2.9 by URC Ventures Inc. [46], and 3D Scanner App version 1.9.5 by Laan Labs [47]. These applications were selected as they meet specific criteria related to CH documentation: the user license is free (CH documentation generally relies on limited funding), the acquisition settings are customisable (giving the user more control), and they generate 3D models as point clouds (the availability of the raw data set, rather than a derivative product, grants wider control on the metric and geometric quality of the final product).

Overview of the Three Selected Applications

The selected iOS applications, which were installed both on the iPad Pro and iPhone 12 Pro, offer various acquisition settings, which can be customized according to the operator’s needs.

- I. SiteScape allows for customisation of the “scan mode” (“max area” or “max detail”), “point density” (“low”, “medium”, or “high”), and “point size” (“low”, “medium”, or “high”) (Figure 1a). The “max area” scanning mode lets the operator acquire longer scans than the “max detail” mode, which, by contrast, limits the scanning time but acquires a point cloud that is eight times denser. The “point density” defines the acquired number of points, where the “medium”/“high” quality corresponds to two/four times, respectively, the number of points obtained in the “low” quality mode. Finally, the “point size” influences only the dimensions of points visible in real-time, not the acquired data set itself.

- II. EveryPoint (Figure 1b) provides three scanning modes: “EveryPoint LiDAR Fusion” mixes ARKit data with data coming from the LiDAR sensor and a photogrammetric algorithm developed by EveryPoint; “ARKit LiDAR Points” uses only data obtained by the LiDAR sensor and ARKit; and “ARKit LiDAR Mesh” generates a 3D mesh surface calculated with ARKit based on the LiDAR data. Regarding the “ARKit LiDAR Points” mode, a bar allows the operator to set the scan density, although no corresponding numerical value is shown; meanwhile, if the option “Use Smoothed Depth Map” is enabled, a smoothing tool is applied to generate a point cloud with a lower level of noise.

- III. 3D Scanner App (Figure 1c) provides two scanning modes, named “low res” and “high res”. The “low res” mode has no parameters to set; it is the simplest way to capture a 3D scene and is suggested for large scans. The “high res” mode allows the operator to produce a better scan, offering four different settings: “max depth” (ranging between 0.3 m and 5.0 m with a step of 0.1 m), “resolution” (ranging from 5 mm to 20 mm with a step of 1 mm), “confidence” (“low”, “medium”, or “high”), and “masking” (“none”, “object”, “person”). The “max depth” setting reduces the 5 m maximum scanning distance between the device and the surveyed object, such that the operator can decrease the distance down to 0.3 m to keep undesired objects out of the scan. The “resolution” parameter defines the density of the point cloud, where 5 mm represents the highest resolution, while 20 mm is the lowest. The “confidence” parameter limits the data acquired by the LiDAR sensor, where the “high” value records only the best quality data but decreases the amount of recorded data; for example, by deleting the points farthest from the device. The “masking” setting defines which elements to measure: if the “none” value is selected, the app acquires the whole scene visible from the LiDAR sensor; with the “person” value, the app tries to identify the shape of a person and acquires only the geometry of the human body, excluding the rest; and, with the “object” value, the app tries to identify an element in the foreground, attempting to avoid recording the background.
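The parameter ranges listed for the “high res” mode of 3D Scanner App can be summarised as a small validated record. The sketch below is purely illustrative: the class name, field names, and default values are hypothetical and do not correspond to any interface exposed by the app; only the admissible ranges and option names are taken from the description above.

```python
from dataclasses import dataclass

CONFIDENCE_LEVELS = ("low", "medium", "high")
MASKING_MODES = ("none", "object", "person")


@dataclass(frozen=True)
class HighResSettings:
    """Hypothetical record of the four 'high res' parameters (not an app API)."""
    max_depth_m: float = 5.0   # 0.3 m to 5.0 m, step 0.1 m (default is arbitrary)
    resolution_mm: int = 5     # 5 mm (finest) to 20 mm (coarsest), step 1 mm
    confidence: str = "high"
    masking: str = "none"

    def __post_init__(self) -> None:
        # Check that max depth lies on the 0.1 m grid between 0.3 m and 5.0 m.
        tenths = round(self.max_depth_m * 10)
        on_grid = abs(self.max_depth_m * 10 - tenths) < 1e-9
        if not (on_grid and 3 <= tenths <= 50):
            raise ValueError("max_depth_m must be 0.3-5.0 m in 0.1 m steps")
        if not (isinstance(self.resolution_mm, int)
                and 5 <= self.resolution_mm <= 20):
            raise ValueError("resolution_mm must be an integer from 5 to 20")
        if self.confidence not in CONFIDENCE_LEVELS:
            raise ValueError(f"confidence must be one of {CONFIDENCE_LEVELS}")
        if self.masking not in MASKING_MODES:
            raise ValueError(f"masking must be one of {MASKING_MODES}")


if __name__ == "__main__":
    close_range = HighResSettings(max_depth_m=0.3, resolution_mm=20,
                                  confidence="low", masking="object")
    print(close_range)
```

Recording the settings in this way makes it easier to document exactly which configuration produced each scan, which matters when several settings per app are compared.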

Regarding the data acquisition phase, SiteScape [48] recommends maintaining a distance between 1 and 4 m from the surveyed object. The EveryPoint app suggests moving freely, avoiding fast movements, and keeping the camera pointed towards the scene to survey. 3D Scanner App [49] reminds the user that quick movements can affect the quality of the result and advises that re-scanning the same portion within one scan cannot improve the outcome (advice that is probably limited to this specific application). No further information about the best path to follow is provided, such as up and down patterns or closed loops around an object. None of the three apps declares a limit on the time available to perform a scan. The time and the maximum number of points for each scan are related to the scan settings, mainly in terms of scan density. SiteScape provides a bar to indicate the available space to conclude a scan; EveryPoint shows the number of points acquired during the acquisition, without stopping the scan at a certain point; and 3D Scanner App does not provide any information about limits during acquisition. After examining the available settings, the iOS app settings tested in the present research are reported in Table 1.

2.2. Experimental Setup for Sensor Performance Evaluations

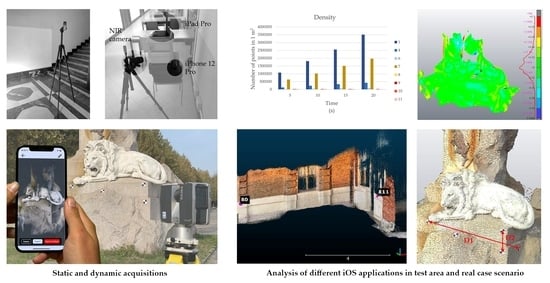

The work presented in this contribution is mainly divided into two parts: An assessment of the sensor characteristics and performances, and a second part dedicated to using Apple LiDAR in real-case scenarios of built and cultural heritage asset surveying.

The first step was achieved by completing an extensive evaluation of the sensor and assessing its behaviour under different operational conditions. Data acquisition tests were carried out by changing different variables that can affect the sensor’s performances: static and dynamic configurations were both considered, as well as both indoor and outdoor scenarios and varying materials and dimensions of the surveyed objects. In fact, besides distance from the object and operator movements, external factors, such as illumination, reflectance, texture, colour, and shape may also influence the scan quality [26].

The first set of tests was also aimed at assessing any possible differences between the performance of the iPad Pro and the iPhone 12 Pro LiDAR, in order to define how to further proceed with additional acquisition in real-case scenarios.

The second step of the work is dedicated to real case applications considering objects with different scales and shapes. As will be detailed in the following sections, the acquisitions in this second phase were carried out using only one device, according to the outcome of the first phase. Three different CH assets were considered in the second phase, as described in Section 2.2.3.

2.2.1. Static Acquisitions

Two tests were proposed to compare the iPad Pro and iPhone 12 Pro LiDAR sensors, in order to verify whether they embed the same sensor. In the first test, the two devices were positioned in front of a vertical surface, one above the other (landscape orientation). Then, in order to acquire the data, the three selected applications were used, changing the parameters offered by each app according to the following iOS app settings: SiteScape1, SiteScape2, SiteScape3, SiteScape4, SiteScape5, SiteScape6, EveryPoint1, EveryPoint2, 3D Scanner App1, 3D Scanner App2, and 3D Scanner App3 (see Table 1). For each iOS app setting, a video was recorded using a modified DSLR CANON EOS SL1, which is capable of capturing the NIR spectrum with a wavelength range between 700 and 1100 nm. The emitted VCSEL matrix of dots visible in each video was analysed by counting the number and pattern of dots. In the second test, the iPhone 12 Pro was mounted on a tripod, and a set of static point clouds at 1, 2, 3, and 4 m was acquired with the following iOS app settings: SiteScape1, SiteScape2, SiteScape3, SiteScape4, SiteScape5, SiteScape6 (see Table 1), as has already been performed with the iPad Pro by Spreafico et al. [30]. This test was performed only with the SiteScape app, as EveryPoint and 3D Scanner App do not allow for acquisition in static mode. Acquisitions were rapid and performed without moving the device from its position. The total number of points composing the matrix and the distance between two adjacent points were evaluated and compared to those recorded by the iPad Pro using the same acquisition set-up.
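One simple way to quantify the distance between adjacent points in a static point cloud is the mean nearest-neighbour distance. The sketch below is only an assumption about how such a figure could be computed; the source does not state which tool or estimator was used, and the function name is hypothetical. It uses a brute-force search, which is adequate for the few hundred points of a single static frame.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def mean_nearest_neighbour_spacing(points: Sequence[Point]) -> float:
    """Average, over all points, of the distance to the closest other point.

    Brute-force O(n^2) search; coordinates are assumed to be in metres.
    """
    if len(points) < 2:
        raise ValueError("at least two points are required")
    total = 0.0
    for i, p in enumerate(points):
        total += min(math.dist(p, q) for j, q in enumerate(points) if j != i)
    return total / len(points)


if __name__ == "__main__":
    # Synthetic 3 x 3 grid with 5 cm spacing on a wall 1 m from the device.
    grid = [(ix * 0.05, iy * 0.05, 1.0) for ix in range(3) for iy in range(3)]
    print(f"mean spacing: {mean_nearest_neighbour_spacing(grid):.3f} m")
```

Applying the same function to the clouds acquired at 1, 2, 3, and 4 m with each device would give directly comparable spacing values.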

2.2.2. Dynamic Acquisition: Performance on Sample Areas

For dynamic acquisitions, only the iPhone 12 Pro was tested, using SiteScape, EveryPoint, and 3D Scanner App with the following settings: SiteScape1, SiteScape4, SiteScape6, EveryPoint1, EveryPoint2, 3D Scanner App1, 3D Scanner App2, and 3D Scanner App3 (see Table 1). For the SiteScape app, only three settings were considered: one with the highest resolution (SiteScape1), one with medium resolution (SiteScape4), and one with the lowest resolution (SiteScape6). Three different tests were performed to compare the three apps, with the aim of assessing the influence of overlapping acquisitions on the same part of the acquired object, the influence of sunlight, and the impact of different materials. For the first indoor test, the operator held the device, moving it slowly from one side to another at a distance of 1 m from a vertical white plaster wall with four coded artificial markers. Two acquisition strategies were recorded for each of the eight iOS app settings, one consisting of a single swipe (5 s) and one with overlapped swipes (10, 15, and 20 s), for a total of 32 acquisitions.
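The total of 32 acquisitions in the first indoor test is consistent with combining the eight app settings with the four swipe durations. The following sketch enumerates that plan; the variable names are hypothetical, and the assumption that every setting was paired with every duration is inferred from the counts rather than stated explicitly in the text.

```python
from itertools import product

APP_SETTINGS = [
    "SiteScape1", "SiteScape4", "SiteScape6",
    "EveryPoint1", "EveryPoint2",
    "3D Scanner App1", "3D Scanner App2", "3D Scanner App3",
]
DURATIONS_S = [5, 10, 15, 20]


def strategy(duration_s: int) -> str:
    """A 5 s acquisition is a single swipe; longer ones overlap swipes."""
    return "single swipe" if duration_s == 5 else "overlapped swipes"


# One entry per (setting, duration) pair, assuming a full factorial design.
PLAN = [(setting, duration, strategy(duration))
        for setting, duration in product(APP_SETTINGS, DURATIONS_S)]

if __name__ == "__main__":
    for setting, duration, kind in PLAN:
        print(f"{setting:16s} {duration:2d} s  {kind}")
    print(f"total acquisitions: {len(PLAN)}")
```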

The second test considered the same portion of an outdoor pink plaster wall, surveyed according to the last acquisition scheme described above (overlapped swipes lasting 20 s). A first acquisition was performed with direct sun on the wall, and a second was conducted under shadow conditions, in order to evaluate the influence of sunlight. The third test was performed to assess the performance of the iPhone LiDAR on eight different materials: white plaster, pink plaster, concrete, raw wood, polished stone, brick, river stone, and black opaque metal (Figure 2). For this test, the operator followed the abovementioned acquisition strategy lasting 20 s.

For the three tests, the scans were co-registered in the same coordinate system using four artificial markers. Then, for each scan, the following parameters were computed on the same area (1 m²) using the CloudCompare software: the total number of points, the number of neighbours in a sphere of 5 cm radius, and the roughness (with a kernel radius of 5 cm).
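The three per-scan parameters can be illustrated with a minimal Python sketch. It is not the CloudCompare implementation: the function name `patch_metrics`, the brute-force neighbour search, and the choice to exclude the query point from its own neighbour count are assumptions made for illustration. Roughness is taken here as the distance from each point to the least-squares plane fitted to the points inside the kernel sphere.

```python
import numpy as np


def patch_metrics(points: np.ndarray, radius: float = 0.05):
    """Return (n_points, neighbour_counts, roughness) for an (N, 3) array in metres.

    Hypothetical helper, not CloudCompare code. The pairwise distance matrix
    is O(N^2) in memory, so this is only suitable for small sample patches.
    """
    n_pts = len(points)
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    within = dist <= radius

    # Neighbours inside the sphere, excluding the query point itself (assumption).
    neighbour_counts = within.sum(axis=1) - 1

    rough = np.full(n_pts, np.nan)
    for i in range(n_pts):
        nbrs = points[within[i]]  # includes the query point
        if len(nbrs) < 3:
            continue  # a plane cannot be fitted; leave NaN
        centroid = nbrs.mean(axis=0)
        # The plane normal is the right singular vector with the smallest singular value.
        _, _, vt = np.linalg.svd(nbrs - centroid, full_matrices=False)
        normal = vt[-1]
        rough[i] = abs(np.dot(points[i] - centroid, normal))
    return n_pts, neighbour_counts, rough


# Synthetic example: a perfectly flat 20 cm x 20 cm patch sampled every 2 cm.
axis = np.arange(11) * 0.02
gx, gy = np.meshgrid(axis, axis)
flat_patch = np.column_stack([gx.ravel(), gy.ravel(), np.zeros(gx.size)])

n_points, counts, roughness = patch_metrics(flat_patch, radius=0.05)
print("points:", n_points)
print("neighbours of the central point:", counts[60])
print("max roughness (m):", np.nanmax(roughness))
```

For real scans with millions of points, a spatial index (for example a k-d tree) would replace the pairwise distance matrix, which grows quadratically with the number of points.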

2.2.3. Selected Case Studies and Experimental Setup

To better evaluate the performance of the Apple LiDAR in the field of heritage documentation, we decided to focus on three different application scales: single medium-size objects (e.g., statues and decorative elements), interior medium-scale assets (e.g., rooms or medium/small spaces), and outdoor medium-scale assets (e.g., façades or portions of buildings).

For each application scale, a specific case study was selected (Figure 3): Case Study A was a statue (single medium-size object), Case Study B was a decorated room (interior medium-scale), and Case Study C was a portion of an exterior façade.

Acquisitions were carried out with SiteScape, EveryPoint, and 3D Scanner App installed on the iPhone device. At the same time, ground reference data were also acquired. TLS scans were recorded to obtain 3D models for validation purposes. For the TLS acquisition, a Faro Focus3D X330 was used, the main specifications of which are reported in Table 2.

Case Studies A and C were each surveyed with a single scan in portrait orientation lasting about 1 min, moving the device horizontally from left to right at a distance of around 1 m from the object. Case Study B was completed in approximately 3 min with a single scan, starting and closing the scan in the same corner of the room and moving in a continuous strip, repeating the following movements in sequence until reaching the closing point: from top to bottom, then moving to the right, from bottom to top, then moving to the right. The distance from the object was around 2 m. For the three case studies, the iOS app settings used were SiteScape1, EveryPoint1, and 3D Scanner App1. Data collected with the iPhone were processed on the mobile device, following a dedicated pipeline for each application, and then exported in an interoperable format (.las) for further processing and analyses. Before data validation, the iPhone LiDAR data were georeferenced in the same reference system as the TLS reference data set. First, a point-based registration was carried out, followed by a refined co-registration using iterative closest point (ICP) registration.
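The two-step registration (point-based, then ICP) can be sketched as follows. This is an illustrative outline under stated assumptions, not the software pipeline used in the study: the function names `rigid_fit` and `icp`, the synthetic data, the 2 mm marker noise, and the brute-force nearest-neighbour search are all hypothetical. The point-based step is implemented here as a least-squares rigid fit (Kabsch algorithm) on corresponding points, and the refinement as a basic point-to-point ICP.

```python
import numpy as np


def rigid_fit(src: np.ndarray, dst: np.ndarray):
    """Least-squares rotation R and translation t such that dst ~ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    h = (src - src_c).T @ (dst - dst_c)           # 3x3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))        # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = dst_c - r @ src_c
    return r, t


def icp(src: np.ndarray, ref: np.ndarray, iterations: int = 20):
    """Point-to-point ICP; returns the accumulated R, t aligning src to ref."""
    r_total, t_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    for _ in range(iterations):
        # Brute-force closest reference point for every source point.
        d2 = ((cur[:, None, :] - ref[None, :, :]) ** 2).sum(axis=2)
        nearest = ref[d2.argmin(axis=1)]
        r, t = rigid_fit(cur, nearest)
        cur = cur @ r.T + t
        r_total, t_total = r @ r_total, r @ t_total + t
    return r_total, t_total


def rms(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.sqrt(((a - b) ** 2).sum(axis=1).mean()))


# Synthetic stand-in for the TLS reference: 4 "markers" plus 200 random points.
rng = np.random.default_rng(0)
markers = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
ref = np.vstack([markers, rng.random((200, 3))])

# Synthetic "iPhone" cloud: the same points in a rotated and shifted frame.
ang = np.deg2rad(10.0)
r_true = np.array([[np.cos(ang), -np.sin(ang), 0.0],
                   [np.sin(ang), np.cos(ang), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 0.1])
src = (ref - t_true) @ r_true

# Step 1: point-based registration on the markers, picked with 2 mm noise.
picked = src[:4] + rng.normal(scale=0.002, size=(4, 3))
r0, t0 = rigid_fit(picked, ref[:4])
coarse = src @ r0.T + t0

# Step 2: ICP refinement against the full reference cloud.
r1, t1 = icp(coarse, ref)
refined = coarse @ r1.T + t1

rms_coarse, rms_icp = rms(coarse, ref), rms(refined, ref)
print(f"RMS after point-based step: {rms_coarse:.6f} m")
print(f"RMS after ICP refinement:   {rms_icp:.6f} m")
```

The coarse step matters because ICP only converges reliably when the initial misalignment is small compared with the point spacing; on real data the two clouds also differ in noise and coverage, so the residual after ICP does not vanish as it does in this idealised example.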

4. Discussion

The research presented in this work evaluated the performance of the LiDAR sensors embedded in the new series of Apple personal devices: iPad Pro and iPhone 12 Pro. The tests aimed to explore the possibilities offered by this solution within the framework of CH documentation, focusing on three different representation scales and determining the precision and accuracy of the derived 3D point clouds (which has only been partially tackled by other research groups in the available scientific literature). The different iOS apps available to exploit the survey capabilities of the LiDAR sensor were analysed, and three were selected for further testing, first in ad hoc tests to evaluate the main characteristics of the sensors and then to analyse their operational workflow in outdoor and indoor environments under different lighting conditions and on different materials.

The analysis outcome suggested that the iPad Pro and iPhone 12 Pro utilize the same LiDAR sensor. Nevertheless, each tested application (SiteScape, EveryPoint, and 3D Scanner App) provided different results, highlighting the crucial role of the software component when exploiting the same hardware setup. Each iOS app allows a few parameters to be set, customizing the scanning point density in the range from 200 (3D Scanner App) to 2,000,000 (SiteScape) points/m² at 1 m distance on the surveyed surface. Generally, SiteScape acquires a higher number of points than 3D Scanner App and EveryPoint; meanwhile, the 3D Scanner App does not increase the number of points if the operator scans the same area more than once, leading to a less noisy point cloud. This is most probably related to more effective point cloud post-processing and filtering, which, nevertheless, can impact the level of detail.
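To give a feel for this density range, the short sketch below converts a surface density into an approximate point spacing. It assumes the points are evenly distributed on a regular square grid, which is an idealisation (real scans are irregular and may include overlapping passes); the function name `mean_spacing_m` is hypothetical.

```python
import math


def mean_spacing_m(density_pts_per_m2: float) -> float:
    """Approximate spacing (m) between points on a regular square grid."""
    return 1.0 / math.sqrt(density_pts_per_m2)


for label, density in [("lowest density (200 points/m2)", 200),
                       ("highest density (2,000,000 points/m2)", 2_000_000)]:
    print(f"{label}: about {mean_spacing_m(density) * 1000:.2f} mm between points")
```

Under this assumption, the two extremes correspond to a spacing of roughly 7 cm and roughly 0.7 mm, respectively, which illustrates how strongly the chosen app and setting condition the achievable level of detail.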

The tests carried out in shaded and sunlight conditions and on different materials suggested that the sensor is not highly influenced by either the illumination or the material.

The tests performed on three real case studies, characterized by different mapping scales, were crucial to highlight the operational limitations of the tested iOS apps coupled with the LiDAR sensor, which depend mainly on the dimensions and characteristics of the object (and, consequently, the overall acquisition time). This analysis highlighted that the quality of the acquired data also depends on the iOS application, mainly in terms of precision and accuracy, geometric reconstruction, maximum acquisition time and, consequently, the maximum size of the acquired object.

It is likely that the algorithms for the device positioning and 3D data acquisition implemented in these applications are different, and that each application exploits and integrates the data available from the other sensors of the smartphone (e.g., gyroscope, camera, GNSS, and so on) in different ways.

The continuous enhancement and updating of the available applications are a clear sign that CV algorithms exploiting SLAM and photogrammetric approaches are still being developed and further adapted. The optimization of these algorithms and their performance is important, considering the limited hardware resources of mobile devices (including battery capacity), which, despite their growth in recent years, still pose limitations when managing big data sets, such as 3D LiDAR point clouds.

All of the performed analyses confirmed the actual potential and limitations of the Apple LiDAR for real 3D surveying applications. At the current state of development, it is possible to state that this sensor (coupled with the available iOS apps, to be appropriately tested and selected according to the user’s requirements) can be successfully used for the documentation of small and medium-size objects (e.g., statues, museum objects, small rooms or small parts of buildings, decorative architectural elements, and so on) with a precision of a few centimetres and a high level of detail. For the surveying of larger buildings or sites, it is necessary to develop iOS apps embedding SLAM algorithms (possibly also exploiting visible cameras), which are capable of estimating the position and attitude of the sensor during the survey with higher accuracy. Nevertheless, in the case of fast documentation approaches at smaller nominal map scales, the current solutions already meet the requirements.

Concerning the acquisition phase, it was noticed that acquiring the same portion of an object multiple times did not necessarily improve the quality of the acquired data (depending on the iOS app), and that a fairly fast acquisition can be performed with the aim of covering the entire object surface, provided that abrupt movements are avoided.

Given the crucial role of the software component, it would be highly beneficial to include an advanced modality enabling skilled users to fine-tune the acquisition parameters, with the aim of optimizing the quantity and quality of the derived 3D models. No customization of the processing phase is currently available, and no technical information is provided regarding the type of automatically applied post-processing algorithms. Finally, it would be helpful to add a re-processing option for the acquired data that, as for most of the available SLAM solutions on the market, allows for the correction of drift error and significant deviations.