2.2. SSIFCM Clustering Algorithm

The traditional FCM does not consider the spatial neighborhood information of pixels in image classification and uses only the gray information of the image to calculate the membership degree. As a result, noise pixels are easily misclassified because of their abnormal feature information, so the method is suitable only for images with little noise. To classify HSRRS images using FCM, it is necessary to consider spatial neighborhood information in the clustering process. To compensate for intensity nonuniformity, which traditional FCM does not model, Ahmed et al. introduced a parameter α into the FCM objective function that allows the label of a pixel to be influenced by the labels of its adjacent pixels. They obtained the bias-corrected fuzzy C-means (BCFCM) algorithm [25] based on deviation correction. The objective function is as follows:

$$J = \sum_{i=1}^{C}\sum_{j=1}^{N} u_{ij}^{m}\,\lVert x_j - v_i \rVert^{2} + \frac{\alpha}{N_R}\sum_{i=1}^{C}\sum_{j=1}^{N} u_{ij}^{m}\sum_{x_r \in N_j}\lVert x_r - v_i \rVert^{2}$$

where $x_j$ is the grayscale value of the $j$-th pixel in the image, $C$ represents the expected number of categories, $N$ is the number of pixels in the given image, $u_{ij}$ is the membership of the $j$-th pixel in the $i$-th category, $v_i$ is the $i$-th clustering center, the parameter $m$ is a weighting exponent on each fuzzy membership that determines the amount of fuzziness of the resulting classification, the parameter $\alpha$ controls the effect of the neighbor term, $N_j$ stands for the set of neighboring pixels in the window around $x_j$, $N_R$ is the cardinality of $N_j$, $x_r$ is a neighboring pixel in the window around pixel $j$, and $\lVert \cdot \rVert$ is a norm metric, which represents the Euclidean distance between a pixel and a clustering center. The first term of $J$ represents the membership-weighted sum of squared Euclidean distances from all pixels to each cluster center. FCM clustering essentially finds the membership matrix and clustering centers for which the objective function $J$ takes its minimum value.
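The BCFCM objective can be evaluated directly for a small grayscale image. The following is a minimal sketch, assuming the standard BCFCM form (membership-weighted squared distance of each pixel to each center, plus α divided by the window size times the same quantity summed over the window neighbors). The function name `bcfcm_objective`, the 3×3 window, and the edge padding at image borders are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def bcfcm_objective(image, U, centers, alpha=0.2, m=2.0):
    """Evaluate a BCFCM-style objective.

    image:   (H, W) grayscale values
    U:       (C, H, W) fuzzy memberships, one map per cluster
    centers: (C,) cluster centers
    Assumption: 3x3 window without the center pixel (8 neighbors), edge padding.
    """
    image = np.asarray(image, dtype=float)
    centers = np.asarray(centers, dtype=float)
    H, W = image.shape
    padded = np.pad(image, 1, mode="edge")

    # Squared distance of every pixel to every center: shape (C, H, W).
    d_self = (image[None] - centers[:, None, None]) ** 2

    # Sum of squared distances of the window neighbors to every center.
    offsets = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)
               if (dy, dx) != (0, 0)]
    d_nbr = np.zeros_like(d_self)
    for dy, dx in offsets:
        shifted = padded[1 + dy:1 + dy + H, 1 + dx:1 + dx + W]
        d_nbr += (shifted[None] - centers[:, None, None]) ** 2

    n_r = len(offsets)          # cardinality of the neighbor set
    um = np.asarray(U, dtype=float) ** m
    return float(np.sum(um * d_self) + alpha / n_r * np.sum(um * d_nbr))
```

The loop over the eight offsets makes the extra cost of the neighbor term visible: every neighbor-to-center distance is computed once per window it appears in.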

BCFCM calculates the membership degree of each sample point to each clustering center by optimizing the objective function, and uses it to determine the class of the sample point. The pixels in the HSRRS image are the sample points of the data set in the BCFCM algorithm, and their characteristics (such as spectral characteristics) are the sample features. The algorithm is not sensitive to noise, but introducing spatial neighborhood information means that the distances between the pixels in each local neighborhood window and the clustering centers are computed repeatedly, which increases the computational complexity.

The introduction of superpixels achieves the preservation of spatial neighborhood information while effectively reducing the amount of computation. To reduce the number of pixels in the image, increase the feature information of the object, and improve the effectiveness of image classification and calculation efficiency, the SLIC algorithm is used to realize the pre-segmentation of the image, and the pixel value in the original image region is replaced by the spectral mean of the superpixel region.

In this paper, the input image is segmented into superpixels, and each superpixel region is regarded as the basic unit of subsequent classification. The statistical method of the regional CIELab color histogram is used to extract the spectral features of the superpixels.
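Replacing pixel values by the spectral mean of their superpixel can be sketched as follows. The sketch assumes that a superpixel label map is already available (for example from a SLIC implementation) and that labels run from 0 to Q−1 with no empty label. The function name `superpixel_means` is hypothetical.

```python
import numpy as np

def superpixel_means(image, labels):
    """Return per-superpixel spectral means and pixel counts.

    image:  (H, W, B) array, e.g. CIELab channels
    labels: (H, W) integer label map with values 0..Q-1
    Assumption: every label in 0..Q-1 occurs at least once.
    """
    flat_labels = np.asarray(labels).ravel()
    pixels = np.asarray(image, dtype=float).reshape(-1, image.shape[-1])
    q = int(flat_labels.max()) + 1

    counts = np.bincount(flat_labels, minlength=q)   # pixels per superpixel
    sums = np.zeros((q, pixels.shape[1]))
    np.add.at(sums, flat_labels, pixels)             # accumulate per label
    means = sums / counts[:, None]
    return means, counts
```

Indexing the result with the label map, `means[labels]`, gives an image in which every pixel carries the mean of its region, so later clustering works on Q feature vectors instead of H×W pixels.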

$Q$ superpixels are obtained in the HSRRS image, and the spectral mean of each superpixel region is calculated to extract spectral features and encode the superpixel regions in the image. Finally, the Euclidean distance between each superpixel and each clustering center is calculated to complete clustering. This distance is measured between a clustering center and the spectral mean of the $g$-th superpixel.

After superpixel segmentation and spectral feature extraction are complete, the uncertainty and the spatial function are added to merge the superpixel segmentation regions based on BCFCM. The algorithm merges adjacent superpixels with similar attributes or features into a region according to the merging criterion. The objective function of the SSIFCM algorithm is:

In this objective function, the spectral mean of the adjacent superpixels around superpixel $g$ is taken over the set of neighboring superpixels that lie in a window around superpixel $g$, and the number of pixels in superpixel $g$ is also taken into account. The objective function further includes the intuitionistic fuzzy entropy (IFE) [26], which expresses the degree of fuzziness in the clusters. The IFE was introduced to maximise the valid data points in the clustering and minimise the entropy of the data matrix. When the uncertainty of the elements in each cluster is known, the corresponding IFE can be calculated. IFE is defined as:

In this definition, the uncertainty represents the degree of hesitancy of superpixel $g$ toward the $i$-th cluster. The objective functions of the SSIFCM algorithm are as follows:

As in traditional FCM, the minimum of the objective function is found by iterative optimization. According to the Lagrange multiplier method, the following equation is constructed:

Taking the partial derivatives of this equation with respect to the superpixel fuzzy membership and the cluster center and setting them to 0 yields the calculation formulas for the superpixel fuzzy membership and the initial cluster center of the SSIFCM:

On the basis of BCFCM, the objective function is modified to cluster the superpixels. At the same time, the spectral characteristics of each superpixel and its neighborhood superpixels are considered, which effectively suppresses the salt-and-pepper phenomenon that arises when BCFCM clusters individual image pixels. However, BCFCM with superpixels still clusters only by calculating the membership degree, so it fails to solve the problems of unsupervised classification of HSRRS images, such as categories that are difficult to control, the lack of a clear definition of classification approaches, and classification uncertainty caused by human factors. In contrast, intuitionistic fuzzy clustering takes into account uncertainty (hesitation), membership, and non-membership, which can describe the fuzzy characteristics of the real world in detail and better handle the uncertainty in unsupervised classification [27,28]. The uncertainty $\pi_{ig}$ is expressed as:

$$\pi_{ig} = 1 - u_{ig} - \nu_{ig} \tag{14}$$

where $u_{ig}$ is the fuzzy membership degree of superpixel $g$ in the $i$-th cluster and $\nu_{ig}$ represents the non-membership degree, indicating the degree to which superpixel $g$ does not belong to the $i$-th cluster. According to Sugeno's intuitionistic fuzzy complement [29], the non-membership degree can be expressed as:

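$$\nu_{ig} = \frac{1 - u_{ig}}{1 + \lambda u_{ig}}$$

where $u_{ig}$ is the fuzzy membership degree of superpixel $g$ in the $i$-th cluster and $\lambda$ is an empirical value (5 in this paper). Increasing $\lambda$ reduces the non-membership degree and therefore enlarges the hesitation degree; conversely, as $\lambda$ approaches 0 the hesitation vanishes and the algorithm approaches traditional FCM. After the initial clustering centers are obtained, the intuitionistic fuzzy set (IFS) is introduced to calculate the intuitionistic fuzzy membership degree $u_{ig}^{*}$ of each superpixel:

$$u_{ig}^{*} = u_{ig} + \pi_{ig} \tag{17}$$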
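The intuitionistic step can be sketched in a few lines. This sketch assumes the Sugeno-type complement ν = (1 − u) / (1 + λu), the hesitation π = 1 − u − ν, and the convention u* = u + π that is common in the intuitionistic FCM literature. The function name `intuitionistic_membership` is hypothetical, and the exact form used by the authors may differ.

```python
import numpy as np

def intuitionistic_membership(u, lam=5.0):
    """Return (u_star, nu, pi) for fuzzy memberships u in [0, 1].

    nu: non-membership from the Sugeno-type complement (assumed form)
    pi: hesitation (uncertainty) degree
    u_star: intuitionistic fuzzy membership, assumed to be u + pi
    """
    u = np.asarray(u, dtype=float)
    nu = (1.0 - u) / (1.0 + lam * u)
    pi = 1.0 - u - nu
    return u + pi, nu, pi
```

With `lam=0` the complement reduces to 1 − u, the hesitation is zero everywhere, and `u_star` equals the plain fuzzy membership.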

Spatial features are essential features of remote sensing images. By measuring the location of a superpixel in the image and its spatial relationship with neighboring superpixels, different ground objects can be distinguished. Spatial features also play an excellent auxiliary role in solving the problems of “same object with different spectra” and “different objects with the same spectrum” in HSRRS image classification. Because adjacent superpixels have similar feature intensities and are likely to belong to the same category, a spatial function $h_{ig}$ is introduced to express the possibility that superpixel $g$ belongs to the $i$-th cluster. When the spatial function value is high, most of the superpixels in the neighborhood of superpixel $g$ belong to the same cluster. While the spatial function strengthens the original membership degree in homogeneous regions, it also reduces the weight of noise pixels through the labels of adjacent pixels. For this purpose, an equal-weight mask centered on superpixel $g$ is used. The spatial function is expressed as:

$$h_{ig} = \sum_{r \in N_g} u_{ir}^{*} \tag{18}$$

where $N_g$ is the set of neighborhood superpixels covered by the mask and $u_{ir}^{*}$ represents the fuzzy membership degree, obtained from Equation (17), of neighborhood superpixel $r$ in the $i$-th cluster.
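A spatial function of this kind can be sketched as a sum of memberships over each superpixel's neighbors. The sketch assumes that neighborhoods are given as a symmetric Q×Q adjacency matrix and that the equal-weight mask includes the center superpixel itself. Both choices, and the name `spatial_function`, are illustrative assumptions.

```python
import numpy as np

def spatial_function(U, adjacency, include_self=True):
    """Sum memberships over the neighborhood of every superpixel.

    U:         (C, Q) memberships of Q superpixels in C clusters
    adjacency: (Q, Q) matrix, 1 where two superpixels are neighbors
    Returns h with shape (C, Q), where h[i, g] sums U[i, r] over neighbors r of g.
    """
    A = np.array(adjacency, dtype=float)      # copy so the input is untouched
    np.fill_diagonal(A, 1.0 if include_self else 0.0)
    return np.asarray(U, dtype=float) @ A
```

A superpixel whose neighbors mostly share its cluster receives a large value for that cluster, while an isolated noisy superpixel receives a small one.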

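The superpixel spatial intuitionistic fuzzy membership is calculated as:

$$u_{ig}^{\prime} = \frac{\left(u_{ig}^{*}\right)^{p} h_{ig}^{q}}{\sum_{k=1}^{C} \left(u_{kg}^{*}\right)^{p} h_{kg}^{q}} \tag{19}$$

where $u_{ig}^{\prime}$ represents the spatial intuitionistic fuzzy membership degree of superpixel $g$ in the $i$-th cluster, and $p$ and $q$ are parameters that set the relative weights of the initial fuzzy membership degree $u_{ig}^{*}$ and the spatial function $h_{ig}$.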
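Combining the initial membership with the spatial function can be sketched as a normalized product of powers. This assumes the usual spatial FCM form, in which the membership raised to p is multiplied by the spatial function raised to q and then normalized over the clusters. The function name `spatial_membership` is hypothetical.

```python
import numpy as np

def spatial_membership(U_star, h, p=1, q=3):
    """Combine memberships and spatial function, normalized per superpixel.

    U_star: (C, Q) initial (intuitionistic) fuzzy memberships
    h:      (C, Q) spatial function values
    Assumption: every column has at least one positive product,
    so the normalization never divides by zero.
    """
    w = np.asarray(U_star, dtype=float) ** p * np.asarray(h, dtype=float) ** q
    return w / w.sum(axis=0, keepdims=True)
```

With p = 1 and q = 3, the values reported in this paper, the spatial term dominates: a superpixel with evenly split memberships is pulled strongly toward the cluster favored by its neighbors.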

The cluster center updating formula is:

where the updated quantity is the $i$-th cluster center after the iteration. The superpixel spatial intuitionistic fuzzy membership matrix is updated in each iteration, and the clustering centers are updated in step with it. The iteration ends when the difference between the membership matrices of two successive updates falls below the set threshold or when the set maximum number of iterations is reached; at that point the clustering centers are taken to have reached their optimal values. The difference of the superpixel spatial intuitionistic fuzzy membership is:

where the difference is taken between the superpixel spatial intuitionistic fuzzy membership matrix from the previous update and the renewed matrix, and $\varepsilon$ is the threshold.
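The stopping test can be sketched as a comparison of two successive membership matrices. The sketch assumes the difference is measured as the largest absolute element-wise change, which is one common choice; the paper's exact norm is not recoverable here. The names `membership_change` and `has_converged` are hypothetical.

```python
import numpy as np

def membership_change(U_prev, U_new):
    """Largest absolute element-wise change between two membership matrices."""
    diff = np.asarray(U_new, dtype=float) - np.asarray(U_prev, dtype=float)
    return float(np.max(np.abs(diff)))

def has_converged(U_prev, U_new, eps=0.05):
    """True when the change is below the threshold eps."""
    return membership_change(U_prev, U_new) < eps
```

In a loop, this check is combined with a cap on the number of iterations so that the procedure always terminates.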

$Z$ is the set of superpixel feature vectors, each superpixel has an associated set of superpixel neighbors, and $C$ represents the number of clusters. The parameter $\alpha$ controls the neighborhood's effect and is set to a predefined value, and the fuzziness of the clusters is controlled by $m$. $\varepsilon$ is the termination error, and max_iter is the maximum number of iterations. $p$ is the relative weight of the initial membership, $q$ is the relative weight of the spatial function, and $\lambda$ is the intuitionistic parameter. The cluster centers are the quantities updated in each iteration. In this research, the values of $\alpha$, $m$, $\varepsilon$, max_iter, $p$, $q$, and $\lambda$ are set to 0.2, 2, 0.05, 100, 1, 3, and 5, respectively.
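The parameter settings can be collected in one place so that an experiment is easy to reproduce. The following is a small sketch. The class name `SSIFCMParams` and the field names are hypothetical; the default values are the ones reported in this section, and the number of clusters has no default because it depends on the image.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SSIFCMParams:
    """Container for the SSIFCM settings (field names are illustrative)."""
    n_clusters: int          # C, chosen per image
    alpha: float = 0.2       # weight of the neighborhood term
    m: float = 2.0           # fuzziness exponent
    eps: float = 0.05        # termination error
    max_iter: int = 100      # maximum number of iterations
    p: float = 1.0           # relative weight of the initial membership
    q: float = 3.0           # relative weight of the spatial function
    lam: float = 5.0         # intuitionistic parameter (lambda)
```

For example, `SSIFCMParams(n_clusters=4)` keeps all reported defaults, where the value 4 is only a placeholder.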

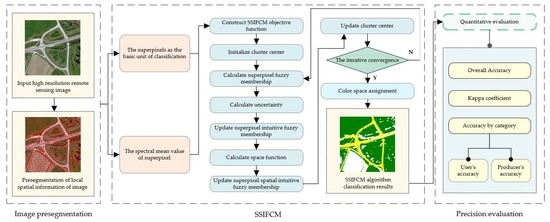

Based on the above process, the proposed algorithm can be summarized as follows:

Step 1: input HSRRS image;

Step 2: convert RGB to CIELab;

Step 3: compute the superpixels with SLIC using Equations (1)–(3). The maximum color distance is set to a fixed value, and the number of segments k is an empirical value obtained from a large number of experiments;

Step 4: extract the spectral features of superpixels using CIELab color histogram;

Step 5: the unsupervised classification uses SSIFCM as in Algorithm 1.

| Algorithm 1. The proposed superpixel spatial intuitionistic fuzzy C-means (SSIFCM). |
| --- |
| Input: Z, the superpixel neighbor sets, C, α, m, ε, max_iter, p, q, and λ |
| Output: the superpixel spatial intuitionistic fuzzy membership matrix and the cluster centers |
| 1. Randomly initialize the cluster centers |
| 2. for r = 0, 1, …, max_iter do |
| 3. Calculate the superpixel fuzzy membership by Equation (12) |
| 4. Calculate the uncertainty by Equation (14) |
| 5. Update the superpixel intuitionistic fuzzy membership by Equation (17) |
| 6. Calculate the spatial function by Equation (18) |
| 7. Update the superpixel spatial intuitionistic fuzzy membership by Equation (19) |
| 8. Update the cluster centers by Equation (20) |
| 9. if the membership difference is below ε then |
| 10. break |
| 11. end if |
| 12. end for |
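The control flow of Algorithm 1 can be sketched end to end as follows. This is a simplified illustration, not the authors' implementation: the membership in step 3 and the center update in step 8 use plain FCM formulas as stand-ins, because the SSIFCM-specific forms with the neighborhood term α and the entropy term are not reproduced here. The Sugeno-type complement, the convention u* = u + π, the adjacency-matrix neighborhoods, the max-difference stopping test, and the name `ssifcm_sketch` are likewise assumptions.

```python
import numpy as np

def ssifcm_sketch(Z, adjacency, n_clusters, m=2.0, eps=0.05, max_iter=100,
                  p=1, q=3, lam=5.0, seed=0):
    """Simplified loop in the spirit of Algorithm 1 (see assumptions above).

    Z:         (Q, B) superpixel feature vectors
    adjacency: (Q, Q) neighbor matrix
    Returns (U_sp, V): spatial intuitionistic memberships (C, Q), centers (C, B).
    """
    rng = np.random.default_rng(seed)
    Z = np.asarray(Z, dtype=float)
    A = np.array(adjacency, dtype=float)
    np.fill_diagonal(A, 1.0)                  # mask includes the center

    # Step 1: random initial centers drawn from the data.
    V = Z[rng.choice(len(Z), size=n_clusters, replace=False)]
    U_prev = None
    for _ in range(max_iter):
        # Step 3 (stand-in): plain FCM membership from squared distances.
        d2 = ((Z[None, :, :] - V[:, None, :]) ** 2).sum(axis=2) + 1e-12
        inv = d2 ** (-1.0 / (m - 1.0))
        U = inv / inv.sum(axis=0, keepdims=True)

        # Steps 4-5: hesitation and intuitionistic membership.
        nu = (1.0 - U) / (1.0 + lam * U)
        U_star = U + (1.0 - U - nu)

        # Step 6: spatial function over neighboring superpixels.
        h = U_star @ A

        # Step 7: spatial intuitionistic membership.
        w = U_star ** p * h ** q
        U_sp = w / w.sum(axis=0, keepdims=True)

        # Step 8 (stand-in): membership-weighted mean as the new center.
        um = U_sp ** m
        V = (um @ Z) / um.sum(axis=1, keepdims=True)

        # Steps 9-10: stop when memberships barely change.
        if U_prev is not None and np.max(np.abs(U_sp - U_prev)) < eps:
            break
        U_prev = U_sp
    return U_sp, V
```

A hard label for each superpixel can then be read off with `U_sp.argmax(axis=0)`.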

The overall technical flow of the proposed algorithm is shown in Figure 1.