1. Introduction

The remote sensing field has seen rapid development in recent years, which has resulted in a deluge of overhead high-resolution imagery from airborne and spaceborne remote sensing platforms. Although this huge data provides plentiful new sources, it far exceeds the capacity of humans to visually analyze and thus demands highly automatic methods. This data volume has also seen continual enhancements in temporal and spatial resolution of remote sensing information [

1,

2,

3,

4,

5,

6]. These circumstances necessitate the development of new human–machine teaming approaches for visual imagery analysis workflows, specifically techniques that leverage state-of-the-art computer vision and machine learning. This increasing amount of geospatial big data are utilized in a key task in the earth observation, change detection (CD), which is one of the most active topics in the remote sensing area due to the persistent occurrence of catastrophes such as hurricanes, earthquakes and floods [

6,

7,

8,

9], in addition to the interactivity of human with natural phenomena [

10]. The objective of CD is finding the change in the land cover utilizing co-located multitemporal remote sensed images [

11]. It is a key technology that has been investigated in many fields such as disaster management, deforestation, and urbanization [

7,

12].

More abundant details of geographical information can be acquired from the high spatial resolution (HSR) images that can be obtained from various sources for example IKONOS, QuickBird, and WorldView [

13]. Thus, high resolution images are in demand or imperative for various remote sensing fields [

14]. However, the improvements of spatial resolution conveys challenges to automated change detection processes [

15]. Generally, change detection methods fall under three categories, traditional pixel-based change detection (PBCD), object-based change detection (OBCD), and hybrid change detection (HCD) [

15].

Pixel based methods [

16,

17,

18] compare the multitemporal images pixel-by-pixel. There are many traditional pixel-based algorithms, such as image differencing, image rationing, principal component analysis (PCA), and change vector analysis (CVA). Using isolated pixels as the basic units in the pixel-based methods results in a over-sensitive change detection [

13]. Additionally, they can be affected by noise, in particular, when handling the rich information content of high resolution data [

19]. For the object-based methods [

20,

21,

22,

23,

24], first a segmentation procedure is applied to extract the features of ground objects. Next, using an object-based comparison process the change map is obtained. The final result of OBCD is usually sensitive to the segmentation process [

13]. Existing HCD techniques have proven suboptimal as well [

25,

26], in so much as the classical HCD methods belong to intuitive decision-level fusion schemes of PBCD and OBCD [

13].

In addition to the above three categories the change detection can be gathered into two categories from the view of the ultimate aim, binary CD methods and multitype CD methods. While binary CD emphases highlighting the change, multitype CD objectives is to differentiates various types of changes [

27]. In this paper, we propose a new approach for change detection, which is a chip-based approach facilitating multitype CD. This chip-based approach enables analysis at a variety of geospatail levels-of-detail; from chip-level change analysis up through larger spatial region analysis.

Feature learning is an efficient approach to represent imagery data [

28]. The literature shows that the deep learning based feature extraction demonstrates superior generalization properties that allow us to take advantage of the abilities of the transfer learning. In this scenario, the knowledge that is gained from a deep network that is pretrained on one domain can be transferred to other domains. Those features that are extracted from the deep networks, a.k.a. deep visual features, are proven to have more robust domain invariance ability in the remote sensing field than the features extracted from shallow networks [

29,

30,

31,

32,

33]. Additionally, deep features have the advantage of capturing the spatial context information efficiently [

29,

30,

31,

32,

33].

In the architecture of CNNs, convolutional layers and pooling layers are stacked interchangeably for feature extraction. The CNN-based deep model structure makes it significantly appropriate for feature learning of images, which is capable of overcoming the deformation of spatial features caused by rotation and translation [

34]. Several researchers have utilized convolutional neural networks (CNN) to extract features from satellite data for a variety of use cases. Li et al. [

35] proposed a deep CNN-based method for enhancing 30-m resolution land cover mapping utilizing multi-source remote sensing image. The CNN-based classifier is employed to extract high resolution features from Google Earth imagery. Zhong et al. [

36] built deep neural networks with numerous architectures to classify summer crops utilizing EVI time series.

Zhang et al. [

37] combined the local information extracted by histogram of oriented gradients (HOG) along with the CNN features representing contextual information. They utilized a CNN model trained on ImageNet (ILSVRC2012) as the contextual feature extractor. In [

38], the authors trained a CNN to label topographic features, such as roads, buildings, etc. In [

39], features and feature combinations extracted from many deep convolutional neural network (DCNN) architectures were evaluated in an unsupervised maner, then leveraged for classification of the UC Merced benchmark dataset. The authors found that both ResNet and VGG have design parameters that work well with spatial data.

In real-world application, data usually contain complex many-to-many relationships [

40]. To detect the multi-type change, the data should be clustered first. Various image clustering algorithms have been utilized as an unsupervised pattern recognition technique for different tasks involving HR-RSI. Fuzzy c-means (FCM) is one of the soft computing techniques in which each data point has a degree of membership in each cluster [

41]. That makes it a well suited approach for HR-RSI because image chips may have complex composition, such as trees, grass, roadways, buildings, water bodies, and more.

In the age of Big Data, it is no longer necessary to constrain broad area analysis to coarse and low-resolution geospatial data. One of our key contributions is to highlight how the details and features in the visual present within high-resolution imagery can be utilized to tease out the differences between various clusters. In lower resolution imagery, small individualized details are barely discernible as visual features. Importantly, automated methods that can detect these subtle changes can capture importing aspects related to change, such as subtle tree removal operations that are not clear-cut lumber harvests.

The main contributions of this paper can be summarized as the following:

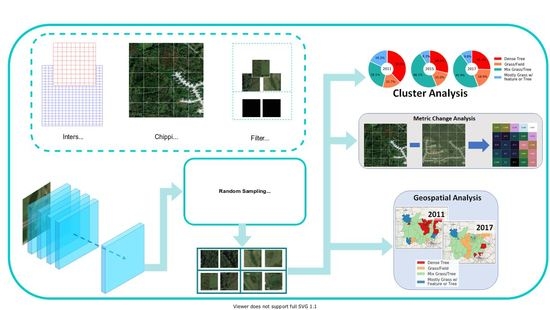

A chip-based approach has been proposed for multi-temporal change detection. The beauty of the proposed approach is that, unlike state of the art methods, it supports detecting the change in a large scale area with massive amount of HR remote sensed imagery data, while also facilitating higher resolution level-of-detail analysis. In other words, our proposed method gives an insight into the distribution of each cluster within the study area as a single unit, as sub-areas represented by the census blocks, and at the local chip level. Additionally, it overcomes the challenges of unaligned and multi-resolution corresponding imagery.

Generation of orthogonal deep visual features utilizing a DCNN along with the transfer learning techniques to extract the deep features for change detection analysis, considering the deep features represent the remote sensing data better than the traditional features extraction methods. Additionally, we project the DCNN extracted features into an orthogonal space preserving most of the information for enhanced unsupervised machine learning utilization. This solves a key challenge in the real world applications, as sufficient training data is not always available.

Soft clustering based on fuzzy c-means algorithm has been utilized to aggregate small image patches of similar visual composition. This is because the surface of the Earth is not composed of crisp distinctions of land cover in the high-resolution domain. Instead, any local image patch could be a mixture of various land cover types. Fuzzy c-means can capture this characteristic efficiently by the means of the soft partition.

Three analysis methodologies are introduced: cluster analysis, geospatial analysis, and metric change analysis. To successfully analyze the varied resolution images, that may be misaligned, change in the sub datasets is computed at the chip level using a neighborhood approach.

The remainder of this paper is organized as follows.

Section 2 describes the datasets and the preprocessing stages.

Section 3 presents our approach for extracting visual features from deep neural models that feed into a dimensionality reduction and soft partitioning.

Section 4 details our analysis methodologies of the proposed approach.

Section 5 outlines the experimental setup and presents the results.

Section 6 interprets and describes our findings. Finally,

Section 7 concludes the research and highlights future directions.

2. Datasets and Preprocessing

In this section, we describe two datasets and our preprocessing steps. The general workflow of this research is shown in

Figure 1, and

Table 1 lists the symbols used in this research.

2.1. Datasets Description

In this research, two main datasets were utilized. First, a publicly available benchmark dataset is used to train the deep neural feature extractor. The second dataset is our study area encompassing Columbia, MI, USA and the surrounding areas, including three different years.

2.1.1. RSI-CB256 Benchmark Dataset

The Large Scale Remote Sensing Image Classification Benchmark via Crowdsource Data (RSI-CB) [

42] is used in this research to train the deep neural feature extractor. Specifically, the

pixel sub dataset (RSI-CB256). According to the authors, it is 0.22–3 m GSD, which makes it higher spatial resolution than many other existing datasets. Higher spatial resolution means higher information details.

The authors collected

points of interest (POI) from Open Street Map (OSM), which directed the imagery scraping from Google Earth and Bing Maps. In total, there are 24,000 images across 6 categories with 35 subclasses including town, stream, storage_room, sparse_forest, snow_mountain, shrubwood, sea, sapling, sandbeach, river_protection_forest, river, residents, pipeline, parkinglot, mountain, marina, mangrove, lakeshore, hirst, highway, green_farmland, forest, dry_farm, desert, dam, crossroadsbridge, container, coastline, city_building, bridge, bare_land, city_avenue, artificial_grassland, airport_runway, airplane. The major countries where the POI were chosen from include USA, China, and UK [

42]. The RSI-CB dataset is an imbalanced dataset; some classes consists of more than 1300 image while other less than 200 images. Thus, data augmentation was a vital for our research to get a better performance.

2.1.2. CoMo Dataset

The second dataset consists of three subsets of raster data. Each subset corresponding to a different year.

Table 2 summarizes the properties of this dataset. The datasets have been collected over the following dates 5 April 2011, 10 October 2015, and 4 May 2017.

Of note, the tiles at the edges of the scanned area have different spatial extents than the rest of the tiles. This is true for the three sub-datasets. Additionally, the 2011 dataset is neither aligned nor has the same resolution as the other two datasets.

For the sake of this research, each tile is divided into 256 × 256 pixel chips to be consistent with the images of RSI-CB256 dataset. Each regular tile yields 4096 chips, which is a significant amount of data; more than 108 billion pixels in 2011 dataset and more than 24 billion pixels in each of 2015 and 2017 datasets. Handling such data at pixel-level is extremely computationally expensive, without a commensurate value in the resulting pixel-wise output. In this way, the chip-level approach, in the combination with DCNN features extraction, represents a suitably efficient data analysis technique that balances resolution of analysis (level-of-detail) with computational costs.

2.2. Region Imagery Preprocessing

To detect the change in the area of the interest, the three temporal subsets of the CoMo data should cover the same area. However, as can be perceived from the range of the geographic coordinates, the year 2011 covers different area than 2015 and 2017. This is a common problem with large-scale remote sensing datasets. Specifically, datasets that are collected over various years. The change analysis is limited to only the overlapping sub-area among the analyzed datasets. Using the accompanying geospatial polygons from the data provider, we are able to intersect the overlapping area and trim each year of the CoMo dataset to an appropriate set of scene tiles. This intersected geometry represents the final study area.

For the purpose of this research, each tile is partitioned into several chips of equal size, chosen to be 256 × 256 pixels for consistency with the RSI-CB256. Thus, each regular tile yields about 4096 chips. It is worth noting that the tiles at the edge of the scanned area are not always the same size of the regular tiles based on the source imagery capture; and therefore may yield a lower number of chips.

In this research we are interested in the center location of each chip. Thus, we defined Equation (1a,b) to obtain the center of each chip from its upper left corner. This equation assumes the scene, tiles, and chips are north-up.

As mentioned, the size of the tiles at the edges are not the same size of the regular tiles, therefore, some chips at the very edges have partial information. These chips are not useful and could negatively affect the subsequent processing and are therefore filtered out.

Algorithm 1 shows the pseudo code that checks if the chip is NoData chip or not. If it is NoData chip we will exclude it from further processing. First, the size of the chip is compared against a predefined threshold. If it is less than the threshold, it could be a useless chip that needs to be excluded. To make sure this chip does not contain useful information, it is compared at the pixel level to check whether most of the pixels in the chips are NoData or not. Comparing the size of the file first helps with expediting the process as comparing all the chips in such big data is computationally expensive.

Table 3 lists the details of the intersected area after the preprocessing. The 2011 datasets is slightly different than the other two datasets even after the processing because we are targeting the chip level and these data are not aligned with other two datasets. However, this difference is a result of spatial intersection of the sub-scene tiles with respect to the geospatial extent of the study area, where the tiles of the 2011 scene extend from the polygon different from the 2015 and 2017 scenes. In the case of all three subsets of the CoMo data, the chips are further refined to both remove chips out of the extent as well as remove chips with significant

NoData values. Furthermore, notice that the number of the chips in the intersected 2011 dataset is higher than the other two datasets due to the higher pixel resolution of this dataset. These characteristics highlight two particular challenges of the CoMo datasets—and many other large-scale multi-temporal datasets—that our methods detailed in

Section 4 sufficiently handle. First, the data is of varied resolution and it is not necessary to resample the data to uniform spatial pixel resolution. Secondly, our methods will accommodate data that is not perfectly aligned, which is often a necessary condition of PBCD and even many OBCD.

| Algorithm 1: Filtering out the NoData Chips |

![Remotesensing 13 01661 i001]() |

3. Deep Visual Feature Extraction and Clustering

This section describes the deep feature extraction, transformation to orthogonal visual features for reduced dimension, and clustering. Algorithm 2 details the process, along with the subsequent

Section 3.2 and

Section 3.3.

3.1. Deep Neural Feature Extraction Training

As mentioned previously, deep learning has proven its superiority in extracting the features over hand-crafted methods. In this research, the ResNet50 [

43] DCNN model is trained with the RSI-CB256 dataset after transfer learning from the ImageNet dataset [

44]. The most remarkable characteristic of ResNet is depth [

45].

The RSI-CB256 dataset is an imbalanced dataset; some classes consist of more than 1300 images while others less than 200 images. The data are divided into three subsets: training, validation, and testing leads to low performance. For this reason, preprocessing is a mandatory requirement. First, the data have been shuffled to avert any bias. Next, to avoid overfitting, the oversampling has been done after dividing the data into training, validation, and testing. This will guarantee there is no duplication in the data across the three subsets. The image rotation is used in oversampling the data. The images have been rotated in various angles including the following ranges [0–7, 83–97, 173–187, 263–277]. These ranges have been chosen to avoid losing the information from the image in case it rotated with large angles. In each subset, the classes have been oversampled to be equal to the largest class in the subset. Furthermore, the training dataset has been horizontally flipped, zoomed, cropped to avoid overfitting.

During training with RSI-CB256, the last convolutional layer is fine-tuned along with the training of the new classification phase to ensure the resulting features are adapted from the ground photo to the overhead imagery domain. The resulting DCNN generates 2048 deep visual features that are then assembled into 800 higher-level features (i.e., hidden layer) within the multilayer perceptron (MLP) classification phase before the final

softmax node classifier output (see [

46] for further details).

To ensure the proper training of the DCNN, the RSI-CB256 was organized into a 60/20/20 percent training, validation, and blind testing split. After training, the validation and blind test sets had classification performance of

98.72% and

99.31%, respectively.

Figure 2 shows the validation and testing Confusion matrices for the RSI-CB dataset. As the figure reveals, all the matrices contain the data around the diagonal line, which confirms the classification performance result. The activations of the 800 node hidden layer are the extracted deep visual features in this research (Algorithm 2, line 3 and 14).

3.2. Deep Orthogonal Visual Features

With 800 deep visual features, there is the potential for much redundant information, and therefore we utilize PCA to reduce the feature space (and the associated memory and computation requirements). We leverage PCA to minimize information loss during feature reduction (Algorithm 2, lines 6–7 and 24–25). PCA reduces numerical redundancy by projecting the original feature vector to a lower subspace, minimizing information loss [

47]. Additionally, in the context of clustering driven by high dimensional dissimilarity metrics, reducing the effects related to the

curse of dimensionality can improve the derived clusters. In this research, we reduce the deep visual features to 108, 110, and 102 dimensions for years 2011, 2015, and 2017, respectively; in each case preserving 99% of the data variances. These

deep orthogonal visual features are then suitable for cluster analysis and other unsupervised machine learning methods that leverage metric spaces. We randomly sample the study area (30%) to derive the principle components and the reprojection eigenvectors, which is then applied to the entire study area.

| Algorithm 2: Feature Extraction and Clustering |

![Remotesensing 13 01661 i002]() |

3.3. Fuzzy Land Cover Clustering

As with any large swath of remote sensing imagery, our study area is inherently non-discrete. This is especially true of high-resolution imagery, where small objects (visual features) are discernible and the composition of a collection of visual features within a local spatial extent drive the assessment of that localized area. These visual features may include trees, areas of grass, buildings (or other impervious surfaces), water, and more. In this research, the computational locality is at the chip-level, as defined in

Section 2.2. To facilitate large-scale analysis when the potential variability of the chip content is so diverse, we leverage soft-computing techniques. Specifically, we employ the fuzzy c-means (FCM) method, Ref. [

48], to generate a soft partition of data into groups of similar visual content.

Within the FCM model, each data point (chip) is measured for similarity to cluster centroids to generate a cluster membership in

[

49,

50]. Each chip’s deep orthogonal visual features are measured against the center of each cluster using Euclidean distance to form a partition matrix,

u. The FCM algorithm oscillates between computing weighted mean positions (center of mass) for each cluster and updating cluster memberships (the fuzzy partition matrix). Similar to the development of the deep orthogonal visual features, we leverage a 30% sample to learn the cluster centers in the deep orthogonal visual feature space. The final cluster centers are then used to compute a final

u. The FCM algorithm is Algorithm 2, line 8 that trains the

FuzzModel. Algorithm 2 line 26 uses this model to label all chips into the learned clusters.

The fuzzy partition matrix is utilized to create soft labels of the study area chips. Specifically, each chip’s strongest cluster membership is extracted as both a label and a membership degree; thereby making the information better aligned for geospatial visualization and human interpretation.

The fuzzy partition matrix

u is reduced to firm labels with membership via Equation (

2).

where

is the individual membership of chip

j in cluster

i,

is the number of clusters, and

N is the number of data points. The resulting tuple vector encodes the cluster assignment for each chip while preserving its membership information.

The unsupervised machine learning methods, such as fuzzy clustering, are usually very data dependent. Due to variability encountered in seasons, atmosphere, and sun/sensor geometry, we cannot expect unsupervised methods to generate data labels (i.e., cluster labels) that are coherent across scenes. For this reason, we aggregate the clusters into key semantic classes that dominate our study area: dense tree, grass field, mixed grass and tree, and mostly grass with tree or feature. This semantic grouping allows the mapping of any scene into numerically comparable chips. The grouping can be determined from any clustering with greater than the number of semantic categories. After mapping each group of clusters to its target semantic category, the partition probabilities of each group members have been added together to produce the final partition probability of the target semantic category.

6. Discussion

In the real world, the study area are not always have predefined classes. Specially when the area has a verity of groups that change over time. Thus, in this research, we utilized clustering methods unlike many researches [

57,

58,

59,

60,

61], who used classification methods to detect the change. Clustering method groups the images according to similarities between the features of these images and at the same time it differentiates them from other groups. In addition to that we have extracted deep features of the images using deep CNN with transfer learning technique to generate a rich feature maps instead of the traditional methods. The first convolution layers of the deep CNN capture the low detail features while the last layers generate high level features. The deep features has proven its superiority in many fields such as infrared images [

62], thyroid histopathology images [

63], and fluoroscopic images [

64].

It can be noticed from

Figure 4 that the dominated cluster in 2011 is the dense tree. While in 2015 and 2017 the dominated cluster became mix grass/tree. This is true for all other blocks as well. This indicates that some trees have been replaced with grass. This kind of transformation could happen either naturally, as a result of human activities, or a combination of them. Additionally, if we examine some blocks closely such as blocks

B1–B7 in the year 2011, it can be noticed that not all the clusters are represented in those blocks (represented as zero value in the respective figure cells). In some other cases, the whole block consists of only a single cluster, such as blocks

B1 from the year 2015 and

B85 from the year 2017. This can happen when the blocks are very small or in remote areas.

It can be concluded from comparing the geomaps (

Figure 7) that the general trend is decreasing in the dense tree cluster (red color) while increasing the Mix Grass/Tree cluster (green color). Additionally, the Grass/Field cluster (orange color) started to replace the Mix Grass/Tree cluster in the middle of the study area in the year 2017.

In

Figure 8, it can be noticed that the area of longitude range (−92.4, −92.1) and latitude of range (38.75, 39.0) has the largest size of change during the first time period. This is can be observed from the high red peek in the figure. Note that Columbia city lies within this range of coordinates, which explains the highest change compared to the other parts of study area. The terrain of the land cover is more clear in the 2D versions of the norm2. For instance, the Missouri River can be seen in the diagonal of the area. The river is visually discernible because the magnitude of the change for the river is consistent and yet different from the surrounding area. Note that the yellow area corresponds to the peek red area in the 3D version.

Comparing the results obtained using norm2 with those obtained from JSD metric, JSD appears to present more dynamic values/scale for analysis. Additionally, the dark color in the 2D version of JSD (

Figure 9d) goes back to the low change that happened during the time period 2015–2017, which can be confirmed from the 3D version as well. Therefore, we can see that the choice of change metric here (norm2 or JSD) can be made based on the degree of sensitivity desired in the analysis.

Comparing the results of the M-N approach, with the results of 1-N approach, the general views of the changes look similar. However, the range of the values are smaller for the M-N approach, which is likely due to the neighborhood averaging as a normalizing effect.

Recall the chosen distance comprises the neighbors that are defined based on the Moore neighborhood. This is true for 2015 and 2017 datasets. However, for the 2011 dataset the same value for the distance is used to ensure the consistency. As noted previously, the 2011 dataset is neither aligned with nor has the same resolutions as the other two datasets (2015 and 2017). However, obtaining the neighbors within the same distance as 2015 and 2017 is satisfactory to get the averaged values of the corresponding area and detect the change. This is one of the key techniques that facilitates the analysis without normalizing the imagery pixel resolutions across time periods and also negates the need to achieve pixel level alignment compared with pixel-based method such as [

65,

66,

67].

Our proposed method can detect the change in a large-scale area represented by a massive amount of HR remote sensed imagery data while also facilitating higher resolution level-of detail analysis. While classical HCD techniques have shown to be suboptimal, Refs. [

25,

26], as they belong to intuitive decision-level fusion schemes PBCD and OBCD. For example, the final result of OBCD is usually sensitive to the segmentation process, while the pixel-based methods result in an over-sensitive change detection as they use isolated pixels as the basic units [

13]. Additionally, they can be affected by noise when handling the rich information content of high-resolution data [

19].

It can be seen from

Figure 12 that new roads and buildings (residential area) are constructed and many trees are removed. The measurements of norm2 and JSD metrics reflect this change well. It can be noticed that the area where the new buildings are constructed has the highest values of measurements in both metrics compared to the other chips of the sampled area. This demonstrates the efficiency of our proposed method in capturing the changes in the study area; even down to the chip level-of-detail. It is worth mentioning that the two metrics norm2 and JSD coincide on the degree of the change but utilizing different scales. This demonstrates that relatively small, localized change areas can be measured and prioritized for human analytical review irrespective of the size of the large-scale area that is processed.

7. Conclusions

To summarize this research, a novel approach for detecting the change in a large-scale HR remote sensed imagery dataset based on chip-level measurements has been proposed. The orthogonal deep visual features technique have been utilized to extract the features from the datasets as a primarily step before clustering the data. Next, due to the inherent hybrid natural of remote sensing data, fuzzy c-means clustering has been utilized to cluster the RS data to better accommodate the non-discrete nature of large RS scenes.

After clustering the data, several analysis methodologies, (cluster, geospatial, and change metric analysis), have been applied to explore the change during the specified time periods. Additionally, a neighborhood method has been proposed to compare unaligned, multi-resolution datasets. The results obtained are based on various metrics such as norm2 and Jensen–Shannon divergence, in addition to the visual geo-maps and the percentage of the change. The area is examined at multiple levels-of-detail, including one whole unit, subunits represented by the census blocks, and at the local chip level. In each level-of-detail, it has been shown that the proposed method was able to detect the change efficiently.

There are numerous opportunities for future work, ranging from alternative feature extraction techniques to further development of change analysis. Further research will also explore reducing the size of the image chips to facilitate even higher resolution levels-of-detail, approaching the object level. Additional areas of interest for change analysis can be explored, such as from different world regions that have significantly different composition, i.e., not primarily trees and grass lands.