1. Introduction

Forest classification at the tree species level is important for the management and sustainable development of forest resources [1]. Mapping the distribution of forest resources matters because it is closely associated with qualitative monitoring indicators such as spatial location, as well as with many quantitative indicators such as forest stock, forest carbon storage, and biodiversity [2]. Satellite images have been widely used to map forest resources owing to their efficiency and increasing availability [3].

With more accurate and richer spatial and textural information, high spatial resolution (HSR) remote sensing images can be used to extract more specific information on forest types [4]. In recent years, a growing number of studies have addressed this topic [5,6]. Important milestones have been achieved, but limitations remain [7,8]. A key limitation is that such images have only a few spectral bands, so the statistical separability of classes in the spectral domain is poor [9]. As a result, in forests with complex structures and many tree species, the phenomena of "same object, different spectra" and "different objects, same spectrum" make it seriously difficult to extract relevant information. This calls for more advanced forest information extraction methods.

Deep learning models are deep artificial neural networks that have attracted substantial attention in recent years [10]. They have been successfully applied to land cover classification because they can adaptively learn discriminative features from images through supervised learning and can extract and integrate multi-scale, multi-level remote sensing features [11,12,13,14]. Compared with traditional machine learning methods, these models can significantly improve the classification accuracy of land cover types, especially in areas with complex land cover [15,16,17].

With the ongoing development of artificial intelligence technologies, several efficient deep-learning-based optimization models for land cover classification have been proposed [18]. Several recent studies report that fusing individual deep-learning classifiers, such as convolutional neural networks (CNNs), into a multi-classifier system can improve classification capacity beyond that of each individual classifier [19,20,21]. Other studies have shown that a CNN with a two-branch structure can extract the panchromatic and multispectral information in remote sensing images separately, yielding better classification than single-branch structures [22]. In addition, related research indicates that combining a CNN with traditional image analysis techniques such as conditional random fields (CRF) [23] further improves classification accuracy [24].
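For reference, the fully connected (dense) CRF that is commonly paired with CNNs, and whose inference CRFasRNN later unrolls, defines an energy over the label assignment x of all pixels and is minimized approximately by mean-field updates of per-pixel label distributions Q_i. The form below follows the standard dense-CRF formulation with a Potts compatibility μ(l, l') = [l ≠ l'], pixel positions p and spectral vectors I; whether [23] and [24] use exactly these two Gaussian kernels is an assumption.

```latex
\begin{aligned}
E(\mathbf{x}) &= \sum_{i} \psi_u(x_i) + \sum_{i<j} \psi_p(x_i, x_j), \qquad
\psi_p(x_i, x_j) = \mu(x_i, x_j) \sum_{m=1}^{2} w^{(m)} k^{(m)}(\mathbf{f}_i, \mathbf{f}_j), \\
k^{(1)}(\mathbf{f}_i, \mathbf{f}_j) &= \exp\!\left(-\frac{\lVert \mathbf{p}_i - \mathbf{p}_j \rVert^2}{2\theta_\alpha^2}
  - \frac{\lVert \mathbf{I}_i - \mathbf{I}_j \rVert^2}{2\theta_\beta^2}\right), \qquad
k^{(2)}(\mathbf{f}_i, \mathbf{f}_j) = \exp\!\left(-\frac{\lVert \mathbf{p}_i - \mathbf{p}_j \rVert^2}{2\theta_\gamma^2}\right), \\
Q_i(l) &= \frac{1}{Z_i} \exp\!\Big(-\psi_u(x_i = l) - \sum_{l'} \mu(l, l') \sum_{m=1}^{2} w^{(m)} \sum_{j \neq i} k^{(m)}(\mathbf{f}_i, \mathbf{f}_j)\, Q_j(l')\Big).
\end{aligned}
```

Here ψ_u is the negative of the CNN score for each label, k^(1) is the appearance (bilateral) kernel, k^(2) is the smoothness kernel, and Z_i normalizes Q_i over labels.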

In recent years, to explore how effectively deep-learning models can map forest types at the tree species level, some scholars have applied advanced deep-learning classification methods to HSR satellite images. Guo (2020) proposed a two-branch fully convolutional network (FCN8s) method [25] and successfully extracted the distribution of forest types at the dominant tree species level in the Wangyedian forest farm of Chifeng City [26] using China's GF-2 data. The study revealed that the deep features extracted by the two branches of the FCN8s were diverse to some extent and could enrich the model's input data sources, making the approach a simple and effective optimization method. Compared with traditional machine learning algorithms such as the support vector machine, it also significantly improved the overall classification accuracy (OA). However, the classification accuracy of some forest types or dominant tree species in the study area, such as White birch (Betula platyphylla) and Aspen (Populus davidiana), needs further improvement.

Relevant studies have shown that when classifying forest types with FCN8s, applying CRF as an independent post-processing step can further improve classification accuracy [27,28]. However, although CRF post-processing applied independently of FCN8s training improves the results to some extent, such a decoupled structure cannot fully exploit the inference capability of the CRF: because the CRF runs separately from the training of the deep neural network, its parameters cannot be updated along with the network weights during training. To address this limitation, Zheng (2015) proposed the conditional random fields as recurrent neural networks (CRFasRNN) model, in which CRF inference is formulated as a recurrent neural network (RNN) and combined with the CNN. Training the deep neural network and running the CRF post-processing can then be carried out end to end. This fully combines the advantages of the CNN and CRF models, and the CRF parameters are optimized jointly during network training, resulting in significant improvements in classification accuracy [29].
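To make the "CRF inference as an RNN" idea concrete, here is a minimal pure-Python sketch of the parallel mean-field iteration that CRFasRNN unrolls, one iteration per RNN step. It is an illustration under stated assumptions, not the authors' implementation: it assumes a Potts compatibility model, an appearance kernel plus a smoothness kernel with fixed (not learned) weights and bandwidths, and brute-force O(N²) message passing instead of the permutohedral-lattice filtering used in practice. All names (`mean_field_crf`, `w_app`, `theta_alpha`, ...) are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of label scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gaussian(sq_dist, theta):
    return math.exp(-sq_dist / (2.0 * theta ** 2))


def mean_field_crf(unary, positions, colours, w_app=1.0, w_smooth=1.0,
                   theta_alpha=1.0, theta_beta=1.0, theta_gamma=1.0, n_iter=5):
    """Parallel mean-field inference for a fully connected CRF (Potts model).

    unary[i][l]: CNN score (logit) of label l at pixel i; higher = more likely.
    positions[i]: (row, col); colours[i]: tuple of band values.
    Kernel weights/bandwidths are fixed constants here (hypothetical values);
    in CRFasRNN they are trainable parameters.
    Returns q, the per-pixel label distributions after n_iter iterations.
    """
    n, n_labels = len(unary), len(unary[0])
    # Precompute both pairwise kernels for every pixel pair (toy image sizes only).
    k_app = [[0.0] * n for _ in range(n)]
    k_smooth = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dp = sum((a - b) ** 2 for a, b in zip(positions[i], positions[j]))
            dc = sum((a - b) ** 2 for a, b in zip(colours[i], colours[j]))
            k_app[i][j] = gaussian(dp, theta_alpha) * gaussian(dc, theta_beta)
            k_smooth[i][j] = gaussian(dp, theta_gamma)

    q = [softmax(u) for u in unary]  # initialise from the unary scores
    for _ in range(n_iter):
        new_q = []
        for i in range(n):
            # Message passing through both kernels, then weighting of their outputs.
            msg = [sum((w_app * k_app[i][j] + w_smooth * k_smooth[i][j]) * q[j][l]
                       for j in range(n)) for l in range(n_labels)]
            total = sum(msg)
            # Potts compatibility: penalise the mass neighbours give to every other label.
            pairwise = [total - msg[l] for l in range(n_labels)]
            # Add the unary scores and renormalise.
            new_q.append(softmax([unary[i][l] - pairwise[l] for l in range(n_labels)]))
        q = new_q  # synchronous update: one unrolled "RNN step"
    return q


# Toy strip of three spectrally identical pixels; the middle one has a weak,
# noisy preference for label 1 while both neighbours strongly favour label 0.
q = mean_field_crf([[3.0, 0.0], [0.0, 0.5], [3.0, 0.0]],
                   [(0, 0), (0, 1), (0, 2)], [(0.5,), (0.5,), (0.5,)])
labels = [max(range(2), key=lambda l: p[l]) for p in q]
print(labels)  # [0, 0, 0]
```

The middle pixel is relabelled to agree with its similar neighbours, which is the smoothing effect CRF post-processing provides. Because every operation in the loop is differentiable, unrolling it inside a network lets the kernel weights and compatibility values be learned together with the CNN, which is the property the end-to-end design relies on.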

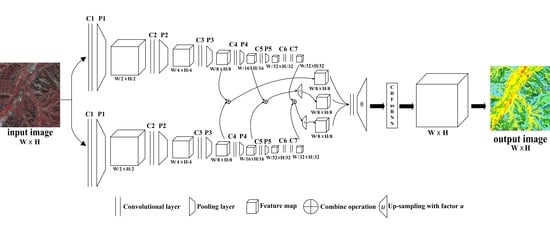

In this paper, we propose a novel end-to-end deep learning fusion method for mapping forest types at the tree species level from HSR remote sensing data. The proposed model builds on the previously published two-branch FCN8s method and embeds a CRFasRNN layer into the network as the post-processing step; we refer to it as dual-FCN8s-CRFasRNN.

The main contributions of this paper are listed as follows:

An end-to-end deep fusion network, dual-FCN8s-CRFasRNN, was constructed to optimize forest classification at the tree species level automatically. It combines the advantages of multi-modality representations with a post-processing step that recovers a high-resolution feature representation and improves pixel-wise mapping accuracy.

A CRFasRNN module was designed and inserted into the network so that the post-processing step is optimized together with the rest of the model, improving forest classification at the dominant tree species level in an end-to-end, automated way.

The remainder of the paper is structured as follows. Section 2 presents the materials and methods in detail. Section 3 gives the results. Section 4 discusses the feasibility of the optimized model. Finally, Section 5 concludes the paper.

4. Discussion

The study showed that deep learning fusion models have great potential for classifying forest types and tree species. The proposed dual-FCN8s-CRFasRNN method proved applicable to forest type classification at the tree species level from HSR remote sensing imagery. The experimental results showed that the model could extract the dominant tree species or forest types that were widely distributed in the two study areas, especially plantation species such as Chinese pine, Larix principis, and Eucalyptus, all of which had a classification accuracy above 90%. However, the classification accuracy was poor for the other forest land types in the Wangyedian forest farm and the "other" category in the GaoFeng forest farm, possibly because these two categories include many kinds of surface features whose spectral characteristics are complex and difficult to distinguish.

A comparison of the results from the two experimental areas shows that classification accuracy was relatively lower in the GaoFeng forest farm in Guangxi than in the Wangyedian forest farm in North China. The producer's accuracy (PA) of Masson pine, Star anise, and Miscellaneous wood was below 70%. There are two likely reasons. First, the spectra of these forest land types are similar, which inevitably makes them harder to distinguish. Second, fewer samples were collected for these types than for Eucalyptus, which leads to under-fitting of the model for these classes.
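The accuracy measures used throughout this discussion (OA, per-class PA, and the Kappa coefficient) all derive from a confusion matrix. The sketch below shows the standard computations; it assumes rows hold reference (ground-truth) classes and columns hold predicted classes, and the example matrix is hypothetical, not data from this study.

```python
def accuracy_report(cm):
    """Standard accuracy measures from a square confusion matrix.

    Assumed convention: cm[i][j] = number of reference samples of class i
    that the classifier labelled as class j.
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(row) for row in cm]                             # reference totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # predicted totals
    diag = [cm[i][i] for i in range(k)]

    def ratio(a, b):
        return a / b if b else float("nan")  # undefined for an empty class

    oa = sum(diag) / n                                       # overall accuracy
    pa = [ratio(diag[i], row_totals[i]) for i in range(k)]   # producer's accuracy (1 - omission error)
    ua = [ratio(diag[j], col_totals[j]) for j in range(k)]   # user's accuracy (1 - commission error)
    pe = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2  # chance agreement
    kappa = (oa - pe) / (1 - pe)
    return {"oa": oa, "pa": pa, "ua": ua, "kappa": kappa}


# Hypothetical three-class matrix for illustration only.
cm = [[50, 5, 5],
      [10, 30, 0],
      [5, 5, 40]]
r = accuracy_report(cm)
print(round(r["oa"], 4), [round(p, 4) for p in r["pa"]], round(r["kappa"], 4))
# 0.8 [0.8333, 0.75, 0.8] 0.6949
```

PA is computed per reference class, so a class with few reference samples (as with the minority forest types above) can have a low PA even when OA is high.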

To verify the effectiveness of the proposed method and quantify the gain from embedding the CRFasRNN layer into the previously published model, we compared the dual-FCN8s-CRFasRNN model not only with that earlier deep learning model but also with the earlier model followed by CRF post-processing. In both experimental areas, adding the CRFasRNN layer gave the best classification results of the three methods. The improvement was most evident for categories with a small distribution range.

To further test whether embedding a CRFasRNN layer into a deep learning model is a general and effective optimization method, this study also embedded the CRFasRNN layer in the classic FCN8s model. The model with the CRFasRNN layer achieved better classification accuracy than both the classic FCN8s model and the previously published two-branch FCN8s model. In terms of processing efficiency, it also required less processing time than the CRF post-processing method.

With the NDVI index included and a fine-tuning strategy applied, the proposed method outperformed variants without the NDVI feature and fine-tuning strategy in terms of OA and the Kappa coefficient. To further clarify the contribution of NDVI, this study replaced NDVI with the green NDVI (GNDVI) [47] in both experimental areas, as shown in Table 10 and Table 11. With GNDVI, the dual-FCN8s-CRFasRNN model achieved an OA of 89.47% and 73.70% and a Kappa coefficient of 0.8804 and 0.6898 for the Wangyedian and GaoFeng forest farms, respectively, very similar to the model using NDVI, with a difference of less than 1%. At the level of individual categories, GNDVI improved the classification of broad-leaved mixed forest, such as the other forest types in the Wangyedian forest farm and the Miscellaneous category in the GaoFeng forest farm.
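Both indices are normalized differences between the near-infrared band and a visible band: NDVI = (NIR − Red) / (NIR + Red) and GNDVI = (NIR − Green) / (NIR + Green). The sketch below computes them per pixel and appends the chosen index as an extra input channel. The band order (blue, green, red, NIR, as in GF-2 multispectral imagery) and the idea of appending the index as a fifth channel are assumptions for illustration; the helper names are hypothetical.

```python
def normalized_difference(a, b, eps=1e-12):
    """(a - b) / (a + b); returns 0.0 where both bands are ~0 (e.g. no-data pixels)."""
    denom = a + b
    return 0.0 if abs(denom) < eps else (a - b) / denom


def ndvi(nir, red):
    return normalized_difference(nir, red)


def gndvi(nir, green):
    return normalized_difference(nir, green)


def add_index_band(pixels, index="ndvi"):
    """Append NDVI or GNDVI to each pixel.

    pixels: list of (blue, green, red, nir) reflectance tuples (assumed band order).
    """
    if index not in ("ndvi", "gndvi"):
        raise ValueError("index must be 'ndvi' or 'gndvi'")
    out = []
    for blue, green, red, nir in pixels:
        value = ndvi(nir, red) if index == "ndvi" else gndvi(nir, green)
        out.append((blue, green, red, nir, value))
    return out


# A single illustrative vegetation pixel (made-up reflectances).
pixel = [(0.04, 0.08, 0.05, 0.40)]
print(add_index_band(pixel, "ndvi")[0][4])   # about 0.778 = 0.35 / 0.45
print(add_index_band(pixel, "gndvi")[0][4])  # about 0.667 = 0.32 / 0.48
```

Since the two indices differ only in which visible band is paired with NIR, similar overall accuracies for the NDVI and GNDVI models are plausible, while the green band may respond differently for some broad-leaved classes.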

Compared with previous studies on the fine classification of forest types using HSR data, the method proposed in this study performed better. Immitzer et al. (2012) classified tree species with random forest using WorldView-2 satellite data, and the overall accuracy for 10 tree species was around 82% [48]. Adelabu et al. (2015) employed ground and satellite-based QuickBird data and random forest to classify five tree species in a Southern African woodland, with an OA of 86.25% [49]. Waser et al. (2014) evaluated the potential of WorldView-2 data for tree species classification using object-based supervised classification, with an OA of 83% [50]. Cho et al. (2015) used WorldView-2 data to map tree species and canopy gaps in South Africa, with an OA of 89.3% [51]. Sun et al. (2019) optimized three deep learning methods for tree species classification; VGG16 performed best, with an overall accuracy of 73.25% [52]. Cao (2020) proposed an improved Res-UNet model for tree species classification in the GaoFeng forest farm based on airborne charge-coupled device (CCD) orthophotos. That method achieved an OA of 87%, higher than in this study; however, its experimental area was smaller, and its accuracy for Eucalyptus (88.37%) was lower than ours [53]. Xie (2019) classified tree species, forest types, and land cover types in the Wangyedian forest farm, the same test area used in our research, based on multi-temporal ZY-3 data [54]. Its OA (84.9%) was much lower than ours, but its accuracy for broad-leaved species such as White birch and Aspen (approximately 85%) was slightly higher, possibly because multi-temporal data were used. Other studies have also demonstrated the benefit of multi-temporal data for the fine classification of forest types. For example, Ren et al. achieved an accuracy of up to 92% for fine forest type classification based on multi-temporal SPOT-5 and China GF-1 data [55], and Agata (2019) classified tree species over a large area based on multi-temporal Sentinel-2 data and a DEM, with a classification accuracy of 94.8% [56]. Based on these results, we plan to combine multi-temporal satellite data with deep learning for fine forest type classification as the next step of this research. In addition, the proposed method needs to be assessed in other forest areas to evaluate the effects of different forest structures and tree species.

5. Conclusions

To optimize forest mapping at the tree species level in an automated way, this study developed a novel end-to-end deep learning fusion method (dual-FCN8s-CRFasRNN) for HSR remote sensing images that combines the advantages of multi-modality representations with a post-processing step, and verified its applicability in two areas located in northern and southern China, respectively. With overall accuracies of 90.1% and 74.39% for the two test areas, respectively, the results demonstrate the high potential of the model for forest mapping at the tree species level. The model performed particularly well for some plantation tree species, such as Chinese pine and Larix principis in the northern test area and Eucalyptus in the southern test area. Embedding the CRFasRNN post-processing module effectively improved the classification result, especially for tree species with a small distribution range. Considering both classification accuracy and processing time, embedding the CRFasRNN layer in the deep learning model not only performed the post-processing automatically in an end-to-end manner but also improved classification and reduced processing time.

Given the importance of mapping forest resources, the proposed dual-FCN8s-CRFasRNN model offers a feasible optimization approach for mapping forest types at the tree species level from HSR images, and can contribute to the management and sustainable development of forest resources in China.

In future work, we will further exploit the potential of deep learning with multi-temporal data and investigate ways to build the model from a limited number of training samples for tree-species-level forest type classification from high-spatial-resolution images.